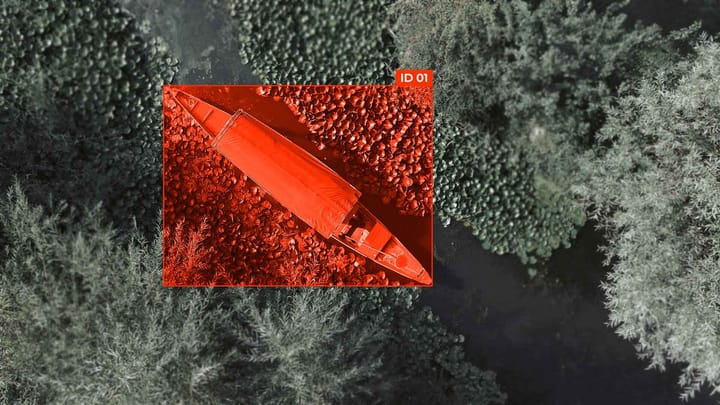

Bird's Eye View (BEV) Annotation: Converting Camera to Top-Down Representation

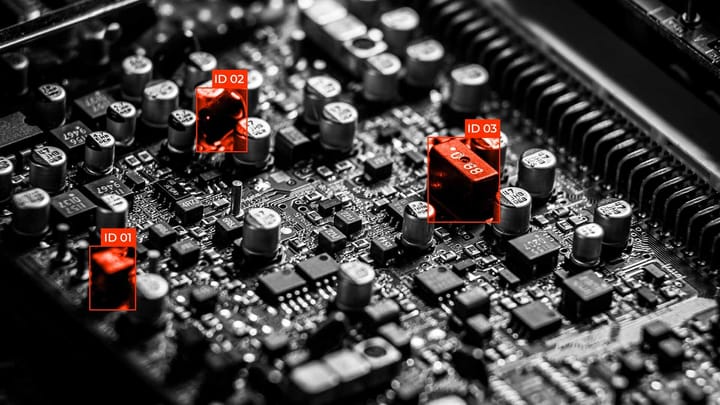

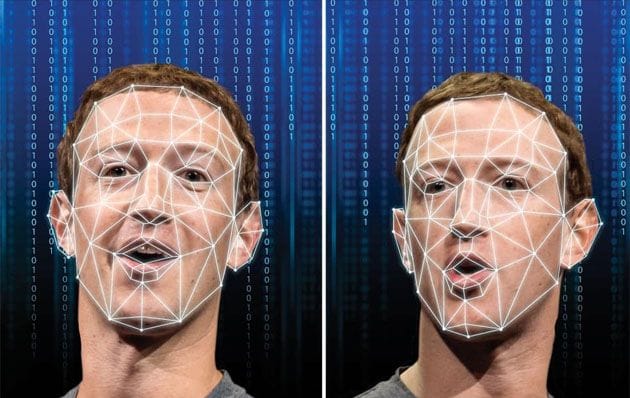

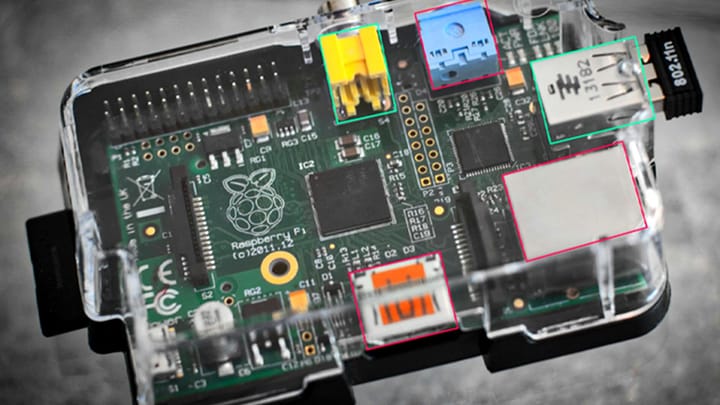

In modern computer vision and autonomous vehicle control systems, accurate object recognition and localization are essential. Conventional cameras produce perspective images, which restrict the field of view and distort the spatial relationships between objects. To overcome these limitations, the Bird’s Eye View (BEV) annotation approach is used, converting the camera