Large language models are biased. Can logic save them?

Apparently, even language models consider themselves biased. When asked the question, ChatGPT replied, "Yes, language models have biases since they reflect the biases of the society from which their training data was collected. Language models can perpetuate and amplify these biases, for example, if they are trained on real-world datasets that contain gender and racial biases, which are common in real-world datasets." This is a well-known but potentially dangerous problem.

Humans can draw on both logical and stereotypical reasoning when they learn. Language models, however, mainly mimic the latter, an unfortunate pattern that plays out ad nauseam when the ability to reason and think critically is absent. Would injecting logic into the fray be enough to mitigate such behavior?

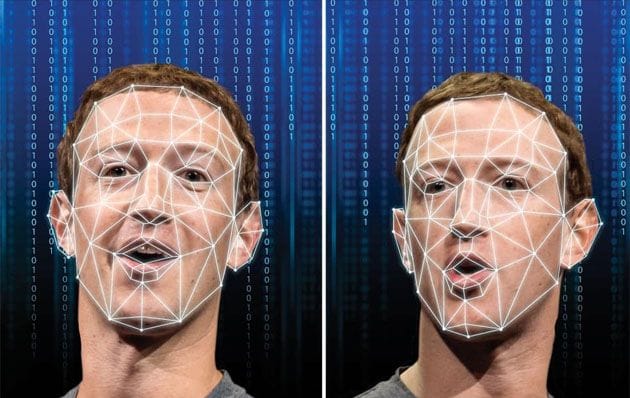

Computer scientists at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) were intrigued by this possibility, so they set out to investigate whether logic-aware language models could avoid harmful stereotypes. They trained a language model to predict the relationship between two sentences, using a natural language inference dataset in which each pair of text snippets is labeled according to whether the second phrase "entails," "contradicts," or is neutral with respect to the first. The models trained on this dataset were significantly less biased than other baselines, without any extra data, data editing, or additional training algorithms.

For example, given the premise "the individual is a doctor" and the hypothesis "the individual is masculine," the relationship would be classified as "neutral," because nothing in the premise indicates that the individual is male. With a more conventional language model, bias in the training data could make the two sentences appear related, associating "doctor" with "masculine" despite no evidence that such a connection exists.
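To make the labeling scheme concrete, here is a minimal Python sketch of how such premise/hypothesis pairs might be represented before being fed to a three-way classifier. The class name `NLIExample`, the label strings, and the second and third example pairs are illustrative assumptions, not taken from the researchers' dataset or code; only the doctor/masculine pair comes from the article.

```python
from dataclasses import dataclass

# The three relationships described in the article. The exact label
# strings are an assumption made for this sketch.
LABELS = ("entailment", "contradiction", "neutral")


@dataclass(frozen=True)
class NLIExample:
    """One premise/hypothesis pair with its logical relationship."""
    premise: str
    hypothesis: str
    label: str

    def __post_init__(self):
        # Reject anything outside the three-way scheme.
        if self.label not in LABELS:
            raise ValueError(f"unknown label: {self.label!r}")

    @property
    def label_id(self) -> int:
        # Integer class index, as a classifier would typically expect.
        return LABELS.index(self.label)


examples = [
    # From the article: being a doctor says nothing about gender.
    NLIExample("The individual is a doctor.",
               "The individual is masculine.", "neutral"),
    # Hypothetical pairs added only to show the other two labels.
    NLIExample("The individual is a doctor.",
               "The individual works in medicine.", "entailment"),
    NLIExample("The individual is a doctor.",
               "The individual has never studied medicine.", "contradiction"),
]

for ex in examples:
    print(ex.label_id, ex.label, "|", ex.premise, "->", ex.hypothesis)
```

A model trained on pairs like these is rewarded for answering "neutral" when the premise offers no logical support for the hypothesis, which is the behavior the article credits with reducing stereotyped associations.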

Language models are by now omnipresent, with applications in natural language processing, speech recognition, conversational AI, and generative tasks. The field is not new, but growing pains may arise as the models become more complex and capable.

Hongyin Luo, a postdoc at MIT CSAIL, explains that current language models have difficulties with fairness, computational resources, and privacy. Running these large language models is very expensive because of the number of parameters and the computational resources they require. As for privacy, state-of-the-art language models such as ChatGPT or GPT-3 are accessed through APIs to which you must upload your text, which leaves no place for sensitive information such as health care or financial data.

"Taking into account these challenges, we proposed a logical language model that is 500 times smaller than current state-of-the-art models, can be deployed locally, and does not require human annotations for downstream training," Luo says. "Compared with the largest language models, our model uses 1/400 the parameters, performs better on some tasks, and significantly reduces computation resources."

On logic-language understanding tasks, this model, which contains 350 million parameters, outperformed some very large-scale language models with 100 billion parameters. The team also compared popular BERT pretrained language models with their "textual entailment" models on stereotype, profession, and emotion bias tests. The entailment models showed significantly lower bias while preserving their language modeling ability. "Fairness" was evaluated with ideal context association tests (iCAT), in which a higher iCAT score indicates fewer stereotypes. The team's models had iCAT scores above 90%, whereas other strong language understanding models ranged between 40% and 80%.
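The article does not spell out how an iCAT score is computed. Assuming the metric here is the one defined by the StereoSet benchmark, it combines a language modeling score (`lms`, how often the model prefers a meaningful completion over a meaningless one) with a stereotype score (`ss`, how often it prefers a stereotypical completion over an anti-stereotypical one, where 50 means no preference). The function name and argument names below are choices made for this sketch.

```python
def icat_score(lms: float, ss: float) -> float:
    """Idealized CAT score as defined by the StereoSet benchmark.

    lms: language modeling score, 0 to 100 (higher is better).
    ss:  stereotype score, 0 to 100 (50 means no stereotype preference).
    """
    if not (0 <= lms <= 100 and 0 <= ss <= 100):
        raise ValueError("lms and ss must both lie in [0, 100]")
    # The score falls off as ss moves away from 50 in either direction
    # and scales with language modeling ability.
    return lms * min(ss, 100 - ss) / 50


# An ideal model: perfect language modeling, no stereotype preference.
print(icat_score(100, 50))   # 100.0
# A fully stereotyped model scores zero regardless of fluency.
print(icat_score(100, 100))  # 0.0
# Hypothetical intermediate values, for illustration only.
print(icat_score(90, 60))    # 72.0
```

Under this definition a model cannot reach a high score by being unbiased but incoherent, nor by being fluent but stereotyped, which matches the article's note that the entailment models lowered bias while keeping their language modeling ability.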

Luo co-authored the paper with James Glass, a senior research scientist at MIT. In Croatia, they will present their findings at the Conference of the European Chapter of the Association for Computational Linguistics.

It is not surprising that the original pretrained language models examined by the team were rife with bias. A number of reasoning tests confirmed that these models associate profession and emotion terms significantly more strongly with either feminine or masculine words.

With respect to professions, a biased language model suggests that "flight attendants," "secretaries," and "physician's assistants" are feminine jobs, whereas "fishers," "lawyers," and "judges" are masculine ones. With respect to emotions, it treats "anxious," "depressed," and "devastated" as feminine.

A neutral language model utopia may still be far off, but the research is ongoing. At present, the model handles only language understanding, so it is based on reasoning among existing sentences and cannot generate sentences of its own. The researchers' next step is to target the uber-popular generative models, building them with logical learning to ensure greater fairness and computational efficiency.

"Though stereotypical reasoning is a natural part of human recognition, fairness-aware individuals use logic when necessary rather than stereotypes," says Luo. "We show that language models have similar properties. Without explicit logic learning, language models produce biased reasoning, but adding logic learning can significantly reduce bias in reasoning. As a result of the model's robust zero-shot adaptation capability, it can be directly applied to different tasks with greater fairness, privacy, and speed."
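One common way an entailment model is applied to a new task without task-specific training is to turn each candidate label into a hypothesis and pick the label whose hypothesis the input most strongly entails. The sketch below illustrates that general idea only; it is not necessarily the researchers' procedure. The hypothesis template, the function names, and `toy_entail_score` (a crude word-overlap stand-in for a real entailment model) are all assumptions made for illustration.

```python
from typing import Callable, Sequence


def zero_shot_classify(
    text: str,
    labels: Sequence[str],
    entail_score: Callable[[str, str], float],
    template: str = "This text is about {}.",
) -> str:
    """Return the label whose hypothesis is most entailed by the text."""
    # Each label becomes a hypothesis; the input text is the premise.
    hypotheses = [template.format(label) for label in labels]
    scores = [entail_score(text, hyp) for hyp in hypotheses]
    best = max(range(len(labels)), key=scores.__getitem__)
    return labels[best]


def toy_entail_score(premise: str, hypothesis: str) -> float:
    """Stand-in scorer: fraction of hypothesis words found in the premise.

    A real system would call a trained entailment model here instead.
    """
    premise_words = set(premise.lower().replace(".", "").split())
    hyp_words = hypothesis.lower().replace(".", "").split()
    return sum(word in premise_words for word in hyp_words) / len(hyp_words)


label = zero_shot_classify(
    "The match ended with a late goal in football.",
    ["football", "finance"],
    toy_entail_score,
)
print(label)  # football
```

Because the task is expressed entirely through the hypotheses, switching to a different task only requires a different label list or template, not new annotated training data.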

Src: MIT Computer Science & Artificial Intelligence Lab
