The Different Features of Data Annotation Tools and What To Consider

Data annotation tools are no exception to the rapid advance of technology. You will often see upgrades and new features monthly, sometimes on a week-by-week basis. To help navigate this field, we’ve identified the top features you should be looking for when shopping for data annotation tools. The first step is to pinpoint the problem that you want to solve.

Getting high-quality predictive solutions or pattern recognition depends on the quality of the annotated data. So, to obtain high-quality data, you need to identify the specific problem that needs solving.

When approaching data annotation tools, you can start with two baseline options: buy a tool commercially or build your own. Building your own can be appealing as you can make exactly what you need for your specific problem. However, data annotation is not a simple task, and realizing in the middle of a project that you don’t have the right tool built can be a huge waste of time and resources.

Therefore, when looking to buy the right tool, you’ll want to consider these important features: Annotation Methods, Data Quality Control, Dataset Management, and Security.

Dataset Management: How To Start Your Project Off Right

Always begin with the basics. Taking care of your dataset(s) is not the most exciting part of ML-based AI systems, but it is the building block on which every project is based. You’ll need a tool that can import and handle the amount of data that is going to be coming in. Once annotated, the data will also have to be stored somewhere. Different tools output data in different ways; make sure that the output type fits the output requirements of your project.

All of this must be carefully planned at the beginning of your project, as lost or corrupted data can sink a project quickly. You might also reach the end and realize that the tool’s output doesn’t match your requirements, leaving you floundering to use all the data that you spent time annotating.
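One way to catch an output mismatch early is to run a small check on a trial export before committing to a tool. The sketch below is only an illustration: it assumes the tool exports a JSON list of records, and the field names (`image_id`, `label`, `bbox`) are hypothetical stand-ins for whatever your project actually requires.

```python
import json

# Hypothetical fields a downstream pipeline might require; adjust to your project.
REQUIRED_FIELDS = {"image_id", "label", "bbox"}


def find_incomplete_records(export_json, required=REQUIRED_FIELDS):
    """Return (index, missing_fields) for every record lacking a required field."""
    records = json.loads(export_json)
    problems = []
    for index, record in enumerate(records):
        missing = sorted(required - set(record))
        if missing:
            problems.append((index, missing))
    return problems


# A made-up trial export: the second record has no bounding box.
sample_export = json.dumps([
    {"image_id": "img_001", "label": "car", "bbox": [10, 20, 50, 80]},
    {"image_id": "img_002", "label": "truck"},
])

print(find_incomplete_records(sample_export))
```

Running a check like this on a handful of exported records at the start of a project is much cheaper than discovering the gap after annotation is finished.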

Choosing The Right Data Annotation Methods For Your Project

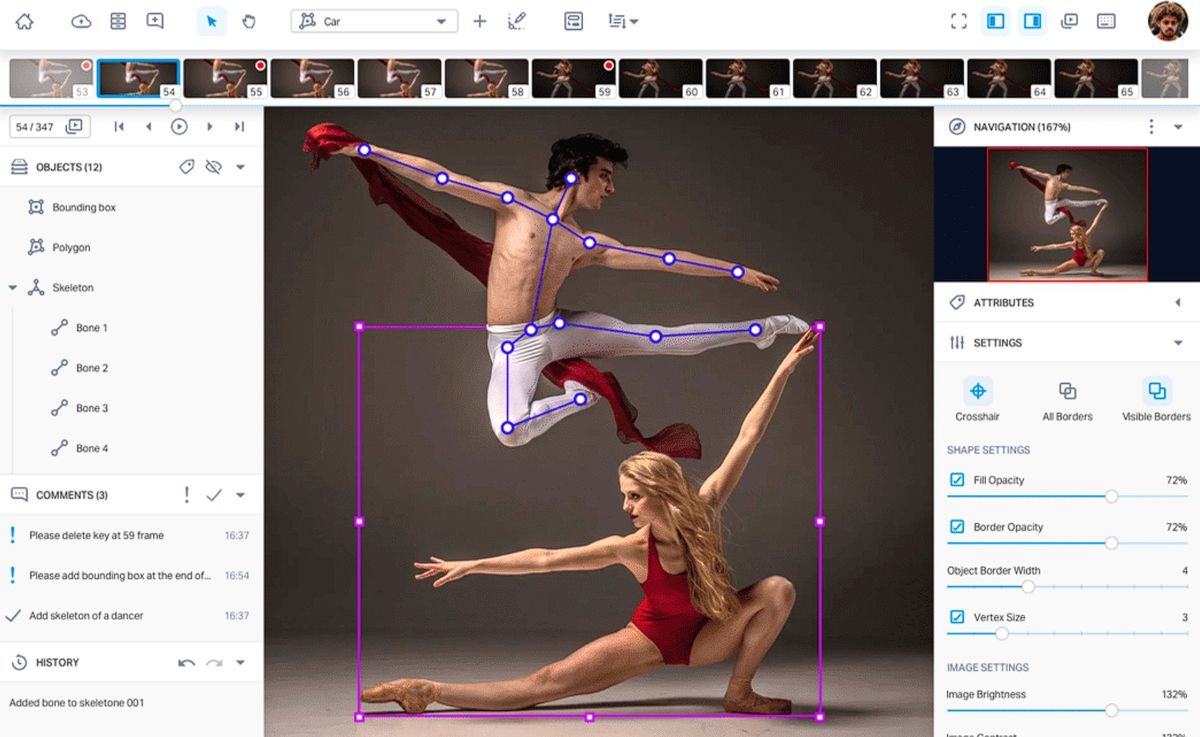

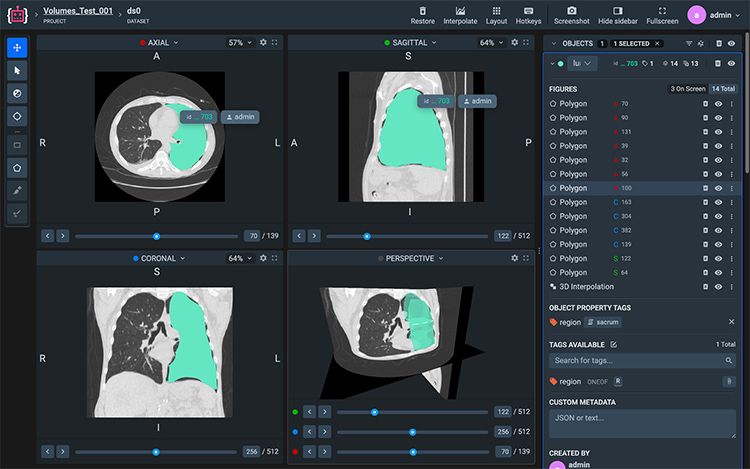

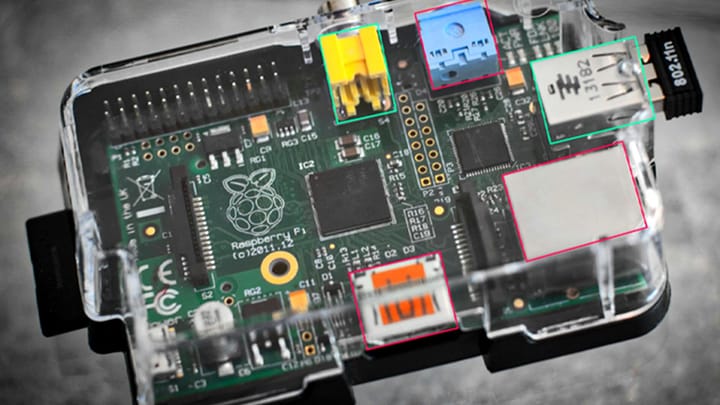

There are three main types of data to be annotated: Image/Video, Text, and Audio. At the core of every project is raw data, and you need a data annotation tool to sift through all of it and find what is relevant. The annotation methods a tool supports are therefore the most crucial feature to evaluate.

Certain tools specialize in certain types of data, however. It’s vital to make sure that the one you purchase (or build) is providing you with the right type of annotated data.

To get the right tool, consider what type of project you are working on and what type of data you will be using. Many projects use multiple types of collected data, and for these, a more general tool would be better. For projects focused on a single type of data, a specialized data annotation tool will give the most accurate results.

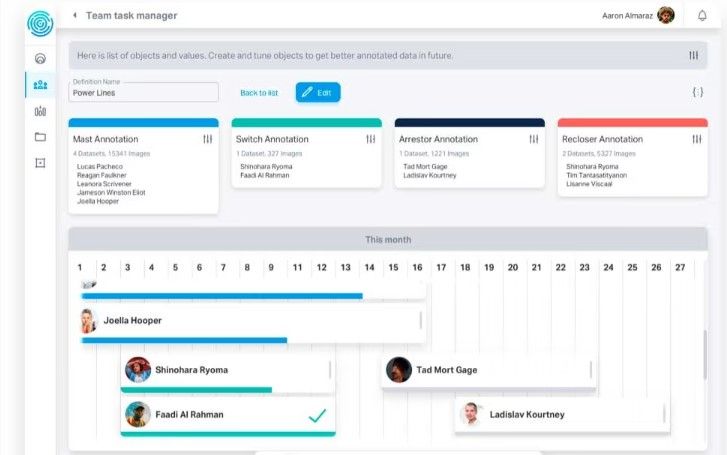

Quality Control: How To Check And Double Check Your Annotated Data

Once you have the data that you need annotated, it’s time to make sure that the data is good. Many data annotation tools will have quality control measures in place. However, there are a few factors to consider. To begin, you must consider who will be using the tool. Will your workforce need access to it? How long will training take? These factors matter because they may add time to the project’s length.

Sample review is another important task that should be available from any data annotation tool. Here, the tool reviews a sample of the completed annotations to find potential errors. This is important because it may reveal a pattern of error.

Some tools also offer automation, in which the AI begins to label data based on patterns it identifies from previously annotated data. Even so, data annotation tools should include checks by experts who can comb over the data for inconsistencies and mislabeled items.

Even a small percentage of error can snowball into a disastrous effect when working with large amounts of data. Consider a 1% error situation. If you are working with 100 images, then that’s 1 error. However, if you are working with 10,000 images, then that is 100 errors. Having a protective buffer in the form of human checkpoints is crucial for any project and should be part of any data annotation tool.
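The arithmetic above, and the idea of reviewing a sample, can be sketched in a few lines of Python. This is a minimal illustration and not how any particular tool works: the function names, the `label`/`truth` record layout, and the reviewer check are all assumptions made for the example.

```python
import random


def expected_errors(total_items, error_rate):
    """Number of mislabeled items expected at a given error rate."""
    return round(total_items * error_rate)


def review_sample(items, is_correct, sample_size, seed=0):
    """Estimate the error rate by checking a random sample of annotated items."""
    rng = random.Random(seed)  # fixed seed so the same sample can be re-reviewed
    sample = rng.sample(items, sample_size)
    wrong = sum(1 for item in sample if not is_correct(item))
    return wrong / sample_size


print(expected_errors(100, 0.01))     # 1
print(expected_errors(10_000, 0.01))  # 100

# Made-up annotations: 200 items, 2 of which disagree with the reviewer's answer.
annotations = [{"label": "cat", "truth": "cat"} for _ in range(198)]
annotations += [{"label": "dog", "truth": "cat"} for _ in range(2)]


def reviewer_agrees(item):
    # Stand-in for a human expert confirming the label.
    return item["label"] == item["truth"]


# Reviewing a quarter of the items gives an estimate of the overall error rate.
print(review_sample(annotations, reviewer_agrees, sample_size=50))
```

A random sample only estimates the true error rate, so the estimate varies from sample to sample; larger samples narrow that variation at the cost of more reviewer time.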

Security: Protecting Your Project and Your Data

Data privacy has been an important issue in recent years. No matter what data you are using, it’s crucial to protect the integrity of your information. If your project is subject to specific regulations or compliance standards, then you have to get a data annotation tool compatible with them. CCPA, SSAE 16, PCI DSS, and HIPAA are common examples.

Features that you should be looking for are data encryption, a backup/recovery system, and safeguards against unauthorized access. Data should only be accessible to a limited number of people, and providing a secure but still easy-to-navigate interface is incredibly important.

It’s also important to know how the tool is deployed, whether on-premises at the data annotation service site or online via the cloud. This matters when considering how the data will be transported and whether the selected tool has infrastructure you feel comfortable with.

Choosing a data annotation tool is the first big step toward completing any project. Finding one that gets the job done, is compatible with your expectations, and provides the technical structure that you need can feel difficult.
