Synthetic video generation for AI training

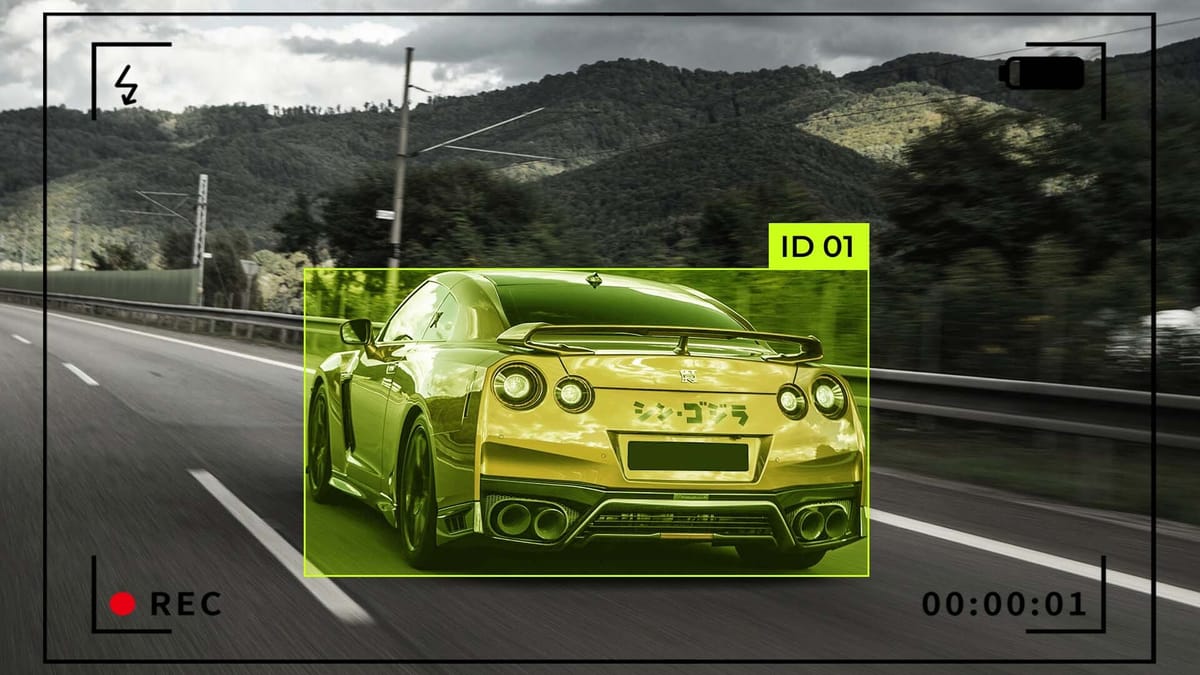

Creating realistic video data for AI training has moved from a niche experiment to a core tool in many development pipelines. In a synthetic pipeline, every element, including lighting, camera angles, object placement, and even subtle motion patterns, can be carefully controlled, giving AI models exposure to a wide variety of situations without the usual logistical headaches of filming.

Variations in weather, traffic density, or unusual object behavior can be introduced systematically, helping models learn to generalize rather than memorize. While these synthetic sequences don't completely replace real-world footage, they complement it in ways that accelerate training and improve reliability. Often, the most effective approach blends both synthetic and real videos, offering a balance between coverage, realism, and safety, while also opening opportunities to explore edge cases that would otherwise be inaccessible.
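One way to make "introduced systematically" concrete is a full factorial sweep: list the values for each variation axis and generate one scenario specification per combination, so every pairing of weather, traffic density, and anomaly gets covered exactly once. The sketch below is a minimal illustration under stated assumptions. The axis values and the `ScenarioSpec` / `build_scenario_grid` names are hypothetical, and a real pipeline would hand each spec to its own renderer or generator.

```python
from dataclasses import dataclass
from itertools import product
from typing import Optional

# Hypothetical variation axes; real pipelines define their own.
WEATHER = ["clear", "rain", "fog", "snow"]
TRAFFIC_DENSITY = ["low", "medium", "high"]
ANOMALY = [None, "jaywalker", "debris_on_road"]  # None means "no unusual behavior"


@dataclass(frozen=True)
class ScenarioSpec:
    """Hypothetical description of one scene to be rendered or generated."""
    weather: str
    traffic_density: str
    anomaly: Optional[str]


def build_scenario_grid() -> list[ScenarioSpec]:
    """Enumerate every combination of the axes exactly once (a full factorial sweep)."""
    return [
        ScenarioSpec(weather, density, anomaly)
        for weather, density, anomaly in product(WEATHER, TRAFFIC_DENSITY, ANOMALY)
    ]


if __name__ == "__main__":
    grid = build_scenario_grid()
    print(len(grid))  # 4 * 3 * 3 = 36 scenarios
    print(grid[0])
```

A full grid grows multiplicatively with each new axis, so larger setups often sample from the grid at random instead of rendering every cell.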

Key Takeaways

- Helps address shortages of diverse training data for complex AI tasks.

- Enables safe testing of sensitive scenarios through controlled digital environments.

- Accelerates development cycles for autonomous systems and computer vision models.

What is synthetic video generation

Synthetic video generation creates video content entirely through computational methods rather than capturing it from the real world. It generates simulated scenes using computer graphics, machine learning, or other algorithmic techniques, allowing developers to control every aspect of the environment, objects, lighting, and camera movement. These videos can range from realistic recreations of real-world settings to entirely imaginative scenarios, and they are increasingly used in training AI systems, particularly for applications like autonomous vehicles, where testing every situation in reality would be unsafe or impractical.

The rise of AI-driven video tools

In recent years, AI-driven video tools have moved from experimental projects to essential components of modern content creation and model training pipelines. These tools can generate simulated scenes from scratch, giving developers and creators unprecedented control over every detail.

Lighting, camera angles, object behaviors, and subtle motions can be adjusted programmatically. This is especially useful for testing systems like autonomous vehicles, where replicating rare or dangerous scenarios in the real world is costly and risky. A single synthetic video can be modified to show different weather conditions, lighting changes, or unexpected interactions, giving models a richer, more diverse learning experience.
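As a rough illustration of turning one clip into several lighting variants, the sketch below applies a random brightness, contrast, and haze change to a whole clip. It assumes clips are held as NumPy `uint8` arrays shaped `(frames, height, width, channels)`. The functions `augment_clip` and `make_variants` are hypothetical helpers, and this pixel-level trick is only a crude stand-in for re-rendering a scene under genuinely different weather.

```python
import numpy as np


def augment_clip(clip: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Apply one random brightness/contrast change and a flat haze overlay to a whole clip.

    clip: uint8 array of shape (frames, height, width, channels).
    The same parameters are used for every frame, so lighting does not flicker over time.
    """
    brightness = rng.uniform(-40.0, 40.0)  # additive shift in pixel units
    contrast = rng.uniform(0.7, 1.3)       # scale factor around mid-gray (128)
    haze = rng.uniform(0.0, 0.4)           # blend weight toward a light-gray "fog" layer

    x = clip.astype(np.float32)
    x = (x - 128.0) * contrast + 128.0 + brightness
    x = (1.0 - haze) * x + haze * 200.0    # blend every pixel toward gray level 200
    return np.clip(x, 0, 255).astype(np.uint8)


def make_variants(clip: np.ndarray, n: int, seed: int = 0) -> list[np.ndarray]:
    """Produce n augmented copies of one clip; the seed makes the set reproducible."""
    rng = np.random.default_rng(seed)
    return [augment_clip(clip, rng) for _ in range(n)]
```

Drawing the parameters once per clip rather than once per frame is the key design choice here: per-frame randomness would make the lighting flicker, which a video model could learn as a spurious cue.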

Benefits of Using AI for Video Generation

- Controlled and safe testing environments. AI-generated videos allow developers to create simulated scenes that would be too dangerous or expensive to film in reality. This is particularly crucial for autonomous vehicles, where edge cases like sudden obstacles or extreme weather can be tested safely.

- Faster iteration and experimentation. Synthetic video enables teams to adjust lighting, camera angles, object placement, or scene dynamics without setting up new physical shoots, speeding up model training and reducing development cycles.

- Diverse data through video augmentation. With video augmentation, a single synthetic clip can produce dozens of variations that help AI models cope with different weather, lighting, and unexpected scenarios, improving generalization.

- Cost efficiency. Producing synthetic videos eliminates many costs associated with real-world filming, such as travel, equipment, actors, or road closures, while still providing high-quality training material.

- Enhanced coverage of rare events. AI generation allows training on critical events that seldom occur naturally, helping prepare models for real-world unpredictability.

- Flexible and scalable. Synthetic video can be scaled up to create massive datasets, supporting continuous training and testing as AI systems evolve, without the bottlenecks of traditional data collection.

- Complementing real-world data. Combining synthetic and real footage gives AI models the best of both worlds: realism from real videos and controlled variability from synthetic ones, maximizing robustness.
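A common way to combine the two sources is to fix the share of synthetic clips in each training batch. The sketch below assumes both datasets are plain Python sequences of clip identifiers. The `mixed_batch` function and the `synthetic_fraction` parameter are hypothetical; the right fraction is a hyperparameter to tune per task, not a known constant.

```python
import random
from typing import Sequence, TypeVar

T = TypeVar("T")


def mixed_batch(real: Sequence[T], synthetic: Sequence[T], batch_size: int,
                synthetic_fraction: float, rng: random.Random) -> list[T]:
    """Draw one batch containing round(batch_size * synthetic_fraction) synthetic items.

    Items are sampled without replacement within the batch, so each source must hold
    at least as many items as are requested from it (random.sample raises otherwise).
    """
    if not 0.0 <= synthetic_fraction <= 1.0:
        raise ValueError("synthetic_fraction must be between 0 and 1")
    n_synth = round(batch_size * synthetic_fraction)
    n_real = batch_size - n_synth
    batch = rng.sample(list(synthetic), n_synth) + rng.sample(list(real), n_real)
    rng.shuffle(batch)  # interleave the two sources instead of grouping them
    return batch


if __name__ == "__main__":
    real = [f"real_{i}" for i in range(100)]
    synthetic = [f"synth_{i}" for i in range(100)]
    batch = mixed_batch(real, synthetic, batch_size=16, synthetic_fraction=0.25,
                        rng=random.Random(0))
    print(sum(x.startswith("synth_") for x in batch))  # 4 synthetic clips out of 16
```

Fixing the ratio per batch, rather than simply concatenating the datasets, keeps a large synthetic set from drowning out a smaller real one.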

Featured product roundup

Sora has quickly established itself as a leading platform for teams working with synthetic video, offering tools that make creating simulated scenes surprisingly straightforward. Unlike traditional video production, where capturing specific scenarios can be time-consuming, expensive, or outright dangerous, Sora lets developers describe and shape scene elements through prompts, from lighting and camera angles to object behaviors and subtle environmental effects.

One of Sora's standout features is its robust support for video augmentation. A single synthetic scene can be modified to produce dozens of variations, including changes in weather, time of day, or object interactions. This ensures that AI models are exposed to a broader range of situations, helping them generalize better when faced with real-world unpredictability.

Beyond technical capabilities, Sora's platform emphasizes usability. Its intuitive interface lets both AI engineers and content creators experiment with complex scenarios without needing advanced programming skills. This lowers the barrier to entry, allowing teams to test creative ideas, explore unusual edge cases, and iterate quickly.

Key Features and Benefits of AI Video Generators

- Creation of simulated scenes. AI video generators allow teams to build simulated scenes with complete control over lighting, camera angles, object placement, and motion, enabling safe testing of complex scenarios, especially for autonomous vehicles.

- Video augmentation capabilities. These platforms can automatically produce multiple variations of a single scene, including changes in weather, lighting, or object behavior, helping AI models generalize better to real-world situations.

- Cost and time efficiency. Generating synthetic video eliminates many expenses related to real-world filming, such as equipment, actors, location logistics, and travel, while significantly reducing production time.

- Safe testing of edge cases. Rare or hazardous scenarios that would be difficult or unsafe to recreate in reality can be simulated, allowing AI systems to learn from situations they might never encounter during real-world data collection.

- Rapid iteration and experimentation. AI-generated videos make it easy to tweak scenarios and run multiple test cycles quickly, accelerating model development and refinement.

- Hybrid training flexibility. Synthetic footage can be combined with real-world video to maximize diversity, coverage, and robustness, striking the right balance between realism and controlled experimentation.

- Scalability. AI video generators support large-scale dataset creation, providing the training material needed for modern machine learning pipelines without being limited by real-world constraints.

- User-friendly interfaces. Many platforms are designed to be accessible to AI engineers and content creators, making it easier to experiment with complex scenarios without deep programming knowledge.

- Continuous improvement. Updates to simulation libraries, assets, and augmentation options allow teams to stay on top of emerging challenges and explore new applications for AI video generation.

- Enhanced model robustness. Synthetic video generation exposes AI systems to a wide variety of controlled, repeatable scenarios, improving overall model reliability and performance in unpredictable real-world conditions.
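Repeatability and scale both depend on being able to regenerate any single clip exactly, on any machine, without replaying the whole dataset. A minimal sketch of one way to do this, assuming each clip is identified by a scenario ID and a variant number: derive the clip's random seed by hashing those identifiers together with a master seed, then split the job list across workers. The names `clip_seed` and `jobs_for_worker` are hypothetical.

```python
import hashlib


def clip_seed(master_seed: int, scenario_id: str, variant: int) -> int:
    """Derive a stable 64-bit seed for one clip from a master seed and the clip's identity.

    Unlike Python's built-in hash() on strings, which is randomized per process,
    SHA-256 returns the same value everywhere, so any clip can be regenerated on its own.
    """
    key = f"{master_seed}:{scenario_id}:{variant}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    return int.from_bytes(digest[:8], "big")  # first 8 bytes -> integer in [0, 2**64)


def jobs_for_worker(scenario_ids: list[str], variants_per_scenario: int,
                    worker_index: int, num_workers: int) -> list[tuple[str, int]]:
    """Split all (scenario, variant) jobs round-robin so each worker gets a disjoint share."""
    jobs = [(s, v) for s in scenario_ids for v in range(variants_per_scenario)]
    return jobs[worker_index::num_workers]
```

Each worker can then pass `clip_seed(...)` to its random number generator (for example `numpy.random.default_rng`) before generating a clip, so the output depends only on the clip's identity and not on which worker produced it or in what order.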

Innovative solutions for modern creators

Platforms today can generate simulated scenes with precise control over lighting, camera angles, object behaviors, and motion, making it possible to test scenarios that would be too expensive, time-consuming, or risky in real life. This opens up new opportunities, from accelerating AI model development to giving content creators creative freedom with highly customizable video outputs.

Comparative Insights Across Tools

- Google Veo 2 offers high-quality generation and extensive video augmentation, which is ideal for autonomous vehicle scenarios where realism and variety are crucial.

- Hailuo focuses on usability and rapid iteration, allowing teams to experiment with edge cases without deep technical expertise.

- Kling provides a robust library of assets and flexible simulation options, perfect for blending synthetic clips with real-world footage to improve model robustness.

- LTX Studio emphasizes creative flexibility, making tweaks to weather, lighting, and object interactions easy while keeping videos realistic.

Across all these tools, the shared advantage is the ability to create diverse simulated scenes, accelerate development cycles, and enhance model reliability. Choosing the right platform depends on whether the priority is ease of use, creative flexibility, dataset scale, or realism, but each can contribute to safer, faster, and more effective AI video generation.

Summary

Synthetic video generation has quickly become crucial for AI development and modern content creation. By producing highly controllable simulated scenes, developers and creators can safely test complex scenarios that would be difficult, expensive, or risky to capture in the real world. Platforms with strong video augmentation capabilities allow a single scene to be transformed in multiple ways, helping AI models generalize better and handle diverse real-world situations.

FAQ

What is synthetic video generation?

Synthetic video generation creates video content entirely through computational methods rather than filming in the real world. For AI training, it allows developers to build simulated scenes with controlled lighting, camera angles, and object behaviors.

Why is synthetic video important for autonomous vehicles?

It provides a safe way to test edge cases, like sudden obstacles or extreme weather, without putting anyone at risk. Models trained on these simulated scenes can better handle real-world unpredictability.

What role does video augmentation play in synthetic video?

Video augmentation introduces variations in lighting, weather, or object behavior, helping AI models generalize across diverse scenarios instead of memorizing specific footage.

How does synthetic video reduce costs and time?

By eliminating the need for physical filming, actors, and locations, synthetic video allows faster iteration and cheaper production, making AI training more efficient.

What are the key benefits of AI video generators?

They allow the creation of simulated scenes, safe testing of edge cases, scalable dataset generation, and rapid iteration, improving AI model robustness and reliability.

Which platforms are leading in AI video generation?

Notable platforms include Google Veo 2, Hailuo, Kling, LTX Studio, Datagen, and Synthesis AI, each offering different mixes of realism, scalability, and video augmentation capabilities.

How do AI video tools support modern content creators?

They allow creators to experiment with highly customizable simulated scenes, enabling creative exploration and data generation for AI models with fewer technical barriers.

What makes synthetic video scalable for AI training?

Platforms can generate massive datasets programmatically, allowing training on hundreds or thousands of varied simulated scenes without real-world constraints.

How does combining synthetic and real footage improve AI models?

Blending both data types exposes models to realistic scenarios while adding controlled variations, which can enhance robustness, accuracy, and adaptability in applications like autonomous vehicles.
