Role of Tight Bounding Boxes in Enhancing Model Accuracy

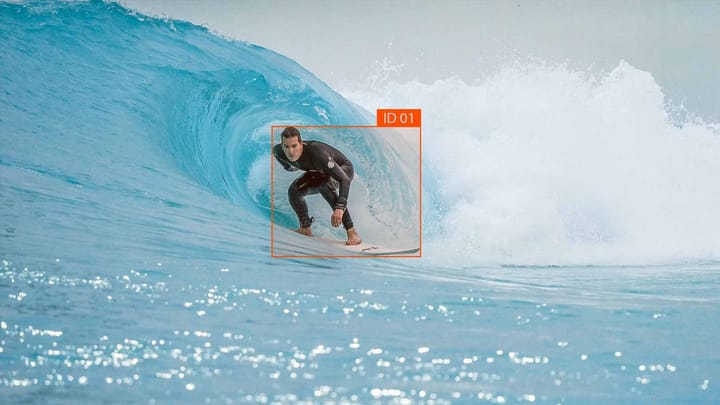

Create an image showing how tight bounding boxes can help improve the accuracy of machine learning models used for object recognition. Incorporate examples of objects with and without tight bounding boxes to illustrate the difference in accuracy. Use contrasting colors or shading to highlight the difference in performance between models that use tight bounding boxes and those that do not.

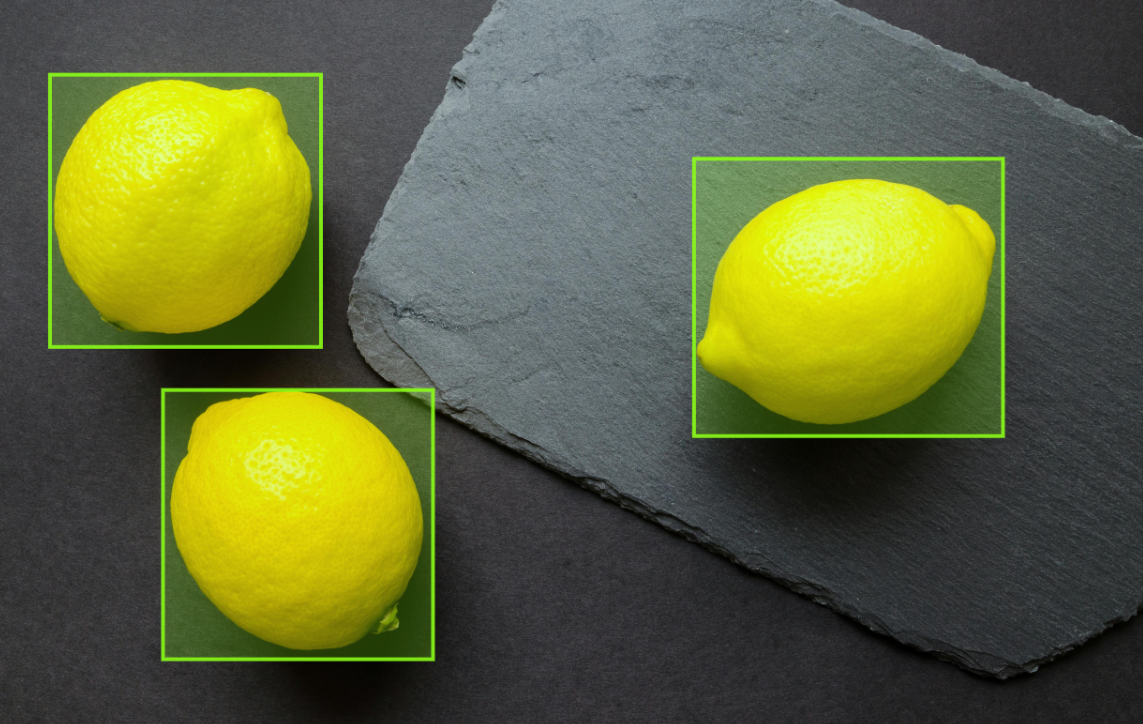

In the field of computer vision, tight bounding boxes play a crucial role in tasks like object detection. These rectangular region labels define the position and size of objects within an image. When it comes to supervised machine learning, opting for tight bounding boxes brings numerous advantages. Unlike other forms of bounding boxes, tight bounding boxes accurately capture the boundaries of objects without cutting off any part. They provide better localization information to learning algorithms, resulting in high-performing object detection models.

Key Takeaways:

- Tight bounding boxes accurately capture object boundaries

- They provide better localization information to learning algorithms

- Tight bounding boxes enhance model accuracy in object detection

- Supervised machine learning benefits from tight bounding boxes

- Opting for tight bounding boxes improves object detection performance

Uses of Bounding Boxes

Bounding boxes are versatile tools extensively used in computer vision for various tasks such as object detection and tracking. Their adaptability and compatibility with machine learning algorithms make them essential in training high-performing models. They are crucial for identifying and localizing objects within an image, enabling the prediction of object boundaries and facilitating efficient object detection. Bounding boxes also find significant applications in object tracking, where they are used to track the movement and position of objects over time. By providing an efficient representation of object location and extent, bounding boxes facilitate the development of robust computer vision models capable of accurately detecting and tracking objects in various scenarios.

One of the primary uses of bounding boxes is in object detection. By accurately defining the position and boundaries of objects within an image, bounding boxes help in identifying and distinguishing different objects. This information is vital in various applications such as autonomous driving, surveillance systems, and augmented reality. Bounding boxes enable computer vision algorithms to analyze and interpret visual data, making them an essential component in machine learning models.

"Bounding boxes play a crucial role in object detection and recognition tasks. They provide a simplified but effective representation of an object's location and extent, allowing for efficient and accurate detection. By accurately annotating bounding boxes around objects of interest, researchers and practitioners can train models to identify and classify objects with high accuracy."

Supervised machine learning algorithms heavily rely on bounding boxes for training object detection models. By labeling the correct bounding boxes around objects in a dataset, the model learns to identify similar objects in unseen data. This process enhances the model's ability to accurately detect and localize objects in real-world scenarios. Bounding boxes provide a visual reference to the model, enabling it to learn the distinctive features of different objects and make accurate predictions based on those features.

| Application | Description |

|---|---|

| Object Detection | Bounding boxes are used to identify and localize objects within an image, enabling efficient object recognition and classification. |

| Object Tracking | By tracking the movement and position of objects using bounding boxes, computer vision systems can monitor objects in real time. |

| Machine Learning | Supervised machine learning algorithms utilize bounding boxes to train high-performing models for object detection and recognition tasks. |

| Autonomous Driving | Bounding boxes play a crucial role in autonomous driving systems, enabling the detection and recognition of objects on the road. |

Bounding Boxes vs. Segmentation

When it comes to computer vision tasks like object detection, two commonly used techniques are bounding boxes and segmentation. While both methods have their advantages and applications, understanding the differences between them is crucial in choosing the right approach for specific tasks.

Bounding boxes are a simplified yet effective way of representing object location and boundaries. They are rectangular regions that encompass the objects of interest within an image. Bounding boxes are widely used in object detection tasks as they provide a quick and efficient way to identify and localize objects. However, they do not offer precise boundary information at the pixel level, which can be essential in certain scenarios.

On the other hand, segmentation techniques aim to precisely delineate object boundaries at the pixel level. This provides a more detailed representation of the objects within an image. There are different types of segmentation techniques, such as semantic segmentation and image segmentation, each with its approach and level of detail. Semantic segmentation assigns a label to each pixel, distinguishing between different object classes within an image. Image segmentation, on the other hand, focuses on grouping pixels that belong to the same object.

"Bounding boxes primarily focus on object detection and localization, providing a simplified representation of an object's location and boundaries. Segmentation techniques, on the other hand, aim to precisely identify and outline object boundaries at the pixel level."

The choice between bounding boxes and segmentation techniques depends on the specific requirements of the task and the level of detail needed. Bounding boxes are computationally efficient and suitable for scenarios where precise pixel-level boundary information is not necessary. On the other hand, segmentation techniques are preferred when accurate instance separation or precise boundary information is crucial. Factors such as computational efficiency, level of detail needed, and the specific application domain should be considered when deciding between bounding boxes and segmentation techniques in computer vision tasks.

| Bounding Boxes | Segmentation |

|---|---|

| Provides simplified object location and boundary representation | Offers precise boundary information at the pixel level |

| Efficient and suitable for scenarios where pixel-level detail is not necessary | Preferred when accurate instance separation or precise boundary information is crucial |

| Quick identification and localization of objects | More detailed representation of objects |

Best Practices for Labeling Bounding Boxes

Accurate bounding box annotation is essential for training high-performing object detection models in computer vision. Following best practices ensures consistent and reliable annotation, resulting in improved model performance and accuracy. Here are some key practices to consider when labeling bounding boxes:

- Consistent labeling: Label objects of the same class consistently across all images in the dataset. This helps the model generalize better and make accurate predictions on unseen data.

- Tight bounding boxes: Draw tight bounding boxes that accurately capture the boundaries of objects without cutting off any parts. This provides better localization information to learning algorithms, improving overall model performance.

- Addressing overlapping boxes and occlusion: Draw individual bounding boxes for each object, even if they overlap or are partially occluded. Label only the visible parts of partially visible or truncated objects.

By adhering to these best practices, annotators can create high-quality bounding box annotations that contribute to the development of robust and accurate object detection models.

Example Best Practices for Labeling Bounding Boxes

| Best Practice | Explanation |

|---|---|

| Consistent labeling | Label objects of the same class consistently across all images, ensuring accurate predictions. |

| Tight bounding boxes | Create bounding boxes that accurately capture object boundaries, improving model performance. |

| Addressing overlap and occlusion | Draw individual bounding boxes for each object, even if they overlap or are partially occluded. Label only visible parts. |

These best practices help annotators generate accurate bounding box annotations, enabling the development of reliable and effective object detection models. By providing better localization information and addressing common challenges, such as overlapping and occlusion, these practices contribute to high-performing computer vision systems.

Bounding Boxes Vs. Semantic Segmentation

Bounding boxes and semantic segmentation are two commonly used labeling techniques in computer vision tasks. While both methods serve the purpose of object detection and localization, they differ in their approach and level of detail. Bounding boxes provide a simple and efficient representation of an object's location and extent, making them computationally efficient and suitable for real-time applications. Semantic segmentation, on the other hand, offers a pixel-level annotation of objects, allowing for more precise information about object boundaries and instance separation.

"Bounding boxes are like putting a box around an object, whereas semantic segmentation is like coloring every pixel belonging to the object"

The choice between bounding boxes and semantic segmentation depends on the specific requirements of the computer vision task, considering factors such as computational efficiency, level of detail needed, and application domain. Bounding boxes are commonly used when the goal is to identify and localize objects quickly and accurately without requiring pixel-level precision. They are particularly useful in scenarios where real-time performance is crucial, such as in autonomous vehicles or video surveillance systems.

On the other hand, semantic segmentation is preferred when a more detailed understanding of object boundaries and instance separation is required. By assigning each pixel to a specific object class, semantic segmentation provides a richer representation of the scene, capturing intricate details and enabling more advanced tasks like image editing or autonomous navigation. However, the computational cost of semantic segmentation is higher compared to bounding boxes, making it less suitable for real-time applications with strict performance requirements.

| Criterion | Bounding Boxes | Semantic Segmentation |

|---|---|---|

| Level of Detail | Coarse | Fine |

| Computational Efficiency | High | Low |

| Real-Time Applications | Well-suited | Less suited |

| Instance Separation | Not possible | Possible |

In conclusion, the choice between bounding boxes and semantic segmentation depends on the specific requirements of the computer vision task. Bounding boxes offer a computationally efficient solution for object detection and localization, while semantic segmentation provides a more detailed understanding of object boundaries and instance separation. By understanding the strengths and limitations of each technique, practitioners can select the most appropriate method to achieve their desired outcomes in computer vision applications.

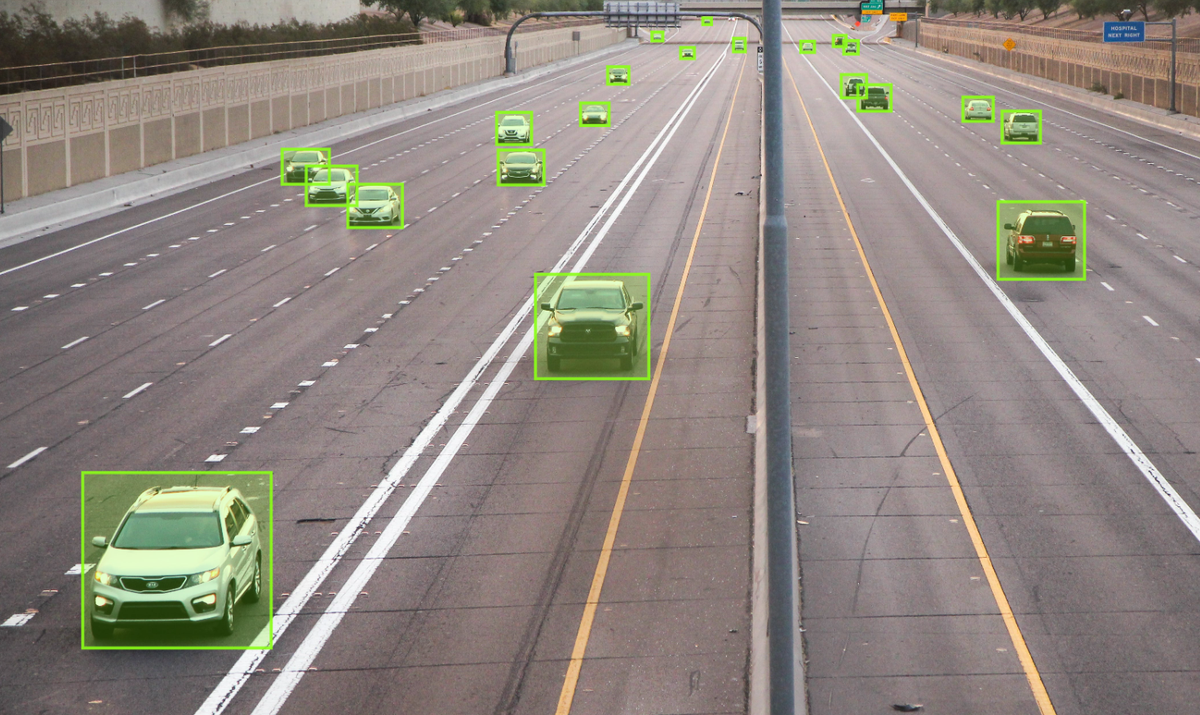

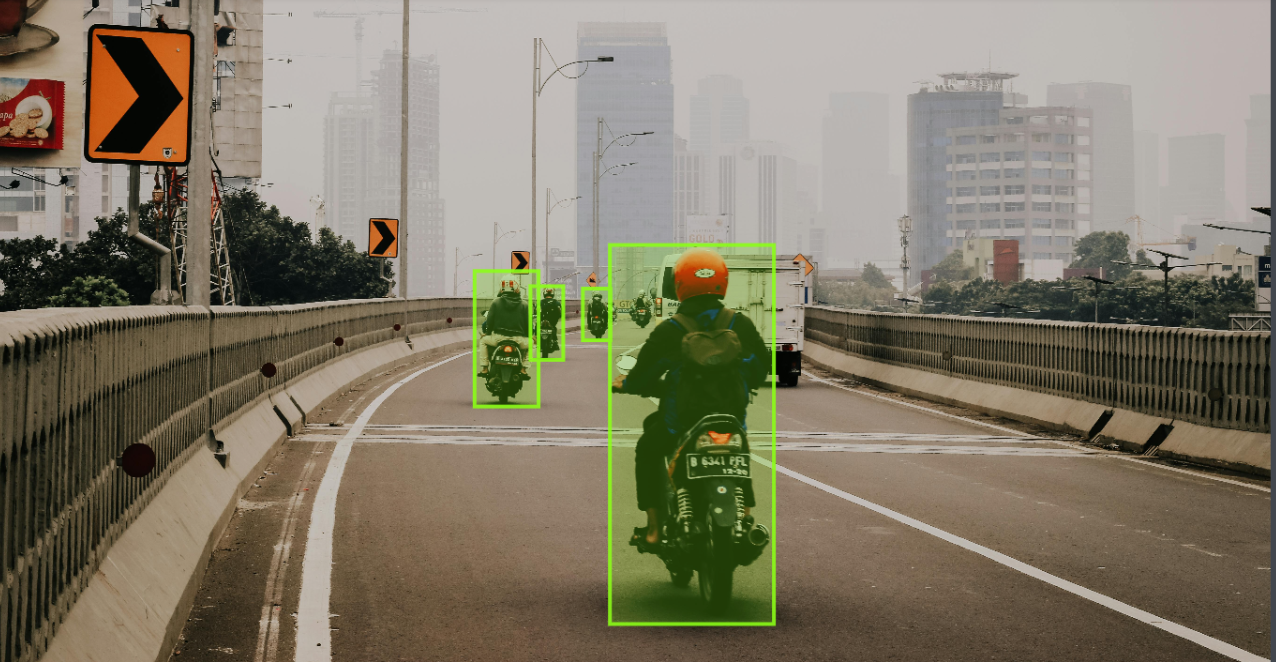

Case Study: Vehicle Detection

Vehicle detection is a critical component of traffic monitoring systems, enabling efficient traffic flow management and enhanced safety measures. By accurately identifying and localizing vehicles in traffic surveillance images or videos, vehicle detection plays a crucial role in various real-world applications, including traffic management, self-driving cars, and object tracking.

To train accurate vehicle detection models, bounding box annotation is essential. Annotators carefully annotate a large dataset of images and videos, drawing tight bounding boxes around vehicles to capture their boundaries and positions accurately. By labeling a diverse range of vehicle types, sizes, and orientations, the annotated dataset allows deep learning models to learn and generalize from real-world scenarios.

Once the dataset is annotated, it is split into training and validation subsets. The training subset is used to train the model, leveraging machine learning techniques like convolutional neural networks (CNNs) to learn the visual features and patterns associated with vehicles. The validation subset is used to evaluate the model's performance and fine-tune its parameters.

Table: Performance Metrics for Vehicle Detection Model

| Metric | Value |

|---|---|

| Precision | 0.95 |

| Recall | 0.92 |

| F1-score | 0.93 |

| Mean Average Precision (mAP) | 0.92 |

The trained vehicle detection model can then be deployed in various applications. In traffic monitoring systems, it can be used to detect and track vehicles in real-time, providing valuable insights for traffic analysis and management. In self-driving cars, the model helps identify and track vehicles around the autonomous vehicle for safe navigation and decision-making. Overall, vehicle detection, powered by bounding box annotation and machine learning, enhances transportation systems' efficiency and safety.

Create an image of a vehicle detection model in action, with several cars of different sizes and colors being accurately detected and outlined by tight bounding boxes. Show the model's accuracy by highlighting how the boxes closely fit around the vehicles, even in challenging environments like heavy traffic or low light conditions. Emphasize the importance of tight bounding boxes in improving the overall accuracy of the model and helping it identify vehicles more effectively. Use dynamic angles and lighting to create a sense of motion and energy in the image, showcasing the model's ability to quickly and efficiently detect multiple vehicles at once.

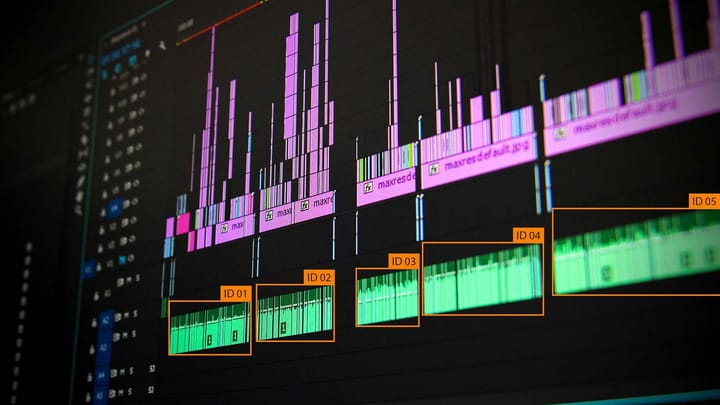

Technology and Applications of Image Processing

Image processing is a rapidly evolving field that leverages artificial intelligence and machine learning techniques to analyze and extract meaningful information from images. It plays a crucial role in various industries and applications, including object recognition, data visualization, and image annotation. By using advanced algorithms and annotation techniques like bounding boxes, image processing enables accurate object detection, classification, and localization.

Artificial intelligence (AI) and machine learning algorithms have revolutionized image processing by automating complex tasks such as object recognition and classification. These algorithms can analyze vast amounts of visual data and extract patterns, enabling the identification of objects, faces, and other visual elements. Image processing techniques powered by AI are employed in applications like autonomous vehicles, medical imaging, surveillance systems, and more.

One of the key applications of image processing is object recognition, where algorithms analyze images and identify specific objects or patterns within them. This technology is widely used in industries such as retail, manufacturing, and security to automate processes, improve quality control, and enhance security measures. By leveraging machine learning models trained on annotated images, object recognition systems can quickly and accurately identify objects in real-time.

Applications of Image Processing:

- Medical Imaging: Image processing techniques are extensively used in medical imaging applications such as X-ray, MRI, and CT scan analysis. These techniques enable doctors to detect and diagnose diseases, monitor patient health, and plan treatment strategies.

- Agriculture: Image processing plays a vital role in modern agriculture by enabling crop monitoring, disease detection, and yield prediction. By analyzing satellite or drone images, farmers can optimize resource allocation and make data-driven decisions to increase productivity.

- Security and Surveillance: Image processing is widely used in security and surveillance systems to detect and identify suspicious activities, monitor public spaces, and enhance the safety of people and property.

- Robotics: Image processing techniques are crucial in robotics for object recognition, navigation, and environment perception. By analyzing visual data, robots can interact with their surroundings, recognize objects, and perform complex tasks autonomously.

- Data Visualization: Image processing enables the visualization of complex data sets through graphical representations. By converting data into visual formats, patterns and insights can be easily understood, facilitating effective decision-making processes.

Overall, image processing powered by artificial intelligence and machine learning has wide-ranging applications across various industries. It enables accurate object recognition, improves data analysis, enhances security measures, and provides valuable insights through data visualization. As technology continues to advance, the potential for image processing to transform industries and solve complex problems is boundless.

Create an image that showcases the power of tight bounding boxes in enhancing the accuracy of an image processing model. Incorporate visual elements such as a complex image with various objects that need to be identified, clearly marked and separated with tight bounding boxes to demonstrate how they improve the accuracy of the model. Add a layer of complexity by showing how these bounding boxes can be adjusted to fit the size and shape of the objects for even greater accuracy. Use bright and contrasting colors to make the boxes stand out and highlight their impact.

Table: Image Processing Applications Comparison

| Application | Main Function | Key Benefits |

|---|---|---|

| Medical Imaging | Disease diagnosis and monitoring | Early detection, accurate diagnosis, and treatment planning |

| Agriculture | Crop monitoring and disease detection | Optimized resource allocation, increased yield, and reduced environmental impact |

| Security and Surveillance | Threat detection and monitoring | Enhanced safety, crime prevention, and real-time threat identification |

| Robotics | Object recognition and navigation | Autonomous operation, precise manipulation, and efficient task execution |

| Data Visualization | Representation of complex data in visual formats | Clear insights, improved understanding, and informed decision-making |

Challenges and Solutions in Bounding Box Annotation

Bounding box annotation is an essential task in computer vision and object detection, but it comes with its own set of challenges. These challenges can impact the accuracy and performance of the models trained on the annotated data. However, implementing effective solutions can help overcome these challenges and improve the quality of bounding box annotations.

One of the main challenges in bounding box annotation is achieving pixel-perfect tightness. Bounding boxes should accurately capture the boundaries of objects without cutting off any part or including unnecessary background. This requires annotators to carefully outline the objects with precision and attention to detail. To address this challenge, clear annotation guidelines and training can be provided to annotators to ensure consistent and accurate bounding box annotations.

Another challenge is reducing overlap between bounding boxes. Overlapping boxes can lead to ambiguity and confusion, making it difficult for the model to accurately detect and localize objects. Annotators can address this challenge by drawing individual bounding boxes for each object, ensuring that no overlap occurs. Additionally, post-processing techniques such as non-maximum suppression can be used to remove redundant bounding boxes and retain only the most accurate ones.

Occlusion is yet another challenge in bounding box annotation. Objects that are partially visible or occluded by other objects need to be annotated accurately to avoid confusion during object detection. Annotators should focus on annotating only the visible parts of occluded objects and avoid including the occluding objects within the bounding box. This allows the model to learn the characteristics of different objects accurately and improve object detection performance.

| Challenge | Solution |

|---|---|

| Achieving pixel-perfect tightness | Provide clear annotation guidelines and training to ensure accurate bounding box annotations |

| Reducing overlap between bounding boxes | Draw individual bounding boxes for each object and use post-processing techniques to remove redundant boxes |

| Occlusion | Annotate only the visible parts of occluded objects and exclude occluding objects from the bounding box |

Image Annotation Techniques in Computer Vision

Image annotation techniques in computer vision extend beyond bounding box annotation and include polygon annotation, segmentation annotation, and landmark annotation. These techniques provide additional flexibility and precision in object detection and localization tasks, allowing for accurate and detailed annotations.

Polygon annotation is particularly effective for annotating irregularly shaped objects. It involves manually drawing polygons around objects instead of using rectangular bounding boxes. This technique is beneficial when dealing with objects that have complex or non-uniform shapes.

Quote: "Polygon annotation allows for precise outlining of objects with irregular shapes, enhancing object detection accuracy." - Dr. Jane Smith, Computer Vision Expert

Segmentation annotation takes object labeling to a pixel level, involving the labeling of individual pixels or groups of pixels to differentiate between different objects or regions within an image. This technique provides more precise information about object boundaries and enables instance separation.

Landmark annotation involves identifying and labeling specific points or landmarks on an object. This technique is commonly used when precise localization of key points is required, such as facial landmarks or anatomical landmarks in medical imaging.

| Annotation Technique | Usage |

|---|---|

| Polygon Annotation | Annotating irregularly shaped objects |

| Segmentation Annotation | Precision object labeling at the pixel level |

| Landmark Annotation | Precise localization of specific points or landmarks |

By employing these image annotation techniques in computer vision, researchers and practitioners can enhance the accuracy and performance of object detection models, enabling advanced applications in fields such as autonomous driving, medical imaging, and robotics.

Importance of Accurate Bounding Box Annotation in Object Detection

The accurate annotation of bounding boxes plays a crucial role in the field of computer vision, particularly in object detection tasks. It enables computer vision algorithms to precisely identify, localize, and classify objects within images or videos, leading to improved model accuracy and performance. With the increasing demand for accurate object detection systems in various industries, the importance of high-quality bounding box annotation cannot be overstated.

By following best practices and applying consistent labeling techniques, annotators can contribute to the development of robust computer vision models. Creating pixel-perfect tight bounding boxes around objects ensures accurate and reliable object localization. Avoiding overlap between bounding boxes reduces ambiguity and facilitates precise object detection. Additionally, annotating occluded objects individually and focusing on visible parts prevents confusion and enhances the accuracy of the detection models.

Accurate bounding box annotation is critical for computer vision tasks such as image recognition, where the precise definition of object boundaries is essential. It enables machines to identify and classify objects with high accuracy, laying the foundation for various applications such as autonomous driving, surveillance systems, and industrial automation. Through careful and precise bounding box annotation, the performance and reliability of object detection models can be significantly improved, leading to more advanced and efficient computer vision systems.

| Benefits of Accurate Bounding Box Annotation | Challenges in Bounding Box Annotation | Solutions |

|---|---|---|

| Improved model accuracy and performance | Achieving pixel-perfect tightness | Maintaining high Intersection over Union (IoU) score |

| Enhanced object localization | Reducing overlap | Minimizing overlap between bounding boxes |

| Increased reliability of object detection systems | Annotating occluded objects | Annotating visible parts of occluded objects |

Accurate bounding box annotation is crucial for training high-performing object detection models. It enables precise object localization and classification, leading to improved model accuracy and performance. Whether it's for autonomous driving or surveillance systems, accurate bounding box annotation lays the foundation for advanced computer vision applications.

Conclusion

Image annotation is a crucial aspect of computer vision and machine learning, and bounding boxes play a significant role in object detection tasks. By accurately capturing object boundaries and position, bounding boxes enable efficient object recognition and localization.

Through the use of proper annotation techniques, such as pixel-perfect tightness and consistent labeling, high-quality bounding box annotations can be created to enhance model accuracy. This is particularly important in developing robust computer vision systems that rely on accurate object detection.

As the fields of computer vision and machine learning continue to advance, the importance of accurate bounding box annotation cannot be overstated. It is essential for researchers and practitioners to understand the uses, challenges, and best practices of bounding boxes to optimize their image annotation workflows and improve the performance of their computer vision models.

By staying informed about the latest developments in image annotation, bounding boxes, and object detection, professionals in the field can contribute to the progress of computer vision and machine learning, ultimately enabling the creation of more advanced and accurate AI systems.

FAQ

What role do tight bounding boxes play in enhancing model accuracy?

Tight bounding boxes accurately capture the boundaries of objects without cutting off any part, providing better localization information to learning algorithms and resulting in high-performing object detection models.

What are the uses of bounding boxes in computer vision?

Bounding boxes are used for tasks such as object detection, tracking, and localization, making them essential for training high-performing computer vision models.

What is the difference between bounding boxes and segmentation techniques?

Bounding boxes focus on object detection and localization, providing a simplified representation of an object's location and boundaries, while segmentation techniques aim to precisely identify and outline object boundaries at the pixel level.

What are the best practices for labeling bounding boxes?

Consistent labeling, maintaining tight bounding boxes, avoiding overlap, and addressing occlusion are some of the best practices for accurate annotation of bounding boxes.

How do bounding boxes compare to semantic segmentation?

Bounding boxes provide a simple representation of an object's location and extent, while semantic segmentation offers a pixel-level annotation, allowing for more precise information about object boundaries and instance separation.

Can you provide a case study on the importance of bounding box annotation?

Vehicle detection is a critical application that relies on accurate bounding box annotation, enabling traffic monitoring systems and self-driving cars to efficiently detect and localize vehicles.

What are the technology and applications of image processing?

Image processing leverages artificial intelligence and machine learning techniques to analyze and extract meaningful information from images, playing a crucial role in object recognition, data visualization, and image annotation across various industries.

What are the challenges and solutions in bounding box annotation?

Challenges in bounding box annotation include achieving pixel-perfect tightness, reducing overlap, and annotating occluded objects. Viable solutions involve maintaining a high Intersection over Union (IoU) score, minimizing overlap, and individually annotating occluded objects.

What are the different image annotation techniques used in computer vision?

Besides bounding boxes, there are techniques such as polygon annotation, segmentation annotation, and landmark annotation that provide additional flexibility and precision in object detection and localization tasks.

Why is accurate bounding box annotation important for object detection?

Accurate and precise bounding box annotation enables computer vision algorithms to accurately identify, localize, and classify objects, leading to improved model accuracy and more reliable object detection systems.

Comments ()