Challenges and Solutions in Implementing Tight Bounding Boxes

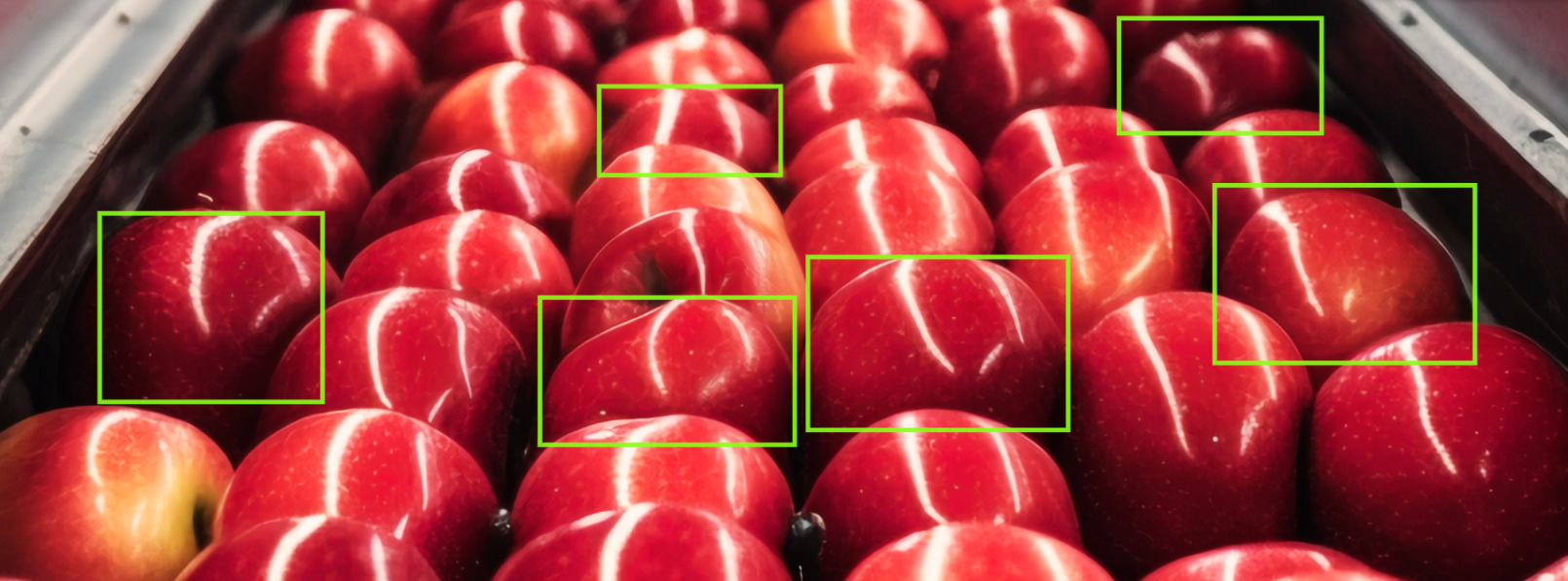

Bounding box annotation is a fundamental and widely used technique in the field of computer vision and machine learning. It involves manually labeling or annotating an image with a bounding box around a specific object or feature of interest. However, implementing tight bounding boxes can present several challenges. This article will explore these challenges and provide viable solutions to achieve accurate and effective bounding box annotation.

Key Takeaways:

- Accurate and tight bounding boxes are essential for precise object detection and classification.

- Challenges in implementing tight bounding boxes include achieving pixel-perfect tightness, reducing overlap, and annotating occluded objects.

- Best practices for bounding box annotation include maintaining a high Intersection over Union (IoU), labeling and tagging all objects of interest, and varying box sizes based on object size.

- Additional tips and tricks include using appropriate annotation tools, leveraging artificial intelligence for automated detection, and using a consistent approach in drawing bounding boxes.

- Bounding boxes play a crucial role in object detection, image processing, and various computer vision and machine learning applications.

What is Bounding Box Annotation?

Bounding box annotation is a process used in computer vision and machine learning applications to manually label or annotate an image with a rectangular box that encloses a specific object or feature of interest. This technique is commonly employed in tasks such as object detection, where the goal is to identify and locate objects within an image or video.

The annotated bounding box defines the coordinates of the object, typically consisting of the top-left and bottom-right corners of the box. Additionally, a class label is assigned to each object, providing valuable information for training image recognition algorithms, building image databases, and conducting various computer vision tasks.

Image Annotation Techniques

Alongside bounding box annotation, there are several other image annotation techniques commonly used in computer vision, including:

- Polygon annotation: This technique involves manually drawing polygons around objects instead of using rectangular bounding boxes. It is particularly effective for annotating irregularly shaped objects.

- Segmentation annotation: Segmentation annotation involves labeling individual pixels or groups of pixels to differentiate between different objects or regions within an image. This technique is commonly used in applications such as semantic segmentation and instance segmentation.

- Landmark annotation: Landmark annotation involves identifying and labeling specific points or landmarks on an object, such as facial landmarks or keypoints on a human body.

Bounding box annotation remains one of the most widely used and versatile techniques due to its simplicity and effectiveness in various computer vision and machine learning applications.

Why Are Bounding Boxes Important?

Bounding boxes play a vital role in various applications, including object detection, image annotation, data visualization, and object recognition. These boxes enable the identification and localization of objects within an image or video, which facilitates tasks such as image classification, object tracking, and face detection. Additionally, bounding boxes provide valuable information for building image databases and training machine learning algorithms to efficiently recognize and classify objects.

In object detection, bounding boxes serve as the foundation for accurate identification and localization of objects within an image. By enclosing an object with a bounding box, computer vision algorithms can precisely delineate the boundaries of the object for further analysis and processing. This information is crucial for tasks that involve understanding and interpreting visual data, such as autonomous driving, surveillance systems, and medical imaging.

Data visualization is another area where bounding boxes are essential. By visualizing the location and dimensions of objects within an image or video, bounding boxes provide a clear representation of the spatial relationships between objects. This aids in the interpretation and understanding of complex visual data, making it easier to extract meaningful insights and make informed decisions based on the analyzed information.

| Application | Description |

|---|---|

| Object Detection | Identifying and localizing objects within an image or video |

| Image Annotation | Labeling and tagging objects of interest within an image |

| Data Visualization | Visualizing objects and their spatial relationships in an image or video |

| Object Recognition | Training machine learning algorithms to recognize and classify objects |

Overall, bounding boxes play a crucial role in computer vision and machine learning applications. They enable the accurate identification, localization, and interpretation of objects within visual data, facilitating tasks such as object detection, image annotation, data visualization, and object recognition. By incorporating bounding boxes into the analysis workflow, researchers and practitioners can enhance the efficiency and effectiveness of their computer vision and machine learning systems.

Types of Bounding Box Annotation

When it comes to bounding box annotation, there are various types that can be used depending on the specific application and characteristics of the objects being enclosed. Each type of bounding box offers its own advantages and is suitable for different scenarios.

Axis-Aligned Bounding Boxes (AABBs): These types of bounding boxes align with the axes (x and y) of the image, making them quick and easy to implement. AABBs are widely used in object detection tasks due to their simplicity and efficiency.

Minimum Bounding Boxes (MBBs): MBBs are designed to tightly fit the object of interest by aligning the box with the object's orientation. They provide a more accurate representation of the object's boundaries compared to AABBs.

Rotated Bounding Boxes: These bounding boxes allow for rotation and can accurately enclose objects with irregular shapes or orientations. They provide a more precise representation of the object's boundaries, especially when dealing with rotated or skewed objects.

Oriented Bounding Boxes (OBBs): Similar to rotated bounding boxes, OBBs accommodate objects with non-axis-aligned orientations. They provide a tighter fit for objects that are inclined or tilted, enabling better object representation.

Minimum Volume Bounding Boxes: These bounding boxes aim to enclose the object of interest with the minimum possible volume. They are useful when precise measurement or analysis of the object's volume is required.

Convex Hull Bounding Boxes: Convex hull bounding boxes are created by connecting the outermost points of a set of objects. They are commonly used when dealing with objects that have complex shapes or contain concave regions.

Understanding the different types of bounding box annotation allows annotators to choose the most suitable approach based on the requirements of their specific task or application. By selecting the appropriate type, accuracy and efficiency can be maximized, leading to improved results in computer vision and machine learning algorithms.

| Bounding Box Type | Description |

|---|---|

| Axis-Aligned Bounding Boxes (AABBs) | Rectangular boxes aligned with the axes of the image |

| Minimum Bounding Boxes (MBBs) | Tightly fit the object of interest by aligning with its orientation |

| Rotated Bounding Boxes | Allow for rotation and accurately enclose objects with irregular shapes or orientations |

| Oriented Bounding Boxes (OBBs) | Accommodate non-axis-aligned objects and provide a tighter fit for inclined or tilted objects |

| Minimum Volume Bounding Boxes | Enclose the object with the minimum possible volume |

| Convex Hull Bounding Boxes | Created by connecting the outermost points of a set of objects, useful for objects with complex shapes |

By understanding the characteristics and applications of each type of bounding box, annotators can make informed decisions during the annotation process. Selecting the appropriate type of bounding box ensures accurate object detection, classification, and localization, contributing to the overall success of computer vision and machine learning tasks.

Best Practices for Bounding Box Annotation

Accurate and precise bounding box annotation is crucial for object detection and computer vision tasks. To ensure high-quality annotations, there are several best practices that should be followed:

- Tightness: Bounding boxes should be tightly fitted around the object of interest to accurately capture its boundaries. This ensures that the annotation provides precise localization information.

- Intersection over Union (IoU): IoU is a metric used to evaluate the accuracy of object detection algorithms. Maintaining a high IoU score indicates that the bounding box aligns well with the ground truth annotation.

- Pixel-perfect tightness: In some cases, objects with complex shapes may require pixel-perfect tightness. This means that the bounding box should closely follow the contours of the object to capture its intricate details.

- Avoiding overlap: Overlapping bounding boxes can lead to ambiguity and confusion. It is important to avoid or minimize overlap between bounding boxes, especially when annotating objects that are close to each other.

- Annotating diagonal items: When dealing with diagonal objects, such as rectangles or tilted objects, it is recommended to use polygons or instance segmentation to accurately outline their shapes.

- Labeling and tagging names: Each bounding box should be labeled and tagged with the appropriate class name or label. This ensures that the objects can be easily identified and categorized during object detection.

- Varying box sizes: Bounding boxes should be sized according to the object they enclose. Larger objects may require larger boxes, while smaller objects may require smaller boxes. This helps improve the overall accuracy of object detection algorithms.

- Annotation of occluded objects: Occluded objects, which are partially obstructed by other objects, should be annotated using appropriate techniques. This can include annotating the visible portions of the object or using specialized annotation methods to capture occlusion accurately.

- Tagging every object of interest: It is important to annotate and tag every object of interest in an image, even if they are small or partially visible. This ensures comprehensive object detection and enhances the performance of computer vision algorithms.

Following these best practices helps ensure the accuracy, consistency, and reliability of bounding box annotation for object detection and other computer vision applications.

Additional Tips and Tricks

When it comes to bounding box annotation, there are several tips and tricks that can help improve your efficiency and accuracy. By utilizing appropriate tools and software, you can streamline the annotation process and enhance your productivity. Consider using keyboard shortcuts to speed up the annotation process, allowing you to quickly draw and adjust bounding boxes with ease. These shortcuts can save you valuable time and help you maintain a consistent workflow.

Another helpful technique is to create templates for annotating similar objects. Templates can be pre-defined bounding box shapes that you can easily apply to objects of the same category or shape. This can significantly reduce the time spent on annotation, especially when dealing with large datasets.

Artificial intelligence can also be leveraged to automate the detection and annotation process. By utilizing AI-powered annotation tools, you can speed up the annotation process and ensure consistent results. These tools can assist in detecting and labeling objects, making the annotation process more efficient and accurate.

Lastly, it's important to approach bounding box annotation with a consistent methodology. This includes maintaining a standardized approach to drawing and labeling bounding boxes, ensuring that they accurately enclose the desired objects. By following a consistent approach, you can reduce errors and improve the overall quality of your annotations.

Table: Comparison of Bounding Box Annotation Tools

| Tool | Features | Price |

|---|---|---|

| Annotate.ai | AI-powered annotation, keyboard shortcuts, template creation | Free trial, subscription-based |

| Labelbox | Collaborative annotations, customizable workflows, AI integration | Free trial, enterprise pricing |

| Keylabs | Super fast video annotation, customizable workflow, all models and formats | Free trial, flexible pricing |

| RectLabel | Mac-based annotation tool, object tracking, bulk annotations | One-time purchase |

Image Processing and Bounding Boxes

Image processing is a vital component in the field of computer vision and involves performing various operations on images to extract meaningful information or enhance their quality. There are two main methods of image processing: analog image processing and digital image processing. Analog image processing utilizes hard copies of images for analysis, while digital image processing employs computers and algorithms to process digital images. Bounding boxes play a crucial role in image processing by enabling the localization and classification of objects within images.

When it comes to analog image processing, bounding boxes are often used to define the region of interest (ROI) within an image. This allows for selective processing of specific areas, enabling targeted analysis or modification of the image. In digital image processing, bounding boxes are utilized to identify and isolate objects within an image. This is particularly valuable for feature extraction and information extraction, as bounding boxes provide precise boundaries for the objects of interest.

Bounding boxes can be used in various image analysis tasks, such as object detection, segmentation, and tracking. By accurately defining the boundaries of objects, bounding boxes facilitate the extraction of features and relevant information from images. This information can then be further analyzed and used for applications such as object recognition, image classification, and pattern recognition. Bounding boxes are an essential tool for researchers and practitioners in the field of computer vision, enabling efficient image analysis and interpretation.

Table: Applications of Bounding Boxes in Image Processing

| Application | Description |

|---|---|

| Object Detection | Bounding boxes are used to localize and classify objects within an image, enabling tasks like object recognition and tracking. |

| Image Segmentation | Bounding boxes can be used to define the boundaries of regions or objects within an image, allowing for accurate segmentation. |

| Feature Extraction | By isolating objects with bounding boxes, precise features can be extracted from images, aiding in analysis and pattern recognition. |

| Information Extraction | Bounding boxes provide boundaries for objects, enabling the extraction of relevant information and data from images. |

Overall, bounding boxes are a fundamental tool in image processing, allowing for the precise localization and classification of objects within images. Whether in analog or digital image processing, bounding boxes play a crucial role in various applications, from object detection to feature extraction and information extraction. By correctly implementing and utilizing bounding boxes, researchers and practitioners can enhance the capabilities of image processing systems and advance the field of computer vision.

Object Detection and Bounding Boxes

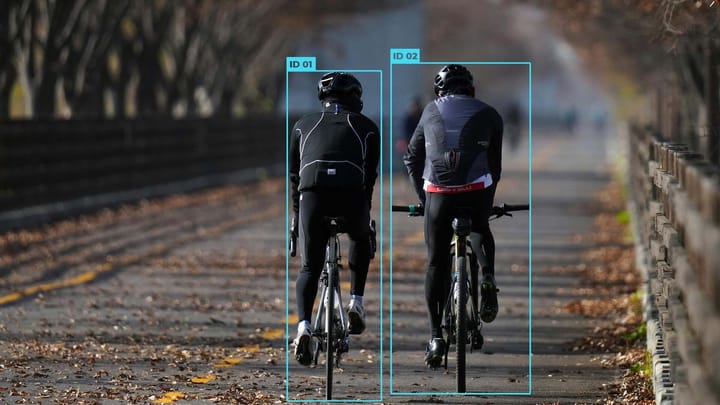

Object detection is a crucial task in computer vision and machine learning, which involves identifying and locating objects within an image or video. Bounding boxes play a vital role in object detection by providing precise boundaries for the objects of interest. These bounding boxes define the spatial extent of the objects, enabling accurate classification and localization.

Neural networks, particularly convolutional neural networks (CNNs), have revolutionized the field of object detection. Frameworks like fast R-CNN, faster R-CNN, and YOLO (You Only Look Once) utilize bounding boxes to achieve accurate and efficient object detection. These frameworks employ deep learning algorithms to analyze the image and predict the presence and location of objects based on the bounding boxes.

The key goal in object detection is to balance accuracy and speed. With the increasing demand for real-time object detection in applications like autonomous vehicles and surveillance systems, there is a need for fast and accurate detection algorithms. Single-shot detectors, such as YOLO, have emerged to fulfill this need by performing detection in a single pass, optimizing speed without compromising accuracy.

Table:

| Framework | Accuracy | Speed |

|---|---|---|

| Fast R-CNN | High | Medium |

| Faster R-CNN | High | Medium |

| YOLO | Medium | High |

| Single-shot detectors | Medium | High |

These object detection frameworks, along with advancements in neural networks and deep learning, have significantly improved the accuracy and efficiency of object detection. Researchers continue to explore new techniques and architectures to further enhance the capabilities of these frameworks, aiming to achieve real-time, high-accuracy object detection in a variety of applications.

Object Localization and Classification

Object detection encompasses two main tasks: localization and classification. Localization involves determining the precise location of objects within an image by predicting the bounding boxes' coordinates. Classification, on the other hand, involves assigning a class label to each detected object to identify the object's category.

The accuracy of object localization and classification relies on the quality and accuracy of the bounding boxes. The bounding boxes should tightly enclose the objects of interest, leaving minimal background or context. This ensures that the neural network focuses on the relevant information within the bounding boxes, improving the accuracy of both localization and classification.

Furthermore, the selection and design of bounding box annotation tools and techniques can also impact the accuracy and efficiency of object detection. These tools should provide intuitive and user-friendly interfaces, allowing annotators to create precise and consistent bounding boxes with ease. The quality of training data, including the annotated bounding boxes, plays a crucial role in training robust and accurate object detection models.

Challenges in Object Detection

Bounding box annotation and object detection involve overcoming various challenges to achieve accurate and efficient results. In the context of object detection, several key challenges arise:

- Dual priorities: Object detection requires simultaneously addressing the tasks of object classification and localization. Balancing these dual priorities ensures accurate identification and accurate localization.

- Speed for real-time detection: Real-time object detection scenarios, such as autonomous driving or video surveillance, demand fast processing and detection algorithms to ensure timely and responsive results.

- Multiple spatial scales and aspect ratios: Objects in images or videos can vary in size and shape. Detecting objects at different spatial scales and aspect ratios requires robust algorithms capable of handling this variability.

- Limited data: The availability of annotated data for training object detection models is often limited. This scarcity of data can hinder the development of accurate and generalizeable models.

Researchers and developers have been actively working to address these challenges through the exploration of various techniques and frameworks. These efforts aim to enhance the accuracy, speed, and efficiency of object detection systems, enabling their widespread application across diverse industries and use cases.

Emerging Techniques in Object Detection

To overcome the challenges in object detection, researchers have proposed several innovative techniques:

- Multi-task loss function: Simultaneously optimizing object classification and localization through multi-task loss functions enables the improvement of overall detection accuracy.

- Regional-based CNNs with anchor boxes: The integration of regional-based CNNs, which generate region proposals using anchor boxes, streamlines the detection process.

- Feature pyramid network: Feature pyramid networks facilitate the detection of objects at different spatial scales, enhancing detection performance across various object sizes.

- Deep learning methods: Leveraging deep learning techniques, such as convolutional neural networks (CNNs), advances the accuracy of object detection by enabling sophisticated feature extraction.

- Data augmentation: Augmenting available data through techniques such as image rotation, flipping, or zooming helps alleviate the limitations posed by limited annotated data.

These emerging techniques offer promising pathways to address the challenges in object detection, fostering continued advancements in the field.

| Challenges in Object Detection | Emerging Techniques |

|---|---|

| Dual priorities | Multi-task loss function |

| Speed for real-time detection | Regional-based CNNs with anchor boxes |

| Multiple spatial scales and aspect ratios | Feature pyramid network |

| Limited data | Deep learning methods |

Through the continuous exploration of innovative approaches and the refinement of existing methodologies, the challenges in object detection can be overcome. These advancements continue to drive progress in computer vision and machine learning, opening up new possibilities for object detection across a wide range of applications.

Solutions and Emerging Techniques

Researchers have proposed several solutions and emerging techniques to address the challenges in object detection. These include:

Multi-Task Loss Function

A multi-task loss function is used to simultaneously optimize both classification and localization in object detection. This approach allows the model to learn and improve its ability to accurately detect and classify objects within an image. By leveraging a multi-task loss function, researchers can achieve better overall performance and accuracy in object detection algorithms.

Regional-Based CNNs with Anchor Boxes

Regional-based convolutional neural networks (CNNs) combined with anchor boxes have emerged as an efficient approach for region proposal generation in object detection. Anchor boxes serve as reference bounding boxes of varying sizes and aspect ratios, allowing the model to predict the location and size of objects within an image. This technique enhances the efficiency and accuracy of object detection algorithms.

Feature Pyramid Network

A feature pyramid network is utilized to detect objects at multiple scales within an image. This technique enables the model to effectively capture objects of different sizes, improving the detection performance for both small and large objects. By incorporating a feature pyramid network, researchers can enhance the robustness and accuracy of object detection systems.

Deep Learning and CNN Base

The utilization of deep learning methods and convolutional neural network (CNN) base architectures has significantly advanced object detection capabilities. Deep learning algorithms, such as YOLO (You Only Look Once) and faster R-CNN (Region-based Convolutional Neural Network), have shown remarkable speed and accuracy in detecting objects. These techniques leverage the power of deep learning to achieve state-of-the-art performance in object detection tasks.

Data Augmentation

Data augmentation techniques play a crucial role in improving the performance and generalization of object detection models. By artificially expanding the training dataset through techniques like rotation, scaling, and flipping, researchers can enhance the model's ability to recognize and classify objects under different variations and perspectives. Data augmentation helps to mitigate overfitting and improves the robustness of object detection algorithms.

These solutions and emerging techniques contribute to the continuous development and improvement of object detection systems. By combining the power of multi-task loss functions, regional-based CNNs with anchor boxes, feature pyramid networks, deep learning, and data augmentation, researchers can overcome challenges and achieve more accurate and efficient object detection results.

Conclusion

Bounding box annotation and object detection play critical roles in the fields of computer vision and machine learning. While implementing tight bounding boxes may present challenges, there are various solutions and best practices available to overcome these obstacles and achieve accurate and effective object detection.

By leveraging advancements in deep learning, researchers have made significant progress in improving the speed, accuracy, and efficiency of object detection algorithms. The integration of multi-task loss functions, regional-based CNNs with anchor boxes, feature pyramid networks, and data augmentation techniques has led to significant advancements in this field.

Object detection is a vital aspect of computer vision and machine learning applications. The challenges associated with bounding box annotation and object detection continue to be addressed, and ongoing research and development efforts are focused on enhancing the capabilities of object detection systems.

Through the collective efforts of the scientific community, object detection technologies will continue to evolve and contribute to the advancement of computer vision and machine learning, paving the way for a wide range of applications in various fields.

FAQ

What is bounding box annotation?

Bounding box annotation is a process of manually labeling or annotating an image with a rectangular box that encloses a specific object or feature of interest.

Why are bounding boxes important?

Bounding boxes are important in object detection, image annotation, data visualization, and object recognition as they enable the identification and localization of objects in an image or video.

What are the types of bounding boxes annotation?

The types of bounding boxes annotation include axis-aligned bounding boxes, minimum bounding boxes, rotated bounding boxes, oriented bounding boxes, minimum volume bounding boxes, and convex hull bounding boxes.

What are the best practices for bounding box annotation?

The best practices for bounding box annotation include ensuring tightness of the bounding box, maintaining high Intersection over Union (IoU), achieving pixel-perfect tightness when necessary, avoiding or reducing overlap between bounding boxes, annotating diagonal objects with polygons and instance segmentation, labeling and tagging all objects of interest, varying the size of bounding boxes based on the object size, and annotating occluded objects with appropriate techniques.

What are some additional tips and tricks for bounding box annotation?

Some additional tips and tricks for bounding box annotation include using appropriate tools and software, utilizing keyboard shortcuts, creating templates for similar objects, leveraging artificial intelligence for automated detection and annotation, and using a consistent approach when drawing bounding boxes and labeling objects.

How are bounding boxes used in image processing?

Bounding boxes are used in image processing to localize and classify objects within images, enabling feature extraction and information extraction for various applications.

What is the role of bounding boxes in object detection?

Bounding boxes play a crucial role in object detection, allowing for the identification and location of objects within an image or video. They are utilized in various object detection frameworks and neural networks, such as fast R-CNN, faster R-CNN, and YOLO, to achieve accurate detection and classification of objects.

What are the challenges in object detection?

The challenges in object detection include the dual priorities of object classification and localization, the need for speed in real-time detection, handling objects at multiple spatial scales and aspect ratios, and the limited availability of annotated data for training object detection models.

What are some solutions and emerging techniques in object detection?

Some solutions and emerging techniques in object detection include the use of multi-task loss functions, regional-based CNNs with anchor boxes, feature pyramid networks, and deep learning methods. Data augmentation techniques are also employed to enhance the performance and generalization of object detection models.

What is the significance of bounding box annotation and object detection?

Bounding box annotation and object detection are essential components of computer vision and machine learning applications. They allow for accurate and effective identification and localization of objects, contributing to advancements in various fields.

Comments ()