Reflections are used in computer vision to visualize the world

When a car drives along a narrow city street, reflections in the glossy paint or side mirrors of parked vehicles can reveal things that would otherwise be hidden, such as a child playing on the sidewalk behind the parked cars. Drawing on this idea, researchers from MIT and Rice University have created a computer vision technique that uses reflections to image the world. Their method turns glossy objects into "cameras," enabling a user to see the world as if looking through the "lenses" of everyday objects like a ceramic coffee mug or a metallic paperweight.

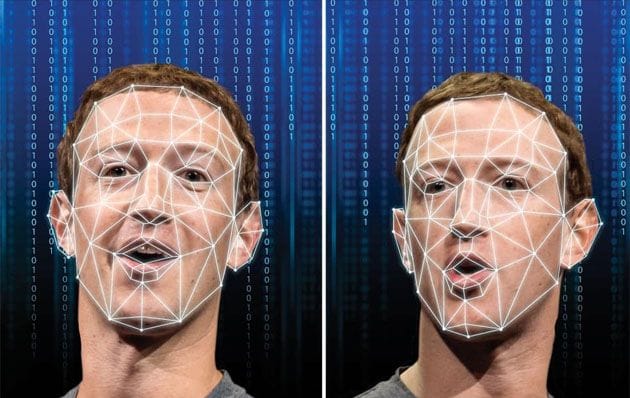

By photographing an object from many angles, the technique turns the object's surface into a virtual sensor that records reflections. The AI-powered system interprets these reflections to estimate depth in the scene and to capture novel views that would only be visible from the object's perspective. The technique could be used to see around corners or past obstacles that block the viewer's line of sight.

The technology could be especially useful for autonomous vehicles. For instance, a self-driving car could use reflections from nearby objects, such as lampposts or buildings, to see around a parked truck.

Kushagra Tiwary, a graduate student in the Camera Culture Group at the Media Lab and co-lead author of the paper, says their formulation turns any surface into a sensor, which makes it applicable in many fields. Tiwary's co-authors include Akshat Dave of Rice University, Nikhil Behari, Tzofi Klinghoffer, Ashok Veeraraghavan, and senior author Ramesh Raskar. The research, available as a preprint, will be presented at the Conference on Computer Vision and Pattern Recognition (CVPR) in Vancouver.

In crime TV shows, characters often "zoom and enhance" surveillance footage to spot reflections, such as those in a suspect's sunglasses, and use them to solve the case. In reality, exploiting reflections is far harder: a reflection is distorted by the object's shape, blended with the object's own color and texture, and shaped by the 3D structure of the world it reflects.

To overcome these challenges, the researchers developed a technique called ORCa (Objects as Radiance-Field Cameras), which works in three steps. First, it photographs the glossy object from many vantage points, capturing multiple reflections on its surface. Next, it uses machine learning to convert the object's surface into a virtual sensor that records the light and reflections striking it. Finally, it uses this virtual sensor to model the 3D environment from the object's point of view.
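A basic geometric fact helps explain why a shiny surface can act as a sensor: for an ideal mirror, a ray from the real camera that hits the surface is reflected about the local surface normal, so each point on the object "looks" in one well-defined direction of the environment. The sketch below computes that direction with the standard law of reflection, r = d - 2(d·n)n. It is only an illustration under simplifying assumptions (a perfectly specular surface with known normals); the function names `dot`, `normalize`, and `reflect` are hypothetical, and this is not ORCa's implementation, which learns the object's geometry and handles glossy rather than mirror-perfect surfaces.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    """Dot product of two 3D vectors."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v: Vec3) -> Vec3:
    """Scale v to unit length."""
    length = math.sqrt(dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)


def reflect(view_dir: Vec3, normal: Vec3) -> Vec3:
    """Mirror-reflect a viewing direction about a surface normal.

    Uses the law of specular reflection r = d - 2 (d . n) n with unit d and n.
    Under the perfect-mirror assumption, r is the direction in the environment
    that this surface point "sees" when treated as a virtual pixel.
    """
    d = normalize(view_dir)
    n = normalize(normal)
    k = 2.0 * dot(d, n)
    return (d[0] - k * n[0], d[1] - k * n[1], d[2] - k * n[2])


# A camera looking straight down (-z) at a point whose normal is tilted
# 45 degrees toward +x: the reflected ray travels sideways, (approximately)
# along +x, a direction the camera itself is not looking in.
print(reflect((0.0, 0.0, -1.0), (1.0, 0.0, 1.0)))
```

The usage line shows the "seeing sideways" effect in miniature: a tilted patch of a shiny surface redirects the camera's line of sight by 90 degrees, which is the intuition behind using objects to look around obstacles.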

Because it images the object from different angles, ORCa captures multi-view reflections, which it uses to estimate the depth between the glossy object and other objects in the scene, as well as the shape of the glossy object itself. ORCa models the scene as a 5D radiance field (three dimensions for position and two for direction), which captures additional information about the intensity and direction of the light rays in the scene. This extra information lets the user see hidden features blocked by corners or obstacles.
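To make "5D" concrete: a radiance field L(x, y, z, θ, φ) takes a 3D position plus a viewing direction written as two angles, and returns how much light is seen from that point in that direction. The toy field below is purely hypothetical (an analytic scene with one small light at the assumed position `LIGHT_POS`, rather than the learned field ORCa reconstructs); the names `direction_to_angles`, `angles_to_direction`, and `toy_radiance` are illustrative. It shows why position and direction are both needed: the same direction can be bright from one point and dark from another.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical scene: a small light source at this position.
LIGHT_POS: Vec3 = (0.0, 0.0, 5.0)


def direction_to_angles(d: Vec3) -> Tuple[float, float]:
    """Unit direction -> (theta, phi): polar angle from +z, azimuth in the x-y plane."""
    theta = math.acos(max(-1.0, min(1.0, d[2])))
    phi = math.atan2(d[1], d[0])
    return theta, phi


def angles_to_direction(theta: float, phi: float) -> Vec3:
    """(theta, phi) -> unit direction vector."""
    s = math.sin(theta)
    return (s * math.cos(phi), s * math.sin(phi), math.cos(theta))


def toy_radiance(x: float, y: float, z: float, theta: float, phi: float,
                 cone_deg: float = 10.0) -> float:
    """Hypothetical 5D radiance field L(x, y, z, theta, phi).

    Returns 1.0 if looking from (x, y, z) along direction (theta, phi) points
    within cone_deg degrees of the light, otherwise 0.0.
    """
    d = angles_to_direction(theta, phi)
    to_light = (LIGHT_POS[0] - x, LIGHT_POS[1] - y, LIGHT_POS[2] - z)
    dist = math.sqrt(sum(c * c for c in to_light))
    cos_angle = sum(a * b for a, b in zip(d, to_light)) / dist
    return 1.0 if cos_angle >= math.cos(math.radians(cone_deg)) else 0.0


# Looking straight up (theta = 0): bright from the origin, dark from (5, 0, 0).
print(toy_radiance(0.0, 0.0, 0.0, 0.0, 0.0), toy_radiance(5.0, 0.0, 0.0, 0.0, 0.0))
```

A 2D image records only one position's worth of this function; a 5D field stores it for every position and direction, which is what lets new viewpoints be synthesized later.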

Once ORCa has captured the 5D radiance field, a virtual camera can be placed anywhere in the scene to synthesize what that camera would see. The user could also insert virtual objects into the environment or change an object's appearance.
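Placing a virtual camera amounts to generating one ray per pixel and asking the radiance field what light is seen along each ray. The sketch below assumes a simple pinhole camera in a z-up world and takes the field as a callback `radiance(origin, direction)`; the function `render_virtual_camera` and that callback convention are hypothetical stand-ins for querying a learned field such as ORCa's, not its actual interface.

```python
import math
from typing import Callable, List, Tuple

Vec3 = Tuple[float, float, float]
# Hypothetical convention: light seen when looking from `origin` along `direction`.
RadianceFn = Callable[[Vec3, Vec3], float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _normalize(v: Vec3) -> Vec3:
    length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / length, v[1] / length, v[2] / length)


def render_virtual_camera(radiance: RadianceFn, eye: Vec3, target: Vec3,
                          width: int, height: int,
                          fov_deg: float = 60.0) -> List[List[float]]:
    """Synthesize an image from a virtual pinhole camera at `eye` looking at `target`."""
    forward = _normalize(_sub(target, eye))
    # Pick a world "up" hint that is not parallel to the viewing direction.
    up_hint: Vec3 = (0.0, 0.0, 1.0) if abs(forward[2]) < 0.999 else (0.0, 1.0, 0.0)
    right = _normalize(_cross(forward, up_hint))
    up = _cross(right, forward)
    half = math.tan(math.radians(fov_deg) / 2.0)  # half-height of the image plane
    aspect = width / height

    image: List[List[float]] = []
    for row in range(height):
        # Pixel-center offset, +1 at the top edge and -1 at the bottom edge.
        v = (1.0 - 2.0 * (row + 0.5) / height) * half
        line: List[float] = []
        for col in range(width):
            u = (2.0 * (col + 0.5) / width - 1.0) * half * aspect
            d = _normalize((forward[0] + u * right[0] + v * up[0],
                            forward[1] + u * right[1] + v * up[1],
                            forward[2] + u * right[2] + v * up[2]))
            line.append(radiance(eye, d))  # query the field along this pixel's ray
        image.append(line)
    return image


# Toy field (hypothetical): brighter when looking upward.
img = render_virtual_camera(lambda o, d: max(0.0, d[2]), (0.0, 0.0, 0.0),
                            (1.0, 0.0, 0.0), width=4, height=3)
print(len(img), len(img[0]))
```

Because the camera is just a ray generator, moving `eye` to any position (for example, somewhere the real camera never stood) only changes which rays are queried; the scene knowledge lives entirely in the radiance field.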

The researchers compared their technique with other methods that model reflections and found that it performed well at separating an object's true color from its reflections and at extracting accurate object geometry and textures. They also found ORCa's depth predictions to be reliable.

Moving forward, the researchers aim to apply ORCa to drone imaging, where it could use faint reflections from objects a drone flies over to reconstruct a scene from the ground. They also plan to extend ORCa so it can use other cues, such as shadows, to reconstruct hidden information, and so it can combine reflections from two objects to image new parts of a scene.

Raskar says that estimating specular reflections is crucial for seeing around corners, and that this work is the natural next step toward doing so with faint reflections in the scene.

Achuta Kadambi, an assistant professor who was not involved with the work, praises the paper's creative approach, which turns the shininess of objects, typically a problem for vision systems, into an advantage. By using the environmental reflections off a glossy object, the system not only reveals hidden parts of the scene but also infers how the scene is lit. This opens up possibilities in 3D perception, including seamlessly inserting virtual objects into real scenes, even under challenging lighting conditions.

He notes that one reason others have not been able to use shiny objects this way is that most previous work required surfaces with known geometry or texture. The authors, by contrast, have introduced a new formulation that requires no such knowledge.

Source: Massachusetts Institute of Technology
