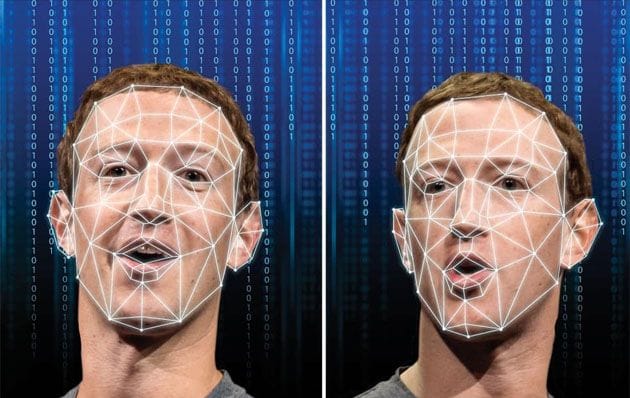

Racial bias in artificial intelligence restricts access to healthcare and financial services

From banks to police forces, artificial intelligence plays a vital role in modern life. Yet a growing body of evidence suggests that the AI used by these organizations can entrench systemic racism.

According to a leading industry expert, this can harm Black and ethnic minority people who are applying for a mortgage or seeking health care.

Biases that need to be confronted

Lawrence is a Distinguished Engineer at IBM. His research shows that the technology used by law enforcement and judicial systems has biases built into it as a result of human prejudices and systemic or institutional preferences. To redress the imbalance, AI developers and technologists can take several steps.

In the words of Lawrence, "AI is an inescapable component of modern society, and it affects everyone. Unfortunately, AI's internal biases are rarely confronted - and I believe now is the time for us to address them."

"Hidden in White Sight", Lawrence's new book, examines the broad range of AI applications in the United States and Europe, including health care, policing, advertising, banking, education, and loan applications.

The book exposes the sobering reality that AI outcomes can harm the people who most need these services.

Lawrence explains: "I have found, through my research and in my personal life, that artificial intelligence is far from being the great equalizer that promotes fairness by removing human bias."

Society's tool

As a software developer for the last thirty years, Lawrence has worked on many AI-based systems at the U.S. Department of Defense, NASA, Sun Microsystems, and IBM.

Drawing on this expertise and experience, Lawrence advises readers on what they can do to fight this issue and on how developers and technologists can build more equitable systems.

His recommendations include rigorously testing AI systems, making datasets fully transparent, providing opt-out options, and building in a "right to be forgotten". Lawrence also believes people should be able to easily check what information is stored against their names and to have clear recourse if that information is incorrect.

According to Lawrence, this issue is not limited to one group, but has societal implications. It is a question of who we wish to be as a society and whether we wish to be in control of technology or whether we wish it to control us.

"If you are a CEO, a tech developer, or somebody who utilizes AI in your daily life, I would urge you to be intentional with how you use this powerful tool."

Source: Taylor & Francis, Tech Xplore
