Mastering AI Model Lifecycle Management

Tasks like data preparation and preprocessing can take up to 80% of time in an AI project. This shows the importance of managing the AI model lifecycle well. Doing so ensures better results and maximizes AI investment value.

AI model lifecycle management involves many phases, and each one is key to the health of an AI project. The lifecycle begins with data preparation and continues through model development, deployment, and maintenance. Attention to detail and adherence to best practices are crucial at every step.

Understanding the AI model lifecycle helps in smoother project development and deployment. It lets you improve resource use, model performance, and ensure responsible AI use. Ultimately, it enhances your business outcomes.

Let's look deeper into AI model lifecycle management. We will cover key aspects, best practices, and tools. These insights will help streamline your AI projects. This is crucial for anyone working with AI, from data scientists to business leaders, aiming to fully leverage AI in their organization.

Key Takeaways

- AI model lifecycle management covers all AI stages.

- Good data prep is vital for AI project success.

- Developing, training, and validating models needs careful selection and testing.

- Deploying models involves scaling and ongoing performance checks.

- AI governance is crucial for responsible AI, focusing on risk and fairness.

Understanding the Importance of AI Model Lifecycle Management

AI technologies are evolving rapidly. With this growth, companies are realizing the need for a solid strategy in handling their AI models. This approach, known as AI model lifecycle management, helps businesses effectively work with their AI. It merges the fields of data science and software engineering, allowing for better creation, deployment, and management of AI systems.

Managing the lifecycle of AI models is vital. It offers a systematic way to deal with the development and use of AI. This method covers every crucial step, ensuring the success of AI projects and meeting set goals.

Improving model performance and reliability is a clear advantage of this method. By putting models through various tests and adjustments, accuracy and robustness are improved. The process involves creating and comparing different models to select the best for active use. It also includes regular checks to keep the models up-to-date and performing at their best for as long as possible.

According to industry experts, data preparation is often considered the hardest and most time-consuming phase of the AI lifecycle, emphasizing the importance of effective data management within the AI model lifecycle management framework.

The method also focuses on scalability and ongoing maintenance of AI models. As models grow and are used more, they need to handle more data and changing business needs. With the right planning, organizations can ensure their AI system grows with their needs. This includes regular updates and retraining to keep the models current.

Key Components of AI Model Lifecycle Management

AI model lifecycle management is critical for successful AI projects. It ensures model reliability and drives business impact. We will explore the vital stages of AI model lifecycle management.

Data Preparation and Preprocessing

Data preparation and preprocessing are the foundations of robust AI models. It begins with collecting data from many sources. Then, the data is cleaned to remove errors and inconsistencies and made suitable for training.

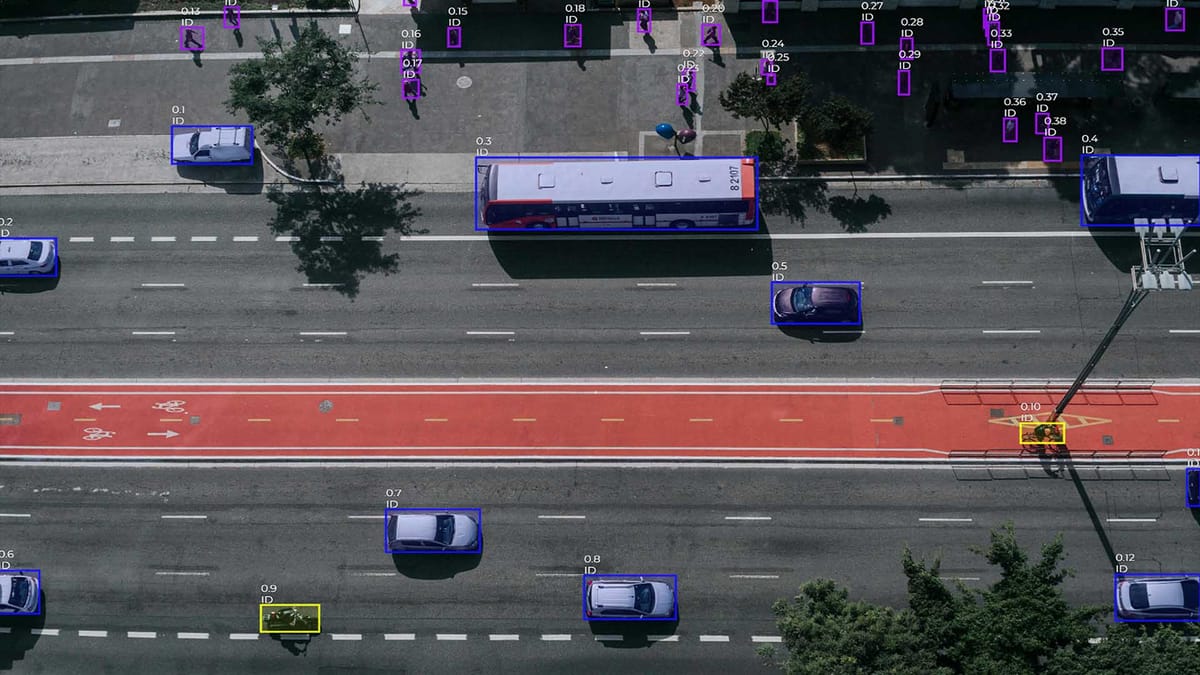

It also involves data normalization and handling missing values. These methods are key for data quality. Data preparation is time-intensive, highlighting the need for effective data handling approaches. This step requires accurate data, and if you're working with computer vision, oftentimes a lot of annotation work, too.
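As a minimal sketch of the two steps just mentioned, the snippet below fills missing values with the column mean and then applies min-max normalization. The helper names and the sample `ages` column are illustrative assumptions, and mean imputation is only one of several reasonable strategies.

```python
from statistics import mean

def impute_missing(values):
    """Replace None entries with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    fill = mean(observed)
    return [fill if v is None else v for v in values]

def min_max_normalize(values):
    """Rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant column carries no spread, so map everything to 0.0.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

# Hypothetical column with one missing entry.
ages = [20, None, 40, 60]
cleaned = impute_missing(ages)
scaled = min_max_normalize(cleaned)
```

In a real project these steps would usually be fitted on the training split only and then reused unchanged on validation and production data.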

Model Development and Training

After data preparation, we move to model development and training. This includes choosing algorithms and architectures, setting hyperparameters, and refining based on performance. Techniques like cross-validation and tuning enhance the model’s performance and applicability.

Model training may require significant resources. Technologies like TensorFlow and PyTorch ease the development. They offer easy integration within the AI lifecycle.

Model Evaluation and Validation

Evaluating and validating models is crucial. It includes assessing accuracy, precision, and recall on validation datasets. Techniques like k-fold cross-validation help confirm that a model's performance is robust across different subsets of the data.
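To make the k-fold idea concrete, here is a small sketch that splits sample indices into k folds, using each fold once for validation and the rest for training. The function name `k_fold_indices` is an assumption for illustration, and the sketch skips shuffling, which is normally applied first.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of k folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    # Spread the remainder so fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size

folds = list(k_fold_indices(n_samples=10, k=3))
```

A model would be trained and scored once per fold, and the scores averaged to estimate how well it generalizes.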

If a model is found lacking, it needs fine-tuning. This signifies the lifecycle’s iterative nature. Rigorous testing helps spot biases and other issues, aiding in better decision-making during deployment.

Model Deployment and Monitoring

After thorough evaluation, models are ready for deployment. This step involves integrating models into production systems and creating easy-to-use interfaces. Tools like Docker and Kubernetes assist in efficient deployment.

Post-deployment, continuous monitoring is vital. It helps spot model degradation. Tools for drift detection and alerts ensure models operate at their best. They trigger actions like retraining or updating the model.
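One way to sketch drift detection is the population stability index (PSI), which compares how a feature is distributed in training data versus live data. PSI is our choice for illustration, not something the tools above prescribe, and the 0.2 alert threshold is a commonly quoted rule of thumb that should be treated as an assumption.

```python
import math

def population_stability_index(expected, actual, n_bins=10):
    """Compare two samples of one feature; larger values mean more drift."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / n_bins or 1.0  # guard against a constant baseline

    def bin_fractions(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), n_bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e = bin_fractions(expected)
    a = bin_fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Hypothetical training-time and production samples of one feature.
baseline = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]

DRIFT_THRESHOLD = 0.2  # assumed alerting threshold
drift_detected = population_stability_index(baseline, shifted) > DRIFT_THRESHOLD
```

A monitoring job could run a check like this per feature on a schedule and raise an alert or queue retraining when the threshold is exceeded.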

Model Governance and Maintenance

Model governance and maintenance are vital for long-term model success. Governance sets standards for development and usage. It includes practices like version control and access mechanisms for accountability and traceability.

Regular maintenance tasks keep models current with business needs. This includes retraining on new data and incorporating user feedback. Governance also addresses ethical concerns, bias mitigation, and compliance with standards.

| Component | Key Activities | Tools and Techniques |
|---|---|---|
| Data Preparation and Preprocessing | Data collection, labeling, cleansing, transformation | Data normalization, feature scaling, handling missing values |
| Model Development and Training | Algorithm selection, hyperparameter tuning, model refinement | Cross-validation, distributed training, TensorFlow, PyTorch |
| Model Evaluation and Validation | Performance metrics, bias detection, robustness assessment | Accuracy, precision, recall, k-fold cross-validation |
| Model Deployment and Monitoring | Model serving, integration, performance monitoring | Containerization (Docker), orchestration (Kubernetes), drift detection |
| Model Governance and Maintenance | Policy establishment, version control, model updates | Documentation, access control, retraining, ethical considerations |

By effectively managing the AI model lifecycle, organizations can ensure AI models meet expectations. A structured approach enhances the overall development and maintenance process. This drives value and competitive advantage for the business.

Benefits of Implementing an Effective AI Model Lifecycle Management Strategy

Strategies that manage the life cycle of AI models bring numerous advantages for companies. They allow firms to handle every stage of an AI model's life, from the start of an idea to its implementation and continuous upkeep. This approach helps in realizing the full power of AI efforts. It aids in making choices based on data, using resources better, and boosting the AI model's reliability and performance.

AI model lifecycle management particularly improves decision-making. It ensures goals are clear and AI projects align with the business's aims, providing reliable insights for informed decisions. According to Gartner, advanced AI adopters often set business metrics early in new projects. This shows setting clear goals is critical. It means you're more likely to succeed if you know exactly what you're aiming for.

Enhanced Decision-Making

Empowering companies to base decisions on AI-generated insights is a key highlight of lifecycle management. By defining problems precisely and linking AI projects to business goals, firms can get meaningful insights. They use these to make crucial decisions effectively. Establishing important metrics early, according to Gartner, is more common among advanced AI users. This demonstrates its importance in AI success.

Improved Model Reliability and Performance

The reliability and performance of AI models are key benefits of lifecycle management. Creating a strong foundation for developing, evaluating, and refining models leads to consistent and precise outcomes. This includes a focus on collecting data systematically, exploring thoroughly, and optimizing algorithms, which significantly boosts an AI model's predictive strength and efficiency.

Also, scaling AI solutions becomes easier with lifecycle management. It improves how AI models can grow and adapt across different parts of a business. Regular oversight and updates keep the models current and relevant. This ensures the AI continues to meet its intended purpose over time.

To wrap up, a sound AI model lifecycle strategy brings several benefits, such as better decision-making, more efficient use of resources, and more reliable, better-performing models. By employing such a strategy, businesses can enhance their AI projects significantly. This leads to more innovation, competitiveness, and growth in the fast-changing digital world.

Challenges in AI Model Lifecycle Management

AI model lifecycle management presents several organizational hurdles alongside its benefits. Chief among these is the growing complexity of AI models. Advanced models and large data sets make maintaining data quality and consistency a challenge. The data preparation phase, critical for quality, demands detailed attention and strong data governance.

Model interpretability poses another key issue. Understanding how sophisticated AI systems reach conclusions is arduous. This opacity can erode trust and create compliance risks, especially in regulated sectors. To overcome this, investing in tools for model interpretability, like feature importance analysis, is vital.
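Feature importance analysis can be sketched with permutation importance: shuffle one feature at a time and measure how much accuracy drops. The toy model, data, and function names below are hypothetical and only meant to show the mechanics.

```python
import random

def accuracy(model, rows, labels):
    """Fraction of rows for which the model's prediction matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(model, rows, labels, n_repeats=5, seed=0):
    """Average accuracy drop per feature when that feature's column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for col in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            shuffled = [r[col] for r in rows]
            rng.shuffle(shuffled)
            permuted = [r[:col] + [v] + r[col + 1:] for r, v in zip(rows, shuffled)]
            drops.append(baseline - accuracy(model, permuted, labels))
        importances.append(sum(drops) / n_repeats)
    return importances

def toy_model(row):
    # Hypothetical model that only looks at the first feature.
    return 1 if row[0] > 0.5 else 0

rows = [[i / 20, (i * 7) % 5] for i in range(20)]
labels = [toy_model(r) for r in rows]
importances = permutation_importance(toy_model, rows, labels)
```

Because the toy model ignores the second feature, shuffling it never changes a prediction, so its importance is zero while the first feature's is positive.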

Addressing model bias stands as a significant challenge. Biases may enter models from improper training data, algorithmic flaws, or historic data biases. Recent focus on ethical AI underscores the importance of actively seeking and mitigating bias. Employing diverse data collection and bias auditing can offer solutions.

"54% of AI projects successfully transition from pilot to production, highlighting the importance of adherence to a structured process in AI model lifecycle management."

Model drift and performance decay over time underscore further challenges. As data and contexts shift, model accuracy can falter. It's crucial to continuously monitor models, establish retraining triggers, and have update processes in place. This includes setting monitoring thresholds and automated alerts.
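A retraining trigger can be as simple as a rolling accuracy window with a threshold. The sketch below assumes ground-truth labels eventually arrive for each prediction; the class name, window size, and threshold are illustrative choices.

```python
from collections import deque

class AccuracyMonitor:
    """Track rolling accuracy and flag when it falls below a threshold."""

    def __init__(self, window_size=100, min_accuracy=0.9):
        self.outcomes = deque(maxlen=window_size)  # oldest outcomes drop off
        self.min_accuracy = min_accuracy

    def record(self, prediction, actual):
        self.outcomes.append(prediction == actual)

    def rolling_accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self):
        # Wait for a full window so a few early errors do not trigger an alert.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.rolling_accuracy() < self.min_accuracy

monitor = AccuracyMonitor(window_size=4, min_accuracy=0.75)
for prediction, actual in [(1, 1), (1, 1), (1, 1), (0, 1)]:
    monitor.record(prediction, actual)
triggered_before = monitor.needs_retraining()  # window accuracy is 0.75
monitor.record(0, 1)                           # window accuracy falls to 0.5
triggered_after = monitor.needs_retraining()
```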

Ensuring AI model scalability is yet another obstacle. With the increasing need for AI applications, scalability is paramount. This entails evaluating and optimizing infrastructure for efficient model use. Given the computational costs of model training, access to advanced resources and distributed computing is often necessary.

Establishing robust model governance frameworks is critical. Compliance with various regulations like GDPR, CCPA, and HIPAA shapes the deployment of AI models. To comply, organizations must ensure strict data privacy and security, perform impact assessments, and keep detailed audit trails. Effective model governance includes delineating roles, ethical standards, and promoting responsible AI use.

| Challenge | Description |
|---|---|
| Model Complexity | Managing the increasing complexity of AI models and ensuring data quality throughout the lifecycle. |
| Model Interpretability | Addressing the lack of transparency in complex AI models and enhancing explainability. |
| Model Bias | Detecting and mitigating bias in AI models caused by imbalanced data or flawed algorithms. |
| Model Drift | Handling performance degradation over time and implementing continuous monitoring and retraining. |
| Model Scalability | Ensuring AI models can scale to meet evolving business needs and optimizing resource utilization. |
| Model Governance | Establishing frameworks for compliance, ethics, and accountability in AI model deployment and management. |

Meeting these challenges necessitates a comprehensive approach. Technical skill, organizational clarity, and a commitment to ethical AI are necessary. Tackling these obstacles ensures the beneficial use of AI technology and guards against pitfalls, securing the success of AI ventures in the long term.

Best Practices for Successful AI Model Lifecycle Management

To ensure AI models are both successful and sustainable for the long term, organizations must follow key best practices during the entire lifecycle. These include defining clear team roles and using automated processes for testing and deployment.

Establish Clear Roles and Responsibilities

Defining roles and responsibilities is pivotal in managing the AI model lifecycle. This fosters collaboration and assigns accountability within the development team. Essential roles span from data exploration to model deployment and project objectives setting:

- Data scientists responsible for data exploration, model development, and training

- Machine learning engineers focused on model deployment and integration

- DevOps professionals handling infrastructure and deployment pipelines

- Business stakeholders providing domain expertise and defining project objectives

Implement Robust Data Governance Policies

Strong data governance is crucial for AI model success. It involves setting high standards for data quality, security, and compliance through several measures:

- Establishing data quality standards and validation processes

- Implementing data access controls and security measures

- Ensuring compliance with relevant regulations, such as GDPR and HIPAA

- Regularly auditing and monitoring data pipelines for anomalies and inconsistencies
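A data validation process might look roughly like the sketch below, which checks each record against simple type and range rules. The rule format, field names, and limits are assumptions made for this example.

```python
def validate_records(records, rules):
    """Return (row_index, field, problem) tuples for every rule violation."""
    problems = []
    for i, record in enumerate(records):
        for field, (expected_type, lo, hi) in rules.items():
            value = record.get(field)
            if value is None:
                problems.append((i, field, "missing"))
            elif not isinstance(value, expected_type):
                problems.append((i, field, "wrong type"))
            elif not lo <= value <= hi:
                problems.append((i, field, "out of range"))
    return problems

# Hypothetical rules: field -> (type, minimum, maximum).
rules = {"age": (int, 0, 120), "income": (float, 0.0, 1e7)}
records = [
    {"age": 34, "income": 52000.0},
    {"age": -5, "income": 48000.0},  # out-of-range age
    {"age": 51},                     # missing income
]
problems = validate_records(records, rules)
```

Running such a check at the start of a data pipeline lets bad records be rejected or quarantined before they reach training.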

Adopt Agile Development Methodologies

Agile development has shown promise in AI. It allows for iterative model development, quickening the experimentation and feedback processes. Essential agile strategies comprise the following:

- Breaking down projects into smaller, manageable sprints

- Conducting regular stand-up meetings to discuss progress and challenges

- Encouraging collaboration and knowledge sharing among team members

- Continuously integrating and delivering model updates and improvements

Leverage Automated Testing and Deployment Pipelines

For smoother model validation and deployment, automated pipelines are a must. These cut down on human errors, boost efficiency, and maintain uniformity across setups. Automation strategies include:

- Implementing continuous integration and continuous deployment (CI/CD) pipelines

- Automating tests for models, including unit and performance tests

- Using containerization technologies, such as Docker, for unified deployment

- Monitoring and logging model performance and resource use in production
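As one possible shape for an automated model test, the sketch below defines a release gate that a CI/CD pipeline could run before deployment. It checks accuracy on a held-out set and the slowest prediction time; the function name, thresholds, and toy models are hypothetical.

```python
import time

def release_gate(predict, examples, min_accuracy=0.9, max_latency_s=0.1):
    """Return a list of failure reasons; an empty list means the model passes."""
    correct = 0
    slowest = 0.0
    for features, expected in examples:
        start = time.perf_counter()
        output = predict(features)
        slowest = max(slowest, time.perf_counter() - start)
        correct += int(output == expected)
    accuracy = correct / len(examples)
    failures = []
    if accuracy < min_accuracy:
        failures.append(f"accuracy {accuracy:.2f} is below {min_accuracy:.2f}")
    if slowest > max_latency_s:
        failures.append(f"slowest prediction took {slowest:.3f}s, limit is {max_latency_s:.3f}s")
    return failures

# Hypothetical held-out examples: (input, expected output).
examples = [(-2, False), (-1, False), (0, True), (3, True)]
good_failures = release_gate(lambda x: x >= 0, examples, max_latency_s=1.0)
bad_failures = release_gate(lambda x: False, examples, max_latency_s=1.0)
```

A pipeline step would fail the build whenever the returned list is non-empty, which keeps a regressed model from being deployed automatically.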

Other AI model lifecycle management best practices involve:

| Best Practice | Description |
|---|---|
| Model Versioning | Implementing version control for models, enabling easy rollbacks and reproducibility |
| Comprehensive Documentation | Maintaining detailed documentation of models, including assumptions, limitations, and performance metrics |
| Cross-functional Collaboration | Fostering collaboration between data scientists, engineers, and business stakeholders for aligned objectives and knowledge sharing |
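To illustrate model versioning with rollback, here is a minimal in-memory registry. It is a sketch under stated assumptions, not a substitute for a real registry service: the class and method names are invented for this example, and artifacts are represented as raw bytes.

```python
import hashlib

class ModelRegistry:
    """Keep versioned model records and a history of promotions."""

    def __init__(self):
        self._versions = {}
        self._history = []  # promoted versions, most recent last

    def register(self, artifact_bytes, metrics):
        version = len(self._versions) + 1
        self._versions[version] = {
            "version": version,
            # The hash lets anyone verify that a deployed artifact is unchanged.
            "sha256": hashlib.sha256(artifact_bytes).hexdigest(),
            "metrics": dict(metrics),
        }
        return version

    def promote(self, version):
        if version not in self._versions:
            raise KeyError(f"unknown model version {version}")
        self._history.append(version)

    def rollback(self):
        if len(self._history) < 2:
            raise RuntimeError("no earlier promoted version to roll back to")
        self._history.pop()
        return self._history[-1]

    def active(self):
        return self._versions[self._history[-1]] if self._history else None

registry = ModelRegistry()
v1 = registry.register(b"weights-v1", {"accuracy": 0.91})
v2 = registry.register(b"weights-v2", {"accuracy": 0.93})
registry.promote(v1)
registry.promote(v2)
restored = registry.rollback()  # v2 misbehaves in production, so return to v1
```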

Following these strategies helps organizations navigate the AI model lifecycle smoothly and keep improving their AI solutions.

The Role of MLOps in AI Model Lifecycle Management

MLOps (Machine Learning Operations) plays a vital role in managing the life cycle of AI models. It acts as a link between development and deployment phases. This facilitates a smooth transition and enhances collaboration among data scientists, DevOps teams, and others. With MLOps, organizations can optimize their AI projects, boost model performance, and create more business value.

The AI project lifecycle depends on repeatable processes, which MLOps automation can provide. Notably, just 15% of top businesses have managed to scale AI capabilities effectively. This underlines the obstacles faced in AI model lifecycle management. It points to the critical need for structured methods like MLOps.

Streamlining Model Development and Deployment Processes

MLOps enhances model development and deployment by incorporating automation and best practices such as continuous integration and deployment (CI/CD). It allows data scientists to concentrate on developing models while ensuring easy deployment and monitoring. Azure Machine Learning (Azure ML), for instance, offers in-built deployment features that include key metrics like response time and failure rates.

Using MLOps principles and tools, organizations can speed up model development, maintain reproducibility, and ensure ongoing model performance in production. This bridges the knowledge and interest gaps between data science and operations teams. It establishes a productive environment for testing, deploying, and maintaining models.

Enabling Collaboration Between Data Scientists and DevOps Teams

Effective collaboration between data scientists and DevOps teams is paramount for successful AI model management. MLOps facilitates this by defining clear roles and workflows. It cultivates a culture of shared responsibility and ensures models are built to meet production demands.

With MLOps, the transition of models between data scientists and DevOps is smooth, enhancing overall efficiency. This approach lets data scientists focus on development, while DevOps manage deployment tasks. The strategy ensures seamless deployment of models that are easy to monitor and retrain when necessary.

| MLOps Practice | Benefit |
|---|---|
| Automated workflows | Streamlines model development and deployment processes |
| Version control | Enables tracking and management of model versions |
| CI/CD pipelines | Facilitates continuous integration and deployment of models |
| Collaboration between data scientists and DevOps | Fosters shared ownership and accountability |
| Model monitoring and retraining | Ensures model performance and adapts to changing data patterns |

Failure to monitor machine learning models can lead to operational risks from outdated models. MLOps highlights the importance of continuous model monitoring and updating. By regularly checking data and model performance, organizations maintain the relevance and utility of their models.

In summary, MLOps significantly improves the handling of AI model lifecycles. It smooths development and deployment steps, enhances team collaboration, and ensures model upkeep. Adopting MLOps leads to optimized AI projects, unlocking their full value and supporting long-term business growth.

AI Model Monitoring and Maintenance Strategies

Ensuring your AI models remain effective over the long haul demands vigilant model monitoring and maintenance strategies. This includes constant model performance checks, spotting anomalies, and sounding alarms when critical thresholds are crossed. Tasks such as retraining models on fresh data, adjusting hyperparameters, and integrating user feedback are part of the routine.

Monitoring goes beyond just the model performance. It includes watching model usage, managing resources, and ensuring scalability. A survey showed that only 54% of AI trials make it to live use, stressing the vital role of ongoing checks to maintain model reliability.

Model drift poses a significant threat, where AI performance drops because the data it's built on changes. Coping means regularly updating and retraining the models. Explainable AI (XAI) becomes crucial here, offering a peek into the models' inner workings to tackle biases and enhance model explainability.

Using MLOps tools to closely watch model statistics like accuracy, precision, and recall is key to successful AI upkeep.

Top-notch AI monitoring and upkeep involve:

- Always tracking performance metrics

- Spotting anomalies early and reacting

- Keeping models updated with new data

- Adjusting hyperparameters based on performance

- Watching how models are used and their resource use

- Making sure models can grow with the business

- Integrating explainable AI for clear insights

| Monitoring Metric | Description | Importance |
|---|---|---|
| Accuracy | Share of all predictions that are correct | High |
| Precision | Share of predicted positives that are actually positive | Medium |
| Recall | Share of actual positives that the model identifies | Medium |
| F1 Score | Harmonic mean of precision and recall | High |
| Latency | Time taken to produce and return a prediction | High |
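The classification metrics in the table can be computed from the counts of true and false positives and negatives. The following sketch assumes binary labels with `1` as the positive class; the function name and sample data are illustrative.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = len(pairs) - tp - fp - fn

    # Fall back to 0.0 when a denominator is zero.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

# Hypothetical labels: two real positives, four predicted positives.
y_true = [1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 1, 0, 0, 0, 0]
metrics = classification_metrics(y_true, y_pred)
```

Here the model finds every real positive (recall 1.0) but half of its positive predictions are wrong (precision 0.5), which is why tracking a single metric can be misleading.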

With sturdy AI upkeep plans in place, your AI solutions will stand the test of time, offering steady business benefits and innovation in the competitive AI arena.

Ensuring Ethical and Responsible AI Practices

As AI technologies integrate into our lives more, focusing on ethical and responsible practices becomes key. Across the lifecycle of AI models, it's vital to spot and address biases. This helps in creating AI systems that are fair, open, and trustworthy. By adhering to regulatory standards, we ensure accountability.

Mitigating Bias and Ensuring Fairness

Addressing bias in AI systems is a significant challenge. These systems learn from data, which often reflects social biases. For instance, a speech recognition model trained on US adults may not perform well for teens in the UK. Defining fairness in AI is complex, as it's influenced by cultural and ethical norms.

Organizations tackle bias by fostering diversity internally and checking datasets for biases. They use algorithms to detect unfairness, facilitate adversarial testing, and aim for ongoing fairness.

- Fostering an inclusive workforce and seeking input from diverse communities

- Assessing training datasets for potential biases and taking steps to remove them

- Implementing bias detection algorithms and fairness metrics to identify and address disparities

- Conducting ongoing adversarial testing to uncover and rectify unfair outcomes
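One simple fairness metric is the demographic parity difference: the gap between the highest and lowest rate of positive predictions across groups. The sketch below is illustrative only; the group labels and predictions are made up, and demographic parity is just one of several fairness definitions.

```python
from collections import defaultdict

def positive_rates(predictions, groups):
    """Rate of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group positive rate (0 means parity)."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical predictions for two groups of four people each.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a"] * 4 + ["b"] * 4
gap = demographic_parity_difference(preds, groups)
```

A bias audit could compute this gap on every candidate model and flag values above an agreed limit for review.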

Promoting Transparency and Explainability

Transparency in AI systems is crucial for trust. Users should understand decisions made by AI models. This can be achieved with easy-to-follow models, comprehensive documentation, and clear prediction explanations.

Strategies for transparency and explainability include:

- Developing interpretable models that allow for easy understanding of decision-making processes

- Providing detailed documentation on model architecture, training data, and performance metrics

- Implementing explainable AI techniques that provide insights into model predictions

- Engaging in open communication with stakeholders about the capabilities and limitations of AI systems

Adhering to Regulatory Compliance Requirements

Growing AI use brings about new ethical and regulatory challenges. To stay compliant, organizations need to be current with data privacy and AI regulations. This helps avoid risks and maintain a good reputation.

Organizations should focus on data governance, regular audits, and a clear AI governance framework. They must collaborate with legal experts to understand and follow changing regulations.

Emphasizing ethical AI practices is critical for building trust and avoiding risks. Success requires a team effort involving various stakeholders. Together, they can ensure AI is developed in ways that meet societal standards and values.

Tools and Technologies for AI Model Lifecycle Management

Managing the lifecycle of AI models effectively requires a mix of tools and tech. These help in simplifying processes, encouraging teamwork, and sustaining model efficiency. This stack includes tools for handling data, controlling versions, building and pushing models, watching their behavior, and logging. With these tools, companies can upscale their AI model creation, deployment, and maintenance without a hitch.

Data Management and Version Control Systems

At the core of AI model lifecycle management are robust data management and version control tools. Tools such as Git and DVC help track and control changes to data and models, so that work is reproducible and team members can collaborate. They make it easy for data scientists and developers to manage diverse datasets, test model variants, and trace back through the history of changes.

Model Training and Deployment Platforms

Frameworks and platforms like TensorFlow, PyTorch, and Kubeflow support building, training, and deploying AI models. They come with pre-built models, APIs, and libraries, which speeds up development. They also support distributed training, making it possible for companies to scale their AI work to substantial amounts of data.

Monitoring and Logging Solutions

Model success hinges on monitoring and logging. Tools like Prometheus, Grafana, and ELK stack allow for real-time model checks and system monitoring. They offer insights into model working and resource utilization. This helps in catching problems early, ensuring AI system health and efficiency.

According to a recent Gartner study, only 54% of AI projects move from the pilot to production. Using the right lifecycle management tools and strategies is key to reaching this success level.

Creating, deploying, and managing AI models effectively relies on a solid strategy supported by advanced tools. These solutions streamline operations, promote team engagement, and guarantee model success. They enable organizations to fully leverage AI's power and derive real business benefits.

FAQ

What is AI model lifecycle management?

AI model lifecycle management is a big framework. It covers many phases like data preparation and model training. It also includes evaluation, deployment, and monitoring. This framework helps companies smoothly handle their AI projects, from start to finish.

Why is AI model lifecycle management important?

Managing the AI model lifecycle well is key to success. It makes decision-making better and helps use resources wisely. Also, it boosts model quality and performance. By following this framework, organizations can tackle AI complexities and get more from their investments.

What are the key components of AI model lifecycle management?

The main parts of AI model lifecycle management are:

- Data preparation and preprocessing.

- Model development and training.

- Evaluation and validation.

- Deployment and monitoring.

- Governance and maintenance.

These pieces all work together to make sure the AI models are working well.

What are the benefits of implementing an effective AI model lifecycle management strategy?

An effective strategy brings several advantages. It makes decision-making sharper and resource use more effective. It also raises model quality and performance. A good strategy aligns AI work with business goals and keeps models running well over time.

What challenges are associated with AI model lifecycle management?

Managing AI lifecycles involves many hurdles. These include model complexity, data issues, and ethics. There's also the challenge of keeping the models updated and scalable for business growth. Setting up strong governance and ethical practices is important too.

What are the best practices for successful AI model lifecycle management?

Success requires setting clear roles and having strong data policies. It also means using agile methods and automated tools for testing and deployment. Adding model version control and good documentation is vital. Plus, everyone from scientists to business must work as a team.

How does MLOps contribute to AI model lifecycle management?

MLOps helps by automating workflows and handling versions. It supports continuous update and deployment practices. This approach boosts teamwork between data scientists and DevOps. The result is faster development and stable models in real use.

What strategies are involved in AI model monitoring and maintenance?

For model care, keep an eye on its performance and spot any issues. Use alerts set for performance drops. Regularly update training data and tweak settings. Also, listen to user feedback and watch how the model is used.

How can organizations ensure ethical and responsible AI practices?

To be ethical, fight bias and aim for fairness in decisions. Use varied data and check for bias regularly. Make your models easy to understand and follow the regulations. This builds trust and cuts risks.

What tools and technologies support AI model lifecycle management?

Tools like Git and TensorFlow aid in managing the AI lifecycle. They keep data and models organized. Platforms like Kubeflow help with training and deployment. And monitoring solutions ensure everything runs smoothly.
