How to spot AI-generated text

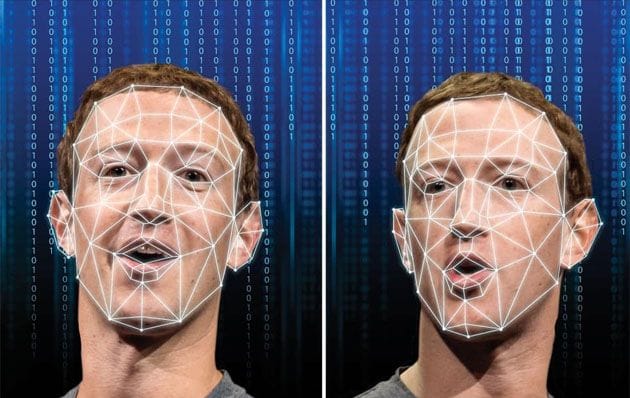

In recent years, chatbots have raised concerns about AI's growing sophistication and accessibility.

In the wake of ChatGPT, educators are rethinking teaching and learning, while others worry about its effects on the job market.

While concerns about employment and schools dominate headlines, the truth is that language models like ChatGPT will have an impact on just about everything.

Research from the University of Pennsylvania suggests that tech users can help mitigate these risks. In a peer-reviewed paper presented at the AAAI Conference on Artificial Intelligence in February 2023, the researchers demonstrated that people can learn to tell the difference between machine-generated and human-written text.

Before choosing a recipe, sharing an article, or providing your credit card information, it is worth taking steps to assess the credibility of your source.

The study was led by Chris Callison-Burch, Associate Professor in the Department of Computer and Information Science (CIS), together with Liam Dugan and Daphne Ippolito, both Ph.D. students in CIS.

"People can learn to recognize machine-generated text," says Callison-Burch. "People start with certain assumptions about the kinds of errors a machine would make, and those assumptions are not necessarily correct. Given enough examples and explicit instruction, we can learn over time to recognize the types of errors machines actually make."

"AI today is surprisingly good at producing fluent, grammatical text," says Dugan. "But it still makes mistakes. We have shown that machines make distinct types of errors, such as common-sense errors, relevance errors, reasoning errors, and logical errors, that we can learn to identify."

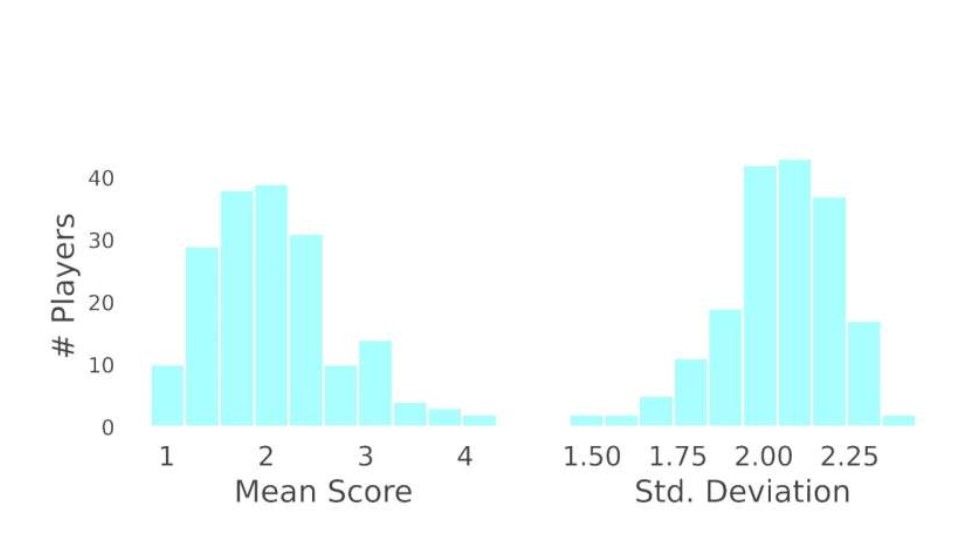

The study uses data collected with *Real or Fake Text?*, a web-based training game designed by the authors.

The game turns the standard experimental method for detection research into a more accurate reflection of how people actually use AI to generate text.

Standard methods ask participants to answer yes or no to whether a machine produced a given text. The task is simply to classify a text as real or fake, and each response is scored as correct or incorrect.

The Penn model refines the standard detection study into an effective training exercise. Every example begins as human-written text and then transitions into machine-generated text, and participants are asked to mark where they believe the transition begins. Participants also identify and describe the features of the text that indicate an error, and they receive a score.
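The article does not say exactly how boundary guesses are scored. As a purely illustrative sketch, the Python below assumes a hypothetical rule: a guess exactly on the boundary earns 5 points, each sentence of delay past the boundary costs one point, and a guess before the boundary (flagging human text as machine-made) earns nothing. The names `score_guess` and `expected_random_score`, the 0-based sentence indexing, and the 5-point maximum are all assumptions for illustration, not the Penn team's code. The second function computes the average score of a player who guesses uniformly at random, a rough analogue of a random-chance baseline.

```python
# Hypothetical scoring sketch for a boundary-detection task.
# Assumptions (not from the study): sentences are indexed from 0,
# sentences before `boundary` are human-written, the rest are
# machine-generated, and points decay linearly after the boundary.

MAX_POINTS = 5  # assumed full credit for an exact guess


def score_guess(guess: int, boundary: int, max_points: int = MAX_POINTS) -> int:
    """Points for one guess of where machine-generated text begins."""
    if guess < boundary:
        # Too early: the player flagged human-written text as machine-made.
        return 0
    # Each sentence of delay past the true boundary costs one point (floor 0).
    return max(0, max_points - (guess - boundary))


def expected_random_score(
    num_sentences: int, boundary: int, max_points: int = MAX_POINTS
) -> float:
    """Average score if a player picks every sentence index with equal probability."""
    total = sum(score_guess(g, boundary, max_points) for g in range(num_sentences))
    return total / num_sentences


if __name__ == "__main__":
    print(score_guess(3, 3))  # exact guess
    print(score_guess(5, 3))  # two sentences late
    print(score_guess(2, 3))  # one sentence early
    print(expected_random_score(10, 3))  # uniform random baseline
```

Under these assumed rules, for a 10-sentence passage whose machine text starts at index 3, an exact guess scores 5, a guess two sentences late scores 3, an early guess scores 0, and uniform random guessing averages 1.5 points. Rewarding late guesses partially but early guesses not at all reflects the idea that a reader who notices the switch a little late has still detected it, while one who flags human text has not.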

In the study, participants performed significantly better than random chance, evidence that humans can, to some extent, detect AI-generated text.

"Our method not only provides a realistic context for training but also gamifies the task, making it more engaging," explains Dugan. The context is realistic because text generated by ChatGPT, like the passages in the game, begins with a human-provided prompt.

"Ten years ago, models had difficulty staying on topic or producing fluent sentences. Today, they rarely make a grammar mistake. Our study identifies the kinds of errors that characterize AI chatbots, but these errors have evolved and will continue to evolve. The concern is not that AI-written text is undetectable. It is that people will need to keep training themselves to recognize the differences and use detection software as a supplement."

According to Callison-Burch, people have valid reasons to be concerned about artificial intelligence, and this study offers evidence that can help ease those concerns. Once we can harness our optimism about AI text generators, we will be able to focus on their capacity to help us write more imaginative, more interesting texts.

Ippolito, co-leader of the Penn study and now a Research Scientist at Google, complements Dugan's focus on detection with work on the most effective uses of these tools. For example, she helped develop Wordcraft, an AI creative writing tool built in collaboration with published authors.

Source: University of Pennsylvania
