A simpler way to improve computer vision

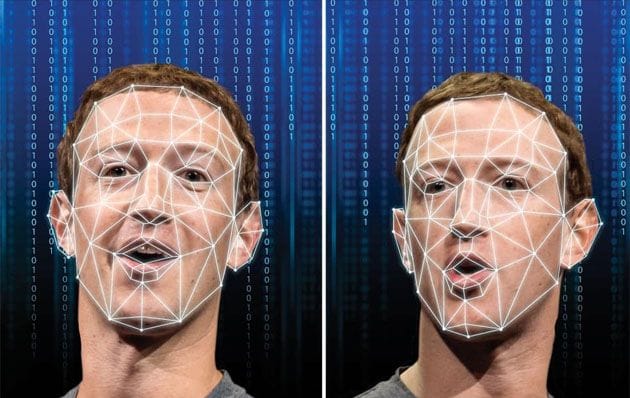

Before a machine-learning model can perform a specific task, such as identifying cancer in a medical image, it must be trained.

To train an image classification model, researchers typically show it millions of example images from a massive dataset.

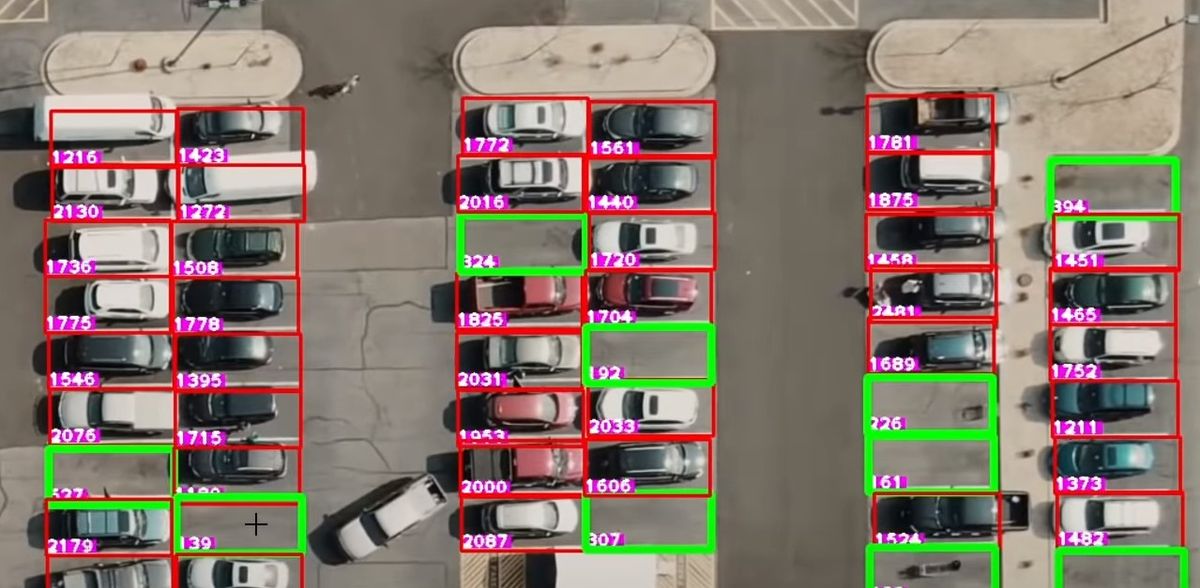

However, using real image data can raise practical and ethical concerns: the images could violate people's privacy, or they could be biased toward a particular racial or ethnic group. Researchers can avoid these pitfalls by using image generation programs to create synthetic data for model training. These methods are limited, however, because building an image generation program that produces effective training data requires expert knowledge.

Researchers from MIT, the MIT-IBM Watson AI Lab, and elsewhere took a different approach. Rather than designing customized image generation programs for a particular training task, they collected a dataset of 21,000 publicly available image generation programs from the internet. They then used this large collection of basic image generation programs to train a computer vision model.

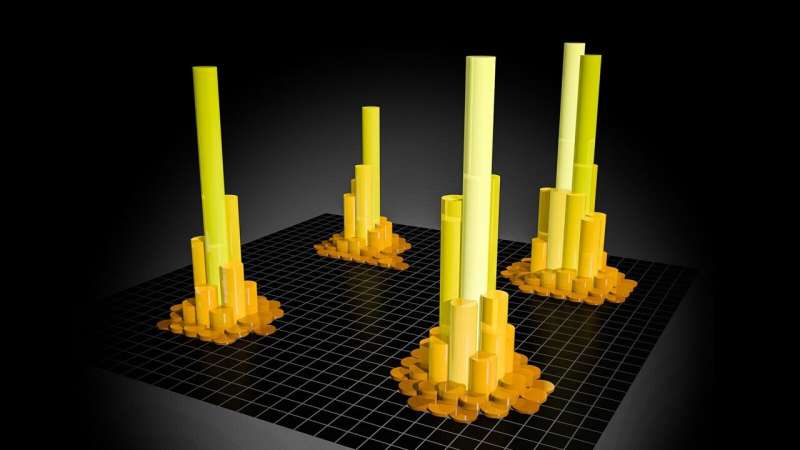

These programs produce diverse images that display simple colors and textures. The researchers did not curate or modify the programs, each of which consisted of only a few lines of code.
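To make "a few lines of code" concrete, here is a minimal sketch of the kind of program involved. The function `sine_texture_program` is hypothetical, written for this illustration rather than taken from the researchers' 21,000-program collection; it assumes NumPy and turns a random seed into an RGB texture of overlapping colored sine waves.

```python
import numpy as np

def sine_texture_program(seed: int, size: int = 64) -> np.ndarray:
    """Hypothetical image program: turns a random seed into an RGB texture
    made of one colored sine-wave pattern per channel."""
    rng = np.random.default_rng(seed)
    ys, xs = np.mgrid[0:size, 0:size] / size       # pixel coordinates in [0, 1)
    img = np.zeros((size, size, 3))
    for channel in range(3):
        freq = rng.uniform(1, 10, size=2)           # random spatial frequencies (x, y)
        phase = rng.uniform(0, 2 * np.pi)           # random phase offset
        img[..., channel] = np.sin(2 * np.pi * (freq[0] * xs + freq[1] * ys) + phase)
    return (img + 1) / 2                            # rescale from [-1, 1] to [0, 1]

image = sine_texture_program(seed=0)
print(image.shape)
```

Each new seed yields a different image, so even one tiny program can supply an unlimited supply of varied training images.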

Models trained with this large dataset of programs classified images more accurately than other synthetically trained models. Their models still performed worse than those trained with real data, but the researchers showed that increasing the number of image programs in the dataset also increased model performance, which points to a path toward higher accuracy.

Using many uncurated programs turns out to be more beneficial than using a small set of programs that people must tweak by hand, says Manel Baradad, an electrical engineering and computer science (EECS) graduate student working in the Computer Science and Artificial Intelligence Laboratory (CSAIL) and lead author of the paper describing this technique. Data are crucial, he notes, but the work shows how far a model can get without real data.

Taking a fresh look at pretraining

Machine-learning models are typically pretrained, which means they are first trained on one dataset to learn parameters that can then be applied to a different task. For example, a model for classifying X-rays might be pretrained on a large dataset of synthetically generated images before being trained on a much smaller dataset of real X-rays.
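The two-stage idea can be illustrated with a deliberately tiny stand-in: a NumPy logistic-regression classifier on random feature vectors rather than a vision model on images. The helper `train_logistic`, the datasets, and all hyperparameters are hypothetical; the only point is that the weights learned during pretraining become the starting point for fine-tuning on a much smaller dataset.

```python
import numpy as np

def train_logistic(X, y, w=None, lr=0.1, epochs=200):
    """Plain gradient-descent logistic regression (hypothetical helper).
    Passing `w` starts training from previously learned (pretrained) weights."""
    w = np.zeros(X.shape[1]) if w is None else w.copy()
    for _ in range(epochs):
        p = 1 / (1 + np.exp(-X @ w))            # predicted probabilities
        w -= lr * X.T @ (p - y) / len(y)        # gradient step on the mean log-loss
    return w

rng = np.random.default_rng(0)
true_w = rng.normal(size=5)                     # toy rule that defines the labels

# Stage 1: pretrain on a large stand-in "synthetic" dataset.
X_syn = rng.normal(size=(10_000, 5))
y_syn = (X_syn @ true_w > 0).astype(float)
w_pre = train_logistic(X_syn, y_syn)

# Stage 2: fine-tune on a much smaller stand-in "real" dataset, starting from w_pre.
X_real = rng.normal(size=(50, 5)) + 0.5         # shifted inputs mimic a synthetic-vs-real mismatch
y_real = (X_real @ true_w > 0).astype(float)
w_ft = train_logistic(X_real, y_real, w=w_pre, lr=0.05, epochs=50)
```

In a real pipeline the pretrained network would be a deep model, and fine-tuning might update only some of its layers, but the hand-off of learned parameters from the first stage to the second works the same way.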

Researchers had previously shown that a number of image generation programs could be used to create synthetic data for model pretraining, but those programs had to be carefully designed so that the synthetic images matched certain properties of real images. This made the technique difficult to scale up.

In the new study, the researchers instead used a large dataset of uncurated image generation programs.

The researchers began by collecting 21,000 image generation programs from the internet. Baradad explains, "These programs were designed by developers worldwide to produce images that possess some of the properties we are interested in. They produce images that are similar to abstract art."

Because the programs are so simple, the researchers did not need to render images in advance to train the model. Instead, they could generate images and train the model at the same time.
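One way to picture generating images and training at the same time is a data stream that renders each image only when a batch is requested. In this sketch, `make_program` and `stream_batches` are hypothetical NumPy-based helpers, not the researchers' code, and each "program" simply draws colored sine-wave textures.

```python
import numpy as np

def make_program(program_id: int):
    """Hypothetical stand-in for one collected image program: returns seed -> RGB image."""
    def program(seed: int, size: int = 32) -> np.ndarray:
        rng = np.random.default_rng([program_id, seed])   # deterministic per (program, seed)
        ys, xs = np.mgrid[0:size, 0:size] / size
        freqs = rng.uniform(1, 8, size=(3, 2))            # one frequency pair per channel
        phases = rng.uniform(0, 2 * np.pi, size=3)
        waves = [np.sin(2 * np.pi * (f[0] * xs + f[1] * ys) + ph) for f, ph in zip(freqs, phases)]
        return (np.stack(waves, axis=-1) + 1) / 2         # (size, size, 3), values in [0, 1]
    return program

def stream_batches(programs, batch_size, rng):
    """Endlessly yield batches rendered on demand; no image is stored ahead of time."""
    while True:
        ids = rng.integers(len(programs), size=batch_size)   # random program per sample
        seeds = rng.integers(2**31, size=batch_size)          # fresh random seed per sample
        images = np.stack([programs[i](int(s)) for i, s in zip(ids, seeds)])
        yield images, ids

programs = [make_program(k) for k in range(100)]
batches = stream_batches(programs, batch_size=8, rng=np.random.default_rng(0))
images, ids = next(batches)    # a training step would consume this batch right away
```

Because images are produced on demand, nothing needs to be stored, and the stream never runs out: each batch pairs fresh random seeds with randomly chosen programs.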

The researchers used their massive dataset of image generation programs to pretrain computer vision models for both supervised and unsupervised image classification tasks.

In supervised learning, the training images are labeled; in unsupervised learning, the model learns to categorize images without labels.
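The difference shows up in what a single pretraining example looks like. This sketch rests on two assumptions the article does not state: for supervised pretraining it uses the id of the program that drew an image as that image's label, and for unsupervised pretraining it produces two random crops of the same image, as contrastive methods do when they learn by matching views of one image. `render`, `random_crop`, `supervised_example`, and `unsupervised_example` are hypothetical names.

```python
import numpy as np

def render(program_id: int, seed: int, size: int = 32) -> np.ndarray:
    """Hypothetical image program: colored stripes whose frequency depends on program_id."""
    rng = np.random.default_rng([program_id, seed])
    xs = np.arange(size) / size
    freq = 1 + program_id % 7 + rng.uniform(0, 1)       # program-specific stripe frequency
    color = rng.uniform(0, 1, size=3)                   # random RGB tint
    stripes = (np.sin(2 * np.pi * freq * xs) + 1) / 2   # values in [0, 1]
    return np.ones((size, size, 1)) * stripes[None, :, None] * color

def random_crop(img: np.ndarray, crop: int, rng) -> np.ndarray:
    """Cut a random square crop (assumes a square image)."""
    y, x = rng.integers(0, img.shape[0] - crop + 1, size=2)
    return img[y:y + crop, x:x + crop]

def supervised_example(program_id: int, seed: int):
    """Supervised (assumption): the label is the id of the program that drew the image."""
    return render(program_id, seed), program_id

def unsupervised_example(program_id: int, seed: int, rng, crop: int = 24):
    """Unsupervised, contrastive-style (assumption): no label, two views of one image."""
    img = render(program_id, seed)
    return random_crop(img, crop, rng), random_crop(img, crop, rng)
```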

Accuracy improvement

Compared with state-of-the-art computer vision models pretrained on synthetic data, their models were more accurate, placing images into the correct categories more often. Although their accuracy still fell short of models trained on real data, their technique reduced the performance gap between models trained on real data and those trained on synthetic data by 38 percent.
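The 38 percent figure describes how much of the accuracy gap was closed, not an absolute gain in accuracy. The hypothetical helper `gap_closed` spells out that arithmetic; the accuracies passed to it are made-up numbers chosen only so the result comes out to 0.38, not values reported by the researchers.

```python
def gap_closed(real_acc: float, prev_synthetic_acc: float, new_synthetic_acc: float) -> float:
    """Fraction of the real-vs-synthetic accuracy gap closed by a new synthetic method."""
    old_gap = real_acc - prev_synthetic_acc    # gap before the new method
    new_gap = real_acc - new_synthetic_acc     # gap remaining after it
    return (old_gap - new_gap) / old_gap

# Hypothetical accuracies in percent, for illustration only.
print(round(gap_closed(real_acc=80.0, prev_synthetic_acc=50.0, new_synthetic_acc=61.4), 2))  # prints 0.38
```

A value of 1.0 would mean synthetic pretraining fully matched real data; 0.0 would mean no improvement over the previous synthetic baseline.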

The researchers also found that performance scales logarithmically with the number of programs. Because the model's performance has not yet saturated, collecting more programs should improve it further.
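Logarithmic scaling means accuracy grows roughly like a + b · ln(N) for N programs, so every doubling of the collection adds about the same amount, b · ln(2). This sketch fits that curve with NumPy's `polyfit`; the helpers `fit_log_scaling` and `predict` are hypothetical, and the "measurements" are synthetic values generated from a = 20, b = 4 purely to show the mechanics.

```python
import numpy as np

def fit_log_scaling(num_programs, accuracy):
    """Least-squares fit of accuracy ≈ a + b * ln(num_programs); returns (a, b)."""
    b, a = np.polyfit(np.log(num_programs), accuracy, deg=1)   # highest-degree coefficient first
    return a, b

def predict(a, b, num_programs):
    """Accuracy predicted by the fitted logarithmic curve."""
    return a + b * np.log(num_programs)

# Hypothetical data generated from a = 20, b = 4, for illustration only.
n = np.array([1_000, 2_000, 5_000, 10_000, 21_000])
acc = 20 + 4 * np.log(n)
a, b = fit_log_scaling(n, acc)
print(predict(a, b, 42_000) - predict(a, b, 21_000))   # gain from doubling, equal to b * ln(2)
```

Under a logarithmic trend, gains never stop but each one costs twice as many programs as the last, which is why a curve that has not saturated still rewards collecting more.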

The researchers also pretrained a model on each image generation program individually to identify the factors that contribute to model accuracy. They found that the model performed better when a program generated a more diverse set of images. They also found that colorful images with scenes that fill the entire canvas tend to improve model performance the most.
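The article does not say how diversity, colorfulness, or canvas coverage were measured, so the functions here are illustrative proxies chosen for this sketch: the Hasler and Süsstrunk colorfulness metric, the fraction of pixels that differ from an assumed flat background, and the mean pairwise distance between images drawn from one program. `canvas_coverage` and `diversity` are hypothetical helpers, and the background color and tolerance are arbitrary assumptions.

```python
import numpy as np

def colorfulness(img: np.ndarray) -> float:
    """Hasler and Süsstrunk colorfulness metric for an RGB image with values in [0, 255]."""
    r, g, b = (img[..., c].astype(float) for c in range(3))
    rg = r - g                      # red-green opponent channel
    yb = 0.5 * (r + g) - b          # yellow-blue opponent channel
    std_root = np.sqrt(rg.std() ** 2 + yb.std() ** 2)
    mean_root = np.sqrt(rg.mean() ** 2 + yb.mean() ** 2)
    return float(std_root + 0.3 * mean_root)

def canvas_coverage(img: np.ndarray, background=(0, 0, 0), tol=10) -> float:
    """Hypothetical proxy: fraction of pixels that differ from an assumed flat background."""
    diff = np.abs(img.astype(float) - np.asarray(background, dtype=float)).max(axis=-1)
    return float((diff > tol).mean())

def diversity(images: np.ndarray) -> float:
    """Hypothetical proxy: mean pairwise L2 distance between images from one program."""
    flat = images.reshape(len(images), -1).astype(float)
    dists = np.linalg.norm(flat[:, None, :] - flat[None, :, :], axis=-1)
    n = len(images)
    return float(dists.sum() / (n * (n - 1)))    # average over ordered pairs, excluding i == j
```

Scoring each program this way and correlating the scores with per-program pretraining accuracy is one plausible way to probe such factors, though not necessarily the one the researchers used.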

Having demonstrated the effectiveness of this pretraining approach, the researchers plan to extend their technique to other types of data, such as multimodal data that combine text and images. They also want to continue exploring ways to improve image classification performance.

According to Baradad, models still need to be trained on real data; the purpose of this work is to set a direction that other researchers can follow.

Source: Massachusetts Institute of Technology
