Federated Learning for Privacy-Preserving Data Annotation

Federated learning offers a powerful solution for privacy-preserving data annotation by enabling decentralized labeling without transferring raw data to a central server. Instead of pooling raw data, federated learning combines model updates from multiple sources, and secure aggregation protocols can ensure that no party, including the coordinating server, can access any individual contribution.

A key advantage of federated learning is that it supports GDPR compliance, making it suitable for industries where regulatory obligations are non-negotiable. With secure aggregation, insights can be extracted at scale while limiting what can be learned about any individual participant, fostering trust between organizations and data owners.

Key Takeaways

- Centralized data collection faces legal and technical challenges in privacy-focused markets.

- Device-level processing eliminates raw data transmission vulnerabilities.

- Collaborative labeling maintains model accuracy across distributed sources.

- Adopting these methods can reduce compliance risks while cutting cloud costs.

- Technical frameworks balance security needs with AI performance requirements.

Understanding Federated Learning: Privacy and Distributed Annotation

Federated learning is a distributed machine learning paradigm that supports privacy-preserving data annotation through decentralized labeling. In traditional workflows, data must be collected and centralized before annotation and model training, creating vulnerabilities around privacy and control. Federated learning changes this by enabling local model training on edge devices or within organizational boundaries, keeping annotated data where it was created. With secure aggregation, clients share only masked or encrypted model updates, so the coordinating server can recover their combined result but not any individual contribution.
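The local-training-then-aggregation loop described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: each client holds its own labeled (x, y) pairs for a one-variable linear model, and the server combines client parameters with a FedAvg-style average weighted by sample count, a common choice that this article does not itself prescribe. The helper names (`local_train`, `fed_avg`, `run_rounds`) are hypothetical, and secure aggregation is left out for clarity.

```python
"""Minimal FedAvg-style sketch (hypothetical helpers, not a specific framework).

Assumption: each client holds locally labeled (x, y) pairs for a 1-D linear
model y ~ w*x + b and sends back only its parameters and its sample count.
"""
from typing import List, Tuple

Params = Tuple[float, float]  # (w, b)


def local_train(params: Params, data: List[Tuple[float, float]],
                lr: float = 0.01, epochs: int = 50) -> Params:
    """Plain gradient descent on mean squared error, using only this client's data."""
    w, b = params
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def fed_avg(updates: List[Tuple[Params, int]]) -> Params:
    """Average client parameters, weighting each client by its sample count."""
    total = sum(n for _, n in updates)
    w = sum(p[0] * n for p, n in updates) / total
    b = sum(p[1] * n for p, n in updates) / total
    return w, b


def run_rounds(clients: List[List[Tuple[float, float]]], rounds: int = 20) -> Params:
    global_params: Params = (0.0, 0.0)
    for _ in range(rounds):
        # Each client starts from the current global model and trains locally.
        updates = [(local_train(global_params, data), len(data)) for data in clients]
        # The server sees only parameters and counts, never the (x, y) pairs.
        global_params = fed_avg(updates)
    return global_params
```

For example, with three clients whose data all follow y = 2x + 1, `run_rounds(clients)` ends up very close to `(2.0, 1.0)` even though no client's labeled pairs ever reach the server.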

In sectors involving sensitive or regulated data, federated learning offers a compelling balance between utility and privacy. Organizations can more readily meet stringent GDPR requirements while still benefiting from diverse, richly annotated datasets. Decentralized labeling becomes feasible and efficient: distributed teams or devices label data independently and contribute what they learn, in the form of model updates, to a global model.

Centralized vs. Distributed System Architectures

In centralized system architectures, a single server or a tightly controlled infrastructure manages all data and processing. This structure simplifies deployment and maintenance but introduces critical vulnerabilities, especially in the context of data annotation. When raw data is transferred to a central hub, concerns about privacy, data sovereignty, and potential breaches become prominent. Centralized systems often struggle with scalability and latency, particularly when serving global annotation teams or high-volume streams. These limitations are further compounded by the increasing demand for GDPR compliance, which restricts the movement and storage of personal data across jurisdictions.

In contrast, distributed system architectures support decentralized labeling and model training, allowing data processing to occur locally across multiple nodes or devices. Using secure aggregation, model updates are combined without exposing any individual participant's data or update, preserving confidentiality and reducing regulatory risk. Data sovereignty is respected by design, as information stays in its original location, giving contributors far greater control over how it is used.
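To see why a coordinating server can learn the sum of updates but not any single update, here is a toy version of pairwise additive masking, one well-known approach to secure aggregation. It is a sketch under strong simplifying assumptions: every pair of clients already shares a random seed (real protocols derive these with key agreement), no client drops out mid-round, and updates have already been encoded as integers, for example by fixed-point quantization. All names are hypothetical.

```python
"""Toy secure aggregation via pairwise additive masking (illustrative only).

Assumptions: pairwise seeds are pre-shared, no client drops out, and each
update is a vector of integers. All arithmetic is modulo MODULUS.
"""
import random
from typing import Dict, List, Tuple

MODULUS = 2 ** 32


def pair_mask(seed: int, length: int) -> List[int]:
    """Expand a shared seed into a pseudorandom mask vector."""
    rng = random.Random(seed)
    return [rng.randrange(MODULUS) for _ in range(length)]


def mask_update(client_id: int, update: List[int],
                pair_seeds: Dict[Tuple[int, int], int]) -> List[int]:
    """For each pair (i, j) with i < j, client i adds the mask and client j subtracts it."""
    masked = [u % MODULUS for u in update]
    for (i, j), seed in pair_seeds.items():
        if client_id not in (i, j):
            continue
        mask = pair_mask(seed, len(update))
        sign = 1 if client_id == i else -1
        masked = [(m + sign * r) % MODULUS for m, r in zip(masked, mask)]
    return masked


def aggregate(masked_updates: List[List[int]]) -> List[int]:
    """Server-side sum: every pairwise mask cancels, leaving only the total."""
    return [sum(col) % MODULUS for col in zip(*masked_updates)]


# Demo with three clients.
updates = {0: [5, 1, 7], 1: [2, 2, 2], 2: [0, 3, 1]}
seed_source = random.Random(42)  # stands in for pairwise key agreement
ids = sorted(updates)
pair_seeds = {(i, j): seed_source.randrange(2 ** 63) for i in ids for j in ids if i < j}
masked = [mask_update(c, updates[c], pair_seeds) for c in ids]
print(aggregate(masked))  # [7, 6, 10]
```

Each masked vector looks random on its own, yet the masks cancel in the sum. Production protocols add further machinery, such as secret-sharing the seeds, so that the sum can still be recovered when some clients drop out.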

Advanced Protection Frameworks

Advanced protection frameworks combine cryptographic techniques such as homomorphic encryption, statistical safeguards such as differential privacy, and secure aggregation protocols to keep individual data points hidden or indistinguishable during processing. Such frameworks are vital in regulated industries, where data exposure could lead to serious compliance violations.
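Differential privacy is typically applied to federated updates by bounding each update's influence and then adding calibrated noise. The sketch below shows that clip-and-noise step (the Gaussian mechanism) on a single client update. It adds noise on the client, a local variant; many deployments instead add noise once to the securely aggregated sum. `clip_norm` and `noise_multiplier` are illustrative parameters, and turning them into a formal (epsilon, delta) guarantee requires a privacy accountant that is not shown here.

```python
"""Clip-and-noise (Gaussian mechanism) applied to one client update.

Sketch only: parameter values are illustrative, and no privacy accounting
is performed.
"""
import math
import random
from typing import List, Optional


def l2_norm(vec: List[float]) -> float:
    return math.sqrt(sum(v * v for v in vec))


def clip_update(update: List[float], clip_norm: float) -> List[float]:
    """Scale the update down so its L2 norm is at most clip_norm."""
    norm = l2_norm(update)
    if norm <= clip_norm:
        return list(update)
    scale = clip_norm / norm
    return [v * scale for v in update]


def privatize_update(update: List[float], clip_norm: float,
                     noise_multiplier: float,
                     rng: Optional[random.Random] = None) -> List[float]:
    """Clip, then add Gaussian noise with std = noise_multiplier * clip_norm."""
    rng = rng or random.Random()
    clipped = clip_update(update, clip_norm)
    sigma = noise_multiplier * clip_norm
    return [v + rng.gauss(0.0, sigma) for v in clipped]
```

Clipping is what makes the noise meaningful: once no single update can exceed `clip_norm`, noise proportional to `clip_norm` masks any one contribution.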

A core strength of advanced protection frameworks is their support for data sovereignty and GDPR compliance. Rather than relying on centralized controls that place data at risk, these systems let data owners retain control while still contributing to large-scale annotation efforts. Federated learning deployments typically layer these frameworks on top of the base protocol so that only aggregated updates, never raw or directly identifiable data, are shared. This makes it possible to train accurate AI models across diverse sources without breaching local data governance rules.

Fundamentals of Data Labeling in Machine Learning

- Definition of Data Labeling. Data labeling assigns meaningful tags or annotations to raw data, such as images, text, or audio, to help machine learning models understand patterns and make predictions. Labels can represent categories, object locations, sentiments, or other task-relevant features.

- Role in Model Training. Labeled data is the foundation for supervised learning, where models learn by example. The quality and accuracy of labels directly impact the model's performance, making precise annotation a critical step in the pipeline.

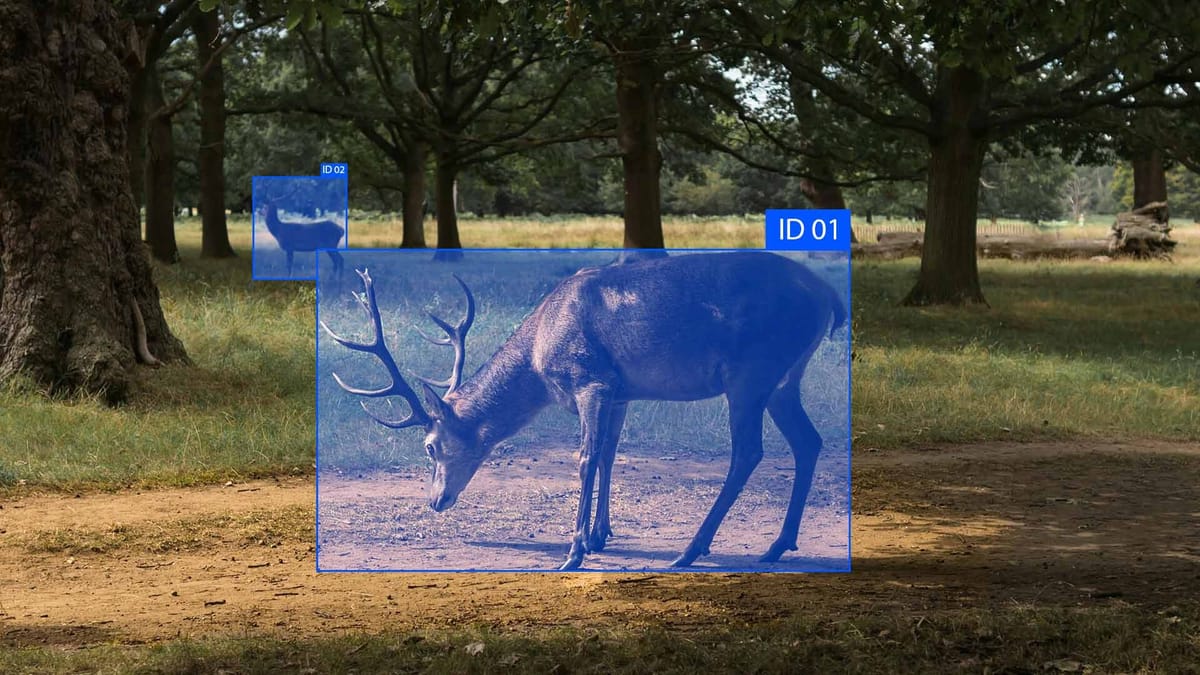

- Annotation Techniques. Data can be labeled manually by human annotators or automatically with tools and algorithms. Techniques vary by data type and use case and include bounding boxes, segmentation masks, text spans, and audio transcripts.

- Scalability and Distribution. Organizations often rely on decentralized labeling systems to meet the demands of large datasets, where annotation is distributed across multiple teams or nodes. This accelerates the process and supports data sovereignty by keeping data within local environments.

- Compliance and Privacy. Data privacy, GDPR compliance, and ethical data handling are now central concerns in labeling workflows. Integrating secure aggregation and privacy-preserving methods such as federated learning helps ensure that annotations can be collected and used without exposing sensitive information.
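As an illustration of how the label types mentioned above (categories, object locations, text spans) might be represented, here is a minimal record structure. The class and field names are hypothetical; real annotation tools each define their own schemas, such as COCO-style JSON for bounding boxes.

```python
"""Hypothetical label records for common annotation types."""
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class BoundingBox:
    """Object location in pixel coordinates: top-left corner plus size."""
    label: str
    x: float
    y: float
    width: float
    height: float

    def __post_init__(self) -> None:
        if self.width <= 0 or self.height <= 0:
            raise ValueError("bounding box must have positive width and height")


@dataclass
class TextSpan:
    """A labeled character range [start, end) inside a text document."""
    label: str
    start: int
    end: int

    def text_of(self, document: str) -> str:
        return document[self.start:self.end]


@dataclass
class LabeledExample:
    example_id: str
    category: Optional[str] = None  # e.g. a class or sentiment label
    boxes: List[BoundingBox] = field(default_factory=list)
    spans: List[TextSpan] = field(default_factory=list)
```

For instance, `TextSpan("ISSUE", 17, 21).text_of("The delivery was late again.")` returns `"late"`.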

Implementing Privacy-Preserving Data Labeling Techniques

Implementing privacy-preserving data labeling techniques requires a shift from traditional centralized models to more secure and ethical frameworks. At the core of this transition is decentralized labeling, where annotation occurs locally on user devices or within protected environments, eliminating the need to transfer raw data to external servers. To enable collaboration without compromising privacy, systems must integrate secure aggregation, which merges local model updates so that no party can inspect any single participant's contribution.

Privacy-preserving methods such as federated learning, differential privacy, and encrypted computation must be embedded at the architectural level to meet regulatory standards. These technologies help keep data confidential throughout the labeling and training processes, even across distributed environments. Privacy-aware annotation tools can also enforce data minimization and access control policies, providing transparency and auditability at every stage.
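Data minimization and access control can be enforced as a gate that every outbound payload passes through. This hypothetical sketch assumes an allow-list of fields a labeling node may send (`ALLOWED_OUTBOUND_FIELDS`) and a set of roles permitted to export (`EXPORT_ROLES`); both are invented for illustration. It drops everything not on the list, refuses unauthorized roles, and records each decision in an audit log.

```python
"""Hypothetical outbound policy gate for a labeling node."""
from typing import Any, Dict, List

# Illustrative policy: only aggregate-ready training signals may leave.
ALLOWED_OUTBOUND_FIELDS = {"model_delta", "num_examples", "round_id"}
EXPORT_ROLES = {"federated_client"}

audit_log: List[str] = []


def prepare_outbound(payload: Dict[str, Any], role: str) -> Dict[str, Any]:
    """Enforce access control, then strip every field not on the allow-list."""
    if role not in EXPORT_ROLES:
        audit_log.append(f"DENY export for role={role}")
        raise PermissionError(f"role {role!r} may not export data")
    dropped = sorted(set(payload) - ALLOWED_OUTBOUND_FIELDS)
    minimized = {k: v for k, v in payload.items() if k in ALLOWED_OUTBOUND_FIELDS}
    audit_log.append(f"ALLOW export role={role} kept={sorted(minimized)} dropped={dropped}")
    return minimized
```

An allow-list is used rather than a block-list so that any new field, such as raw text or an annotator's email, stays on the device by default.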

Data Labeling on Edge Devices

Data labeling on edge devices enables decentralized labeling by allowing annotation and processing to occur directly where data is generated: on smartphones, cameras, sensors, or local servers. By leveraging local computational power, edge-based labeling supports real-time annotation, even in environments with limited or intermittent connectivity. Combined with secure aggregation, each device's model update can be masked or encrypted and merged centrally without revealing any individual input.
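For edge devices with limited or intermittent connectivity, one simple pattern is to record labels locally at once and queue only the resulting model updates until a connection is available. The `EdgeLabeler` class below is a hypothetical sketch of that pattern: it assumes queued updates have already been masked or encrypted, uses a bounded queue so a long-offline device drops its oldest updates, and takes a caller-supplied `send` function in place of a real transport.

```python
"""Hypothetical edge-side labeler with an offline update queue."""
from collections import deque
from typing import Callable, Deque, List, Tuple

Update = List[float]


class EdgeLabeler:
    def __init__(self, max_pending: int = 100) -> None:
        self.local_labels: List[Tuple[str, str]] = []  # never transmitted
        # Bounded queue: the oldest updates are discarded if it overflows.
        self.pending: Deque[Update] = deque(maxlen=max_pending)

    def label(self, item_id: str, label: str) -> None:
        """Record an annotation locally; nothing is sent here."""
        self.local_labels.append((item_id, label))

    def queue_update(self, update: Update) -> None:
        """Queue a model update (assumed already masked or encrypted)."""
        self.pending.append(update)

    def flush(self, online: bool, send: Callable[[Update], bool]) -> int:
        """Send queued updates in order; return how many were delivered."""
        if not online:
            return 0
        sent = 0
        while self.pending:
            if not send(self.pending[0]):
                break  # keep this update for the next attempt
            self.pending.popleft()
            sent += 1
        return sent
```

An update is removed from the queue only after `send` reports success, so a failed transmission is retried on the next flush rather than lost.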

One of the key advantages of edge-based labeling is its alignment with data sovereignty and GDPR compliance. Because raw data does not leave the device unless it has been anonymized or aggregated, organizations can sidestep much of the legal complexity of cross-border data transfers. Edge annotation systems can also be configured to respect user permissions and contextual privacy settings, creating more ethical and transparent workflows.

Consensus Mechanisms for Quality Labels

Consensus mechanisms ensure annotation accuracy within decentralized labeling systems, where multiple annotators or devices contribute to the same dataset. Rather than relying on a single label per instance, consensus-based approaches compare multiple inputs to identify agreement or statistically likely outcomes. When combined with secure aggregation, these methods allow label quality to be evaluated without revealing individual annotations, preserving privacy and integrity.
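Majority voting, the simplest consensus rule, needs only per-label vote counts, and those counts are just the element-wise sum of one-hot vectors. That is exactly the kind of sum secure aggregation can compute without revealing individual votes. The sketch below assumes a fixed label set (`LABELS`) and an illustrative agreement threshold `min_agreement`; items that fall below it are returned without a label, for example so they can be routed to further review.

```python
"""Majority-vote consensus from summed one-hot votes (illustrative sketch)."""
from typing import List, Optional, Tuple

LABELS = ["cat", "dog", "other"]  # assumed fixed label set


def one_hot(label: str) -> List[int]:
    return [1 if name == label else 0 for name in LABELS]


def sum_votes(votes: List[List[int]]) -> List[int]:
    # In a private deployment, this sum would come from secure aggregation.
    return [sum(col) for col in zip(*votes)]


def consensus(counts: List[int],
              min_agreement: float = 0.6) -> Tuple[Optional[str], float]:
    """Return (label, agreement); label is None if agreement is below threshold."""
    total = sum(counts)
    if total == 0:
        return None, 0.0
    best = max(range(len(counts)), key=lambda i: counts[i])
    agreement = counts[best] / total
    return (LABELS[best] if agreement >= min_agreement else None), agreement
```

With four votes for `cat` and one for `dog`, `consensus` returns `("cat", 0.8)`. Any tie means agreement is at most 0.5, so with the default threshold a tied item receives no label.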

Summary

Federated learning and decentralized labeling increasingly enable privacy-preserving data annotation without transferring raw data to centralized servers. This approach strengthens data sovereignty, allowing individuals and organizations to retain control over their data while contributing to high-quality AI development. These systems can be designed to meet strict GDPR requirements, making them suitable for sensitive and regulated environments.

FAQ

What is decentralized labeling, and how does it differ from traditional annotation methods?

Decentralized labeling allows data to be annotated locally, without transferring it to a central server. Unlike traditional centralized methods, which require raw data to be shared, it protects user privacy and supports data sovereignty.

How does secure aggregation protect sensitive information during annotation?

Secure aggregation combines masked or encrypted updates from multiple sources so that only their combined result is revealed, preventing any party from accessing individual contributions. This preserves privacy throughout the decentralized labeling process.

Why is data sovereignty important in modern AI workflows?

Data sovereignty ensures that data remains under the control of its original owner and is not moved or accessed without permission. It's essential for ethical data use and complying with privacy regulations like GDPR.

How does federated learning support GDPR compliance in annotation workflows?

Federated learning keeps raw data local and shares only model updates, helping organizations comply with GDPR by avoiding unauthorized data movement or central storage. It enables privacy-preserving, large-scale AI development.

What role do edge devices play in privacy-preserving data labeling?

Edge devices allow real-time annotation and processing directly where data is created, minimizing latency and data transfer. This supports data sovereignty and aligns with GDPR compliance requirements.

What are advanced protection frameworks, and why are they needed?

Advanced protection frameworks integrate privacy-enhancing techniques such as homomorphic encryption, differential privacy, and secure aggregation into annotation systems. They help keep data labeling confidential and safe, even in decentralized settings.

How do consensus mechanisms ensure label quality in decentralized systems?

Consensus mechanisms use agreement-based methods like majority voting to verify the accuracy of labels. Combined with secure aggregation, they help maintain label quality without compromising privacy.

What are the advantages of decentralized labeling for regulated industries?

Decentralized labeling keeps sensitive data local, making it easier to comply with laws like GDPR and to maintain data sovereignty. It also reduces risk while enabling the creation of high-quality datasets.

How can federated learning be combined with consensus mechanisms?

Federated learning can use consensus mechanisms to evaluate label quality from distributed sources while preserving privacy through secure aggregation. This strengthens both accuracy and compliance.

Why is GDPR compliance essential in data annotation today?

GDPR compliance is legally required in many regions and ensures user rights and privacy are respected. It drives the adoption of decentralized labeling and privacy-preserving technologies like secure aggregation and federated learning.
