Empowering AI with Prime-Based Mathematical Approaches in High-Performance Computing

To overcome high-performance computing bottlenecks, the PNNL research team proposed using graph theory, the branch of mathematics that studies the relationships and connections among a set of points.

Researchers at the Pacific Northwest National Laboratory (PNNL) say that the Seattle area's worsening traffic congestion is a fitting metaphor for the growing bottlenecks in high-performance computing (HPC) systems. In a paper published in 'The Next Wave', the National Security Agency's review of emerging technologies, they attribute the increasing congestion in HPC networks to the growing complexity of workloads such as artificial intelligence (AI) model training.

Sinan Aksoy, a senior data scientist and team leader at PNNL who specializes in graph theory and complex networks, says the congestion can be eased by structuring the network appropriately. In an HPC system, many computer servers, or nodes, work together so that they behave like a single supercomputer. The arrangement of these nodes and the links between them is called the network topology. A bottleneck occurs when data exchanged between many different pairs of nodes is forced through the same link, which then becomes congested.
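To make the bottleneck idea concrete, here is a minimal Python sketch, assuming shortest-path routing and a toy topology chosen purely for illustration: two fully connected groups of four nodes joined by a single link. The helper names `shortest_path`, `link_loads`, and `two_clusters_one_bridge` are hypothetical and do not come from the PNNL work. The sketch counts how many flows cross each link; because every flow between the two groups has to use the one connecting link, that link carries all of them.

```python
from collections import Counter, deque

def shortest_path(adj, src, dst):
    """Return one shortest path from src to dst, found by breadth-first search."""
    parent = {src: None}
    queue = deque([src])
    while queue:
        u = queue.popleft()
        if u == dst:
            break
        for v in adj[u]:
            if v not in parent:
                parent[v] = u
                queue.append(v)
    path = [dst]
    while path[-1] != src:
        path.append(parent[path[-1]])
    return path[::-1]

def link_loads(adj, flows):
    """Route every (src, dst) flow on a shortest path and count flows per undirected link."""
    load = Counter()
    for src, dst in flows:
        path = shortest_path(adj, src, dst)
        for u, v in zip(path, path[1:]):
            load[frozenset((u, v))] += 1
    return load

def two_clusters_one_bridge(k=4):
    """Toy topology: two fully connected groups of k nodes joined by one link."""
    adj = {node: set() for node in range(2 * k)}

    def connect(u, v):
        adj[u].add(v)
        adj[v].add(u)

    for base in (0, k):
        for i in range(base, base + k):
            for j in range(i + 1, base + k):
                connect(i, j)
    connect(k - 1, k)  # the only link between the two groups
    return adj

adj = two_clusters_one_bridge()
flows = [(a, b) for a in range(4) for b in range(4, 8)]  # every cross-group pair
loads = link_loads(adj, flows)
busiest = max(loads, key=loads.get)
print(sorted(busiest), loads[busiest])  # [3, 4] 16
```

All 16 cross-group flows share link (3, 4), while no other link carries more than 4, which is the single-link congestion described above.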

Writing in 'The Next Wave', Aksoy and his colleagues Roberto Gioiosa of PNNL's HPC group and Stephen Young of PNNL's mathematics group note that such bottlenecks are more common today than when HPC systems were first designed, because the way these systems are used has changed over time.

Beginning in the 1990s, the computer technology industry transformed the Seattle area, reshaping its economy, where people lived, and where they worked. The resulting traffic became increasingly unpredictable, unstructured, and congested, particularly on east-west routes, which are limited to two bridges across Lake Washington.

The PNNL researchers draw a parallel between traditional HPC network topologies and the Seattle area's road network: both were designed for an earlier pattern of traffic. Traditional topologies are tuned for physics simulations, such as molecular interactions or regional climate systems, rather than for today's AI workloads.

In these physics simulations, the computations on one server depend on results from neighboring servers, so network topologies are optimized for data exchange between neighbors. In a regional climate simulation, for example, one server might handle the climate over Seattle while another handles the Puget Sound region to the west of Seattle.
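The neighbor-to-neighbor pattern can be sketched as follows, assuming the simulated region is split into a rows x cols grid of subdomains, one per server, numbered row by row. The function `halo_partners` is hypothetical and is not code from the PNNL work. Each server exchanges boundary data only with the servers that own the adjacent subdomains, so its set of communication partners is small and known in advance.

```python
def halo_partners(rank, rows, cols):
    """Ranks owning the subdomains directly north, south, west, and east of `rank`
    in a rows x cols grid numbered row by row (no wraparound at the edges)."""
    r, c = divmod(rank, cols)
    partners = []
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < rows and 0 <= nc < cols:
            partners.append(nr * cols + nc)
    return partners

print(halo_partners(5, rows=4, cols=4))  # [1, 9, 4, 6]: an interior subdomain has four partners
print(halo_partners(0, rows=4, cols=4))  # [4, 1]: a corner subdomain has two
```

If the servers are wired as a 2D mesh laid out the same way, every one of these partners is a single hop away, which is the kind of traffic a neighbor-optimized topology handles well.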

By contrast, communication in data analytics and AI applications is irregular and unpredictable, because a calculation on one server may feed a calculation on a distant server. Running these workloads on a traditional HPC network, Gioiosa says, is like going on a scavenger hunt across the greater Seattle area at rush hour.
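A rough way to see why irregular traffic strains such a network: the sketch below, assuming an 8 x 8 two-dimensional mesh with minimal routing (so the hop count between two nodes is their Manhattan distance), compares the average hop count of stencil-style traffic with that of traffic sent to randomly chosen partners. The mesh size, the random-partner model, and the helper names are illustrative assumptions, not details from the PNNL study. Every extra hop means a message occupies one more link, leaving less capacity for other traffic.

```python
import random

def mesh_hops(a, b, cols):
    """Hops between nodes a and b on a 2D mesh under minimal routing (Manhattan distance)."""
    ra, ca = divmod(a, cols)
    rb, cb = divmod(b, cols)
    return abs(ra - rb) + abs(ca - cb)

def mean_hops(pairs, cols):
    """Average hop count over a list of (sender, receiver) pairs."""
    return sum(mesh_hops(a, b, cols) for a, b in pairs) / len(pairs)

rows = cols = 8
n = rows * cols

# Stencil-style traffic: each node sends to its east neighbor, where one exists.
stencil = [(i, i + 1) for i in range(n) if (i + 1) % cols != 0]

# Irregular traffic: each node sends to a partner drawn at random (self-sends dropped).
rng = random.Random(0)
irregular = [(i, rng.randrange(n)) for i in range(n)]
irregular = [(a, b) for a, b in irregular if a != b]

print(mean_hops(stencil, cols))  # 1.0
print(mean_hops(irregular, cols))
```

The stencil traffic never leaves a single link, whereas random partners are typically several hops apart, so each irregular message loads several links on its way.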

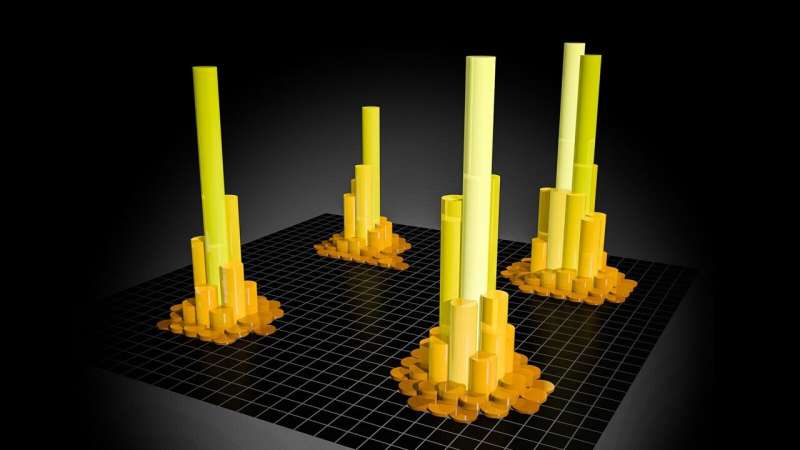

To get around HPC bottlenecks, the PNNL team proposes using graph theory, the branch of mathematics that studies relationships and connections among a set of points. Aksoy and Young are experts in expanders, a class of graphs that spread network traffic out by providing many possible routes from one point to another.
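The "many possible routes" property can be illustrated by counting two-hop detours. The sketch below is a stand-in, not the SpectralFly construction: it compares two graphs with 13 nodes in which every node has 6 links. One is a Paley graph, a classic prime-based graph with strong expansion; the other is a ring lattice in which each node links to its three nearest neighbors on each side. For every pair of nodes that are not directly linked, it counts their shared neighbors (the distinct two-hop routes between them) and reports the worst case. The function names are hypothetical.

```python
from itertools import combinations

def paley_graph(p):
    """Paley graph on Z_p (p prime, p % 4 == 1): i and j are linked iff i - j is a nonzero square mod p."""
    squares = {(x * x) % p for x in range(1, p)}
    return {i: {j for j in range(p) if j != i and (i - j) % p in squares} for i in range(p)}

def ring_lattice(n, k):
    """Circulant ring: node i is linked to i +/- 1, ..., i +/- k (mod n)."""
    return {i: {(i + d) % n for d in range(-k, k + 1) if d != 0} for i in range(n)}

def min_two_hop_routes(adj):
    """Fewest distinct two-hop routes (shared neighbors) over all non-adjacent node pairs."""
    return min(len(adj[u] & adj[v]) for u, v in combinations(adj, 2) if v not in adj[u])

print(min_two_hop_routes(paley_graph(13)))      # 3
print(min_two_hop_routes(ring_lattice(13, 3)))  # 1
```

With the same number of nodes and links per node, every non-adjacent pair in the Paley graph has three alternative two-hop routes, while the ring lattice leaves some pairs with only one. Paley graphs are used here only because they are short to construct; their degree grows with the number of nodes, which makes them impractical as real network topologies.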

Their proposed network, called 'SpectralFly', is highly symmetric: every node is connected to the same number of other nodes, and the pattern of connections looks the same from every node. Because the set of routes from one node to another is the same no matter which node you start from, Aksoy says, routing information through the network becomes simpler for programmers.
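The routing benefit of this kind of symmetry can be sketched with a circulant graph, used here as a hypothetical stand-in for SpectralFly because its symmetry is easy to code: the nodes are the integers mod n, and node i links to i + s (mod n) for each offset s. Since the graph looks identical from every node, a single shortest-path table computed at node 0 can route between any pair by working with the relative address (dst - current) mod n. The function names and the 13-node example with offsets +/-1 and +/-5 are assumptions for illustration; they do not describe SpectralFly's actual routing.

```python
from collections import deque

def first_hop_table(n, offsets):
    """BFS from node 0 of the circulant graph on Z_n generated by `offsets`.
    Returns (first, dist): the offset of the first hop on a shortest path to each
    destination, and the hop distance to each destination."""
    first, dist = {0: None}, {0: 0}
    queue = deque([0])
    while queue:
        u = queue.popleft()
        for s in offsets:
            v = (u + s) % n
            if v not in dist:
                dist[v] = dist[u] + 1
                first[v] = s if u == 0 else first[u]  # inherit the first hop from the parent
                queue.append(v)
    return first, dist

def route(src, dst, n, table):
    """Route src -> dst by looking up node 0's table with the relative address."""
    path = [src]
    while path[-1] != dst:
        step = table[(dst - path[-1]) % n]
        path.append((path[-1] + step) % n)
    return path

n, offsets = 13, (1, -1, 5, -5)
table, dist = first_hop_table(n, offsets)
print(route(3, 10, n, table))  # [3, 2, 10]
```

Every node reuses the same 13-entry table, so no per-node routing state has to be computed or stored; on a less symmetric topology each node would generally need its own table.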

The PNNL team simulated the 'SpectralFly' network on workloads ranging from traditional physics-based simulations to AI model training. It outperformed other HPC network topologies on modern AI workloads and performed comparably on conventional ones, which suggests it could serve as a hybrid topology for anyone who wants to run traditional scientific computing and AI on the same HPC system. "Our goal is to integrate the conventional and emerging worlds in such a method that we can conduct scientific computations while also accommodating AI and big data applications," says Gioiosa.

Grounded in graph theory, the SpectralFly design could therefore help relieve HPC congestion. As Aksoy notes, its uniform structure gives every node the same routing map, which reduces the computational resources needed to route information across the network and simplifies data exchange between nodes regardless of the workload being run.

Because SpectralFly held up across this range of simulated workloads, from conventional physics simulations to AI model training, it is a promising candidate for a general-purpose HPC topology.

As AI and big data drive a surge in data-intensive scientific computing, this approach to designing HPC network topologies is a meaningful step forward. It could both ease HPC congestion and make more efficient use of computational resources in data-centric science.

The PNNL work could change how HPC systems handle data exchange between nodes and inform the design of more robust, efficient, and adaptable computing systems. More broadly, it shows how mathematical tools such as graph theory can be applied to HPC network design as computational demands keep growing.

Source: Pacific Northwest National Laboratory
