Deep learning models can be skewed by network pruning, according to researchers

A recent study conducted by computer scientists has demonstrated that models based on deep learning can be adversely affected by a commonly used technique called neural network pruning. The study also describes what causes these performance problems and demonstrates a method for addressing them.

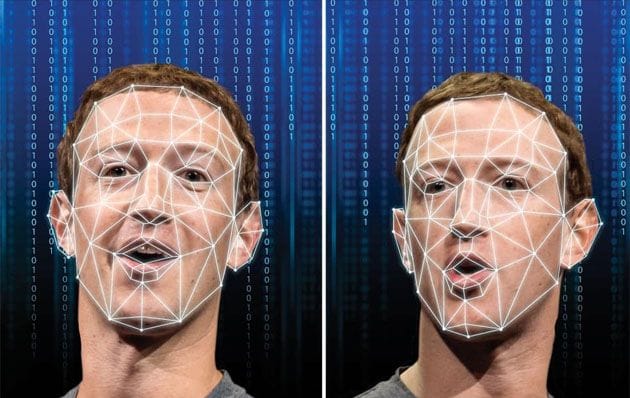

Deep learning is a type of artificial intelligence that can be used to classify things, such as images, text, and sounds. For example, it can be used to identify individuals based on facial images. However, deep learning models often require a lot of computing resources, which poses challenges when they are applied to certain applications.

In order to overcome these challenges, some systems implement "neural network pruning." This process reduces the size and complexity of the deep learning model in order to maximize its efficiency while using fewer computing resources.
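The article does not say which pruning scheme the study examined. As a rough illustration only, the sketch below shows magnitude pruning, one widely used scheme, in which the weights with the smallest absolute values are set to zero. The function name `magnitude_prune` and the toy weight matrix are assumptions made for this example.

```python
import numpy as np

def magnitude_prune(weights: np.ndarray, sparsity: float) -> np.ndarray:
    """Return a copy of `weights` with the smallest-magnitude entries zeroed.

    `sparsity` is the fraction of entries to remove (0.0 keeps everything).
    Illustrative only: not necessarily the scheme used in the study.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    k = int(round(sparsity * weights.size))  # number of weights to remove
    if k == 0:
        return weights.copy()
    magnitudes = np.abs(weights).ravel()
    # Indices of the k smallest magnitudes (order within the k is irrelevant).
    drop = np.argpartition(magnitudes, k - 1)[:k]
    pruned = weights.copy().ravel()
    pruned[drop] = 0.0
    return pruned.reshape(weights.shape)

# Hypothetical 2x3 weight matrix for a single layer.
weights = np.array([[0.5, -0.01, 0.3],
                    [-0.9, 0.02, -0.1]])
pruned = magnitude_prune(weights, sparsity=0.5)
# The three smallest-magnitude weights (-0.01, 0.02, -0.1) are now zero.
print(pruned)
```

A pruned layer stores and multiplies fewer nonzero weights, which is where the savings in computing resources come from.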

Jung-Eun Kim, an assistant professor of computer science at North Carolina State University, co-authored a paper on the work, which shows that network pruning can impair the ability of deep learning models to identify some groups.

For example, if a security system uses deep learning to scan a person's face to determine whether they have access to a building, the deep learning model would need to be compact so that it can operate efficiently. This may work in most cases, but network pruning could also impair the model's ability to identify some faces.

The new paper explains why network pruning can adversely affect the model's ability to identify certain groups, which the literature calls "minority groups," and demonstrates a new method of addressing the problem.

Deep learning models are adversely affected by network pruning for two reasons.

In technical terms, the two factors are a disparity in gradient norms across groups, and a disparity in Hessian norms associated with inaccuracies in a group's data. In practice, this means that deep learning models can become less accurate at recognizing specific categories of images, sounds, or text. In particular, network pruning can amplify inaccuracies that already exist in the model.
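To give a sense of what a per-group gradient norm and Hessian norm look like, here is a minimal sketch for a toy logistic regression model rather than a deep network. It is an assumption-laden illustration, not the paper's procedure: the function `group_gradient_and_hessian_norms`, the choice of logistic loss, and the use of the spectral norm for the Hessian are all choices made for this example.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def group_gradient_and_hessian_norms(X, y, groups, w):
    """For each group, return (gradient norm, Hessian spectral norm) of the
    mean logistic loss computed only on that group's samples.

    Toy stand-in for the per-group quantities described in the text.
    """
    X, y, groups = np.asarray(X, float), np.asarray(y, float), np.asarray(groups)
    norms = {}
    for g in np.unique(groups):
        Xg, yg = X[groups == g], y[groups == g]
        p = sigmoid(Xg @ w)                             # predicted probabilities
        grad = Xg.T @ (p - yg) / len(yg)                # gradient of mean log-loss
        hess = (Xg.T * (p * (1.0 - p))) @ Xg / len(yg)  # X^T diag(p(1-p)) X / n
        norms[g.item()] = (float(np.linalg.norm(grad)),
                           float(np.linalg.norm(hess, 2)))
    return norms

# Hypothetical data: two samples in group 0, one sample in group 1.
X = np.array([[1.0, 0.0], [1.0, 0.0], [0.0, 2.0]])
y = np.array([1.0, 1.0, 0.0])
groups = np.array([0, 0, 1])
w = np.zeros(2)  # untrained weights, so every prediction is 0.5

norms = group_gradient_and_hessian_norms(X, y, groups, w)
print(norms)  # group 1 has larger gradient and Hessian norms than group 0
```

When these numbers differ a lot from one group to another, that is the kind of disparity the two factors refer to.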

For example, if a deep learning model is trained to recognize faces using a data set that includes 100 white faces and 60 Asian faces, it may be more accurate at recognizing white faces but still achieve adequate performance in recognizing Asian faces. After network pruning, the model may fail to recognize some Asian faces.

As a result of the network pruning, the deficiency may become apparent even if it was not noticeable in the original model.
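One simple way to make such a deficiency visible is to compare accuracy group by group, before and after pruning. The sketch below assumes you already have true labels, predicted labels, and a group tag for each sample. The helper names `per_group_accuracy` and `accuracy_gap` and the toy arrays are hypothetical, and the numbers are not results from the study.

```python
import numpy as np

def per_group_accuracy(y_true, y_pred, groups):
    """Return {group: fraction of that group's samples predicted correctly}."""
    y_true, y_pred, groups = map(np.asarray, (y_true, y_pred, groups))
    return {
        g.item(): float(np.mean(y_pred[groups == g] == y_true[groups == g]))
        for g in np.unique(groups)
    }

def accuracy_gap(acc_by_group):
    """Difference between the best-served and worst-served group."""
    return max(acc_by_group.values()) - min(acc_by_group.values())

# Toy labels for illustration only: four samples in group "a", two in group "b".
y_true = [1, 1, 1, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b"]
pred_before = [1, 1, 1, 1, 0, 0]  # hypothetical predictions of the original model
pred_after = [1, 1, 1, 1, 0, 1]   # hypothetical predictions of the pruned model

gap_before = accuracy_gap(per_group_accuracy(y_true, pred_before, groups))
gap_after = accuracy_gap(per_group_accuracy(y_true, pred_after, groups))
print(gap_before, gap_after)
```

Overall accuracy alone can hide this effect, because the larger group dominates the average.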

Kim explains that, to mitigate this problem, the researchers used mathematical techniques to equalize the groups that the deep learning model uses to categorize data samples. "By using algorithms, we are addressing the gap in accuracy between groups," Kim says.

The mitigation technique the researchers developed improved the fairness of a deep learning model that had undergone network pruning, essentially returning the model to pre-pruning levels of accuracy.

"The most important outcome of this research is that we now have a more comprehensive understanding of exactly how network pruning can influence the performance of deep learning models to identify minority groups, both theoretically and empirically," Kim adds. "We are also open to collaborating with partners in order to identify unknown or overlooked impacts of model reduction techniques, particularly in real-world applications of deep learning models."

Source: North Carolina State University
