Building better models with annotated data

Data annotation is the process of labeling data with metadata. It is an important part of these ML algorithms because it helps ensure that it works as expected. These models reflect the intentions of their designers. Without a clear understanding of what “correct” categorization looks like, many of these models could not function.

There is no single "machine learning model." Often, the optimal statistical methods are very project-specific. Depending on what is needed, this can completely change the planning process. This is why establishing the goals of your project early is so important.

What does data annotation mean for your machine learning model?

When we train a machine learning model, metadata is the common language between us and our algorithms. It tells them what they are supposed to learn and what they should expect from their data. It also helps us see how accurately our machine learning models predict different outcomes.

We can use metadata to evaluate and improve the performance of our models by checking for errors in the training process or test data sets. We can also use it to determine whether a machine learning model will produce accurate predictions for new data sets that it hasn't seen before. Proper annotation practices can minimize the need for corrections later in the design process.

The more accurate your annotation, the better your model will be trained, which means that you will have a more accurate prediction in the end. Establishing best practices from the start can ensure this happens as painlessly as possible. Keymakr offers a variety of tools perfectly suited for whatever data type you are trying to annotate.

What kinds of models use data annotation?

There are many kinds of training models that use data annotation. Each type has specific use cases. These are generally broken up into three categories: supervised learning, unsupervised learning, and semi-supervised learning. In each case, the preparation and execution of the algorithms vary.

Supervised learning is the most common use case. First, it uses the annotated dataset to train an algorithm. Then, it takes these pre-labeled pieces of data to create a model for what should be done with new, unfamiliar inputs. Setting parameters from the start means they won't need to be refined later.

Regression models make probabilistic guesses about inputs and produce single-value predictions. Classification and naive Bayesian models try to predict groupings of data based on training datasets. Decision trees, which create the groupings based on conditional statements (if x is true, then check to see if y is true), are one form of this. Many other kinds of supervised models also exist.

Unsupervised learning uses unlabeled data and looks for patterns in the dataset. These kinds of algorithms will often require ongoing validation from their creators. These kinds of algorithms are best suited for unstructured or unpredictable forms of data. There are many, many kinds of unsupervised learning models. They make predictions by clustering or making associations between variables in the data.

Semi-supervised learning combines both labeled and unlabeled datasets to train an algorithm. The aspects of the algorithms that are supervised or unsupervised will vary depending on the use case. Training data in these situations often integrate supervised and unsupervised elements. Some use unsupervised ML algorithms to create training datasets that “self-train” supervised models.

Getting the most out of your annotated data

There are many benefits to well-annotated training data. These include:

- Simplifying the data preparation phase

- Improving model performance

- Increasing the transparency of models

- Reducing bias in a model

- Increasing efficiency of training and testing data sets.

In each of these cases, the high-level goal is to create a data landscape well-suited to the algorithm's goals. In addition, because so many of these algorithms build on existing technology, there are best practices to set you up for success. Therefore, setting clear expectations and processes from the start is central to this process.

There are so many variables that can complicate ML projects. Even if the algorithm works to some degree, poorly designed algorithms can create problems down the road. This is why planning and preparing early is so important. The algorithms shouldn't be total black boxes, so there must be clear intentions throughout the process.

There are three phases in every annotation project: preparation, execution, and validation. Certain practices will ensure that you're well prepared for the following steps in each phase.

In the preparation phase, the focus is on outlining the project and collecting good training data. Good training data reflects the structure and appearance of the data that will eventually go to your algorithm. Training data consistently different from real data is not useful when designing these algorithms. This is why knowing the algorithm's goal from the start is so important.

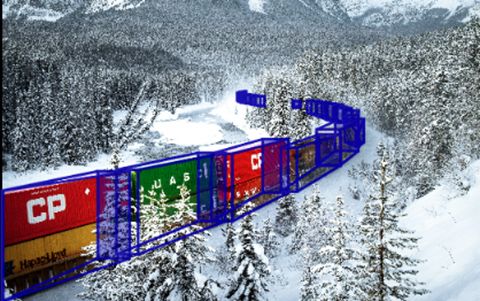

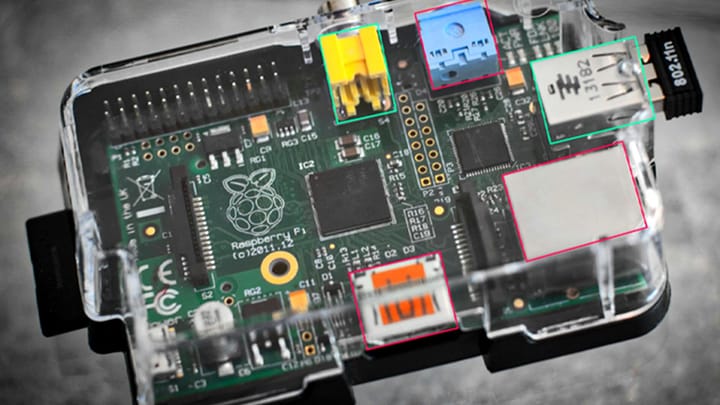

In the execution phase, the emphasis is on using the proper tools to communicate your metadata to these algorithms. This means developing an understanding of how you want your annotated data to be structured and what the key parameters to identify are. For example, bounding box annotation has different use cases than skeletal annotation. Knowing when and how to use one over the other is essential.

Lastly, the validation phase is centered around quality assurance. Although the hope is that every annotated training dataset will be perfect, the reality is often not as clear-cut. In this step, you must observe what the algorithms do with the training datasets and make modifications based on what you see. Algorithm design is an interactive process. Understanding and responding to feedback is critical.

Planning for each phase from the start will streamline the design process. Good data means that the tools you use will be more effective. Good tools often integrate quality assurance directly into their products. Planning these interactions from the start can give your project the initial structure it needs.

The better your annotation is, the better your algorithm will be. Understanding what needs to be done and how it is best executed will save time and money later in the lifecycle of your algorithms. Good data and better tooling will do the same. In the end, though, the best thing you can do is clearly understand what you are trying to accomplish from the state.

Comments ()