Getting Your Next Machine Learning. AI Project Started with Data Annotation

ML algorithms will fail to produce meaningful results without a good training data set. Data annotation is the process of labeling and categorizing data for use in a training dataset. Done either by hand or by crowdsourcing, it mimics the process of the learning model. Translating human observations into functional code can take many forms, depending on the use case.

What will data annotation mean for my next machine learning project?

This kind of pre-labeled training data is used in supervised learning algorithms, where the algorithm follows a clear set of rules. Contrast this to abstract pattern recognition of categorizations with unsupervised learning models. There isn’t a set framework that the algorithm follows. These different learning models have specific and necessary use cases.

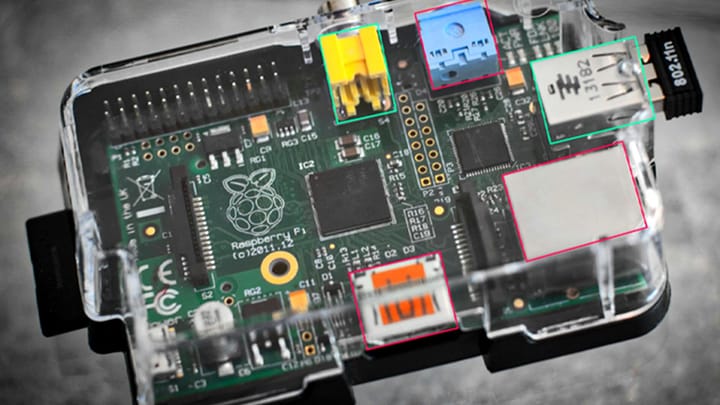

The process of annotating these data sources varies. It depends on the type of data, project requirements, budget constraints, and data quality. An ecosystem of data annotation tools exists to help streamline these processes. Some of them make use of other ML solutions themselves. The goal of any data project should be to align these constraints with what is needed.

Because data annotation can take many forms, its uses also vary. Facial scanners that can identify where lips and eyes should be on a face are trained on massive facial datasets. Blurry or handwritten words can be made into textual data. Red eyes can be removed from photos. All require a clear set of training data from which these algorithms can be designed.

There are centralized and decentralized forms of data annotation. ReCAPTCHA, Google's human-verification system, annotated much of the text of Google Books. Appen or Mechanical Turk users can be used to crowdsource annotation. Tools can give individuals the ability to annotate as well. The decision of how to do this depends on project constraints as well.

What do you need to know about the different kinds of data annotation?

Each of these algorithms tries to make random data into machine-readable categories. This can apply to many things. For example, voice-activated language algorithms can understand when a question starts and ends. Social media companies flagging content that violates their terms of service. Many of these data annotation tools focus on finding ways to communicate these abstractions in a systematized manner.

Each of these data types comes with a particular suite of tools and practices. Oftentimes, complex machine learning projects will include several types of projects at the same time. Some of the more common types of data sources to annotate are:

- Image Annotation

- Video Annotation

- Audio Annotation

- Semantic Annotation

- Text Annotation

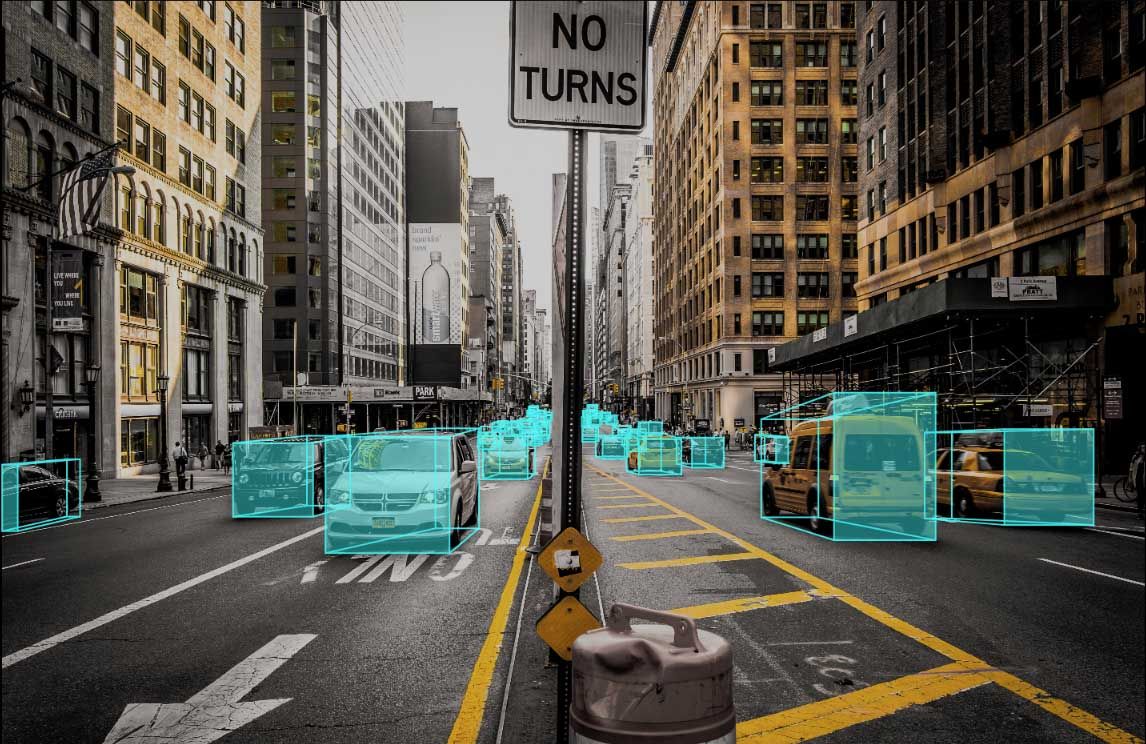

Because visual data can mean different things (from satellite data to handwriting), the ways to annotate it also vary. Image classification algorithms generally use training datasets to determine what a picture is about. Object identification algorithms try to extract specific objects from within an image.

You can further narrow this category by segmenting specific images to identify unique objects from other objects of the same kind. Bounding boxes and complex polygons are common tools used to separate these objects within the training dataset. Keymakr offers several kinds of polygonal bounding boxes to help identify these kinds of objects.

Video annotation presents a new dimensional aspect to the task of image recognition. It takes individual frames and creates a composite, chronological interpretation of what is going on. Manually annotating long (or continuous) video data can be tedious. Video annotation is often augmented with software that can track moving objects. Video data can be a powerful tool for automating tasks. Self-driving cars are often given as a clear example.

Text and audio data are two related and prevalent forms of annotated data. Audio data can also contain other, non-linguistic information: background noises, tone, and errors. The same is true of textual data, with concepts expressed in many ways. These processes are often lumped together as “natural language processing” (NLP) algorithms.

Semantic annotation adds another level of contextual information. It adds meaning to the metadata of unstructured data types. Most common with textual data, it is an important step in figuring out what something is about. It often looks like NLP, with a greater focus on the metadata behind textual fields (and not the text itself).

In each of these processes, there are a growing number of tools that can help ease the annotation process. As data sources become more robust, the selection of available tools will also grow. A quick tour around the Keymakr site should give you a sense of what these look like and how they can be tailored to fit the specific needs of your data.

What best practices should you follow when annotating data?

The process of annotation can introduce human error into these algorithms. It is important to follow best practices whenever creating training sets. There are many supervised learning algorithms to choose from. Each optimizes for a specific use case or specific data availability.

These algorithms also carry the same risks as any statistical analysis. You might overfit the data if the algorithm identifies underlying trends as well as the noise and randomness of the training dataset. As you begin to construct your models, there are many statistical tools that you can use to identify if this is happening. The best practices for any ML project are like any data project.

Another best practice to follow when creating these annotated training datasets is knowing exactly what you want the algorithm to see. If you aren't sure what you want to automate, it will be hard to communicate that in your algorithm. Incomplete or limited training datasets can have major implications. The observed shortcomings of facial-recognition technology are a strong example of this.

As the scale of annotated data increases, it is also essential to establish specific guidelines on how it should be done. This means creating clear prompts on what and how information should be categorized. Many platforms that allow for more decentralized annotation practices have features built for these purposes.

As with anything, the design process can matter as much as the technologies used to design them. Establishing clear guidelines from the onset of your project should prevent problems downstream. Knowing your data, your algorithms, these best practices, and your intentions behind the algorithm should set you up for success.

Comments ()