4D Spatio-Temporal Annotation for Autonomous Vehicles

Autonomous vehicles process terabytes of sensor data every day, but volume alone does not capture the complexity of real-world road environments. Combining spatial depth with time-based tracking changes how that environment is analyzed: by adding temporal layers to 3D mapping, systems can predict pedestrian movement more accurately and efficiently than earlier models. This depends on 3D/4D annotation techniques that turn raw LiDAR and camera data into structured, time-aware models of the road.

Modern autonomous driving systems must continuously monitor the evolving scene, understanding not only where objects are but also how they move and interact. This can reduce false collision warnings and improve the accuracy of route planning.

Key Takeaways

- Integrates spatial depth with time-based tracking for modeling dynamic environments.

- Transforms raw sensor data into predictive intelligence for safe navigation.

- Tracks object movement patterns over successive time periods.

- Addresses gaps in real-time motion prediction.

- Combines sensor input and decision-making through multi-level data interpretation.

4D Spatio-Temporal Annotation

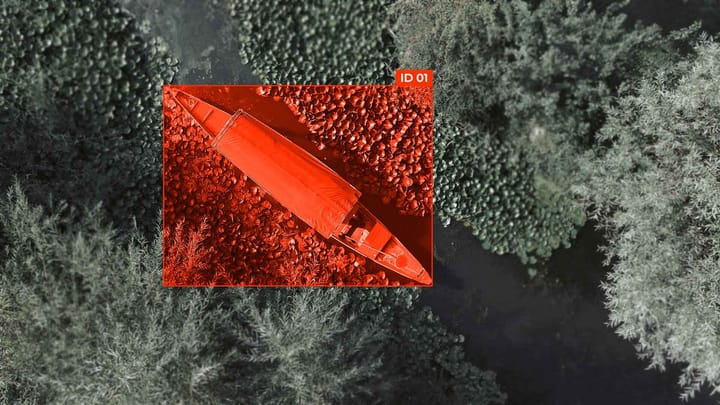

4D spatio-temporal annotation is a data-labeling approach that combines spatial and temporal dimensions to track changes in the environment accurately. Traditional 2D or 3D annotations describe objects only in space; 4D annotation adds a time dimension, known as temporal labeling, so that the same object can be followed from frame to frame and its changes observed over time.
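To make "3D plus time" concrete, here is a minimal sketch of what one 4D label record could look like, assuming a common layout of a 3D cuboid plus a timestamp and a persistent track ID. The class and field names (`Box3D`, `Annotation4D`, `track_history`) are hypothetical and do not follow any particular dataset format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box3D:
    """3D cuboid: center and size in metres, heading (yaw) in radians."""
    x: float
    y: float
    z: float
    length: float
    width: float
    height: float
    yaw: float


@dataclass(frozen=True)
class Annotation4D:
    """Hypothetical record: one 3D box tied to a timestamp and a track ID."""
    track_id: int     # the same ID across frames means the same physical object
    label: str        # object class, e.g. "pedestrian" or "car"
    timestamp: float  # seconds; this is the temporal (fourth) dimension
    box: Box3D


def track_history(annotations, track_id):
    """Return all annotations of one object, ordered by time."""
    return sorted(
        (a for a in annotations if a.track_id == track_id),
        key=lambda a: a.timestamp,
    )


labels = [
    Annotation4D(7, "pedestrian", 0.1, Box3D(5.0, 2.0, 0.9, 0.6, 0.6, 1.8, 0.0)),
    Annotation4D(7, "pedestrian", 0.0, Box3D(4.85, 2.0, 0.9, 0.6, 0.6, 1.8, 0.0)),
    Annotation4D(3, "car", 0.0, Box3D(20.0, -3.5, 0.8, 4.5, 1.8, 1.5, 3.14)),
]
print([a.timestamp for a in track_history(labels, 7)])  # [0.0, 0.1]
```

The track ID is what turns a pile of per-frame 3D boxes into a time series for each object.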

The same approach is crucial for analyzing satellite imagery, where temporary fluctuations must be distinguished from persistent changes. 4D annotations produce three-dimensional models of objects tied to specific points in time, which makes results reproducible, improves the accuracy of machine learning models, and supports forecasting of future changes.

The Importance of 4D Spatio-Temporal Annotation in Autonomous Vehicles

4D spatio-temporal annotation supports the development of autonomous vehicles by enabling dynamic object tracking: autonomous driving systems can determine the current position of other cars, pedestrians, or obstacles and predict their future trajectories.
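A minimal sketch of the prediction step, assuming the simplest possible motion model: constant velocity estimated from an object's last two annotated positions. Production systems typically use learned predictors; this baseline only shows how time-stamped tracks make extrapolation possible. The function name `predict_constant_velocity` is hypothetical.

```python
def predict_constant_velocity(track, horizon_s, step_s):
    """Extrapolate future (t, x, y) positions from the last two observations.

    track: list of (timestamp_s, x_m, y_m) sorted by time, at least two entries.
    Assumes constant velocity, a simple baseline rather than a learned model.
    """
    (t0, x0, y0), (t1, x1, y1) = track[-2], track[-1]
    dt = t1 - t0
    if dt <= 0:
        raise ValueError("timestamps must be strictly increasing")
    vx, vy = (x1 - x0) / dt, (y1 - y0) / dt
    steps = round(horizon_s / step_s)
    return [
        (t1 + k * step_s, x1 + vx * k * step_s, y1 + vy * k * step_s)
        for k in range(1, steps + 1)
    ]


# A pedestrian annotated at 10 Hz, walking about 1.5 m/s along +x.
observed = [(0.0, 4.85, 2.0), (0.1, 5.0, 2.0)]
for t, x, y in predict_constant_velocity(observed, horizon_s=0.5, step_s=0.1):
    print(f"t={t:.1f}s  x={x:.2f}  y={y:.2f}")
```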

Models trained on 4D annotations with machine learning and computer vision algorithms can build accurate, real-time traffic maps that account for the speed, acceleration, and behavior of objects, and adjust routing and safety-distance decisions accordingly. This matters most in dense urban traffic, changing weather, and multi-level interchanges.
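Speed and acceleration can be derived directly from time-stamped positions by finite differences, and a safety distance can then follow from the estimated speed. The sketch below assumes a textbook rule (reaction distance plus braking distance v²/(2a)); the reaction time and deceleration defaults are illustrative placeholders, not calibrated values, and the function names are hypothetical.

```python
import math


def kinematics(track):
    """Finite-difference speed (m/s) and acceleration (m/s^2) from (t, x, y) samples.

    Each value is the average over one interval, stamped at the interval's end.
    """
    speeds = [
        (t1, math.hypot(x1 - x0, y1 - y0) / (t1 - t0))
        for (t0, x0, y0), (t1, x1, y1) in zip(track, track[1:])
    ]
    accels = [
        (t1, (v1 - v0) / (t1 - t0))
        for (t0, v0), (t1, v1) in zip(speeds, speeds[1:])
    ]
    return speeds, accels


def safety_distance(speed_mps, reaction_s=1.0, decel_mps2=6.0):
    """Reaction distance plus braking distance v^2 / (2 * decel).

    reaction_s and decel_mps2 are illustrative defaults, not calibrated values.
    """
    return speed_mps * reaction_s + speed_mps ** 2 / (2 * decel_mps2)


# A lead car annotated once per second along the x axis.
track = [(0.0, 0.0, 0.0), (1.0, 10.0, 0.0), (2.0, 22.0, 0.0)]
speeds, accels = kinematics(track)
print(speeds)  # [(1.0, 10.0), (2.0, 12.0)]
print(accels)  # [(2.0, 2.0)]
print(safety_distance(speeds[-1][1]))  # 24.0
```

Real annotation streams are noisy, so finite differences are usually smoothed (for example by a tracking filter) before being used this way.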

Multimodal Thinking in Autonomous Systems

Multimodal thinking in autonomous systems refers to the ability to combine and analyze data from various sensors and information sources to make informed decisions. Autonomous vehicles, robotic platforms, and drones collect large amounts of data from cameras, LiDAR, radar, GPS, satellite systems, and other sensors. Multimodal thinking allows the system to integrate this data, cross-check information from different channels, and build a complete picture of the environment through sensor fusion.

With this approach, autonomous systems can recognize objects and their behavior, predict movement trajectories, assess risks, and respond accurately to unforeseen situations. Multimodal thinking also provides resilience when one sensor channel is limited or returns inaccurate data, for example in poor visibility, heavy rain, or dusty environments.
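One classic way to get this resilience is inverse-variance weighting: each sensor's estimate of the same quantity is weighted by how reliable it currently is, so a degraded channel automatically counts for less. The sketch assumes independent, roughly Gaussian errors and a single scalar (the range to one object); the variances are made-up illustrative numbers, and `fuse_estimates` is a hypothetical name. A Kalman filter extends the same idea over time.

```python
def fuse_estimates(estimates):
    """Inverse-variance weighted fusion of independent scalar measurements.

    estimates: list of (value, variance) pairs for the same quantity, e.g. the
    range to one object as seen by LiDAR, radar and camera. A noisier sensor has
    a larger variance and therefore a smaller weight.
    Returns (fused_value, fused_variance).
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, estimates)) / total
    return fused, 1.0 / total


# Illustrative (range_m, variance) pairs for LiDAR, radar and camera.
clear = [(20.0, 0.04), (20.5, 0.25), (19.0, 1.0)]
rain = [(20.0, 0.04), (20.5, 0.25), (15.0, 25.0)]  # camera degraded by rain
print(fuse_estimates(clear))
print(fuse_estimates(rain))
```

In the rain case the camera's badly biased reading barely moves the fused range, because its large variance gives it a tiny weight.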

As a result, autonomous systems navigate more safely and efficiently, make accurate decisions in real time, and adapt better to complex, changing environmental conditions.

Approaches to Integrating Spatial and Temporal Data

Integrating spatial and temporal data is crucial for autonomous systems, including autonomous vehicles, drones, and robotic platforms. It enables precise localization of objects in space and tracking of how they change over time, which improves trajectory prediction, object recognition, and real-time decision-making.
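A basic building block of this integration is temporal alignment: sensors rarely fire at the same instant, so labels made on LiDAR sweeps often have to be resampled at a camera frame's timestamp. A minimal sketch, assuming linear interpolation of box centers between two labeled sweeps (heading would additionally need angle wrapping, which is omitted here); `interpolate_position` is a hypothetical name.

```python
from bisect import bisect_right


def interpolate_position(track, t_query):
    """Linearly interpolate an object's (x, y, z) center at t_query.

    track: list of (timestamp_s, (x, y, z)) sorted by time, e.g. box centers
    labeled on LiDAR sweeps. Refuses to extrapolate outside the labeled interval.
    """
    times = [t for t, _ in track]
    if not times[0] <= t_query <= times[-1]:
        raise ValueError("t_query outside the annotated interval")
    i = bisect_right(times, t_query)
    if i == len(times):  # t_query equals the last timestamp
        return track[-1][1]
    (t0, p0), (t1, p1) = track[i - 1], track[i]
    alpha = (t_query - t0) / (t1 - t0)
    return tuple(a + alpha * (b - a) for a, b in zip(p0, p1))


# LiDAR labels at 10 Hz; a camera frame captured halfway between sweeps.
lidar_labels = [(0.0, (10.0, 0.0, 0.5)), (0.1, (11.0, 0.5, 0.5))]
print(interpolate_position(lidar_labels, 0.05))  # (10.5, 0.25, 0.5)
```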

Evaluating the Performance of Autonomous Vehicles Using Detailed Annotations

Evaluating the performance of autonomous vehicles requires detailed annotations that reflect real-world road environment conditions, object trajectories, and interactions with other road users.
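When annotated trajectories serve as ground truth, two widely used trajectory-prediction metrics are average displacement error (ADE, the mean distance between predicted and true positions) and final displacement error (FDE, the distance at the last predicted step). A minimal sketch, assuming both trajectories are sampled at the same timestamps; `ade_fde` is a hypothetical name.

```python
import math


def ade_fde(predicted, ground_truth):
    """Average and final displacement error between two (x, y) trajectories.

    Both lists hold positions at the same timestamps; ground_truth comes from
    the 4D annotations.
    """
    if len(predicted) != len(ground_truth) or not predicted:
        raise ValueError("trajectories must be non-empty and equally long")
    errors = [math.dist(p, g) for p, g in zip(predicted, ground_truth)]
    return sum(errors) / len(errors), errors[-1]


pred = [(1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
gt = [(1.0, 0.0), (2.0, 1.0), (3.0, 2.0)]
print(ade_fde(pred, gt))  # (1.0, 2.0)
```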

FAQ

How do 4D annotations improve dynamic decision-making in autonomous systems?

4D annotations enhance dynamic decision-making by making it possible to track objects' spatial positions over time and predict their trajectories, so the system can respond accurately and safely.

How can annotation accuracy be ensured for complex interactions?

This can be achieved through a combination of multi-sensor data, expert review, standardized labeling protocols, and multi-level verification.
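One automatic level of verification is a temporal consistency check: if a track's position jumps further between frames than its object class could physically move, the frame likely contains an ID switch or a misplaced box and can be routed to expert review. A minimal sketch, where the per-class speed limits are hypothetical QA thresholds rather than established values, and `flag_implausible_jumps` is a hypothetical name.

```python
import math

# Hypothetical per-class speed limits (m/s), used only to flag frames for review.
MAX_PLAUSIBLE_SPEED = {"pedestrian": 4.0, "cyclist": 15.0, "car": 70.0}


def flag_implausible_jumps(track, label):
    """Return timestamps where a track's implied speed exceeds its class limit.

    track: list of (timestamp_s, x_m, y_m) for one track ID, sorted by time.
    Flagged frames are meant for a human reviewer, not automatic correction.
    """
    limit = MAX_PLAUSIBLE_SPEED[label]
    flagged = []
    for (t0, x0, y0), (t1, x1, y1) in zip(track, track[1:]):
        speed = math.hypot(x1 - x0, y1 - y0) / (t1 - t0)
        if speed > limit:
            flagged.append(t1)
    return flagged


# The box at t=0.2 s jumps about 2.85 m in 0.1 s, far too fast for a pedestrian.
walk = [(0.0, 0.0, 0.0), (0.1, 0.15, 0.0), (0.2, 3.0, 0.0), (0.3, 3.15, 0.0)]
print(flag_implausible_jumps(walk, "pedestrian"))  # [0.2]
```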

What is the role of predictive analytics in autonomous security systems?

Predictive analytics in autonomous security systems anticipates potential risks, predicts object behavior, and supports preventive decisions that help avoid accidents and threats.
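A common quantity behind such preventive decisions is time to collision (TTC). The sketch below assumes both the ego vehicle and the other object keep constant velocity and treats "collision" as the two centers coming within a fixed radius; it solves the resulting quadratic for the earliest time. The radius and the 3-second action threshold are hypothetical values, and `time_to_collision` is a hypothetical name.

```python
import math


def time_to_collision(ego_pos, ego_vel, obj_pos, obj_vel, radius_m=2.0):
    """Earliest time (s) at which two constant-velocity points come within radius_m.

    Positions in metres, velocities in m/s, both as (x, y). Solves
    |dp + dv * t| = radius_m for the smallest t >= 0; returns math.inf if never.
    """
    dpx, dpy = obj_pos[0] - ego_pos[0], obj_pos[1] - ego_pos[1]
    dvx, dvy = obj_vel[0] - ego_vel[0], obj_vel[1] - ego_vel[1]
    a = dvx * dvx + dvy * dvy
    b = 2 * (dpx * dvx + dpy * dvy)
    c = dpx * dpx + dpy * dpy - radius_m ** 2
    if c <= 0:
        return 0.0  # already inside the radius
    if a == 0:
        return math.inf  # no relative motion
    disc = b * b - 4 * a * c
    if disc < 0:
        return math.inf  # closest approach stays outside the radius
    t = (-b - math.sqrt(disc)) / (2 * a)
    return t if t >= 0 else math.inf  # negative root: objects are separating


# Ego at 10 m/s toward a pedestrian standing 30 m ahead.
ttc = time_to_collision((0.0, 0.0), (10.0, 0.0), (30.0, 0.0), (0.0, 0.0))
print(f"TTC = {ttc:.2f} s")  # TTC = 2.80 s
if ttc < 3.0:  # hypothetical threshold for a preventive action
    print("pre-emptive braking")
```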

What indicators prove the effectiveness of 4D analytics?

The effectiveness of 4D analytics is reflected in the accuracy of spatiotemporal tracking and trajectory prediction, annotation accuracy, consistency of data across sensors, and the model's ability to detect dynamic changes in objects over time.
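Annotation and tracking accuracy are often scored with 3D intersection over union (IoU) between a labeled box and a reference box, computed frame by frame along a track. The sketch below is a simplification that assumes axis-aligned boxes and ignores yaw; rotated cuboids need a polygon intersection in the ground plane. `iou_3d_axis_aligned` is a hypothetical name.

```python
def iou_3d_axis_aligned(box_a, box_b):
    """IoU of two axis-aligned 3D boxes (x_min, y_min, z_min, x_max, y_max, z_max).

    Simplification: ignores heading, so it is exact only for unrotated boxes.
    """
    overlap = 1.0
    for i in range(3):
        lo = max(box_a[i], box_b[i])
        hi = min(box_a[i + 3], box_b[i + 3])
        if hi <= lo:
            return 0.0  # no overlap along this axis
        overlap *= hi - lo

    def volume(b):
        return (b[3] - b[0]) * (b[4] - b[1]) * (b[5] - b[2])

    return overlap / (volume(box_a) + volume(box_b) - overlap)


labeled = (0.0, 0.0, 0.0, 2.0, 2.0, 2.0)
reference = (1.0, 0.0, 0.0, 3.0, 2.0, 2.0)
print(iou_3d_axis_aligned(labeled, reference))
```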
