Vision-Based 3D Occupancy Prediction

Occupancy prediction enables models to forecast which areas of a scene will be occupied, unoccupied, or hazardous in the near future. Unlike traditional object recognition, occupancy prediction allows a system to "look ahead" and understand how cars, pedestrians, and other road users move. This is necessary for trajectory planning, collision avoidance, and decision-making in complex conditions. It enables the car to assess risks, adjust its speed, and anticipate obstacles around bends or behind other vehicles.

Key Takeaways

- Camera-only pipelines can generate dense 3D voxel grids that cover large scenes.

- Occupancy provides continuous scene context beyond what is visible in a single frame, which aids planning and safety.

- Shared methods and datasets help track milestones, standardize evaluation, and accelerate deployment.

- Transparent metrics such as occupancy IoU and mIoU simplify benchmarking.

From images to voxels of a 3D scene

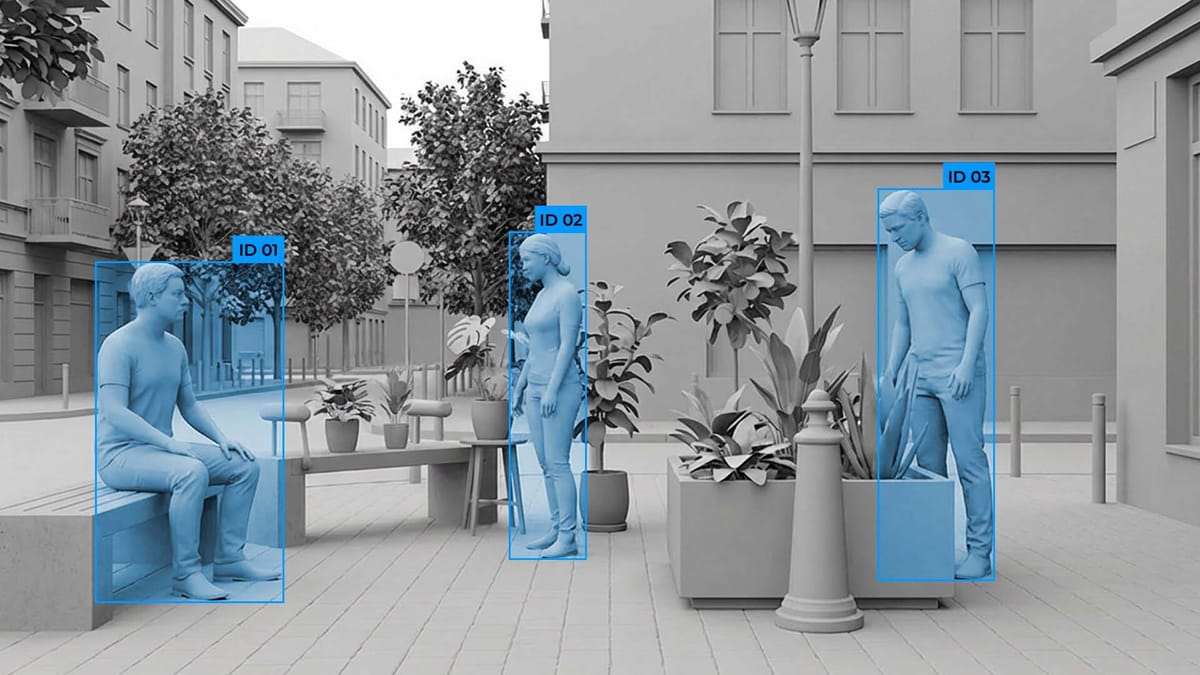

Reconstructing a three-dimensional scene becomes tractable once the system clearly defines what data it receives as input and what spatial representation it must produce as output. The input consists of images from multiple cameras and, in some setups, additional sensor data (LiDAR points, radar signals).

Each image shows the scene as a 2D projection and does not directly encode the distance to objects, their shape, or their position in space. The model's task is to lift these 2D observations into a 3D representation, performing image-to-voxel conversion that maps pixels into a voxel-based occupancy grid.
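One common way to implement image-to-voxel conversion works backwards. Each voxel center is projected into the image using the camera's intrinsics and extrinsics, and the feature at the pixel where it lands is read. The sketch below is a minimal NumPy illustration under assumed conventions: a pinhole camera, a 3x3 intrinsics matrix `K`, a 4x4 `T_cam_from_world` transform, and nearest-pixel sampling. The function names are hypothetical and not taken from any specific method.

```python
import numpy as np

# Illustrative sketch: helper names and camera conventions are assumptions.

def voxel_centers(origin, voxel_size, shape):
    """Return (N, 3) world coordinates of the centers of a regular voxel grid."""
    axes = [np.arange(n) for n in shape]
    idx = np.stack(np.meshgrid(*axes, indexing="ij"), axis=-1).reshape(-1, 3)
    return np.asarray(origin, dtype=float) + (idx + 0.5) * voxel_size


def sample_image_features(centers, feats, K, T_cam_from_world):
    """Project voxel centers into one camera and gather nearest-pixel features.

    centers: (N, 3) world points; feats: (H, W, C) feature map;
    K: (3, 3) intrinsics; T_cam_from_world: (4, 4) extrinsics.
    Voxels behind the camera or outside the image get zeros and valid=False.
    """
    H, W, C = feats.shape
    homo = np.concatenate([centers, np.ones((len(centers), 1))], axis=1)
    cam = (T_cam_from_world @ homo.T).T[:, :3]        # camera-frame xyz
    in_front = cam[:, 2] > 1e-6
    z = np.where(in_front, cam[:, 2], 1.0)            # avoid dividing by z <= 0
    uv = (K @ (cam / z[:, None]).T).T                 # homogeneous pixel coords
    u = np.round(uv[:, 0]).astype(int)
    v = np.round(uv[:, 1]).astype(int)
    valid = in_front & (u >= 0) & (u < W) & (v >= 0) & (v < H)
    out = np.zeros((len(centers), C), dtype=feats.dtype)
    out[valid] = feats[v[valid], u[valid]]            # nearest-pixel lookup
    return out, valid
```

Practical systems usually sample bilinearly, aggregate features over several cameras, and may learn sampling offsets. Those details are left out here.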

At the output, the system produces a voxel map. This is a three-dimensional grid in which each voxel is labeled occupied, free, or unknown. This representation captures the scene's structure, including the precise locations of cars, pedestrians, curbs, walls, and other obstacles.
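The three-state voxel map fits naturally in a small integer array. The sketch below assumes a hypothetical label convention (`UNKNOWN = -1`, `FREE = 0`, `OCCUPIED = 1`) together with a grid origin and voxel size for converting metric points to indices. Real datasets define their own label values and grid extents.

```python
import numpy as np

# Hypothetical label convention for a three-state occupancy grid.
UNKNOWN, FREE, OCCUPIED = -1, 0, 1


def make_grid(shape):
    """Create a grid in which every voxel starts as UNKNOWN (never observed)."""
    return np.full(shape, UNKNOWN, dtype=np.int8)


def world_to_index(points, origin, voxel_size):
    """Map (N, 3) metric coordinates to integer voxel indices (assumed axis-aligned grid)."""
    return np.floor((np.asarray(points) - np.asarray(origin)) / voxel_size).astype(int)


def mark_box(grid, lo, hi, state):
    """Set all voxels with lo <= index < hi (per axis) to the given state."""
    grid[lo[0]:hi[0], lo[1]:hi[1], lo[2]:hi[2]] = state
    return grid


def summarize(grid):
    """Count the voxels in each state."""
    names = (("unknown", UNKNOWN), ("free", FREE), ("occupied", OCCUPIED))
    return {name: int((grid == s).sum()) for name, s in names}
```

For example, marking half of a 10x10x4 grid as free and then a 2x2x2 box as occupied leaves some voxels unobserved. That difference between "free" and "unknown" is exactly what a planner needs to know.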

Monocular pipelines

Monocular pipelines take a single RGB image as input and reconstruct the 3D structure of the scene, creating a dense scene representation from camera data. Monocular models operate in conditions where scene depth is not directly measured but must be inferred from visual features, perspective, semantics, and frame context. Monocular depth estimation is therefore a critical intermediate step for accurately predicting 3D occupancy from a single image. The process involves several stages:

- 2D features from RGB. The model extracts features from a single image (textures, contours, silhouettes, and semantic cues) that carry implicit depth information.

- Projection into 3D space. The 2D features are lifted into a 3D volume using geometric assumptions (such as predicted depth and camera intrinsics) or transformer query mechanisms.

- Volume reconstruction. The network forms a 3D grid of voxels, where each voxel contains an occupancy estimate.

- Semantic enrichment. Many approaches combine occupancy prediction with semantic scene completion, i.e., they predict both geometry and object classes simultaneously.
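The projection step can be illustrated with a depth-distribution lift in the spirit of Lift-Splat-Shoot. Each pixel predicts a probability over discrete depth bins. Its feature vector is then spread along the camera ray, weighted by those probabilities, and each depth bin is assigned a 3D point. This is one possible technique among several. The tensor shapes, helper names, and use of NumPy are assumptions made for illustration.

```python
import numpy as np

# Illustrative sketch of depth-distribution lifting; names and shapes are assumptions.

def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))  # subtract max for stability
    return e / e.sum(axis=axis, keepdims=True)


def lift_features(feats, depth_logits, depth_bins, K):
    """Lift a 2D feature map into a frustum of depth-weighted 3D features.

    feats: (H, W, C) image features; depth_logits: (H, W, D) per-pixel scores
    over the D depths in depth_bins (D,); K: (3, 3) intrinsics.
    Returns camera-frame points (H*W*D, 3) and features (H*W*D, C), where each
    feature is scaled by the probability of its depth bin.
    """
    H, W, C = feats.shape
    probs = softmax(depth_logits, axis=-1)                      # (H, W, D)
    frustum = probs[..., :, None] * feats[..., None, :]         # (H, W, D, C)
    v, u = np.meshgrid(np.arange(H), np.arange(W), indexing="ij")
    pix = np.stack([u, v, np.ones_like(u)], axis=-1).astype(float)
    rays = pix @ np.linalg.inv(K).T                             # unit-depth rays
    points = rays[..., None, :] * depth_bins[None, None, :, None]  # (H, W, D, 3)
    return points.reshape(-1, 3), frustum.reshape(-1, C)
```

The resulting weighted points would then be pooled ("splatted") into the voxel grid, for example by summing the features that fall into the same voxel. That step is omitted to keep the sketch short.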

Thus, monocular pipelines enable 3D reconstruction in conditions where additional sensors are unavailable or prohibitively expensive. They make it possible to build lightweight, scalable scene-understanding systems for robotics, autonomous driving, AR/VR, and infrastructure monitoring.

Evaluation protocols and metrics for occupancy prediction

To evaluate an occupancy prediction model, one must measure how accurately it reconstructs the geometry of the space, including both occupied and empty regions, distant parts of the scene, and occluded zones. Specialized metrics and protocols exist for this. Let's consider them in the table below.
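The two most widely reported numbers are geometric occupancy IoU (occupied vs. empty) and semantic mIoU (IoU averaged over classes). The following is a minimal sketch. It assumes integer label grids in which label `0` means free space, plus an optional boolean `mask` (for example, voxels visible from the cameras). Benchmarks differ in how they apply masks and treat classes absent from a scene, so the exact protocol of the dataset being reported on takes precedence.

```python
import numpy as np

# Illustrative sketch; the free_label=0 convention and mask handling are assumptions.

def occupancy_iou(pred, gt, free_label=0, mask=None):
    """Geometric IoU of the 'occupied' class (any label other than free_label)."""
    if mask is None:
        mask = np.ones(gt.shape, dtype=bool)
    p = (pred != free_label) & mask
    g = (gt != free_label) & mask
    union = np.logical_or(p, g).sum()
    return float(np.logical_and(p, g).sum() / union) if union else float("nan")


def semantic_miou(pred, gt, num_classes, free_label=0, mask=None):
    """Mean IoU over semantic classes, skipping free space and classes absent from both grids."""
    if mask is None:
        mask = np.ones(gt.shape, dtype=bool)
    ious = []
    for c in range(num_classes):
        if c == free_label:
            continue
        p = (pred == c) & mask
        g = (gt == c) & mask
        union = np.logical_or(p, g).sum()
        if union:
            ious.append(np.logical_and(p, g).sum() / union)
    return float(np.mean(ious)) if ious else float("nan")
```

Computing both from the same semantic grids also shows the difference between the two tasks. Geometric IoU ignores which class a voxel has, while mIoU penalizes every class confusion.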

Transformers and attention for 3D occupancy

Transformers and attention mechanisms are widely used in 3D reconstruction and occupancy prediction. The idea is to let the model focus on the most relevant regions of the scene and fuse information from 2D image features with 3D spatial representations.
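At its core, this is scaled dot-product attention. Queries (for example, one learned embedding per voxel or bird's-eye-view cell) attend to keys and values derived from image features. The single-head NumPy sketch below shows only that core operation, and the projection matrices are assumed inputs. Practical occupancy models typically use multi-head and often deformable attention, which samples only a few image locations per query instead of attending to every token.

```python
import numpy as np

# Illustrative single-head cross-attention; shapes and names are assumptions.

def cross_attention(queries, keys, values, Wq, Wk, Wv):
    """Scaled dot-product cross-attention from 3D queries to image tokens.

    queries: (Nq, d_model), e.g. one embedding per voxel or BEV cell.
    keys, values: (Nk, d_model), e.g. flattened image feature tokens.
    Wq, Wk, Wv: (d_model, d_head) projection matrices.
    Returns (Nq, d_head) updated query features and (Nq, Nk) attention weights.
    """
    q = queries @ Wq
    k = keys @ Wk
    v = values @ Wv
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)   # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # softmax over image tokens
    return weights @ v, weights
```

Each row of the weight matrix says how strongly one voxel query draws on each image token. That is the mechanism that lets the model focus on the relevant parts of the images.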

Problems and Solutions of 3D Occupancy Prediction

3D occupancy prediction requires reasoning about depth, occlusion, and the volumetric structure of the environment. Despite advances in deep learning, fundamental challenges remain. Solving them directly affects the reliability of autonomous driving, robotics, and augmented reality applications.

FAQ

What is the main task of converting frames from a single camera to voxels of a 3D scene?

The primary task is to reconstruct the 3D structure of the scene in the form of a voxel map, based on 2D images from a single camera, and to determine which areas are occupied and which are free.

How does semantic occupancy differ from purely geometric occupancy?

Semantic occupancy assigns each occupied voxel an object category or type, while purely geometric occupancy only indicates whether a voxel contains matter, without classification.

What metrics should teams report for fair comparison?

Teams should report IoU, mIoU, precision, recall, F-score, Chamfer Distance, and visibility-aware metrics to ensure fair and comprehensive comparison of 3D occupancy prediction models.
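Precision, recall, and F-score follow from counting true positives among occupied voxels. A visibility-aware variant restricts the computation to voxels marked as observable. Chamfer Distance instead compares two point sets, such as the centers of occupied voxels. The sketch assumes boolean occupancy grids, an optional boolean `visible` mask, and brute-force nearest-neighbor search. Chamfer conventions vary (squared vs. plain distances, sum vs. mean), so this is one common variant rather than a benchmark definition.

```python
import numpy as np

# Illustrative metric sketch; mask semantics and Chamfer convention are assumptions.

def prf(pred_occ, gt_occ, visible=None):
    """Precision, recall and F-score on boolean grids, optionally only over visible voxels."""
    if visible is None:
        visible = np.ones(gt_occ.shape, dtype=bool)
    p, g = pred_occ & visible, gt_occ & visible
    tp = np.logical_and(p, g).sum()
    precision = tp / p.sum() if p.sum() else 0.0
    recall = tp / g.sum() if g.sum() else 0.0
    f = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return float(precision), float(recall), float(f)


def chamfer_distance(a, b):
    """Symmetric Chamfer distance: mean nearest-neighbor distance a->b plus b->a."""
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)  # (Na, Nb) pairwise
    return float(d.min(axis=1).mean() + d.min(axis=0).mean())
```

The pairwise matrix grows as Na x Nb, so large point sets would need a spatial index such as a k-d tree instead.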

What architectural decisions are essential for reliable 3D reconstruction?

Key architectural decisions include integrating multimodal data, using transformers with attention mechanisms, adopting sparse voxel representations, and applying multi-projection or hierarchical 3D modeling.
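Because most of a driving scene is empty, sparse voxel representations store only the non-empty voxels, as integer coordinates plus their values. The helper names below are hypothetical. The sketch shows a dense-to-sparse round trip and a hash map from voxel index to row, the kind of lookup that sparse-convolution implementations rely on to find neighbors.

```python
import numpy as np

# Illustrative sketch; helper names are hypothetical.

def dense_to_sparse(grid, empty_value=0):
    """Keep only non-empty voxels as (coords, values)."""
    coords = np.argwhere(grid != empty_value)   # (N, 3) integer indices
    values = grid[tuple(coords.T)]              # (N,) labels at those voxels
    return coords, values


def sparse_to_dense(coords, values, shape, empty_value=0):
    """Scatter sparse voxels back into a dense grid."""
    grid = np.full(shape, empty_value, dtype=values.dtype)
    grid[tuple(coords.T)] = values
    return grid


def build_lookup(coords):
    """Map each voxel index to its row in coords for constant-time neighbor queries."""
    return {tuple(c): i for i, c in enumerate(coords.tolist())}
```

Memory and compute then scale with the number of occupied voxels rather than with the full grid volume.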

How do depth estimation and pseudo-LiDAR approaches fit into pipelines?

Depth estimation and pseudo-LiDAR approaches are integrated into pipelines as intermediate representations that transform 2D images into 3D point clouds or voxels for accurate scene occupancy prediction.
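In its simplest form, pseudo-LiDAR back-projects a predicted depth map into a point cloud with the pinhole model (x = (u - cx) * z / fx, y = (v - cy) * z / fy). The points can then be voxelized like real LiDAR. This minimal sketch assumes a dense metric depth map in which 0 marks missing depth, camera-frame axes (x right, y down, z forward), and an axis-aligned grid. The function names are illustrative.

```python
import numpy as np

# Illustrative pseudo-LiDAR sketch; conventions and names are assumptions.

def depth_to_points(depth, K):
    """Back-project a depth map (H, W) into camera-frame 3D points."""
    H, W = depth.shape
    v, u = np.meshgrid(np.arange(H), np.arange(W), indexing="ij")
    fx, fy, cx, cy = K[0, 0], K[1, 1], K[0, 2], K[1, 2]
    z = depth
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    pts = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    return pts[pts[:, 2] > 0]                      # drop pixels without valid depth


def voxelize(points, origin, voxel_size, shape):
    """Mark every voxel containing at least one point as occupied."""
    idx = np.floor((points - np.asarray(origin)) / voxel_size).astype(int)
    inside = np.all((idx >= 0) & (idx < np.asarray(shape)), axis=1)
    occ = np.zeros(shape, dtype=bool)
    occ[tuple(idx[inside].T)] = True
    return occ
```

Depth errors grow with distance, so pseudo-LiDAR points become sparse and noisy far from the camera. This is one reason the depth ambiguity discussed below remains an open problem.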

What are the major open problems facing the field?

Key problems include depth ambiguity from monocular inputs, long-range context modeling, label scarcity, domain shifts between datasets, and standardization of evaluation protocols.
