Understanding Data Labeling Techniques

Data labeling is an essential stage in developing machine learning models. It involves identifying and tagging objects in raw data to provide context for the models. This process is crucial for supervised learning models, where labeled data helps the model understand and process input data more accurately. Different techniques are used for data labeling in various domains such as computer vision, natural language processing, and audio processing.

Key Takeaways

- Data labeling is crucial for developing machine learning models.

- Supervised learning models rely on labeled data to improve accuracy.

- Data labeling techniques vary across different domains.

- Computer vision, natural language processing, and audio processing are examples of domains that require data labeling.

- Accurate data labeling enhances the performance of machine learning models.

Data Labeling in Computer Vision

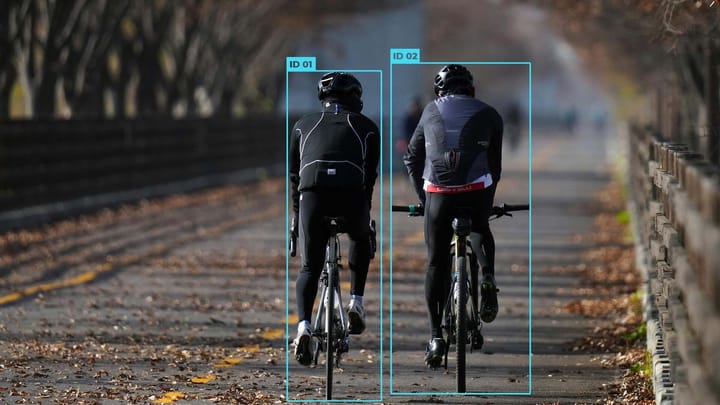

In the field of computer vision, accurate data labeling is crucial for training models that can understand and interpret visual information. Computer vision data labeling involves the annotation of images, pixels, key points, or the creation of bounding boxes to generate labeled datasets for training. These labeled datasets serve as the foundation for building computer vision models that can categorize images, detect object locations, identify key points, or segment images.

To ensure the accuracy and quality of labeled data, various labeling tools and strategies are employed. These tools provide annotators with the necessary functionalities and interfaces to perform precise and consistent labeling. Additionally, supervised learning labeling techniques are often used, where expert annotators manually label the data based on predefined guidelines or criteria. This supervised approach helps ensure the reliability and consistency of the labeled datasets.

Computer vision data labeling plays a crucial role in the development of applications such as image recognition, object detection, facial recognition, and autonomous driving. The labeled datasets generated through precise data labeling enable machine learning models to learn from examples and make accurate predictions based on the identified patterns and features.

Accurate data labeling in computer vision is essential for training powerful and reliable models that can understand and interpret visual information. It ensures that these models can effectively analyze and process images, enabling a wide range of applications across industries.

Data Labeling in Natural Language Processing

When it comes to natural language processing (NLP), data labeling plays a critical role in creating training datasets. In this domain, manual identification of important sections of text or tagging the text with specific labels is essential. This process enables NLP models to understand and analyze text data accurately, supporting tasks such as sentiment analysis, entity name recognition, and optical character recognition.

One common approach to data labeling in NLP is the use of data annotation services. These services provide expert annotators who draw bounding boxes around text and transcribe the text in the training dataset, resulting in labeled data that can be utilized for training NLP models.

Effective data labeling in NLP involves the careful and precise identification of relevant information within the text. Annotators often need to mark specific sections, identify key phrases, or assign sentiment labels to input data. This meticulous labeling process ensures that the models are trained with accurate and comprehensive data, improving their ability to understand and generate language-based insights.

Incorporating data annotation services in NLP projects not only saves time but also ensures high-quality labeled data. With the help of trained annotators, businesses can leverage their expertise to generate reliable and relevant training datasets for NLP models. The combination of manual labeling and professional guidance enhances the accuracy and performance of NLP models, leading to more accurate language analysis and processing.

Next, let's take a look at an example of data labeling in NLP:

"The sentiment extraction task aims to identify the sentiment expressed in a given sentence. When labeling the dataset, annotators mark the sentiment as positive, negative, or neutral. They carefully analyze the context and overall tone to determine the sentiment expressed in the sentence. This labeled data is then used to train sentiment analysis models, enabling them to accurately predict the sentiment of new sentences."

| Data Labeling Process in NLP | Key Steps |

|---|---|

| 1. Text Segmentation | Breaking down the text into manageable units for labeling |

| 2. Annotation Guidelines | Providing clear instructions and guidelines for annotators to follow |

| 3. Labeling and Tagging | Manually identifying and tagging important sections of text |

| 4. Quality Assurance | Reviewing and verifying the accuracy of labeled data |

| 5. Dataset Preparation | Organizing and formatting the labeled data for model training |

By following this meticulous process, businesses can ensure the creation of reliable datasets that power advanced NLP models. With accurate labeling and robust training data, NLP models can achieve high levels of language understanding and analysis, enabling businesses to extract valuable insights from textual data.

Data Labeling in Audio Processing

Audio processing plays a vital role in converting various sounds into a structured format suitable for machine learning applications. However, to train the models effectively, accurate data labeling is essential in this domain. This involves the manual transcription of audio into written text and the categorization of the audio with relevant tags.

By labeling audio data, machine learning models can be trained for various tasks such as speech recognition, wildlife sound classification, and audio event detection. These labeled audio datasets provide the necessary information for the models to understand and process audio inputs accurately.

Labeling Strategies

Labeling audio data requires specific strategies to ensure the accuracy and quality of the labeled datasets. Different labeling techniques and tools can be utilized based on the requirements of the project. These strategies may include:

- Transcribing audio into written text: Annotators manually transcribe the audio content into written text, capturing the precise words and phrases.

- Categorizing audio with tags: Annotation experts tag the audio data with relevant labels, indicating the characteristics or context of the audio.

- Verifying audio accuracy: Quality assurance checks are performed to ensure the correctness of the transcribed text and tags.

By implementing these labeling strategies, audio data can be transformed into labeled datasets that enable the training of robust machine learning models capable of accurately analyzing and interpreting audio inputs.

| Task | Example Use Case |

|---|---|

| Speech Recognition | Converting spoken words into written text for voice assistants or transcription services. |

| Wildlife Sound Classification | Identifying and categorizing different animal sounds for biodiversity conservation and ecological research. |

| Audio Event Detection | Detecting and classifying specific events or sounds, such as sirens or gunshots, for security and surveillance applications. |

"Accurate data labeling in audio processing is crucial for training machine learning models that can understand and interpret audio inputs effectively."

Data Labeling Approaches

Data labeling approaches vary depending on the project's complexity, size, and duration. Companies have several options to choose from when it comes to labeling their data. Let's explore some of the common data labeling approaches:

Internal Labeling

Internal labeling involves using in-house data science experts to manually label the data. This approach allows companies to keep the labeling process within their own team, ensuring complete control and confidentiality over the data. Internal labeling is often preferred for projects that require domain-specific knowledge or deal with sensitive data. While it offers higher data quality and privacy, it can be time-consuming and might require additional resources dedicated to labeling.

Synthetic Labeling

Synthetic labeling is a technique where new labeled data is generated from pre-existing datasets. By leveraging existing labeled data, companies can train models and use them to automatically label new data points. This approach helps reduce the time and effort required for manual labeling, making it ideal for large-scale projects. However, the accuracy of synthetic labeling depends on the quality and diversity of the pre-existing labeled datasets used as the foundation.

Programmatic Labeling

Programmatic labeling involves using automated scripts or algorithms to label the data. This approach significantly reduces the time and human effort required for labeling. Companies can develop custom scripts or utilize existing tools to automate the labeling process based on predefined rules or machine learning algorithms. Programmatic labeling is efficient for projects with well-defined labeling criteria and standardized data formats. However, it may not be suitable for complex or subjective labeling tasks that require human judgment.

Outsourcing

Outsourcing data labeling tasks involves hiring external experts or agencies to perform the labeling on behalf of the company. This approach allows companies to leverage specialized expertise and access a larger pool of annotators. Outsourcing is beneficial when companies lack the necessary resources or expertise in-house. However, it requires close collaboration and clear communication to ensure quality and consistency in labeling.

Crowdsourcing

Crowdsourcing is the process of distributing data labeling tasks to a crowd of workers, usually through online platforms. This approach enables companies to tap into a large and diverse workforce, often at a lower cost compared to other labeling approaches. Crowdsourcing is advantageous for projects with high volumes of data or tasks that require multiple annotations for quality control. However, it may pose challenges in terms of maintaining consistency and accuracy due to the variable expertise and reliability of crowd workers.

Benefits and Challenges of Data Labeling

Data labeling plays a crucial role in the development of machine learning models by providing labeled data for training. This process offers numerous benefits that greatly impact the accuracy and performance of the models. Additionally, it presents challenges that organizations need to address to ensure the quality and effectiveness of the labeled data.

Benefits of Data Labeling

Properly labeled data brings several benefits to the table:

- Precise Predictions: Labeled data allows machine learning algorithms to learn patterns and relationships more effectively. By providing clear labels, models can make more accurate predictions and classifications.

- Better Data Usability: Labeled data improves the usability of datasets for specific tasks. It enables researchers and analysts to perform exploratory data analysis and gain meaningful insights.

- Improved Performance: When models are trained on high-quality labeled data, they exhibit improved performance in various applications. This translates to enhanced decision-making capabilities and better outcomes.

Challenges of Data Labeling

While data labeling offers several benefits, it also presents challenges that organizations must overcome:

- Cost and Time Consumption: Data labeling can be time-consuming, especially for large datasets. It also requires resources to hire skilled annotators or implement labeling tools and infrastructure.

- Potential Human Errors: The labeling process involves manual effort, leaving room for human errors such as mislabeling or inconsistencies. Quality assurance checks are crucial to mitigate these errors and ensure data accuracy.

- Quality Assurance: Ensuring the quality of labeled data is a challenge, especially when dealing with complex labeling tasks. Organizations need to implement robust quality assurance processes to validate the accuracy and reliability of labeled data.

Overcoming these challenges is essential to maximize the benefits of data labeling and utilize it effectively in machine learning projects.

| Benefits | Challenges |

|---|---|

| Precise predictions | Cost and time consumption |

| Better data usability | Potential human errors |

| Improved performance | Quality assurance |

Data Labeling Best Practices

Optimizing data labeling accuracy and efficiency is essential for training machine learning models effectively. By following these data labeling best practices, you can enhance the quality and reliability of labeled data:

- Use intuitive and streamlined task interfaces for annotators: Providing annotators with user-friendly tools and interfaces simplifies the labeling process and improves overall efficiency.

- Implement consensus measures to assess inter-labeler agreement: Consensus measures, such as inter-annotator agreement scores, help ensure consistent labeling across annotators and reduce errors.

- Conduct label auditing to ensure accuracy: Regularly auditing labeled data helps identify and correct any labeling mistakes, ensuring the accuracy of the training dataset.

- Utilize transfer learning techniques: Transfer learning leverages pre-trained models to label new data efficiently, reducing the need for extensive manual labeling.

- Employ active learning methods to select the most appropriate datasets for labeling: Active learning algorithms intelligently select data samples that offer the most learning potential, optimizing the labeling process.

Following these best practices will not only help improve the quality and reliability of your labeled data but also enhance the performance of your machine learning models.

Comparing Data Labeling Best Practices

| Best Practice | Benefits |

|---|---|

| Using intuitive task interfaces | Improves annotator efficiency and productivity |

| Implementing consensus measures | Ensures consistent and accurate labeling |

| Conducting label auditing | Identifies and corrects labeling mistakes |

| Utilizing transfer learning techniques | Reduces manual labeling efforts, saves time |

| Employing active learning methods | Optimizes dataset selection, maximizes learning potential |

Conclusion

Data labeling techniques play a crucial role in enhancing the accuracy and performance of AI and machine learning models. Properly labeled data serves as the foundation for training these models, enabling them to make accurate predictions and estimations. By providing context and meaning to raw data, data labeling empowers AI systems to understand and process information more effectively.

Although data labeling can be challenging and time-consuming, the benefits it brings to AI initiatives are well worth the effort. Labeled data for training allows businesses to develop models that are capable of delivering more precise and reliable results. With access to high-quality labeled data, AI technologies can be deployed in various domains, including computer vision, natural language processing, and audio processing, enabling businesses to harness the full potential of these cutting-edge technologies.

Adopting best practices and choosing the appropriate labeling approaches are crucial for successful data labeling. Intuitive task interfaces, consensus measures, label auditing, and active learning methods are some of the best practices that can optimize the accuracy and efficiency of data labeling processes. By following these guidelines, businesses can ensure the quality and reliability of their labeled data, ultimately leading to improved model performance and usability.

In conclusion, labeled data for training is paramount in the development of AI and machine learning models. With the right data labeling techniques and approaches in place, businesses can unlock the full potential of AI in their operations, driving innovation and achieving better outcomes in various industries.

FAQ

What is data labeling?

Data labeling is the process of identifying and tagging objects in raw data to provide context for machine learning models.

Why is data labeling important in machine learning?

Data labeling is important in machine learning because the labeled data helps models understand and process input data more accurately.

What are some data labeling techniques used in computer vision?

In computer vision, data labeling techniques include image annotation, pixel labeling, key point identification, and bounding box creation.

How is data labeling done in natural language processing?

In natural language processing, data labeling involves the manual identification of important sections of text or tagging the text with specific labels.

What is the role of data labeling in audio processing?

Data labeling in audio processing includes manual transcription of audio into written text and categorizing the audio with tags.

What are the different approaches to data labeling?

Different approaches to data labeling include internal labeling, synthetic labeling, programmatic labeling, outsourcing, and crowdsourcing.

What are the benefits and challenges of data labeling?

Benefits of data labeling include more accurate predictions and improved performance of machine learning models, while challenges include cost, time consumption, and potential human errors.

What are some best practices for data labeling?

Best practices for data labeling include using intuitive task interfaces, implementing consensus measures, conducting label auditing, and employing active learning methods.

How does data labeling contribute to AI and machine learning?

Properly labeled data serves as the foundation for training AI and machine learning models, enabling them to make accurate predictions and estimations.

Comments ()