Time-Series Data Labeling for Self-Driving Cars

For an autonomous car to move safely through a city, simply recognizing objects around it is not enough. It must understand time and the sequence of events. In the world of autonomous driving, data does not exist as a set of random photos – it consists of continuous information streams where each subsequent frame is inextricably linked to the previous one.

Time-series data labeling transforms raw sensor data into a structured history. This allows AI to not only identify a pedestrian but also understand their walking pace, the acceleration of a car in the adjacent lane, or the moment a traffic light begins to change color.

The main difficulty of this approach, and also its main value, lies in ensuring temporal consistency. In real life, objects cannot instantly disappear or teleport. Consistent time-series labels encode these physical constraints and typical patterns of behavior, so the AI model learns them from the data.

By accurately tracking objects through hundreds of frames and synchronizing video with the car's internal system data, engineers can train prediction models. This is the foundation of safety: the car learns not just to react to an obstacle that has already appeared, but to anticipate its appearance fractions of a second before the event.

Quick Takes

- Temporal labeling teaches AI the laws of physics, preventing errors like "teleportation" or the sudden disappearance of objects.

- Thanks to intent labeling, the system learns to predict maneuvers before they even become obvious.

- Keyframing and auto-labeling methods allow for processing thousands of video frames much faster than manual annotation.

- Training success depends on temporal alignment – the perfect match between the video and the logs of the car's internal systems.

- The smoothing process eliminates micro-jitters of objects, ensuring realistic trajectories for the AI.

Data Streams and Features of Temporal Labeling

To train a self-driving vehicle, it is important to merge information from different sources into a single timeline. Only then can the system understand the full picture of what is happening around it during movement.

Video Streams and Object Tracking

Camera video is the primary source of visual information, and it requires thorough temporal labeling.

- Continuity of Movement. Instead of labeling individual photos, specialists work with entire video fragments. The main task is to perform continuous tracking so the system understands that the same car is moving through all consecutive frames.

- Behavior Analysis. Thanks to time-based annotation, one can label not only the type of object but also its actions. This allows the model to recognize moments when a cyclist begins a maneuver or a pedestrian prepares to step onto the road.
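To make "the same car across consecutive frames" concrete, here is a minimal sketch of greedy IoU (intersection-over-union) matching, one simple way to carry a track ID from frame to frame. The `Box` class, the `track` function and the 0.3 threshold are hypothetical; production trackers usually add motion models and globally optimal association (for example, Hungarian matching).

```python
from dataclasses import dataclass


@dataclass
class Box:
    # Hypothetical axis-aligned 2D box in pixels: (x1, y1) top-left, (x2, y2) bottom-right.
    x1: float
    y1: float
    x2: float
    y2: float


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix = max(0.0, min(a.x2, b.x2) - max(a.x1, b.x1))
    iy = max(0.0, min(a.y2, b.y2) - max(a.y1, b.y1))
    inter = ix * iy
    union = (a.x2 - a.x1) * (a.y2 - a.y1) + (b.x2 - b.x1) * (b.y2 - b.y1) - inter
    return inter / union if union > 0 else 0.0


def track(frames: list[list[Box]], min_iou: float = 0.3) -> list[list[int]]:
    """Greedy IoU tracker: returns one track ID per box, per frame."""
    next_id = 0
    prev: list[tuple[int, Box]] = []  # (track_id, box) pairs from the previous frame
    result: list[list[int]] = []
    for boxes in frames:
        ids = [-1] * len(boxes)
        used: set[int] = set()
        # Score every (current box, previous track) pair, best overlaps first.
        pairs = sorted(
            ((iou(b, pb), i, tid) for i, b in enumerate(boxes) for tid, pb in prev),
            reverse=True,
        )
        for score, i, tid in pairs:
            if score < min_iou:
                break
            if ids[i] == -1 and tid not in used:
                ids[i] = tid
                used.add(tid)
        # Boxes with no sufficiently overlapping predecessor start new tracks.
        for i in range(len(boxes)):
            if ids[i] == -1:
                ids[i] = next_id
                next_id += 1
        prev = list(zip(ids, boxes))
        result.append(ids)
    return result
```

Even when the detector lists the two cars in a different order on each frame, each car keeps its own ID because association is based on overlap, not list position.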

3D Space Dynamics in LiDAR Point Clouds

LiDAR creates a volumetric model of the world that is constantly updated as the car moves. Time-series labeling for LiDAR captures how the 3D position and geometry of objects change over time. This enables the AI to estimate the size of obstacles and the exact distance to them at every moment.

Using sequence segmentation, annotators separate the points belonging to each surface or object consistently across consecutive LiDAR sweeps. This is necessary so the car can clearly distinguish road boundaries from moving objects, even when they partially overlap during movement.
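One small building block of per-sweep LiDAR labeling is deciding which points fall inside a labeled 3D cuboid and how far that cuboid is from the vehicle; repeating it for every sweep yields the object's changing geometry and distance over time. The sketch below assumes a hypothetical `Box3D` layout (centre, size, yaw about the vertical axis, all in the ego-vehicle frame in metres and radians); real datasets define their own coordinate conventions.

```python
import math
from dataclasses import dataclass


@dataclass
class Box3D:
    # Hypothetical cuboid label in the ego-vehicle frame (metres, radians).
    cx: float
    cy: float
    cz: float
    length: float
    width: float
    height: float
    yaw: float  # rotation about the vertical axis


def points_in_box(points, box: Box3D) -> list[int]:
    """Return indices of LiDAR points (x, y, z) that lie inside the cuboid."""
    c, s = math.cos(-box.yaw), math.sin(-box.yaw)
    inside = []
    for i, (x, y, z) in enumerate(points):
        # Translate to the box centre, then rotate into the box's own axes.
        dx, dy, dz = x - box.cx, y - box.cy, z - box.cz
        lx = c * dx - s * dy
        ly = s * dx + c * dy
        if (abs(lx) <= box.length / 2 and abs(ly) <= box.width / 2
                and abs(dz) <= box.height / 2):
            inside.append(i)
    return inside


def distance_to_ego(box: Box3D) -> float:
    """Horizontal distance from the sensor origin to the box centre."""
    return math.hypot(box.cx, box.cy)
```

Applied to the same track ID in every sweep, `[distance_to_ego(b) for b in boxes_over_time]` gives a distance-versus-time series for that object.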

Internal Sensor Data and Technical Context

In addition to external observation, the system analyzes the car's own internal metrics, which arrive via the vehicle's data bus (typically the CAN bus).

Data on speed, steering wheel angle, and brake pedal pressure are recorded synchronously with the video. This creates a full technical context of how the machine reacted to external stimuli at a specific moment.

Combining visual data with internal metrics is critical for safety. For example, the system learns to link the visual signal of a red traffic light with the brake activation recorded in the vehicle logs, making driving smooth and predictable.
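Recording bus data "synchronously with the video" ultimately means attaching the right bus sample to each frame. A minimal sketch, assuming both streams carry timestamps in seconds on a shared clock: each frame takes the nearest bus sample, and frames with no sample within a tolerance get `None`. The function names and the 20 ms `max_gap` are hypothetical; some pipelines interpolate between samples instead.

```python
from bisect import bisect_left


def nearest_sample(sample_times: list[float], t: float) -> int:
    """Index of the sample closest to t; sample_times must be sorted and non-empty."""
    i = bisect_left(sample_times, t)
    if i == 0:
        return 0
    if i == len(sample_times):
        return len(sample_times) - 1
    before, after = sample_times[i - 1], sample_times[i]
    # Pick whichever neighbour is closer; ties go to the earlier sample.
    return i if after - t < t - before else i - 1


def align(frame_times, bus_times, bus_values, max_gap=0.02):
    """Attach the nearest bus value (e.g. brake pressure) to each video frame."""
    aligned = []
    for t in frame_times:
        j = nearest_sample(bus_times, t)
        gap = abs(bus_times[j] - t)
        aligned.append(bus_values[j] if gap <= max_gap else None)
    return aligned
```

A `None` in the output marks a frame where the streams are too far apart to pair reliably, which is exactly the kind of gap quality control should surface.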

Specific Tasks of Labeling in Time

When working with time series, annotators focus not only on the physical boundaries of objects but also on the logic of their behavior. This allows for the creation of a knowledge base for decision-making in complex situations.

Capturing Critical Events

This task involves identifying the exact moments in time when a change in the scene state or agent behavior occurs. Specialists mark the start and end of important events, such as the instant a traffic light changes color or the start of braking for the car ahead. Such labels teach algorithms to react instantly to environmental changes.

An important part is capturing moments when a pedestrian crosses the sidewalk line or a car wheel touches a lane marking. This data helps the system understand priority rules and safe maneuvering boundaries.
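Event labels like these are often stored as timestamps or time intervals rather than per-frame tags. The sketch below assumes annotators have already assigned a per-frame state (a traffic light color, or a boolean such as "lead car braking") and derives change points and start/end intervals from it; the function names are hypothetical.

```python
def state_changes(states: list[str], timestamps: list[float]) -> list[tuple[float, str, str]]:
    """Return (time, old_state, new_state) for every frame where the state changes."""
    events = []
    for prev, cur, t in zip(states, states[1:], timestamps[1:]):
        if cur != prev:
            events.append((t, prev, cur))
    return events


def intervals(flags: list[bool], timestamps: list[float]) -> list[tuple[float, float]]:
    """Turn per-frame boolean flags into (start, end) intervals.

    start is the first frame where the flag is true; end is the last such frame.
    """
    spans = []
    start = end = None
    for flag, t in zip(flags, timestamps):
        if flag:
            if start is None:
                start = t
            end = t
        elif start is not None:
            spans.append((start, end))
            start = None
    if start is not None:
        spans.append((start, end))
    return spans
```

For example, a light labeled red, red, green at 0.0 s, 0.1 s, 0.2 s yields a single red-to-green event at 0.2 s.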

Predicting Intentions

This type of labeling focuses on the future actions of road users, which is the basis for behavior forecasting.

- Reading Intent. Annotators analyze the video and indicate what an object is preparing to do. For example, if a car slowly drifts toward the edge of its lane and turns on its blinker, the annotator labels it as preparing to overtake or turn.

- Proactive Safety. Through this approach, AI learns to recognize the "body language" of cars and pedestrians before a maneuver becomes obvious. This allows the autonomous vehicle to act preventively, avoiding jerky movements and emergency stops.
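One possible way to store such labels is as a time window that ends when the maneuver visibly begins, so the model sees the preceding "body language" as a distinct phase. The `IntentLabel` schema, the intent names and the 2-second look-back below are hypothetical choices for illustration, not a standard format.

```python
from dataclasses import dataclass


@dataclass
class IntentLabel:
    # Hypothetical schema: which agent, what it intends, and over which time span.
    track_id: int
    intent: str   # e.g. "lane_change_left", "turn_right", "crossing"
    start: float  # seconds
    end: float    # seconds; the moment the maneuver visibly begins


def intent_window(track_id: int, intent: str, maneuver_start: float,
                  lookback: float = 2.0, earliest: float = 0.0) -> IntentLabel:
    """Label the `lookback` seconds before a maneuver as its preparation phase."""
    return IntentLabel(track_id, intent, max(earliest, maneuver_start - lookback), maneuver_start)


def intents_at(labels: list[IntentLabel], track_id: int, t: float) -> list[str]:
    """Intents active for a given agent at time t (start inclusive, end exclusive)."""
    return [lab.intent for lab in labels
            if lab.track_id == track_id and lab.start <= t < lab.end]
```

In practice the window length would come from the annotator's judgment of when the cues (drift, blinker) first appear, rather than a fixed constant.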

Building and Labeling Trajectories

The final stage of working with dynamics is reconstructing the path of each agent through space and time. A smooth line is created for each object, covering both its past path and the path it actually followed afterward. This provides the ground truth needed to train reliable path prediction for the navigation system.

Using temporal labeling, developers obtain exact coordinates of movement over time. This data helps the model understand at what speed and along what arc a cyclist will move, allowing the self-driving car to plan its own safe route in advance.
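Once a trajectory is stored as timestamped coordinates, speed and direction of travel follow from simple finite differences. A minimal sketch, assuming each sample is a hypothetical `(t, x, y)` tuple in seconds and metres in a fixed ground frame:

```python
import math


def speeds(points: list[tuple[float, float, float]]) -> list[float]:
    """Speed (m/s) between consecutive (t, x, y) samples of one agent."""
    return [math.hypot(x1 - x0, y1 - y0) / (t1 - t0)
            for (t0, x0, y0), (t1, x1, y1) in zip(points, points[1:])]


def headings(points: list[tuple[float, float, float]]) -> list[float]:
    """Direction of travel in degrees, counter-clockwise from the +x axis."""
    return [math.degrees(math.atan2(y1 - y0, x1 - x0))
            for (_, x0, y0), (_, x1, y1) in zip(points, points[1:])]
```

For a cyclist sampled every 0.5 s at (0, 0), (2, 0), (4, 0), (5.5, 1.5), this gives speeds of roughly 4.0, 4.0 and 4.24 m/s and a heading that swings from 0° to 45° as the cyclist starts to turn.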

Optimization and Labeling Speed

Labeling video streams involves a massive volume of work, since every object must be tracked through thousands of frames. To avoid spending years on data preparation, engineers use methods in which the computer assists the human annotator.

The main tool here is keyframing. Instead of outlining the car on every frame of the video, the annotator marks it only at strategically important moments, for example at the beginning and end of a maneuver. The system then fills the gaps automatically by interpolating the object's position between these points. This produces smooth trajectories between keyframes and speeds up the process significantly.
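The simplest form of this gap filling is linear interpolation of the box coordinates between neighbouring keyframes. The sketch assumes boxes are `(x1, y1, x2, y2)` tuples keyed by frame index; the function name is hypothetical, and annotation tools may use other interpolation schemes.

```python
def interpolate_keyframes(
    keyframes: dict[int, tuple[float, float, float, float]],
) -> dict[int, tuple[float, ...]]:
    """Fill every frame between annotated keyframes by linear interpolation.

    keyframes maps frame index -> box (x1, y1, x2, y2) drawn by the annotator.
    """
    if not keyframes:
        return {}
    frames = sorted(keyframes)
    filled = {}
    for a, b in zip(frames, frames[1:]):
        box_a, box_b = keyframes[a], keyframes[b]
        for f in range(a, b):
            w = (f - a) / (b - a)  # 0.0 at keyframe a, approaching 1.0 near keyframe b
            filled[f] = tuple(pa + w * (pb - pa) for pa, pb in zip(box_a, box_b))
    last = frames[-1]
    filled[last] = tuple(keyframes[last])
    return filled
```

With keyframes at frames 0 and 4, the annotator draws two boxes and the function produces all five; the same idea scales to hundreds of in-between frames.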

For even greater speed, auto-labeling is used. This is an approach where an existing AI model makes a "first pass" over raw data, placing preliminary labels. A human then simply checks these results and corrects errors in complex cases.
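A common way to direct human effort in such a pipeline is to route pre-labels by the model's confidence, so reviewers spend most of their time on uncertain, complex cases. The `Detection` class and the 0.8 threshold below are hypothetical; every label still reaches a human, only the order and depth of review differ.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    # Hypothetical pre-label produced by the auto-labeling model.
    frame: int
    label: str
    score: float  # model confidence in [0, 1]


def triage(detections: list[Detection], review_below: float = 0.8):
    """Split pre-labels into a quick-confirmation set and a careful-review queue.

    The review queue is sorted so the least confident labels come first.
    """
    quick_check = [d for d in detections if d.score >= review_below]
    needs_attention = sorted(
        (d for d in detections if d.score < review_below), key=lambda d: d.score
    )
    return quick_check, needs_attention
```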

Comparison of Data Processing Methods

These techniques make it possible to create the massive training datasets that are the foundation for safe driving. Without automation, training modern self-driving cars would be practically impossible due to the colossal amount of visual information.

Quality Control and Temporal Anomalies

In time-series work, the accuracy of any single frame does not guarantee success. Even a perfectly placed box around a car can turn out to be an error if, on the next frame, it "teleports" a meter to the side. Quality control in temporal labeling therefore focuses on movement logic and data consistency.

Smoothing and Physical Reality

One of the most common types of errors in temporal labeling is microscopic jumps or "jittering" of object outlines from frame to frame.

- Checking for Physical Consistency. The quality control system analyzes the object's trajectory as a single line. If a jump in speed or position between frames is physically impossible, the labeling is sent back for revision. Correcting such jumps and jitter is known as smoothing, and it produces stable, continuous tracks.

- Anomaly Removal. Special algorithms automatically highlight video sections where an object suddenly changes size or trajectory. This allows annotators to quickly fix errors so the AI does not perceive ordinary labeling inaccuracies as the aggressive driving style of those around it.
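Both checks can be sketched in a few lines: flag any step whose implied speed exceeds a plausibility limit, then damp residual jitter with a centred moving average. The 60 m/s limit and the window size are hypothetical, and a moving average is only one simple smoother (Kalman filters or splines are common alternatives); it should run after flagged jumps are fixed, otherwise a large error is merely smeared across neighbouring frames.

```python
import math


def flag_jumps(track: list[tuple[float, float, float]], max_speed: float = 60.0) -> list[int]:
    """Return indices i where moving from sample i-1 to i implies an impossible speed.

    track holds (t, x, y) centre positions in seconds and metres.
    """
    flagged = []
    for i in range(1, len(track)):
        t0, x0, y0 = track[i - 1]
        t1, x1, y1 = track[i]
        if math.hypot(x1 - x0, y1 - y0) / (t1 - t0) > max_speed:
            flagged.append(i)
    return flagged


def moving_average(values: list[float], window: int = 3) -> list[float]:
    """Centred moving average; frames near the edges use a shorter window."""
    half = window // 2
    out = []
    for i in range(len(values)):
        lo, hi = max(0, i - half), min(len(values), i + half + 1)
        out.append(sum(values[lo:hi]) / (hi - lo))
    return out
```

A single mislabeled frame usually produces two flags, one for the jump away and one for the jump back, which helps reviewers locate it.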

Temporal Data Alignment

An autonomous car collects information from many sources simultaneously, and all of them must be precisely synchronized. It is important to check whether events in the digital logs match what is seen on video. For example, specialists check temporal alignment by comparing the moment the brake pedal is pressed in the internal system data with the moment the brake lights illuminate on the video.

If the data diverges by even a few milliseconds, the training model will receive a contradictory signal: the car is already braking, but visually this is not yet confirmed. Quality labeling ensures that all information streams are perfectly overlaid on each other, creating a unified and reliable history of every trip.
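The brake-pedal check described above can be sketched as comparing two onset times: the first bus sample where pedal pressure crosses a threshold, and the first video frame labeled "brake lights on". The function names and the pressure threshold are hypothetical. Note that the measured offset can be no finer than the video frame period, and real brake lights may lag the pedal slightly even in perfectly synchronized data, so the result is a consistency signal rather than an exact clock error.

```python
def first_onset(times: list[float], values: list[float], threshold: float):
    """Timestamp of the first sample whose value reaches the threshold, or None."""
    for t, v in zip(times, values):
        if v >= threshold:
            return t
    return None


def alignment_offset(bus_times, pedal_pressure, frame_times, brake_light_on,
                     pressure_threshold=0.1):
    """Video brake-light onset minus logged brake-pedal onset, in seconds.

    A large positive value suggests the video lags the logs; negative, the reverse.
    Returns None if either event is missing.
    """
    pedal_t = first_onset(bus_times, pedal_pressure, pressure_threshold)
    light_t = first_onset(frame_times, [1.0 if on else 0.0 for on in brake_light_on], 0.5)
    if pedal_t is None or light_t is None:
        return None
    return light_t - pedal_t
```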

FAQ

How does the system react to an object being temporarily blocked by another car?

Annotators use temporal context to keep the same object ID, even if the object briefly disappears from view. This teaches the autopilot to expect the car to reappear from behind the obstacle.
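For a single track ID, short occlusions can be bridged by interpolating between the last visible position and the position where the object reappears. This is a sketch under stated assumptions: positions are per-frame `(x, y)` tuples with `None` for occluded frames, and the 10-frame `max_gap` is hypothetical. Some datasets instead keep the ID but mark occluded frames explicitly rather than inventing positions.

```python
def bridge_occlusions(positions, max_gap=10):
    """Fill short runs of None (object hidden) by linear interpolation.

    positions: per-frame (x, y) for one track ID, or None where it is occluded.
    Gaps longer than max_gap frames, or at the start/end of the track, stay None.
    """
    out = list(positions)
    i = 0
    while i < len(out):
        if out[i] is None:
            j = i
            while j < len(out) and out[j] is None:
                j += 1
            # Only bridge gaps with a visible position on both sides.
            if i > 0 and j < len(out) and j - i <= max_gap:
                (x0, y0), (x1, y1) = out[i - 1], out[j]
                span = j - (i - 1)
                for k in range(i, j):
                    w = (k - (i - 1)) / span
                    out[k] = (x0 + w * (x1 - x0), y0 + w * (y1 - y0))
            i = j
        else:
            i += 1
    return out
```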

Does frame rate affect the quality of prediction model training?

Yes, higher FPS provides more data about dynamics, but it is critical that the labeling is synchronized. Even at high frequency, a time gap between sensors can lead to false decisions.

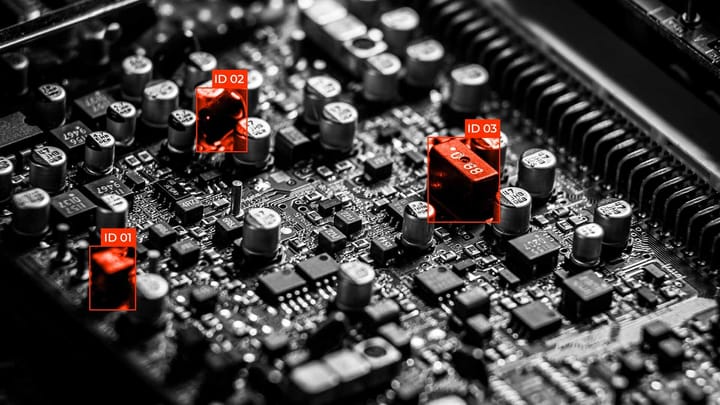

What is "ID drift" and why is it dangerous?

This is an error where the ID number of one object jumps to another, for example, from one car to a neighboring one. This confuses the prediction model, which begins to attribute the movement history of one agent to another.
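ID drift can be measured by counting identity switches against a trusted reference, for instance a reviewed gold-standard pass over the same clip. The sketch assumes each frame is a hypothetical mapping from reference object name to the track ID assigned in that frame; this mirrors the "ID switch" count used in multi-object tracking evaluation, in simplified form.

```python
def id_switches(frames: list[dict[str, int]]) -> list[tuple[int, str, int, int]]:
    """Return (frame, reference_object, old_id, new_id) for every identity switch."""
    last: dict[str, int] = {}
    switches = []
    for f, mapping in enumerate(frames):
        for obj, tid in mapping.items():
            if obj in last and last[obj] != tid:
                switches.append((f, obj, last[obj], tid))
            last[obj] = tid
    return switches
```

Two cars swapping IDs, as in the neighbouring-car example, shows up as a pair of switches on the same frame.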

How does interpolation in the keyframing method handle non-linear movement?

If a car turns or brakes sharply, the annotator places more keyframes, and smoother interpolation methods (for example, splines instead of straight-line interpolation) are used to follow the movement curve accurately.
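As an illustration of a non-linear alternative, here is a minimal cubic Hermite interpolation of one coordinate between keyframes, with tangents from finite differences of neighbouring keyframes (a Catmull-Rom-style choice that also handles uneven keyframe spacing). The function name is hypothetical, it is applied per coordinate, and it can overshoot on abrupt changes; monotone variants such as PCHIP avoid that.

```python
def hermite_interpolate(keyframes: dict[int, float]) -> dict[int, float]:
    """Cubic Hermite interpolation of one coordinate between keyframes."""
    fs = sorted(keyframes)
    ps = [keyframes[f] for f in fs]
    n = len(fs)
    if n < 2:
        return dict(keyframes)
    # Slope (units per frame) at each keyframe, from its neighbours.
    m = []
    for k in range(n):
        lo, hi = max(k - 1, 0), min(k + 1, n - 1)
        m.append((ps[hi] - ps[lo]) / (fs[hi] - fs[lo]))
    out = {}
    for k in range(n - 1):
        h = fs[k + 1] - fs[k]
        for f in range(fs[k], fs[k + 1]):
            s = (f - fs[k]) / h  # position within the segment, 0 to 1
            h00 = 2 * s**3 - 3 * s**2 + 1
            h10 = s**3 - 2 * s**2 + s
            h01 = -2 * s**3 + 3 * s**2
            h11 = s**3 - s**2
            out[f] = h00 * ps[k] + h10 * h * m[k] + h01 * ps[k + 1] + h11 * h * m[k + 1]
    out[fs[-1]] = ps[-1]
    return out
```

For a braking car keyed at positions 0, 10 and 15 m on frames 0, 10 and 20, straight-line interpolation puts frame 15 at 12.5 m, while this curve gives 12.8125 m, reflecting the gradual deceleration rather than a sudden change in speed at frame 10.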
