Researchers have shown that head and hand motion data can be used to identify VR users in the metaverse

A team of researchers from UC Berkeley, RWTH Aachen, and Unanimous AI has found that data from head and hand sensors can be used to identify users as they move through the metaverse. Studying the privacy risks faced by people who use virtual reality ecosystems, the group showed that head and hand motion data alone makes it easy for VR game developers to identify users. The team has published a preprint of its paper on arXiv.

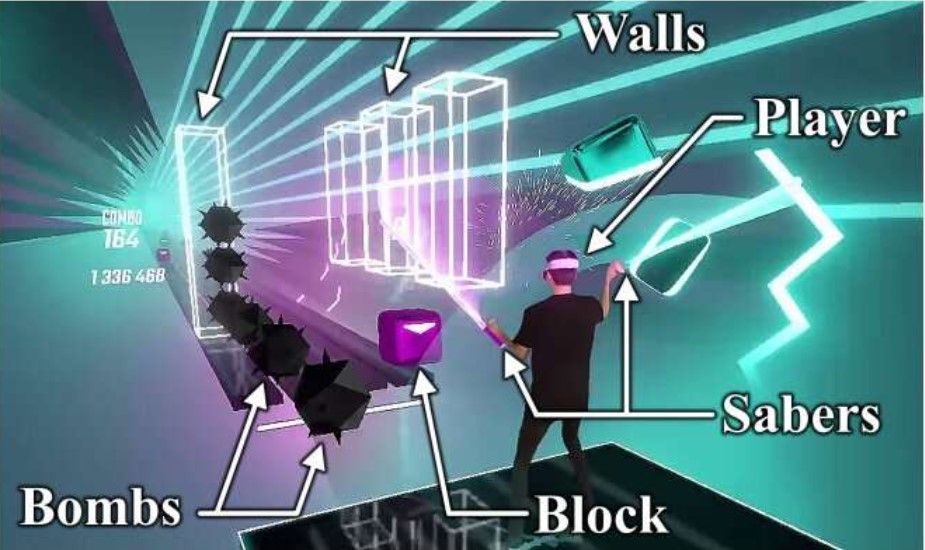

In the metaverse, users communicate in a variety of ways within a virtual reality environment. To see the virtual world they are taking part in, they wear a 3D headset that covers their eyes. They use hand sensors to interact with virtual objects and other people.

Metaverse experiences have become increasingly immersive over the past few years, sometimes to the point where people forget they are not actually there. As these experiences grow more lifelike, many members of the community have become concerned about their privacy in the metaverse. Previous research has indicated that there is very little privacy there: virtually all activity can be traced back to the user. The researchers have now developed a new way to trace the identities of visitors to a virtual world by studying their body movements.

The researchers studied the movements of 50,000 people playing Beat Saber, a virtual reality game that requires nearly constant motion. Using 2.5 million recordings of this gameplay, they trained an artificial intelligence system to recognize slight differences in how people move when performing nearly identical actions.

Given just 100 seconds of a user's motion data, the system identified that individual from among all 50,000 users with 94% accuracy. Beyond identifying individuals, the researchers found that the motion data also revealed a user's dominant hand, height, and, in some cases, gender.

Source: Science X Network