Quality Control Practices for Data Annotation

Not all data is created equal. Whether you are working with videos or images, the quality of your data can determine whether your project succeeds or fails. This is where quality control comes into play: with the right processes and practices, many potential issues can be avoided from the start.

Data teams are often distributed across locations and areas of expertise, and the annotators are frequently not the people designing the algorithms. Quality control is how these groups build a common language.

As in any technical project, success in annotation often comes down to having the proper tools and a clear idea of what needs to be done. This is why the design of a data project is so important: if nothing is left to chance, everyone involved can do their work without having to figure things out after the fact. Providing structure around these practices is the central goal of annotation companies.

What is quality control in data annotation?

There are two major ways to think about quality control in data annotation.

First, you can consider the quality of the data being annotated. This requires proper sourcing and assessment.

Second, you can think about the quality of the annotations themselves. This often means assessing the tools and processes used to produce them. Combining these two perspectives helps ensure that your ML projects behave as you intend.
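
For the second kind of quality, one common measurement (not one this article prescribes) is inter-annotator agreement: two annotators label the same items, and their agreement is compared with what chance alone would produce. The sketch below computes Cohen's kappa, assuming two annotators who each assign exactly one categorical label per item; the function name and sample labels are illustrative.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labeling the same items.

    Assumes one categorical label per item from each annotator.
    """
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)
    # Observed agreement: fraction of items where both annotators chose the same label.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected agreement if each annotator labeled at random with their own label frequencies.
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_e == 1.0:
        # Both annotators used one identical label everywhere; kappa is undefined,
        # so this sketch treats it as perfect agreement by convention.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


annotator_1 = ["car", "car", "person", "car", "person", "car"]
annotator_2 = ["car", "person", "person", "car", "person", "car"]
print(round(cohens_kappa(annotator_1, annotator_2), 3))  # 0.667
```

A kappa near 1 means the annotators agree far more than chance would predict; a low value is often a sign that the guidelines are ambiguous rather than that one annotator is careless.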

What are the best practices for quality control in data annotation?

Whether you instruct a team on how data should be annotated or do it yourself, a clear understanding of the task is essential. Following certain best practices can help achieve this. Best practices, in this case, refer to different frameworks through which your projects can be designed. While these will, of course, depend on the specific project, there are general rules that should apply across the board.

These best practices fall into three categories:

- Clear communication between data teams and annotators

- Feedback structures between data teams and annotators

- Tooling that meets the demands of the training datasets

The first, communication, is critical to a project’s success. As mentioned earlier, the people doing the annotation are often not the people creating the algorithms. Because data annotation is time-consuming, the work is commonly handed to distributed teams or gig workers.

Furthermore, the backgrounds of annotation teams are often very different from those of the data scientists who will use the data. Without clear guidelines on what needs to be done, projects can quickly fall apart.

This is why the second point, feedback, is so important. Without a clear feedback mechanism, annotators may never realize that their work is not what the project needs. The best practice here is to make feedback mandatory and regular.

After each batch of annotations, it is useful to measure the batch’s error rate. That data can then be used to write new or revised guidelines that raise annotation accuracy. Ideally, this feedback loop becomes second nature to your annotation teams.
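
A minimal sketch of a per-batch check, assuming a reviewer has re-labeled a sample from each batch so that annotator labels can be compared with reviewed ones. The `BatchReport` type, the `batch_error_rates` function, and the 5% threshold are hypothetical choices for illustration, not a standard tool.

```python
from dataclasses import dataclass


@dataclass
class BatchReport:
    batch_id: str
    checked: int          # number of reviewed items in the batch
    errors: int           # items where annotator and reviewer disagree
    error_rate: float
    needs_feedback: bool  # True when the error rate exceeds the threshold


def batch_error_rates(batches, threshold=0.05):
    """Compare annotator labels with reviewer labels for each batch.

    `batches` maps a batch id to a list of (annotator_label, reviewer_label) pairs.
    Batches whose error rate exceeds `threshold` are flagged for feedback.
    """
    reports = []
    for batch_id, pairs in batches.items():
        checked = len(pairs)
        errors = sum(1 for given, reviewed in pairs if given != reviewed)
        rate = errors / checked if checked else 0.0
        reports.append(BatchReport(batch_id, checked, errors, rate, rate > threshold))
    return reports


batches = {
    "batch-01": [("car", "car"), ("person", "person"), ("car", "truck"), ("bike", "bike")],
    "batch-02": [("car", "car"), ("person", "person"), ("truck", "truck"), ("bike", "bike")],
}
for report in batch_error_rates(batches):
    print(report.batch_id, f"{report.error_rate:.0%}", "flag" if report.needs_feedback else "ok")
# batch-01 25% flag
# batch-02 0% ok
```

Keeping the disagreeing pairs (here, "car" labeled where the reviewer saw "truck") is what turns a raw error rate into concrete material for the next round of guidelines.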

Tooling plays a role as well. Without the proper tools for drawing bounding boxes or placing key points, annotators may not even be able to complete the task at hand. Setting them up for success means fewer corrections later. It also means the data science team must do the work beforehand to identify the best tools; again, it isn’t reasonable to expect distributed annotation teams to know that themselves.
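
Tooling can also catch some mistakes before they ever reach a reviewer. As a hypothetical example, the check below validates a single bounding box, assuming pixel coordinates with the origin at the top-left and boxes stored as `(x_min, y_min, x_max, y_max)`. The `Box` type, the `validate_box` function, and the label set are illustrative, not part of any particular annotation tool.

```python
from typing import NamedTuple


class Box(NamedTuple):
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def validate_box(box, image_width, image_height, allowed_labels):
    """Return a list of problems found in one bounding box (empty if none)."""
    problems = []
    # The label must come from the project's agreed label set.
    if box.label not in allowed_labels:
        problems.append(f"unknown label {box.label!r}")
    # A degenerate box (zero width or height) is almost always a slip of the mouse.
    if box.x_max <= box.x_min or box.y_max <= box.y_min:
        problems.append("box has zero or negative area")
    # Boxes must stay inside the image.
    if box.x_min < 0 or box.y_min < 0 or box.x_max > image_width or box.y_max > image_height:
        problems.append("box extends outside the image")
    return problems


allowed = {"car", "person"}
print(validate_box(Box("car", 10, 20, 110, 80), 640, 480, allowed))  # []
# Three problems: unknown label, zero area, and outside the image.
print(validate_box(Box("cat", 600, 20, 700, 20), 640, 480, allowed))
```

Rejecting such boxes at submission time keeps trivially wrong annotations out of the batches that reviewers sample.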

Quality Control in Action

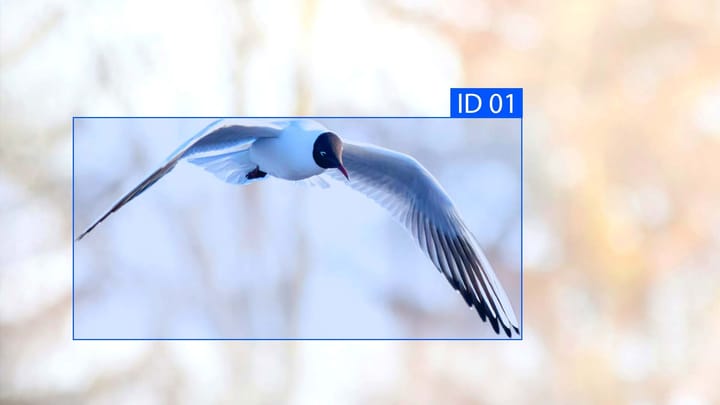

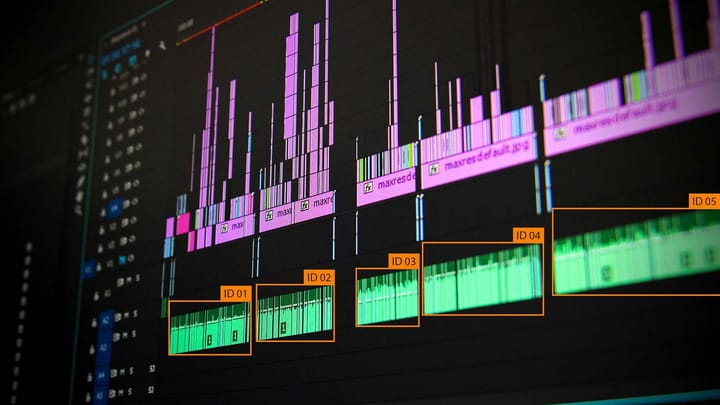

Data annotation can take many forms. For example, videos are annotated differently from images, and images containing many objects are annotated differently from images with a single object.

Finding the tools and practices best suited to each of these use cases can determine whether the resulting training data is usable at all. Quality control in annotation requires a clear understanding of what data you’ll receive and which tools handle it best. More importantly, that understanding must be shared across your team.

High error rates in annotated data can cause significant problems for your algorithms. This is made more challenging when distributed teams are tasked with creating these annotations. More than anything, quality control is a function of how well a team is being led toward a goal. As with any team, the secret sauce will be communication and feedback. Planning this can make all the difference for your data project.