Mastering Data Annotation: Best Practices & Tips

We all know that data annotation is a crucial step in machine learning and AI projects, as it helps ML models interpret and make accurate predictions. However, without proper annotation, these models struggle to perform effectively. To ensure the success of AI projects, it's essential to implement the best practices and tips for managing data annotation.

Let's dive into the key considerations and guidelines for mastering data annotation. From defining project goals to selecting the right data annotation tool, preparing a diverse dataset, establishing clear guidelines, and ensuring quality assurance, we will cover everything you need to know to enhance the performance of your ML models.

By following the best practices outlined in this article, you will improve the accuracy and efficiency of your ML models, unlock the real value of AI for your organization, and stay ahead in the rapidly evolving world of artificial intelligence.

The Basis of a Data Annotation Project

In order to ensure the success of a data annotation project, several key phases must be carefully considered and executed. These include defining the overall project goals, determining the type of data needed, and choosing the right data annotation tool. By adhering to best practices and guidelines, organizations can create a robust framework for their annotation projects.

Defining Project Goals

Before embarking on a data annotation project, it is crucial to clearly define the project goals. This involves understanding the specific requirements and objectives that the annotated data should fulfill. Whether it's training a machine learning model or conducting research, having well-defined project goals ensures that the annotation process remains focused and on track.

Determining Data Needs

An important step in the data annotation project is determining the type of data that needs to be annotated. This involves identifying the specific attributes, labels, or features that are required for the project. By establishing clear guidelines for data collection, organizations can ensure that the annotated data meets the necessary criteria for training or analysis.

Choosing the Right Annotation Tool

The selection of a suitable data annotation tool is crucial for the success of the project. The tool should align with the project goals, data type, and annotation requirements. Additionally, considerations should be given to the tool's user interface (UI) and user experience (UX), as these factors contribute to the overall efficiency and effectiveness of the annotation process. Integration capabilities with other data management systems and project management tools should also be taken into account.

Guidelines and Quality Assurance

Clear guidelines are essential to ensure consistency and accuracy in the annotation process. These guidelines provide instructions and standards that the workforce should adhere to during the annotation tasks. Regular feedback and communication with the workforce enable continuous improvement and address any ambiguities or challenges that may arise. Implementing a strict quality assurance process, such as conducting regular reviews and spot checks, helps maintain the overall quality of the annotations and minimize errors.

Data Privacy Considerations

When selecting an annotation platform, data privacy should be a significant consideration. Organizations must ensure that the platform complies with relevant data protection regulations and provides adequate security measures. This includes data encryption, access controls, and proper handling or anonymization of sensitive information. By prioritizing data privacy, organizations can mitigate potential risks and maintain the confidentiality of their data.

By following these best practices and guidelines, organizations can establish a solid foundation for their data annotation projects. This allows for the creation of high-quality annotated datasets, ultimately contributing to the success of AI projects and the generation of real value for the organization.

Preparing the Dataset for Annotation

Before annotation can occur, it's crucial to prepare a diverse dataset. While quantity is important, diversity is equally essential. A diverse dataset ensures that the ML model covers all possible variations and edge cases, enabling it to perform well in real-world scenarios.

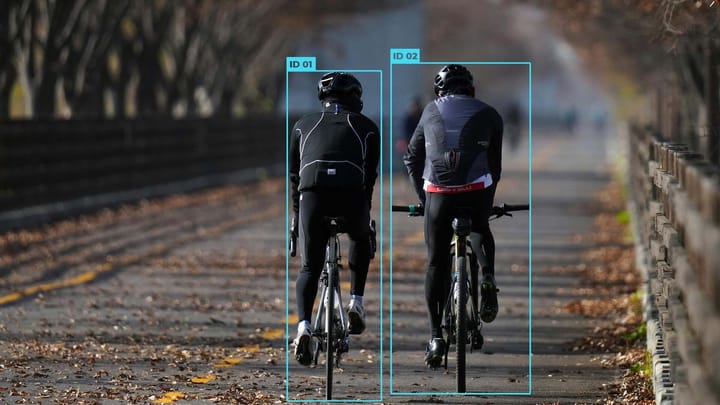

Dataset preparation involves including different types of data that reflects the diversity of the target domain. For example, if the ML model aims to identify people crossing the street, the dataset should encompass various aspects such as:

- People: Include individuals of different genders, ages, body types, and ethnicities to ensure the model can accurately detect pedestrians from all demographics.

- Weather Conditions: Cover a wide range of weather conditions such as sunny, rainy, foggy, or snowy, as these factors significantly impact pedestrian behavior and appearance.

- Dress Codes: Incorporate different attire options, including formal wear, casual clothing, and attire representative of diverse cultural backgrounds, to prevent the model from developing biases based on specific clothing preferences.

- Other Variables: Consider additional variables that could affect the model's performance, such as different hairstyles, accessories, mobility aids, and carrying items like bags or umbrellas.

By ensuring a diverse dataset, you minimize bias and provide the ML model with a comprehensive understanding of the target domain. This enables the model to make accurate predictions and generalizations in real-world scenarios, where diversity and edge cases are common.

Addressing Bias and Edge Cases

One of the primary aims of dataset preparation is to address bias and include edge cases. Bias can emerge if the dataset predominantly consists of certain demographic groups, leading to inaccurate and unfair predictions. Including a diverse range of individuals and scenarios prevents the model from favoring any specific group, contributing to fair and equitable outcomes.

Moreover, edge cases are essential as they represent scenarios that occur less frequently but are crucial for maintaining model accuracy. These edge cases might include individuals with unique physical attributes, uncommon weather conditions, or particular pedestrian behaviors that the model should be able to recognize and handle appropriately.

By consciously preparing the dataset to include diversity and edge cases, organizations can ensure that their ML models perform robustly and ethically, catering to the complexities and nuances of the real world.

Selecting and Leveraging a Data Annotation Tool

Choosing the right data annotation tool plays a significant role in the success of your project. It is crucial to consider various factors, including data privacy and access by the workforce, ease of integration into your existing infrastructure, desired UX/UI interface, and effective project management tools. By selecting a tool that aligns with your specific requirements, you can ensure efficient communication and collaboration between all stakeholders involved in the annotation process.

When evaluating potential data annotation tools, prioritize platforms that prioritize data privacy. Protecting sensitive data is critical, particularly when working with third-party annotation service providers. Ensure that the tool you choose complies with relevant data protection regulations and offers robust security measures to safeguard your valuable data.

Seamless integration into your existing infrastructure is vital to minimize disruptions to your workflow. Look for a data annotation tool that supports easy integration with your existing systems and workflows, providing a smooth transition into the annotation phase. This will help streamline the overall project timeline and enhance the efficiency of the annotation process.

The UX/UI interface of the data annotation tool is crucial for the productivity and satisfaction of the workforce. A user-friendly interface with intuitive navigation and clear instructions can significantly improve the accuracy and speed of annotations. Look for tools that prioritize a user-centric design to ensure a positive experience for your annotation team.

Efficient project management tools within the data annotation platform can simplify coordination and collaboration. Features such as task assignment, progress tracking, and real-time communication can enhance the overall efficiency and organization of your annotation project. Consider the collaboration features offered by different tools to identify the one that best suits your project requirements.

By carefully selecting and leveraging a suitable data annotation tool, you can enhance the effectiveness of your annotation project, ensuring smooth collaboration, streamlined workflows, and optimal results.

Defining Guidelines and Ensuring Quality Assurance

To maintain high-quality annotations, clear and consistent guidelines must be defined and communicated to the workforce. These guidelines serve as a roadmap for annotators, ensuring that their annotations align with the project's objectives. Furthermore, these guidelines may need to be updated and shared as the project progresses to reflect any changes or refinements in the annotation process.

Feedback plays a crucial role in enhancing the quality of annotations. Creating an environment where the workforce feels comfortable asking questions and providing feedback fosters collaborative learning and improvement. An ongoing feedback loop allows for continuous refinement and optimization of the annotation process, leading to improved accuracy and relevance of annotations.

Quality assurance is an essential component of any data annotation project. Implementing a robust quality assurance process is key to detecting and resolving any inconsistencies in the annotations. Consensus metrics, which measure the level of agreement among annotators, can be used to ensure annotations are accurate and reliable. Honeypot metrics can also be employed to identify annotators who may not be adhering to the guidelines, enabling timely corrective measures to maintain data integrity.

By defining clear guidelines, encouraging feedback, and implementing a rigorous quality assurance process, data annotation projects can ensure the production of high-quality annotations that are reliable, consistent, and aligned with the project's objectives.

Iterating on Data Quality for Continuous Improvement

Continuous monitoring and analysis of model outputs play a crucial role in optimizing the performance of machine learning models. By identifying areas where the model underperforms, organizations can make strategic adjustments to the training dataset, improving data quality, and ultimately enhancing the model's performance. This iterative process of refining the training dataset is essential for achieving accurate predictions and achieving desired outcomes.

One key aspect of improving data quality is iterating on the number of assets to annotate. However, it’s crucial to clarify that both quantity and diversity are important for data annotation, but in different ways. A larger dataset can improve the overall accuracy of the model, but if it lacks diversity, the model may perform poorly in real-world scenarios that it has not been exposed to. Organizations must consider striking the right balance between the size of the dataset and the resources available for annotation. By optimizing the number of assets to annotate, organizations can allocate their resources effectively and ensure higher quality annotations.

The percentage of consensus is another parameter that can be iterated upon to enhance data quality. Increasing the level of consensus required among annotators can improve the reliability of annotations and reduce the chances of errors. By establishing clear guidelines and providing regular feedback to annotators, organizations can foster a culture of collaboration and ensure consistent annotations.

The composition of the dataset also plays a crucial role in data quality. Organizations should carefully curate the dataset to include diverse examples that capture the variability and complexity of real-world scenarios. This can help in training the model to handle a wide range of situations and improve its generalization capabilities.

Another aspect to consider is iterating on the guidelines given to the workforce. As the annotation process progresses, organizations may discover the need to refine or expand the guidelines to address specific challenges or to improve the overall quality of the annotations. Regular communication with the workforce and incorporating their feedback can lead to better guidelines that enhance data quality.

Enriching the dataset based on real-world parameters is also crucial for continuous improvement. By incorporating relevant real-world factors into the dataset, such as variations in lighting conditions, perspectives, or other external factors, organizations can ensure that the model is robust and performs well in different scenarios.

Implementing lean and agile management principles can greatly facilitate the iterative process of improving data quality. By adopting a cyclical approach of testing, learning, and refining, organizations can continuously enhance the performance of their machine learning models. Leveraging feedback loops, collaborating closely with the workforce, and prioritizing continuous improvement can drive efficiency and effectiveness in data annotation projects.

Tracking Data Quality Metrics

Conclusion

Data annotation is an essential process for the development of machine learning (ML) models in AI projects. By implementing best practices in data annotation, organizations can improve the accuracy and efficiency of their ML models and create real value for their operations. Following these practices is crucial for staying at the forefront of AI innovation.

Through meticulous dataset preparation, clear guidelines, and a rigorous quality assurance process, organizations can enhance the performance of their ML models. By diversifying the dataset and including a wide range of edge cases, bias can be minimized, ensuring better performance in real-world scenarios. The selection of a suitable data annotation tool, one that prioritizes data privacy, integration capabilities, and user experience, is pivotal in streamlining the data annotation workflow.

Continuous improvement and iteration are key elements for optimizing data quality and model performance. Through frequent monitoring and analysis of ML model outputs, organizations can fine-tune their training datasets, enabling the model to deliver more accurate results. By integrating lean and agile management principles, organizations can maintain relevance and efficiency in their AI projects, driving value creation and staying ahead in the rapidly evolving landscape of AI.

FAQ

What is data annotation?

Data annotation is a critical step in machine learning and AI projects. It involves labeling or tagging data to provide the necessary context for training ML models to make accurate predictions.

Why is data annotation important?

Data annotation is important because ML models rely on properly annotated data to interpret and understand patterns. Without accurate annotations, models struggle to make accurate predictions, leading to poor performance and reduced efficiency.

What are the challenges of dataset quality in ML projects?

Dataset quality is a significant challenge in ML projects. In fact, about 80% of ML projects never reach deployment due to issues with dataset quality. Poor annotation, insufficient diversity, and bias in the dataset can all hinder model performance and limit real-world applicability.

How should a diverse dataset be prepared for annotation?

Before annotation, it's crucial to prepare a diverse dataset that covers all possible variations and edge cases. This ensures that ML models are trained to recognize and perform well in real-world scenarios. Quantity is not as important as diversity in dataset preparation.

What factors should be considered when selecting a data annotation tool?

When selecting a data annotation tool, factors such as data privacy, ease of integration into existing infrastructure, user interface, project management tools, and efficient communication and collaboration capabilities should be considered.

How can annotation guidelines be defined and enforced?

Annotation guidelines should be clear and consistent, defining the expectations and requirements for the workforce. Regular feedback and communication should be provided, and a strict quality assurance process, such as employing consensus or honeypot metrics, can be implemented to ensure the annotations are accurate and consistent.

What role does continuous monitoring play in improving ML model performance?

Continuous monitoring and analysis of model outputs are crucial for identifying areas where the ML model underperforms. By making strategic adjustments to the training dataset, including the number of assets annotated, consensus percentage, dataset composition, and guidelines given to the workforce, the model's performance can be improved, reducing redundancy and ensuring its relevance.

Comments ()