Localized Model Tagging: On-the-Fly AI Personalization

Context-aware tuning allows algorithms to "learn" in specific environments. It builds on empirical validation techniques first developed in visual perception research. Just as the human eye processes glare and contrast simultaneously, modern systems mimic optical responses through luminance mapping. This technique, originally developed for high dynamic range displays, now supports more intelligent decision-making in voice recognition and predictive analytics.

Three innovations make this transformation possible: parametric activation functions, real-time bias calibration, and multi-layer validation. Together they enable precision adaptation: systems that maintain core functionality while optimizing their output for specific use cases. Examples include more reliable speech recognition in noisy factories and improved medical imaging analysis.

Key Takeaways

- Dynamic activation layers improve accuracy in benchmarking tests.

- Real-time parameter settings can be optimized based on context.

- Luminance mapping methods adapted from visual science improve image recognition.

- Hybrid validation methods ensure robustness across environments.

Basics of Local Model Adaptation

Local model adaptation means customizing a ready-made AI model that runs on a company's own infrastructure to meet the specific needs of a business or domain. It is a form of localized adaptation: the model is adjusted without complete retraining.

This process involves three main mechanisms:

- Adaptation signals. Data patterns that trigger system recalibration.

- Detection thresholds. The minimum variation in input data that requires a response.

- Brightness mapping. Modeling optical responses to brightness gradients.
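The first two mechanisms can be sketched in a few lines. This is a minimal illustration, not the implementation of any particular system: the `DriftMonitor` class, its fields, and the numeric values are all hypothetical. An input that deviates from the running baseline by at least the detection threshold is treated as an adaptation signal and triggers a partial recalibration.

```python
from dataclasses import dataclass


@dataclass
class DriftMonitor:
    """Tracks a running baseline and flags inputs that drift past a threshold."""
    baseline: float      # current reference level (hypothetical unit)
    threshold: float     # detection threshold: minimum deviation that needs a response
    rate: float = 0.5    # fraction of the gap closed on each recalibration

    def observe(self, value: float) -> bool:
        """Return True if the value acted as an adaptation signal."""
        deviation = abs(value - self.baseline)
        if deviation < self.threshold:
            return False  # below the threshold: no response needed
        # Recalibrate: move the baseline part of the way toward the new value.
        self.baseline += self.rate * (value - self.baseline)
        return True


monitor = DriftMonitor(baseline=100.0, threshold=20.0)
readings = [105.0, 98.0, 140.0, 135.0]
triggered = [monitor.observe(r) for r in readings]
print(triggered, monitor.baseline)
```

Only the third reading crosses the threshold here, so the baseline moves once. The partial update (`rate`) is one possible design choice; it keeps a single extreme reading from fully overwriting the baseline.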

Processing Steps in Spatial Adaptation

This workflow is particularly effective in automotive displays and augmented reality interfaces where rapid lighting changes occur.

Innovative approaches to local adaptation

One method uses genomic bias estimates to determine optimal combinations of parameters. By analyzing the distribution of historical data, the framework predicts which adjustments give the maximum predictive gain. Compared to traditional methods, it reduces trial-and-error cycles.

Optimization of experimental stimuli

Adaptive sampling protocols that mirror natural selection processes allow test scenarios to be adjusted dynamically based on real-time performance indicators. Key features include:

- Environmental locus mapping to prioritize critical variables.

- Demographic history simulations to stress-test the robustness of parameters.

- FST score tracking to verify the effectiveness of adjustments.

With this methodology, laboratory predictions correlated highly with field performance in recent automotive demonstration trials.

Optimizing HDR Display Performance

High Dynamic Range (HDR) technology lets displays show more detail in the bright and dark areas of a frame. Effective HDR implementation depends not only on the characteristics of the panel but also on precise control of contrast, brightness, and color coverage.

Modern approaches use machine learning to analyze dynamic scenes and adaptively adjust tone mapping. Such algorithms identify critical areas of the frame, such as faces, light sources, and glare, and locally adjust brightness to maintain a balance between detail and emotional perception. Quick labeling reduces the number of iterations through dynamic selection of test scenarios. Display calibration, ambient lighting, and content type (movies, games, documents) are also taken into account.

Modern AI models can adapt the color palette and contrast in real time, which increases the user's visual comfort.

Optimizing HDR displays with artificial intelligence allows you to go beyond standard settings and implement personalized image quality that changes with the content and user environment.

Eye-Dependent Tone Mapping Analytics

Tone mapping converts the wide luminance range of HDR content into a form suitable for displays with limited capabilities. Typically, algorithms for this conversion work globally or locally but do not consider where the user is looking at a particular moment. This is where eye-dependent tone mapping analytics, which combines eye tracking and deep image processing, comes in.
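To make the basic conversion concrete, here is a minimal sketch of a global tone mapping operator. The article does not name a specific operator, so this example assumes Reinhard's classic global curve, L / (1 + L); the function names and the `exposure` parameter are illustrative only.

```python
def reinhard_tone_map(luminance: float) -> float:
    """Compress scene luminance in [0, inf) into the display range [0, 1)."""
    if luminance < 0:
        raise ValueError("luminance must be non-negative")
    return luminance / (1.0 + luminance)


def tone_map_row(row, exposure=1.0):
    """Apply the same curve to every pixel: this is what makes the operator global."""
    return [reinhard_tone_map(exposure * value) for value in row]


hdr_row = [0.0, 1.0, 3.0, 99.0]   # relative scene luminances (made-up values)
ldr_row = tone_map_row(hdr_row)
print(ldr_row)
```

Because every pixel passes through the same curve regardless of position, bright values are compressed strongly while dark values are nearly preserved. A gaze-dependent method would vary the treatment across the frame instead.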

The idea behind this method is that the human eye perceives different parts of a scene with varying levels of accuracy. The central area of vision is most sensitive to detail and color, while peripheral vision is less picky about contrast. The system uses eye sensors to determine the user's focus point in real time and adjusts the tone mapping in that area. This enhances local contrast, detail, and lighting dynamics.

The analytics module also considers the scene's context: whether it is a face, a prominent object, or a moving element. Beyond the line of sight, the algorithm can reduce resource-intensive calculations, optimizing system performance and energy consumption. This approach improves visual comfort, adapting content to each user's perception.
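The gaze-dependent adjustment described above can be sketched as a contrast gain that is strongest at the fixation point and fades toward the periphery. This is an illustration under stated assumptions, not the method of any specific product: the Gaussian falloff, the `sigma` radius in pixels, the `max_gain` value, and all function names are hypothetical choices.

```python
import math


def gaze_weight(x, y, gaze_x, gaze_y, sigma=50.0):
    """Weight in (0, 1]: 1 at the gaze point, decaying with distance (Gaussian)."""
    d2 = (x - gaze_x) ** 2 + (y - gaze_y) ** 2
    return math.exp(-d2 / (2.0 * sigma ** 2))


def contrast_gain(x, y, gaze_x, gaze_y, max_gain=1.5, sigma=50.0):
    """Local contrast gain: max_gain at fixation, close to 1.0 far in the periphery."""
    return 1.0 + (max_gain - 1.0) * gaze_weight(x, y, gaze_x, gaze_y, sigma)


def apply_gain(value, local_mean, gain):
    """Scale a pixel's deviation from its local mean, then clamp to [0, 1]."""
    return min(1.0, max(0.0, local_mean + gain * (value - local_mean)))


gaze = (320, 240)                                  # fixation point from an eye tracker
at_gaze = contrast_gain(320, 240, *gaze)           # pixel under the gaze
periphery = contrast_gain(0, 0, *gaze)             # pixel far from the gaze
boosted = apply_gain(0.6, 0.5, at_gaze)
print(at_gaze, periphery, boosted)
```

A system could also skip the local-mean computation entirely wherever the weight falls below some cutoff, which is one way to realize the compute savings outside the gaze region.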

Personalized federated learning for AI adaptation

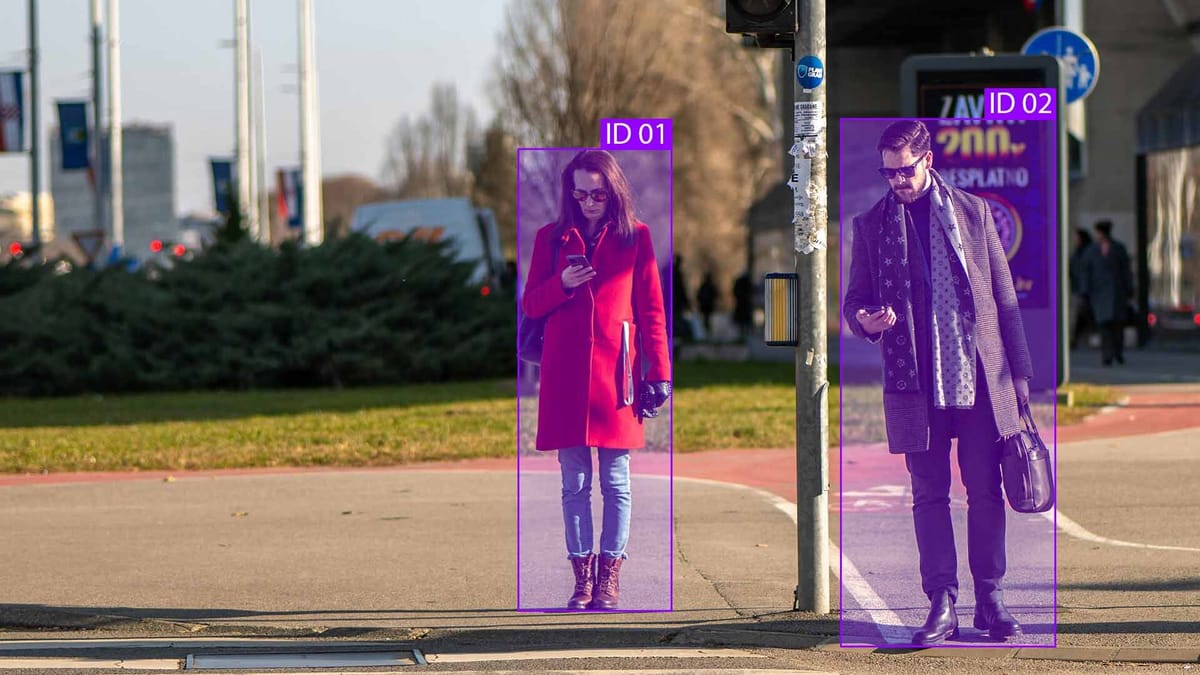

Personalized AI systems stay accurate through local updates, even in environments with limited access to data. Personalized federated learning lets an AI model update shared weights based on distributed data while accounting for each device's or user's individual characteristics. As a result, the model adapts to a specific environment, usage style, regional language variants, or behavioral patterns. This approach is practical for systems that interact with people: virtual assistants, medical diagnostic models, recommendation systems, or autopilots.

The infrastructure of personalized federated learning includes a coordination server that distributes global updates, along with local filtering and optimization mechanisms for the models. To avoid overfitting to a single node, it relies on adaptive aggregation of updates and multi-level regularization that considers both global and individual goals.
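The interplay between server-side aggregation and regularized local updates can be sketched as follows. This is a simplified illustration, not the article's specific method: the aggregation shown is a plain sample-weighted average in the style of federated averaging rather than an adaptive scheme, and the regularizer is a proximal penalty that pulls local weights toward the global ones. The function names and the `lr` and `mu` values are assumptions.

```python
def aggregate(client_weights, client_sizes):
    """Server side: average client weight vectors, weighted by local sample count."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


def local_step(local_w, global_w, grad, lr=0.1, mu=0.5):
    """Client side: one gradient step with a proximal penalty (mu/2)*||w - global||^2.

    The penalty's gradient, mu * (w - global), keeps the personalized weights
    from drifting too far from the shared model.
    """
    return [
        w - lr * (g + mu * (w - gw))
        for w, gw, g in zip(local_w, global_w, grad)
    ]


# Two clients with 1 and 3 local samples respectively (made-up numbers).
global_w = aggregate([[1.0, 2.0], [3.0, 4.0]], [1, 3])

# With a zero task gradient, the step only pulls the local weight toward the global one.
personal_w = local_step([2.0], [1.0], [0.0])
print(global_w, personal_w)
```

Raising `mu` favors the global goal and lowering it favors the individual one, which is one simple way to express the balance between the two.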

Thus, personalized federated learning is a path to creating ethical, safe, and effective AI systems that better understand their users.

Comparing local and global adaptation methods

There are two main approaches to adaptation: local and global.

Local adaptation focuses on fine-tuning individual components or segments of the model. It works at the level of specific data areas or tasks, which lets the model adapt to the nuances of the local context. This approach suits cases where changes in the data are selective or partial. Because it makes limited adjustments, local adaptation requires fewer computational resources and reduces the risk of overfitting. However, it does not consider global trends in the dataset, which can affect the overall consistency of the AI model.

Global adaptation involves retraining or fundamentally changing the parameters of the entire model or its large blocks. It is suitable when changes in the input data or conditions are large-scale and require a comprehensive revision. Global adaptation aligns the AI model more closely with new conditions, but it requires significant computational resources and time. There is also a risk of losing important information acquired during initial training, and a greater risk of overfitting.

The optimal approach is to combine both methods. Local adaptation helps quickly and effectively account for the specifics of new data, while global adaptation provides a long-term and deep restructuring of the AI model.
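The difference between the two approaches can be shown with a toy parameter update. In this sketch, which is illustrative only, a model is a dictionary of named parameter groups; the group names `backbone` and `head`, the gradient values, and the `adapt` helper are all hypothetical. Local adaptation updates only the selected groups and leaves the rest frozen, while global adaptation updates everything.

```python
def adapt(params, grads, lr, trainable=None):
    """Take one gradient step on the groups named in `trainable` (all groups if None)."""
    updated = {}
    for name, values in params.items():
        if trainable is None or name in trainable:
            updated[name] = [v - lr * g for v, g in zip(values, grads[name])]
        else:
            updated[name] = list(values)  # frozen group: copied unchanged
    return updated


params = {"backbone": [1.0, 2.0], "head": [0.5]}
grads = {"backbone": [0.2, 0.2], "head": [1.0]}

local_result = adapt(params, grads, lr=0.1, trainable={"head"})   # local adaptation
global_result = adapt(params, grads, lr=0.1)                      # global adaptation
print(local_result)
print(global_result)
```

In the local case the backbone keeps what it learned during initial training, which is why the risk of losing that information is lower; the cost is that the frozen part cannot follow large-scale shifts in the data.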

Impact of Data Distribution and Heterogeneity

AI systems fail when faced with uneven data landscapes. Three factors exacerbate distribution problems:

- Regional differences in data collection protocols.

- Unbalanced representation of classes across devices.

- Inconsistency in feature spaces between global and local datasets.

Technical teams combat these problems with:

- Adaptive sampling strategies that prioritize outliers.

- Contrast-aware regularization that supports feature alignment.

- Multi-source validation protocols.
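As one example of the first strategy, sampling weights can be made proportional to how far each data point lies from the mean. This is a minimal sketch under stated assumptions: the z-score-based weighting, the `floor` parameter that keeps typical points from being ignored entirely, and the function name are illustrative choices, not a method named in the article.

```python
import random
import statistics


def outlier_sampling_weights(values, floor=0.1):
    """Return normalized sampling weights that grow with distance from the mean."""
    mean = statistics.fmean(values)
    stdev = statistics.pstdev(values)
    if stdev == 0:
        # All values identical: nothing to prioritize, so sample uniformly.
        return [1.0 / len(values)] * len(values)
    raw = [floor + abs(v - mean) / stdev for v in values]
    total = sum(raw)
    return [r / total for r in raw]


values = [10.0, 10.0, 10.0, 10.0, 30.0]   # made-up readings with one outlier
weights = outlier_sampling_weights(values)

# Draw a training batch in which the outlier is over-represented.
batch = random.choices(values, weights=weights, k=8)
print(weights)
```

The outlier receives the largest single weight while the common values still appear in most batches, so the model sees rare conditions more often without losing sight of the typical case.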

Interdisciplinary perspectives on adaptation

Adaptation spans various disciplines and practices, from biology to information technology, social sciences to engineering. An interdisciplinary approach to studying adaptation offers new opportunities for better understanding complex systems and developing effective strategies in the face of constant change. This approach is based on integrating theoretical knowledge and methods from different disciplines.

Interdisciplinary analysis combines biological principles with computer models of AI to create adaptive artificial intelligence systems that independently change their parameters according to the context of use. Integrating social and technical sciences also helps to predict the impact of technologies on society better and develop more responsible and ethical solutions.

FAQ

How does personalized AI handle real-time environmental changes?

Personalized AI analyzes real-time sensor and context data to detect environmental changes quickly.

What differentiates regional AI from global approaches?

Regional AI maximizes relevance by considering local cultural, linguistic, and regulatory contexts. Global approaches focus on one-size-fits-all models that may not consider local nuances and context.

What techniques can improve the performance of HDR displays?

Two techniques help: dynamic tone mapping, which optimizes brightness and contrast in real time, and halo compensation algorithms, which reduce the blurring around bright objects.

What hardware limitations affect localized AI deployment?

Limited memory and processing power can constrain the effectiveness of localized AI deployment. Limited network bandwidth also matters, because it affects the speed of real-time data processing.

What are the prospects for interdisciplinary adaptation?

The prospects for interdisciplinary adaptation lie in expanding the boundaries of traditional approaches, finding new tools and methodologies, and being able to respond quickly to the complex challenges of our time.
