LLM leaderboard 2026

A few years ago, industry leaders competed on text-generation performance, but today's models demonstrate logical reasoning and mathematical computation to comprehensively understand multimodal data. The annual rankings are based on performance metrics in tests such as LMArena, GPQA, SWE-Bench, and MMLU. This allows for an objective comparison of models based on speed, accuracy, ability to solve complex tasks, and effectiveness in real-world scenarios.

There is growing interest in local and specialized LLMs that are optimized for language or industry requirements. In this review, we present a current list of LLM leaders 2026, analyzing their key advantages, limitations, and results on leading benchmarks.

How LLM rankings are formed

The 2026 assessment of large language models is based on a set of specialized benchmarks that measure distinct aspects of the models' intellectual performance. Some tests focus on human preferences and the quality of responses in a dialogue. In contrast, others focus on the ability to solve scientific and programming problems that are closely aligned with real-world use cases.

These benchmarks demonstrate the capabilities of LLM in engineering, research, and business scenarios.

Key LLM benchmarks and what they measure

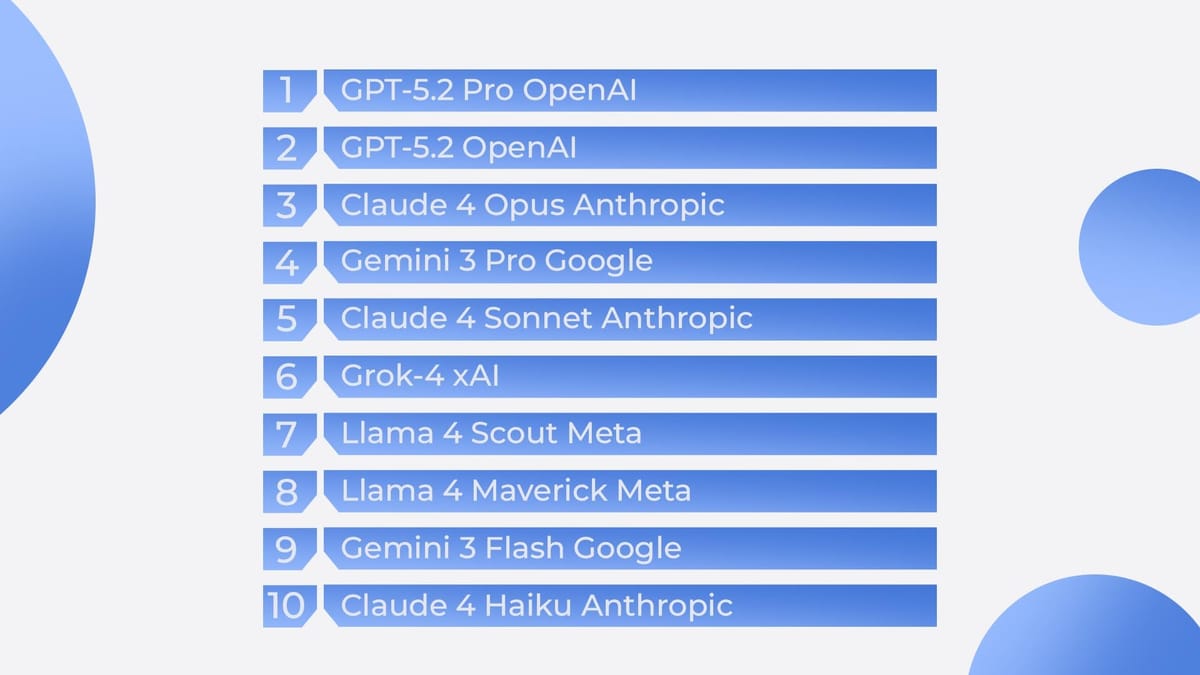

LLM leaders 2026 model leaderboards and strengths

The 2026 Large Language Model leaderboard rankings are based on current leaderboard data and comparisons of the best AI models. These rankings take into account overall performance, complex reasoning, contextual memory, speed, and real-world use cases for working with text, code, and multimodal data.

Limitations and solutions for LLM in 2026

Despite the advances in 2026, large language models (LLMs) still have several limitations. Current models perform well in tests and benchmarks, but they are not a one-size-fits-all solution. Key challenges include:

- Context limitations. Even large context windows have limits, and when working with very long documents or data streams, the model's performance degrades.

- Hallucination susceptibility. Models generate facts or data that do not exist in the real world.

- Vulnerability to bias. Models can reproduce or reinforce stereotypes present in the training data.

- Computing requirements. LLMs require substantial memory, processing power, and time to train and generate.

- Multimodal limitations. Not all models are effective with text, code, images, or audio simultaneously.

Ways to address these issues

Choosing the right LLM for different use cases

Choosing a large language model (LLM) requires a careful performance comparison to match capabilities with use cases. Some LLMs are optimized for natural text generation, others for data encoding, and others for working with long contexts and large volumes of information.

For business analytics and document processing, models with large contextual memory and factual accuracy are chosen. For example, GPT‑5.2 Pro or Gemini 3 Pro. They can summarize large documents, create reports, and make recommendations.

For code generation and developer support, models that demonstrate high performance in SWE-Bench, HumanEval, or MBPP are suitable. For example, Claude 4 Sonnet or GPT‑5.2. They handle algorithmic tasks, fix bugs, write functions, and integrate with IDEs and CI/CD pipelines.

For multimodal scenarios where it is important to process text, images, and other data simultaneously, use Gemini 3 Pro or Llama 4 Maverick. These models support cross-modal embeddings and are optimized for generating image descriptions, analyzing diagrams, and supporting interactive dialog with multimodal input data.

For fast, resource-intensive solutions that require optimizing response time and computing resources, models such as Gemini 3 Flash or Claude 4 Haiku are suitable. They provide fast output and relatively low computing costs.

The right choice of LLM depends on the balance between performance, resources, and specific tasks. Using models in the areas for which they were optimized enables practical application of modern LLMs in business, science, and technology.

FAQ

What are the main benchmarks for evaluating LLM models?

The main benchmarks evaluate LLM performance in logical reasoning, code generation, multidisciplinary knowledge, and multimodal tasks, examples are LMArena, GPQA, SWE-Bench, and MMLU.

Which models are in the top LLMs of 2026?

The top models of 2026 include GPT‑5.2 Pro, GPT‑5.2, Claude 4 Opus, Gemini 3 Pro, Claude 4 Sonnet, Grok‑4, Llama 4 Scout, Llama 4 Maverick, Gemini 3 Flash, and Claude 4 Haiku.

What are the main drawbacks of LLM models?

The main drawbacks of LLMs include limited context, hallucinations, bias, high computational requirements, and limited multimodality.

What are the solutions to the main problems of LLM models?

LLM problems are solved through context partitioning, RAG and fact-checking, bias mitigation, model distillation, LoRA tuning, and multimodal learning strategies.

In what areas are LLM models currently used?

LLM models are used in business analytics, text and code generation, scientific research, multimodal systems, and automation of routine tasks.

Comments ()