Labeling in the Data Annotation Process

Machine learning has become one of the most important areas of technological development in recent years. Companies have seen significant growth by using statistical methods to train algorithms. However, supervised machine learning algorithms still need human input to operate properly. Data annotation is the process of labeling training datasets so that these algorithms can learn from them.

The terms “data annotation” and “data labeling” functionally describe the same thing. There are many forms this process can take. It depends on the tools being used and the desired outcome of the data science project being undertaken. The kind of data being labeled will also play a major role in this. How will data labeling work for your data science project?

As in any data science project, labeling works at the intersection of statistical methods and human input. The goal is always to prepare the machine learning algorithm to identify inputs it has not seen before. The training and test datasets must contain the proper labels for this to happen. Training data can be very messy: the photo or video data being labeled often has inconsistencies or ambiguity. Labels resolve that ambiguity and give data scientists and algorithms a common, machine-readable language.

The labeling process itself can also take many forms. Often, distributed teams are tasked with providing labels, while data scientists provide the parameters for how data should be labeled. The need for standardization in how data is labeled means that these teams must work closely together.

What does data labeling look like?

What data labeling looks like is generally determined by the kind of data being labeled. The ways that algorithms process those data types can also vary significantly. Some of the most common forms of training data are:

- Image data

- Video data

- Audio data

- Geospatial data

- Text data

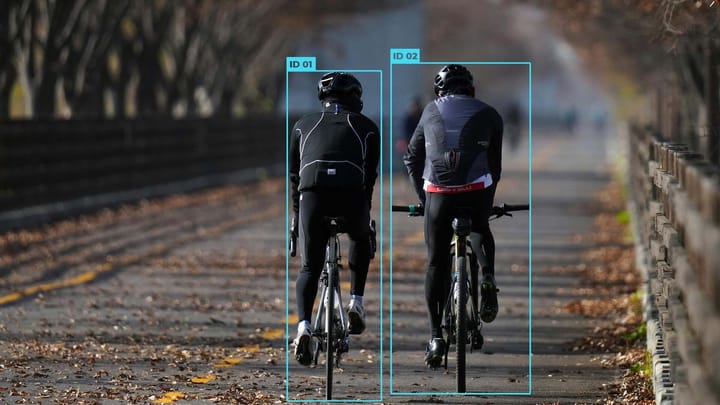

Image data is most commonly used to train computer vision algorithms, which try to understand the contents of input images. In the labeling process, you’ll either need to categorize the image as a whole or create “bounding boxes” to identify items within an image. Bounding boxes are a widespread tool in the task of “object detection.” Many technologies rely on the ability of computer vision algorithms to identify the contents of a photo.
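As a rough illustration of the two labeling styles described above, the sketch below stores a whole-image category and a list of bounding boxes for one image. The class names, field names, and file name are hypothetical and chosen for this example; real labeling tools each define their own schema.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class BoundingBox:
    """Axis-aligned box in pixel coordinates (hypothetical schema)."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        # Clamp at zero so a malformed box never reports a negative area.
        width = max(0.0, self.x_max - self.x_min)
        height = max(0.0, self.y_max - self.y_min)
        return width * height


@dataclass
class ImageAnnotation:
    """Labels for one image: a whole-image category and/or object boxes."""
    image_path: str
    image_label: Optional[str] = None  # classification of the image as a whole
    boxes: List[BoundingBox] = field(default_factory=list)  # object detection labels


# Example record; the file name and labels are made up for illustration.
annotation = ImageAnnotation(
    image_path="street_001.jpg",
    image_label="street_scene",
    boxes=[
        BoundingBox("car", 40, 120, 220, 260),
        BoundingBox("pedestrian", 300, 90, 350, 240),
    ],
)
```

Keeping the whole-image label and the boxes in one record makes it easy to use the same dataset for both classification and object detection.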

Video data overlaps heavily with image data, and its application to computer vision algorithms is almost the same. The time dimension, however, can add new layers of complexity to the labeling process. Instead of just creating a bounding box around an item, it might be necessary to track the movement of that object as well. Doing this frame by frame may require the use of other algorithms, so labeling video data often must be computer assisted.
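One simple form of computer assistance, shown here only as a sketch, is to have a person draw boxes on a few keyframes and fill in the frames between them by linear interpolation. This technique is an assumption for illustration; the article does not name a specific tracking method, and the function name `interpolate_box` is hypothetical.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def interpolate_box(start_box: Box, end_box: Box,
                    start_frame: int, end_frame: int, frame: int) -> Box:
    """Estimate a box at `frame` from two hand-labeled keyframes.

    Assumes the object moves and resizes linearly between the keyframes,
    which is only a rough approximation for real footage.
    """
    if not start_frame <= frame <= end_frame:
        raise ValueError("frame must lie between the two keyframes")
    if start_frame == end_frame:
        return start_box
    # Fraction of the way from the first keyframe to the second.
    t = (frame - start_frame) / (end_frame - start_frame)
    return tuple(a + t * (b - a) for a, b in zip(start_box, end_box))


# A labeler draws the box at frames 0 and 10; frame 5 is filled in automatically.
midpoint = interpolate_box((0, 0, 10, 10), (20, 10, 30, 20), 0, 10, 5)
```

A labeler would still review the interpolated frames and add another keyframe wherever the estimate drifts away from the object.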

Video data may also include audio. Audio data is treated differently from images or videos because labeling it often involves two separate tasks. First, the audio must be transformed into text data. Data labeling at this stage often just means transcribing what is being said and when. From there, natural language processing can provide context by labeling what each section of text means or how it relates to other functions of your algorithm.
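The two stages can be sketched as a single record per stretch of speech: the transcription stage fills in the timestamps and text, and a later pass attaches a meaning label. The `TranscriptSegment` class, its field names, and the example labels are hypothetical and not taken from any particular tool.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class TranscriptSegment:
    """One transcribed stretch of audio (hypothetical schema)."""
    start_s: float                 # when the speech starts, in seconds
    end_s: float                   # when the speech ends, in seconds
    text: str                      # stage 1: what was said
    meaning: Optional[str] = None  # stage 2: label added after transcription


def segment_at(segments: List[TranscriptSegment],
               time_s: float) -> Optional[TranscriptSegment]:
    """Return the segment being spoken at `time_s`, or None during silence."""
    for segment in segments:
        if segment.start_s <= time_s < segment.end_s:
            return segment
    return None


# Stage 1: transcription with timestamps (made-up example data).
segments = [
    TranscriptSegment(0.0, 2.5, "Hi, I'd like to check my order."),
    TranscriptSegment(3.0, 5.0, "It was placed last Tuesday."),
]

# Stage 2: attach a meaning label to each section of text.
segments[0].meaning = "order_status_request"
segments[1].meaning = "order_detail"
```

Keeping the timestamps alongside the text lets later labels be traced back to the exact stretch of audio they describe.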

There are many other kinds of data that can be labeled. Semantic labeling can also provide context for what images, videos, or audio mean. For example, audio processing can identify not only language but things like background noise or pauses in speech. In each of these cases, the labeling process requires the labeler to establish constraints and implement them on the labeled dataset.

Best Practices for Data Labeling

Several best practices should be followed when planning the data labeling for your ML project. If the labelers are not data scientists, creating clear guidelines on what should be labeled is perhaps the most important step. You can also implement consensus mechanisms, where multiple labelers label the same data. If the labelers disagree, it probably means that someone made an error or that the data is unclear. Ongoing feedback and verification of the training data can also help avoid mistakes in the labeling process.
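A consensus mechanism can be as simple as a majority vote. The sketch below accepts a label only when enough labelers agree and otherwise flags the item for review. The function name `consensus_label` and the 0.6 agreement threshold are assumptions for illustration, not a recommended standard.

```python
from collections import Counter
from typing import List, Optional, Tuple


def consensus_label(labels: List[str],
                    min_agreement: float = 0.6) -> Tuple[Optional[str], float]:
    """Majority vote over the labels several labelers gave one item.

    Returns (label, agreement). The label is None when agreement falls
    below `min_agreement`, meaning the item should be reviewed.
    """
    if not labels:
        raise ValueError("at least one label is required")
    top_label, top_count = Counter(labels).most_common(1)[0]
    agreement = top_count / len(labels)
    if agreement >= min_agreement:
        return top_label, agreement
    return None, agreement


# Two of three labelers agree, so the label is accepted.
accepted, accepted_share = consensus_label(["cat", "cat", "dog"])

# No majority: likely a labeling error or an ambiguous item.
rejected, rejected_share = consensus_label(["cat", "dog", "bird"])
```

Items that come back without a label are good candidates for the feedback loop: they either point to a gap in the guidelines or to data that is too ambiguous to label reliably.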

Generally, the best practice is to set clear goals and expectations for your project. Communicating these expectations to your team and being consistent in their application is often all it takes. With the right tools, acquiring the needed training data can be a painless part of the design process for these algorithms.
