Integrating YOLOv8 with TensorFlow: A Step-by-Step Guide

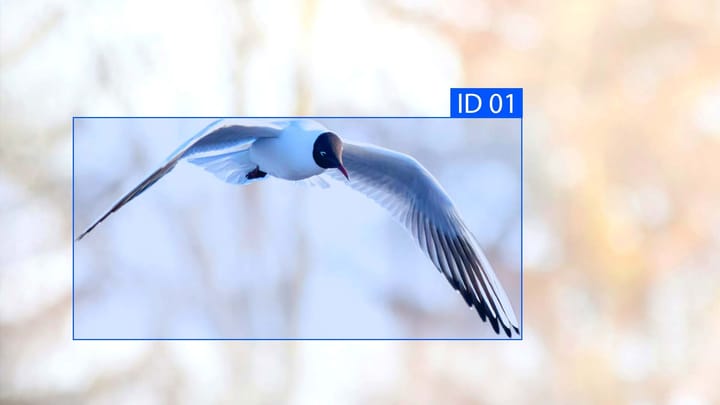

YOLOv8 is one of the most widely used object detection models in computer vision. Pairing it with TensorFlow, a widely used machine learning framework, extends where and how its models can be deployed for image recognition and object detection.

YOLOv8, short for "You Only Look Once version 8," is known for combining fast inference with high accuracy. It uses a deep neural network to detect objects in a single pass over the image.

This guide walks you through the steps for using YOLOv8 with TensorFlow. You'll learn how to prepare YOLOv8 models for TensorFlow Lite so they can run on edge devices, and how to use TensorBoard, a visualization tool for model training.

Key Takeaways:

- YOLOv8 is a highly efficient and accurate object detection model used in computer vision.

- Integrating YOLOv8 with TensorFlow opens up new possibilities for image recognition and object detection tasks.

- Converting YOLOv8 models to TensorFlow Lite format optimizes their performance on edge devices.

- TensorBoard is a powerful visualization toolkit for monitoring and analyzing model training.

- By following this step-by-step guide, you can harness the full potential of YOLOv8 and TensorFlow for your computer vision projects.

Why Export to TFLite?

Deploying computer vision models on edge devices calls for a format built for constrained hardware. TensorFlow Lite (TFLite) is an open-source deep learning framework designed for on-device inference, letting developers run models on mobile, embedded, and IoT devices. Converting YOLOv8 models to TFLite improves their performance and makes them suitable for a wide range of edge devices.

TFLite has several advantages for running computer vision models at the edge. Because inference happens on the device, latency drops and data stays local, which improves privacy. Models are also smaller, and no constant internet connection is needed, so real-time inference keeps working offline. TFLite runs on platforms ranging from embedded Linux to microcontrollers, which broadens the set of devices your models can target.

“TensorFlow Lite enables developers to bring the power of machine learning to edge devices, unlocking new possibilities in areas such as image recognition, object detection, and much more.”

YOLOv8 is a leading model for detecting objects in images and video quickly and accurately. Its size and compute cost, however, make it hard to run on resource-limited devices. This is where TFLite helps. Converting the YOLOv8 model to TFLite lets you apply TFLite's optimization techniques, such as quantization and weight pruning, which reduce the model's size and speed up inference.
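To make the quantization idea concrete, here is a minimal pure-Python sketch of affine int8 quantization. It is an illustration only and not TFLite's actual implementation; the function names `quantize_int8` and `dequantize` are hypothetical. Each 32-bit float is mapped to an 8-bit integer plus a shared scale and zero point, which is where the roughly fourfold size reduction for weights comes from.

```python
def quantize_int8(values):
    """Affine-quantize a list of floats to int8.

    Returns (quantized, scale, zero_point). Illustrative sketch only.
    """
    # The representable range must include 0.0 so that zero maps cleanly.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    # 255 steps span the int8 range [-128, 127]; fall back to 1.0 if all zeros.
    scale = (hi - lo) / 255.0 or 1.0
    zero_point = round(-128 - lo / scale)
    quantized = [
        max(-128, min(127, round(v / scale) + zero_point)) for v in values
    ]
    return quantized, scale, zero_point


def dequantize(quantized, scale, zero_point):
    """Map int8 values back to approximate floats."""
    return [(q - zero_point) * scale for q in quantized]


# Hypothetical weights, chosen only for illustration.
weights = [-1.0, -0.25, 0.0, 0.5, 1.0]
q, scale, zero_point = quantize_int8(weights)
restored = dequantize(q, scale, zero_point)
```

The restored values differ from the originals by at most about one quantization step (`scale`), which is the accuracy cost paid for the smaller representation.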

| TFLite Benefits | YOLOv8 Optimization |
|---|---|
| Optimizes for on-device machine learning | Reduces model size and improves inference speed through TFLite's model optimization techniques |
| Enables offline execution of models, reducing the reliance on a constant internet connection | Enhances privacy by keeping data on the device itself |
| Compatible with embedded Linux, Android, iOS, and microcontrollers | Expands the deployment options for YOLOv8 models |

Exporting your YOLOv8 models to TFLite broadens your options for deploying them across different edge devices. From embedded Linux to Android, iOS, or microcontrollers, TFLite equips you for high-performance model execution. The smaller model sizes overcome device memory limits while still delivering precise and efficient object detection.

Key Features of TFLite Models

TFLite models have several features that make them well suited to on-device machine learning:

- On-Device Optimization: These models are built for on-device use. They reduce latency and support real-time processing, so a device can handle demanding tasks quickly and efficiently.

- Platform Compatibility: TFLite models run on many platforms, including Android devices, iPhones, and small microcontrollers, so developers can target a wide range of hardware.

- Diverse Language Support: TFLite supports several programming languages, including Java, Swift, and C++, so you can pick the one that fits your project.

- High Performance: With model optimization and hardware acceleration, TFLite models deliver fast, accurate inference even on devices with limited computational capability.

These features make TFLite models a strong choice for on-device learning projects. They simplify deployment, fit different development stacks, and perform well.

"TFLite models provide on-device optimization, platform compatibility, diverse language support, and high performance, making them an ideal choice for on-device machine learning."

Deployment Options in TFLite

TFLite comes packed with a variety of ways to deploy machine learning models. It meets the needs of various edge devices, from smartphones to microcontrollers with tight resources. This flexibility is part of what makes TFLite stand out.

Android and iOS Deployment

Deploying TFLite models on Android and iOS is highly effective. It lets you integrate machine learning into mobile applications for powerful analysis of camera feeds and sensor data. Users can enjoy real-time applications like object detection and image recognition on their devices.

For example, building an Android app using TFLite for live object identification enhances user experience. On iOS, TFLite aids in creating visually intelligent applications, utilizing the device's powerful processing abilities.

Embedded Linux Implementation

For embedded Linux devices such as the Raspberry Pi, TFLite can make a real difference. It can shorten inference times considerably, allowing image and video data to be processed locally. This reduces latency and improves overall system responsiveness.

With TFLite on embedded Linux, devices can analyze images and recognize objects in various setups like security systems, home automation, and robotics. The benefit is quick response times, better user privacy, and less reliance on internet access.

Microcontroller Deployment

What sets TFLite apart is its ability to run on microcontrollers and other devices with very little memory. On these targets the runtime needs no operating system support or library dependencies.

This strategy brings machine learning to the edge, supporting smart wearables, IoT devices, and low-power sensors with deep learning algorithms. It's a unique approach to extending AI capabilities to resource-limited devices.

In closing, TFLite enables deployment on Android, iOS, embedded Linux, and microcontrollers. This versatility allows developers to create effective machine learning applications across a wide array of edge devices. It ensures high performance, tailored for each platform.

Export to TFLite: Converting Your YOLOv8 Model

To export your YOLOv8 model to TFLite format, begin by installing the required packages, including Ultralytics. With the Ultralytics library, loading a model, converting it to TFLite, and running inference takes only a few lines of Python.

- First, install the necessary packages, including Ultralytics. Run the following command in your terminal or command prompt:

```
pip install ultralytics
```

- Once installed, convert your YOLOv8 model by running the following Python code:

```python
# Import the Ultralytics YOLO class
from ultralytics import YOLO

# Load a pretrained YOLOv8 model (yolov8n.pt is the nano checkpoint)
model = YOLO("yolov8n.pt")

# Export the model to TFLite format
model.export(format="tflite")
```

- Executing the Python code above converts your model to the TFLite format. The export call returns the path of the resulting .tflite file, which Ultralytics typically names after the source weights (for example, yolov8n_float32.tflite).

- Now, with your TFLite model ready, you can move on to inference. The model can run on a variety of platforms, such as embedded devices, Android, and iOS. For detailed guidance, see the TensorFlow Lite documentation.

Converting to TFLite makes your YOLOv8 model usable across numerous edge devices. The steps above are all that is needed to get a TFLite version of your model.
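Once a .tflite file exists, inference can be sketched with TensorFlow's Python interpreter. The example below is a minimal sketch, assuming `tensorflow` and `numpy` are installed and that the exported file is named `yolov8n_float32.tflite` (an assumed path; use whatever path your export produced). The helper names `summarize_tensor` and `run_tflite` are hypothetical, and the dummy all-zeros input only checks that the model runs; it does not produce meaningful detections.

```python
def summarize_tensor(detail):
    """Format one entry from get_input_details() or get_output_details()."""
    shape = tuple(int(dim) for dim in detail["shape"])
    return f'{detail["name"]}: shape={shape}'


def run_tflite(model_path):
    """Run one forward pass on a dummy input and return the raw output."""
    # Imported lazily so the helper above works without TensorFlow installed.
    import numpy as np
    import tensorflow as tf

    interpreter = tf.lite.Interpreter(model_path=model_path)
    interpreter.allocate_tensors()

    input_detail = interpreter.get_input_details()[0]
    output_detail = interpreter.get_output_details()[0]
    print(summarize_tensor(input_detail))
    print(summarize_tensor(output_detail))

    # Build an all-zeros input that matches the model's expected shape/dtype.
    dummy = np.zeros(tuple(input_detail["shape"]), dtype=input_detail["dtype"])
    interpreter.set_tensor(input_detail["index"], dummy)
    interpreter.invoke()
    return interpreter.get_tensor(output_detail["index"])


# Example call (assumed file name, not run here):
# raw_output = run_tflite("yolov8n_float32.tflite")
```

The raw output still has to be decoded into boxes, scores, and classes. Loading the exported file back through the Ultralytics library is a simpler route when that library is available on the target device.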

Having completed the TFLite conversion, it’s time to assess deployment prospects for your YOLOv8 model on edge devices. Be it Android phones, iOS devices, embedded Linux systems, or microcontrollers, TFLite broadens your deployment options extensively.

In the next section, further deployment options in TFLite are explored. This includes model integration into mobile apps, running on embedded Linux, and deployment on microcontrollers.

Deploying Exported YOLOv8 TFLite Models

After converting your YOLOv8 models to TFLite, the next step is deployment. You can use these models in various ways. This includes integration with mobile apps for Android and iOS or on devices like Raspberry Pi running embedded Linux. Moreover, they are suitable for microcontrollers with limited memory.

Each deployment choice offers unique benefits. It opens up new doors to use YOLOv8 TFLite models. This flexibility and variety of options are beneficial for different project needs.

Integrating TFLite Models on Mobile Applications

Integrating TFLite models into mobile apps for Android and iOS means powerful on-device object detection. These models can analyze camera feeds in real-time. They accurately identify objects, perfect for uses like augmented reality, image recognition, or security.

The models work efficiently, fitting well with mobile app development. This compatibility and performance make integrating object detection in apps a smooth process.

Accelerating Inference on Embedded Linux Devices

For devices like Raspberry Pi, TFLite model deployment speeds up object detection. These devices' computational power lets you process camera inputs and sensor data in real-time. This is key for smart surveillance, autonomous robots, or advanced IoT devices.

Thanks to TFLite's compatibility, deploying YOLOv8 models is seamless. It truly expands the range of applications for these models.
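Whether a given board is fast enough is easiest to judge by measuring. The sketch below times any zero-argument inference callable and reports the median latency in milliseconds. The helper name `measure_latency_ms` and the default warm-up and run counts are assumptions for illustration, and the stand-in workload is not a real model.

```python
import statistics
import time


def measure_latency_ms(infer, warmup=3, runs=20):
    """Return the median wall-clock latency of infer() in milliseconds."""
    # Warm-up calls let caches and lazy initialization settle first.
    for _ in range(warmup):
        infer()

    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        infer()
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)


# Stand-in workload; replace with a call that runs your TFLite model.
latency = measure_latency_ms(lambda: sum(range(10_000)))
print(f"median latency: {latency:.3f} ms")
```

The median is used rather than the mean so that an occasional slow run, for example from a background process on the device, does not skew the result.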

Deploying TFLite Models on Microcontrollers

TFLite models are not limited to larger devices; they also run on microcontrollers. This covers wearables, smart home gadgets, and industrial IoT devices. Running models on microcontrollers brings intelligence to the edge without heavy processing or a constant network connection, so decisions can be made locally and efficiently.

The adaptability and openness of TFLite are great for deploying YOLOv8 models. They fit well in mobile apps, embedded systems, or microcontrollers. With TFLite, you can fully explore YOLOv8's potential and enhance your object detection projects.

To dive deeper into TFLite model deployment and optimization, check the Firebase documentation. It offers detailed insights into managing models with Firebase ML. This enhances deployment knowledge and best practices.

Using TensorBoard with YOLOv8

TensorBoard is indispensable for tracking the training and model performance in machine learning. It aids in grasping model learning patterns and behavior. For YOLOv8, TensorBoard is especially beneficial, allowing users to optimize its performance and glean valuable insights.

Visualize Training Metrics

TensorBoard excels in the tracking and visualization of training metrics. By employing it in your YOLOv8 training, you can observe important metrics like loss and accuracy across time. Its time series view provides a rich, dynamic display of these metrics, perfect for trend analysis and the spotting of improvement areas.

Note: Tracking metrics with TensorBoard enhances the understanding of your YOLOv8 model's training process and enables data-driven decision-making for optimizing performance.
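As a generic illustration of what scalar logging looks like, the sketch below writes one loss value per step through a writer object. It assumes PyTorch's `torch.utils.tensorboard.SummaryWriter` is available; the helper names `log_losses` and `make_writer`, the tag `train/loss`, and the log directory are hypothetical. Ultralytics handles its own logging during training, so this is not how YOLOv8 does it internally, only a picture of the data TensorBoard plots.

```python
def log_losses(writer, losses, tag="train/loss"):
    """Write one scalar per training step; returns the number of points."""
    for step, loss in enumerate(losses):
        writer.add_scalar(tag, loss, step)
    return len(losses)


def make_writer(log_dir):
    """Create a TensorBoard writer (imported lazily; requires PyTorch)."""
    from torch.utils.tensorboard import SummaryWriter

    return SummaryWriter(log_dir=log_dir)


# Example usage (assumed directory and made-up loss values, not run here):
# writer = make_writer("runs/example")
# log_losses(writer, [0.92, 0.71, 0.55, 0.48])
# writer.close()
```

After logs have been written, running `tensorboard --logdir` with the same directory serves the dashboard in a browser.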

Display Model Graphs

TensorBoard also presents a detailed view of your model's graph, showing its structure, connections, and data flow. This is useful for spotting areas to improve and for understanding the computational flow of your YOLOv8 architecture.

Project Embeddings

TensorBoard can also project embeddings, which are lower-dimensional representations of complex data. This makes it possible to explore relationships between data points in your YOLOv8 model and can show how the model perceives similarities between objects or concepts.

Note: Using embeddings in TensorBoard allows for a deeper understanding of the learned representations in your YOLOv8 model, facilitating further improvements and insights for object detection tasks.

Configuring TensorBoard with YOLOv8 provides a comprehensive view of your model's performance and architecture. This deep insight into the training dynamics supports informed decision-making based on data. Ultimately, it assists in the optimization of your YOLOv8 model's performance.

| Benefits of TensorBoard for YOLOv8 | How It Helps |
|---|---|
| Tracking Metrics | Monitor loss, accuracy, and learning rates for optimization and performance evaluation. |
| Model Graph Visualization | Understand the structure, connections, and flow of data within the YOLOv8 model. |
| Embedding Projection | Explore relationships between data points to gain insights into the model's understanding. |

Understanding Your TensorBoard for YOLOv8 Training

TensorBoard is divided into Time Series, Scalars, and Graphs. These parts help you analyze and see the progress of your YOLOv8 models.

Time Series: Tracking Progression and Trends

The Time Series section in TensorBoard shows metrics changing over time. It lets you watch how loss, accuracy, or custom metrics evolve. This visual insight helps in understanding your model's learning process.

By tracking these metrics, you get a clear picture of your YOLOv8's performance. It helps you make smart decisions, tweak settings, and ensure better training.

Scalars: Concise View of Metric Evolution

Scalars in TensorBoard focus on single metrics like loss and accuracy. They give a clear view of metric changes over training. This makes it easier to spot trends, fix problems, and evaluate training effectiveness.

Using Scalars simplifies metric analysis. It lets you track model behavior precisely, which makes performance tuning faster.

Graphs: Visualizing Model Structure and Data Flow

TensorBoard's Graphs showcase your model's computations. They highlight internal interactions, making it easier to debug and refine. Understanding this graph is key to enhancing your model.

The Graphs feature in TensorBoard demystifies your model's operations. It supports better strategic choices and more efficient model enhancement.

TensorBoard's Time Series, Scalars, and Graphs provide a rich view of your YOLOv8 training. Together they support in-depth analysis, monitoring, and understanding of your model's dynamics.

To learn more about TensorBoard, see TensorFlow's TensorBoard website.

Conclusion

Integrating YOLOv8 with TensorFlow broadens what you can do in computer vision. Exporting YOLOv8 models to TFLite format optimizes them and eases deployment on edge devices.

Using TensorBoard during YOLOv8 training is just as valuable. It provides metric tracking, model graph visualization, and embedding projection, giving you insight into learning trends, performance metrics, and model behavior.

Combining Ultralytics YOLOv8, TFLite optimization, and TensorBoard gives you a practical workflow for image recognition and object detection. It supports deploying well-performing YOLOv8 models on a variety of edge devices and running inference directly on them, while TensorBoard's visualizations help you understand model performance and refine training.

FAQ

Why should I export my YOLOv8 model to TFLite format?

Exporting your YOLOv8 model to TFLite format offers several key advantages. It optimizes for machine learning on devices. This brings about reduced latency and enhances privacy. It also makes your model much smaller.

Additionally, it ensures your model can work across various platforms, from embedded Linux to mobile devices. This broad compatibility allows seamless deployment on a variety of edge devices.

What are the key features of TFLite models?

TFLite models are designed for efficient on-device machine learning. They reduce latency and improve user privacy.

They work on a variety of platforms, such as Android, iOS, and embedded Linux, and they can be used from several programming languages.

Through hardware acceleration and model optimization, TFLite delivers strong performance on constrained devices.

What are the deployment options for TFLite models?

TFLite models find use in a range of deployment scenarios. They can be used on Android and iOS for tasks like object detection from camera feeds.

For embedded Linux, they speed up inference on devices like the Raspberry Pi. They can also run on microcontrollers, requiring minimal memory, without OS or library dependencies.

How can I export my YOLOv8 model to TFLite format?

To export your YOLOv8 to TFLite, first, install necessary packages including Ultralytics. Use the Ultralytics library to load and convert your model.

The whole process runs in Python and takes only a few lines, after which your YOLOv8 model is ready for TFLite deployment.

What are the deployment options for exported YOLOv8 TFLite models?

After the export, your YOLOv8 models can run on various platforms. On Android and iOS, they add on-device image analysis and object detection to applications.

Embedded Linux devices, such as the Raspberry Pi, benefit from faster inference. Even microcontrollers can run these optimized models, extending where your model can be deployed.

How can I use TensorBoard with YOLOv8?

TensorBoard is a visualization toolkit for monitoring machine learning experiments, and it is well suited to YOLOv8 training. It gives you insight into model behavior and metrics, including model graph visualization and performance metrics.

With TensorBoard, you can track metrics, inspect the model architecture, and analyze embeddings, all of which deepen your understanding of model training.

What are the key features of TensorBoard for YOLOv8 training?

TensorBoard's offerings include Time Series, Scalars, and Graphs. Time Series offers dynamic metrics tracking, showing how metrics evolve. Scalars highlight loss and accuracy trends, simplifying their observation.

Graphs, on the other hand, focus on the YOLOv8 model’s computational graph. This visualization aids in understanding its inner workings and data flow.

Can I deploy YOLOv8 TFLite models on edge devices?

Yes. Once converted to TFLite, your YOLOv8 model is ready for edge devices. TFLite supports machine learning outside the cloud, so your model can run efficiently on a variety of devices.

Running deep neural networks at the edge makes computer vision easier to deploy, and it suits tasks like image recognition and object detection.
