Federated Annotation: Coordinating Labels Across Distributed Devices

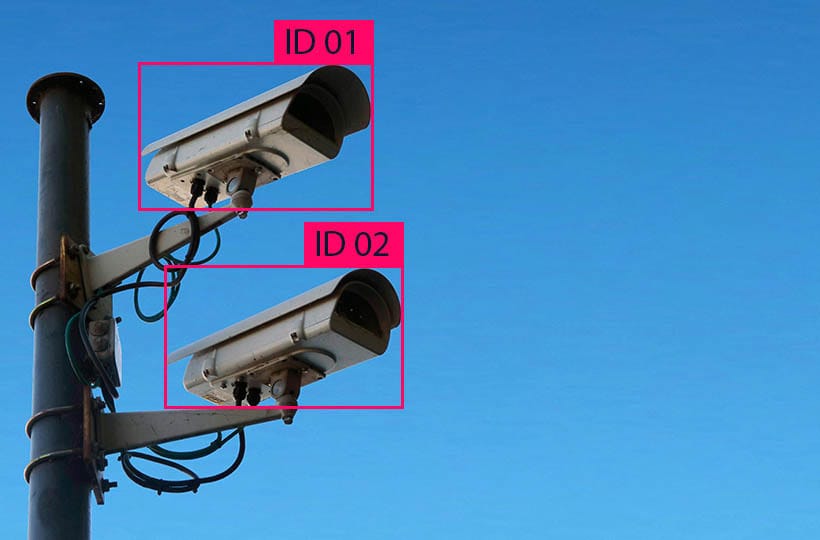

Federated Annotation is an approach to data labeling that combines the principles of federated learning with collaborative label coordination across distributed devices. In traditional machine learning systems, data is collected centrally, allowing for efficient annotation before being used in models. However, with growing privacy concerns and restrictions on data transmission, decentralized approaches have become increasingly necessary.

Federated Annotation enables labeling to be performed directly on users' devices or remote nodes where the data resides without requiring it to be sent to a central server. This method relies on decentralized processing and label synchronization among network participants, utilizing federated learning, result aggregation, and consensus-building mechanisms.

Introduction to Federated Annotation and Distributed Label Coordination

Federated Annotation transforms how data is labeled across distributed devices, offering a scalable, privacy-preserving alternative to traditional centralized approaches. However, ensuring annotation consistency and quality in a decentralized system presents unique challenges. To address these, several key strategies are employed:

- Consensus Mechanisms. Multiple devices annotate the same data point, and the system determines the most reliable label through majority voting, confidence scoring, or heuristic learning. This approach minimizes errors and enhances label accuracy.

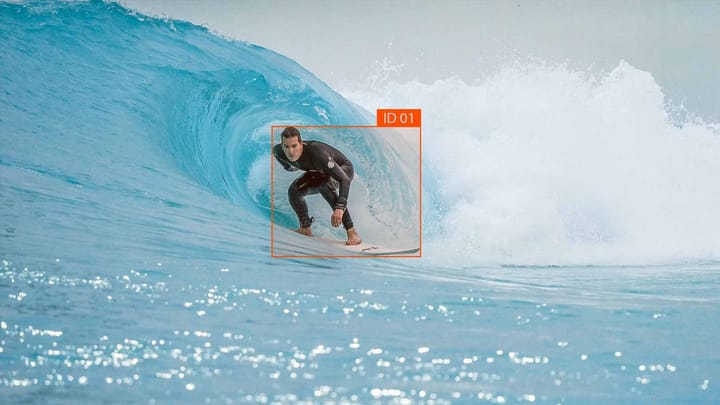

- Active Learning. Instead of labeling every data point, models intelligently identify uncertain or ambiguous cases and request human annotation only when necessary. This reduces the workload while maintaining high-quality labels.

- Personalization and Adaptability. Keeping data on users' devices allows annotations to be fine-tuned to individual contexts. This leads to better personalization in AI applications such as recommendation systems, voice assistants, and healthcare diagnostics, where context-specific accuracy is crucial.

- Bias Reduction and Data Diversity. Traditional datasets often suffer from the underrepresentation of specific user groups. Federated Annotation naturally captures a broader range of user environments and behaviors, fostering more inclusive AI models that generalize better across different populations.

The Importance of Distributed Device Coordination

As technology advances, an increasing number of devices are interconnected, forming complex networks requiring seamless coordination. Distributed device coordination ensures efficiency, reliability, and security in modern computing environments. Effective coordination is essential for optimizing performance and resource allocation in edge computing, the Internet of Things (IoT), or cloud-based systems.

Coordinating Labels Across Distributed Devices

In traditional machine learning workflows, data labeling is usually done centralized, where raw data is collected, annotated, and then used to train models. However, as data privacy regulations tighten and datasets grow in scale, a shift toward decentralized solutions has become essential.

Distributed labeling involves assigning and synchronizing labels across multiple devices without transferring raw data to a central server. This approach is instrumental in federated learning environments, where data remains on local devices, and only aggregated insights are shared. By keeping data localized, this method enhances privacy and reduces the risks associated with data breaches or unauthorized access.

One of the biggest challenges in coordinating labels across devices is ensuring consistency and accuracy. Since multiple devices may annotate the same data differently, consensus models, trust-weighted voting, and machine learning-assisted label verification are often used. For instance, if several devices assign different labels to a data point, an aggregation system can determine the most probable correct label by analyzing the annotators' patterns, confidence scores, or historical accuracy.

Role of Data Governance and Security

As businesses and institutions generate massive amounts of data, the challenge is to store and analyze it effectively and safeguard it against misuse and breaches. Without proper oversight, data can quickly become a liability rather than an asset.

At its core, data governance establishes a structured approach to managing data. It defines policies, assigns responsibilities, and ensures that information is accurate, consistent, and compliant with legal and industry standards. This framework helps organizations avoid chaos by preventing duplicate or outdated records, ensuring that data remains trustworthy and accessible when needed. A well-governed data environment allows companies to make smarter decisions, improve operational efficiency, and enhance customer experiences.

With regulations like GDPR and CCPA reshaping how organizations handle data, companies that invest in firm governance and security frameworks gain a competitive advantage. They mitigate risks and build trust with customers, partners, and stakeholders. In the digital age, responsible data management is no longer optional. It is a necessity for sustainable growth and innovation.

Best Practices and Strategies for Data Curation

An intense data curation process begins with clear standards for collection and validation. Filtering out redundant or outdated records keeps datasets reliable and efficient. Metadata plays a key role in making data searchable and understandable, ensuring users can easily find what they need. Automation helps streamline curation, but human oversight remains crucial for context and nuance.

Collaboration is another important factor. When teams across different departments contribute to data refinement, it leads to more comprehensive and meaningful datasets. Security and compliance must also be prioritized, ensuring that curated data meets privacy regulations and industry standards.

Establishing Standards and Protocols

Clear guidelines ensure seamless collaboration and prevent costly errors in technology, business, or science. Standards define best practices, while protocols outline specific procedures to follow. They create a structured environment where processes run smoothly, innovation thrives, and risks are minimized. Without them, confusion and inefficiency take over, leading to unreliable outcomes. The best systems evolve by refining their standards and adapting to new challenges while maintaining stability.

Building an Effective Data Catalog

A well-designed catalog is a central hub where data assets are organized, searchable, and easily accessible. It provides explicit metadata, descriptions, and lineage, helping teams understand where data comes from, how it is structured, and how it can be used.

An intense data catalog improves collaboration by breaking down silos and making data more discoverable across departments. It also enhances data governance by ensuring consistency, compliance, and access control. Automation plays a key role by keeping metadata current and reducing the manual effort needed to maintain accuracy.

A data catalog must be intuitive, scalable, and integrated with existing data systems to be truly effective. When done right, it empowers organizations to make faster, more informed decisions while maintaining trust in their data.

Leveraging Advanced Tools and Technologies

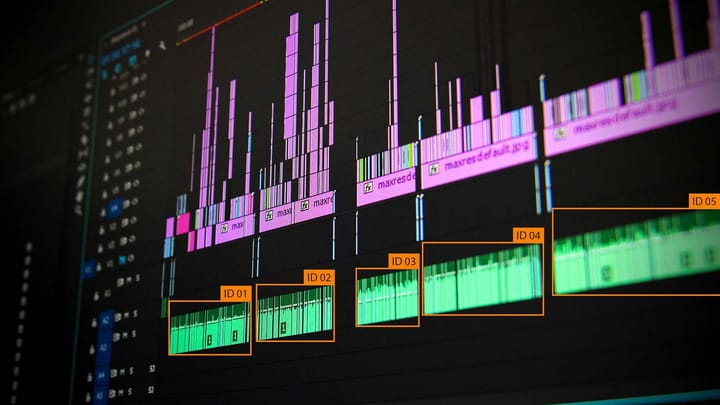

In today's data-driven world, managing information across distributed systems requires more than traditional methods. Innovative tools and intelligent technologies transform how organizations curate, process, and utilize data. Automation and AI-driven solutions improve accuracy, reduce redundancy, and unlock new insights at unprecedented speed. By integrating advanced analytics and machine learning, businesses can streamline workflows, drive more intelligent decision-making, and gain a competitive edge in an increasingly complex digital landscape.

Exploring Data Catalog and Curation Tools

Managing vast amounts of data is no small task, but modern data catalog tools like Microsoft Purview, Apache Atlas, and Okta transform how organizations handle information. These platforms go beyond simple storage by intelligently tagging, classifying, and maintaining metadata, even in complex, decentralized environments. Bringing structure to scattered data makes it easier to search, access, and collaborate across teams.

These tools are game-changers in fields like healthcare, where data accuracy can directly impact patient outcomes. Standardized metadata ensures that critical medical information is reliable, enabling more precise diagnostics and personalized treatment. As data grows in volume and complexity, intelligent cataloging and curation are becoming essential for organizations striving to make smarter, data-driven decisions.

Integrating Machine Learning Pipelines

Organizations can unlock the full potential of AI-driven insights by streamlining data processing, feature engineering, and model deployment. This approach is particularly valuable in areas like fraud detection, where rapid analysis of transaction patterns can prevent security threats, and demand forecasting, where precise predictions help optimize supply chains. A well-integrated pipeline transforms raw data into actionable intelligence, making machine learning a powerful engine for more intelligent decision-making.

Future Trends and Innovations in Federated Annotation

The future of federated annotation is shaping into a game-changer driven by cutting-edge technologies and novel methodologies. As data grows in both volume and complexity, the need for more efficient, privacy-preserving, and intelligent annotation methods is becoming increasingly critical.

One of the most exciting advancements is the integration of AI-driven automation, where machine learning models assist in annotating data directly on users' devices, reducing reliance on manual labeling. This speeds up the process and ensures higher consistency in annotations. Additionally, improvements in decentralized learning algorithms will allow devices to collaborate more effectively, refining annotations through consensus without exposing sensitive data.

Another key trend is the rise of blockchain-based verification, which can enhance trust in the annotation process by providing transparent and tamper-proof audit trails. This could be particularly valuable in healthcare and finance, where data integrity is paramount.

As federated annotation evolves, we can expect better synergy with edge computing, enabling real-time data labeling closer to the source. This will minimize latency, optimize resource usage, and make federated systems even more scalable.

Ultimately, the innovations in federated annotation will lead to more intelligent, more secure, and highly adaptive data ecosystems, transforming how organizations harness decentralized information while maintaining privacy and efficiency.

Summary

Advancements in distributed coordination and governance frameworks have transformed how organizations manage data and develop models. As data ecosystems become increasingly complex, ensuring data quality and security is more critical than ever.

Integrating AI-driven solutions and advanced authentication protocols reshapes data curation, automates processes, and enhances model performance. These innovations address existing challenges and create new opportunities for future advancements, keeping organizations at the forefront of technological progress.

For tech professionals and decision-makers, staying ahead means embracing best practices in data management and exploring emerging technologies like blockchain. Organizations can fully harness their data's potential and drive impactful results by prioritizing adaptability and strategic implementation.

Recent studies highlight how AI revolutionizes data curation by minimizing manual work and improving accuracy. This shift underscores the need for continuous learning and proactive adoption of cutting-edge solutions to remain competitive in an evolving digital landscape.

FAQ

What is federated dataset curation, and why is it important?

Federated dataset curation manages and organizes data across distributed systems while maintaining data quality and integrity. It's crucial for ensuring accurate and reliable data for machine learning models.

How does federated annotation differ from traditional data labeling?

Federated annotation involves coordinating labels across distributed devices, enabling decentralized data collection and labeling. Compared to centralized methods, this approach enhances scalability and privacy.

What role does data governance play in distributed systems?

Data governance ensures compliance, security, and consistency in data management. It establishes standards and policies to maintain data quality and integrity across federated environments.

How can organizations integrate advanced tools for federated dataset curation?

Organizations can use data catalog tools for better organization and automated labeling solutions to streamline the curation process. Integrating machine learning pipelines further enhances efficiency and accuracy.

What challenges are associated with federated annotation?

Challenges include ensuring data security, managing distributed systems, and maintaining consistency across different sources. Addressing these requires robust governance and advanced technological solutions.

How does federated dataset curation impact machine learning models?

High-quality, well-curated data improves model accuracy and reliability. Federated curation ensures diverse and representative data, enhancing model performance and generalization.

What is the importance of metadata in federated systems?

Metadata provides context and description for data, aiding in organization, access, and compliance. It is essential for maintaining data integrity and facilitating efficient data management.

How can organizations ensure compliance in federated environments?

Organizations can ensure compliance with regulations and maintain data security by implementing strict governance policies, conducting regular audits, and using advanced tools.

What are the benefits of using automated tools in data curation?

Automated tools reduce manual effort, improve efficiency, and enhance consistency. They also help scale operations and maintain high data quality across distributed systems.

How does federated annotation support business decision-making?

By providing high-quality, well-organized data, federated annotation enables businesses to make informed decisions. It supports data-driven strategies and enhances operational efficiency.

Comments ()