Ethical Considerations in AI Model Development

If you are a woman or have darker skin, facial recognition software is more likely to misidentify you. These systems have shown higher error rates for such groups, revealing a bias built into the technology. This is more than a technical failure: it reflects a deep ethical problem that U.S. agencies and organizations are now working to address.

Understanding AI's ethical issues means recognizing the harm it can cause, much of which can be prevented by applying responsible AI principles. As AI advances rapidly, governance frameworks must evolve with it: they need to tackle bias, ensure transparency, and protect creative rights.

The case for using AI for good is clear, and an ethically driven AI future will take collective effort. Technology should serve humanity, upholding fairness, privacy, safety, and trust as AI reshapes our world.

Key Takeaways

- Understanding AI bias is crucial for making tech more inclusive and avoiding discrimination.

- Transparent AI systems make it possible to judge fairness, accuracy, and reliability, and to hold AI decisions to ethical standards.

- Clear legal rules on who owns AI-generated content protect creators' interests and rights.

- Responsible AI governance requires collaboration across many fields.

- New ethical guidelines in statistics and AI aim to protect privacy and autonomy and to counter bias.

Unveiling the Ethics of AI: The Path Towards Responsible Technology

AI is now a major part of our lives, so its ethics deserve close scrutiny. We need AI that reflects our society's values, which means it must be fair and free of unjust bias.

Fairness means an AI system's decisions should not systematically disadvantage particular groups of people. Transparency lets people understand how those decisions are made, which builds trust in the technology.

Protecting personal data is equally crucial. Differential privacy adds carefully calibrated noise to results so that they reveal little about any single individual, while federated learning trains models where the data already lives instead of gathering it in one place. Both techniques strengthen privacy without blocking innovation.
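Differential privacy, mentioned above, can be illustrated with its simplest building block, the Laplace mechanism. The sketch below is a minimal illustration assuming a single counting query; the function names `laplace_noise` and `laplace_count` and the example data are hypothetical. It uses only Python's standard library and is not hardened against known floating-point attacks, so a production system should rely on a vetted differential privacy library instead.

```python
import random

def laplace_noise(scale, rng):
    # The difference of two independent exponential draws with mean `scale`
    # follows a Laplace(0, scale) distribution.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def laplace_count(values, predicate, epsilon, rng=None):
    """Return an epsilon-differentially private count of items matching `predicate`.

    A counting query has sensitivity 1: adding or removing one person's record
    changes the true count by at most 1, so Laplace noise with scale
    1 / epsilon is enough for this single query.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng if rng is not None else random.Random()
    true_count = sum(1 for v in values if predicate(v))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)

# Hypothetical example: how many patients are 65 or older?
ages = [70, 34, 81, 65, 22, 59, 90]
noisy = laplace_count(ages, lambda age: age >= 65, epsilon=0.5, rng=random.Random(7))
print(f"noisy count: {noisy:.2f}")  # true count is 4; the noisy value depends on the seed
```

A smaller `epsilon` means more noise and stronger privacy. Each additional query against the same data spends more of the overall privacy budget, which is why real systems track cumulative `epsilon`.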

| Ethical Concern | Challenges | Strategies for Mitigation |
|---|---|---|
| Algorithmic Fairness | Bias in training data leading to skewed outputs | Inclusive data sets, regular audits |
| AI Transparency | "Black box" decision-making processes | Development of explainable AI models |
| AI Privacy | Risks related to data breaches and misuse | Enhanced encryption, strict access controls |
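Federated learning, named earlier alongside differential privacy, can be sketched with federated averaging (FedAvg): each client trains on its own data, and a coordinator combines only the resulting model parameters, weighted by how many examples each client holds. The sketch below assumes a toy one-feature linear model and in-memory "clients"; all names and data are hypothetical, and real deployments add secure aggregation, client sampling, and a communication layer.

```python
from typing import List, Sequence, Tuple

Dataset = Sequence[Tuple[float, float]]  # (x, y) pairs held privately by one client

def local_update(weights: List[float], data: Dataset, lr: float = 0.05, epochs: int = 1) -> List[float]:
    """Run gradient descent on one client's data for the model y = w * x + b.

    The raw (x, y) pairs never leave this function; only updated weights do.
    """
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]

def federated_average(client_weights: List[List[float]], client_sizes: List[int]) -> List[float]:
    """Average client parameters, weighting each client by its number of examples."""
    total = sum(client_sizes)
    return [
        sum(weights[i] * size for weights, size in zip(client_weights, client_sizes)) / total
        for i in range(len(client_weights[0]))
    ]

def federated_round(global_weights: List[float], clients: List[Dataset],
                    lr: float = 0.05, epochs: int = 1) -> List[float]:
    """One round: every client trains locally, then the server averages the results."""
    updates = [local_update(list(global_weights), data, lr, epochs) for data in clients]
    return federated_average(updates, [len(data) for data in clients])

# Hypothetical clients whose private data all follow y = 2x + 1.
clients = [
    [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)],
    [(3.0, 7.0), (4.0, 9.0)],
    [(1.5, 4.0), (2.5, 6.0), (3.5, 8.0)],
]
weights = [0.0, 0.0]
for _ in range(500):
    weights = federated_round(weights, clients)
print(f"learned w={weights[0]:.3f}, b={weights[1]:.3f}")  # approaches w=2, b=1
```

With `epochs=1`, each round is equivalent to one gradient step on the pooled data (sometimes called FedSGD). Raising `epochs` lets clients do more local work per round, at the cost of drift when client data differ.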

Supporting ethical AI means confronting complex problems head-on. As AI grows, our ethical thinking must grow with it, so that AI helps humanity rather than eroding freedom or deepening inequality.

Confronting the Specter of Bias and Inequality in AI Algorithms

Artificial intelligence (AI) now plays a major role in our lives, making decisions and automating processes. But it also risks carrying old societal biases into our digital future. Left unchecked, this can deepen discrimination, which is why AI systems must be built ethically.

The Inherent Risks of Societal Bias in Data

AI systems learn from data, and that data does not always reflect the diversity of our society. When such systems are used for high-stakes tasks like hiring or diagnosing illness, these gaps become serious problems. For example, a model trained mostly on images of people with light skin tends to perform worse for people with darker skin. In settings such as hospitals, that can lead to unfair treatment or even harm.

Strategies for Mitigating Discrimination in Machine Learning

Stopping discrimination in AI requires strong policies and practical strategies that promote fairness. A good first step is training on data that represents a wide range of people and experiences. It is also important to understand how an AI system reaches its decisions, because that understanding is what makes regular audits for biased outcomes possible.

- Ensure continuous monitoring and iterative feedback mechanisms are in place to catch and correct biases as AI systems evolve.

- Promote cross-disciplinary collaborations to foster innovative solutions that prioritize inclusion and diversity within AI frameworks.
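The regular audits and continuous monitoring described above often start with simple group-level metrics. The sketch below, a minimal illustration with hypothetical names and data, compares selection rates across groups and computes the ratio of the lowest rate to the highest. The 0.8 default threshold follows the "four-fifths rule" used in U.S. employment guidance as a rule of thumb for adverse impact; it is a screening heuristic, not a legal test of discrimination, and other fairness metrics (such as comparing error rates across groups) may matter more for a given application.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence

def selection_rates(predictions: Sequence[int], groups: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Fraction of positive predictions (e.g. 'invite to interview') per group."""
    positives: Dict[Hashable, int] = defaultdict(int)
    totals: Dict[Hashable, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        positives[group] += int(pred)
        totals[group] += 1
    return {g: positives[g] / totals[g] for g in totals}

def audit_selection(predictions: Sequence[int], groups: Sequence[Hashable],
                    threshold: float = 0.8) -> dict:
    """Summarize group selection rates and flag a low lowest-to-highest ratio."""
    rates = selection_rates(predictions, groups)
    highest, lowest = max(rates.values()), min(rates.values())
    impact_ratio = lowest / highest if highest > 0 else 1.0
    return {
        "rates": rates,
        "parity_gap": highest - lowest,       # demographic parity difference
        "impact_ratio": impact_ratio,         # lowest rate / highest rate
        "flagged": impact_ratio < threshold,  # below four-fifths -> send for human review
    }

# Hypothetical screening-model outputs for two applicant groups.
preds = [1] * 6 + [0] * 4 + [1] * 3 + [0] * 7
groups = ["A"] * 10 + ["B"] * 10
report = audit_selection(preds, groups)
print(report)  # rates A=0.6 and B=0.3 give impact_ratio 0.5, so the model is flagged
```

Running the same audit on each new batch of decisions turns a one-off check into the kind of continuous monitoring the first bullet calls for.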

Fighting bias in AI is ongoing work, but it is the only way to build technology that benefits everyone fairly. Leading the way on ethical AI helps ensure that technological progress includes everyone.

| Issue | Impact | Strategic Approach |

|---|---|---|

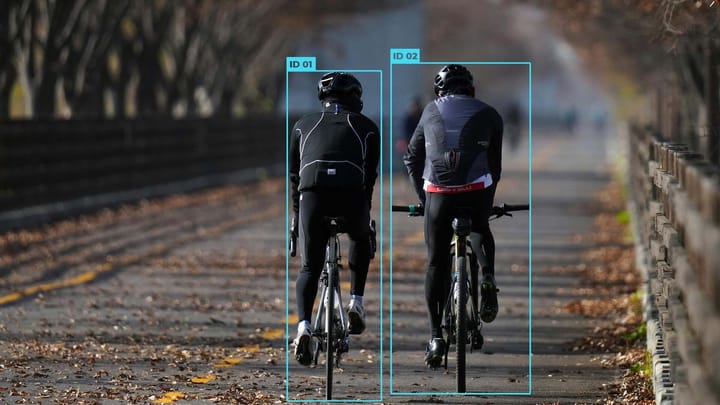

| Bias in facial recognition | Misidentification, heightened surveillance concerns | Regular algorithmic auditing, increase demographic diversity in datasets |

| AI in healthcare | Medical resource allocation biases, misdiagnosis | Development of equitable AI systems, incorporation of diverse health data |

| AI in recruitment | Reinforcement of historical workplace inequalities | Implement guidelines for fairness in AI-driven hiring tools |

By actively tackling AI bias, we improve AI's trustworthiness and its ability to serve everyone fairly. This commitment to ethical technology makes a big difference in building an inclusive future.

Deciphering the AI Black Box: A Roadmap to Transparency and Clarity

The opacity of AI's "black box" makes these technologies hard to adopt in fields like healthcare and finance, where decisions must be justified. Explainable AI addresses this by revealing how a model arrives at its outputs, which helps users decide when to trust its decisions.

As concerns about AI accountability have grown, transparency has become central to ethical AI use. Explainable AI lets people check decisions and correct them when needed.

- Asserting AI Accountability: Holding AI systems and their creators accountable requires robust mechanisms for tracking decisions.

- Enhancing AI Transparency: AI systems must be understandable, so users can see how they work and what they do, and trust them accordingly.

- Deploying Explainable AI: Technology that explains its actions makes it possible to verify that AI meets ethical guidelines.
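One way to implement the decision tracking that accountability requires is an append-only log in which each entry stores the hash of the previous one, so a later edit to any past record becomes detectable. The sketch below is a minimal illustration using only Python's standard library; the `DecisionLog` class, its field names, and the model name are hypothetical. It is tamper-evident rather than tamper-proof: anyone able to rewrite the entire log could recompute every hash, so real systems typically also store the latest hash somewhere independent.

```python
import hashlib
import json
from datetime import datetime, timezone

class DecisionLog:
    """Append-only, hash-chained record of automated decisions (hypothetical design)."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    def record(self, model_version, inputs, output, reviewer=None):
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_version": model_version,
            "inputs": json.loads(json.dumps(inputs)),  # snapshot; must be JSON-serializable
            "output": output,
            "reviewer": reviewer,  # human who checked the decision, if any
            "prev_hash": prev_hash,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry["hash"]

    @staticmethod
    def _digest(entry):
        # Hash every field except the hash itself, in a canonical key order.
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def verify(self):
        """Return True only if every entry is unmodified and correctly chained."""
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True

log = DecisionLog()
log.record("credit-model-v1", {"income": 52000, "debt_ratio": 0.21}, "approve")
log.record("credit-model-v1", {"income": 18000, "debt_ratio": 0.64}, "deny", reviewer="analyst-7")
print(log.verify())  # True

log.entries[0]["output"] = "deny"  # simulate after-the-fact tampering
print(log.verify())  # False
```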

Greater transparency also depends on technical methods. Tools such as LIME, SHAP, and DALEX help explain AI decisions, for example by estimating how much each input contributed to a particular prediction. This matters most for complex models and large datasets.
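To make the idea behind SHAP concrete without depending on any library, the sketch below uses a closed-form case: for a linear model whose features are treated as independent, each feature's Shapley value is its weight times the feature's deviation from a background average, and the contributions add up exactly to the gap between the prediction and the average prediction. This is a hypothetical illustration, not the API of the `shap` package, and the feature names, weights, and data are invented; nonlinear models need LIME, SHAP, or DALEX proper.

```python
from typing import List, Sequence, Tuple

def predict(weights: Sequence[float], bias: float, x: Sequence[float]) -> float:
    return bias + sum(w * xi for w, xi in zip(weights, x))

def linear_shap(weights: Sequence[float], bias: float,
                background: Sequence[Sequence[float]],
                x: Sequence[float]) -> Tuple[float, List[float]]:
    """Shapley values for a linear model, assuming independent features.

    Returns (base_value, contributions), where base_value is the model's average
    output over the background data and contributions[i] = w_i * (x_i - mean_i).
    """
    n = len(background)
    means = [sum(row[i] for row in background) / n for i in range(len(weights))]
    base_value = predict(weights, bias, means)  # equals the mean prediction for a linear model
    contributions = [w * (xi - m) for w, xi, m in zip(weights, x, means)]
    return base_value, contributions

# Hypothetical credit-scoring model with three features.
features = ["years_employed", "late_payments", "savings_ratio"]
weights, bias = [0.5, -2.0, 1.0], 1.0
background = [[1.0, 0.0, 2.0], [3.0, 1.0, 4.0], [2.0, 2.0, 0.0]]
applicant = [4.0, 0.0, 3.0]

base, contribs = linear_shap(weights, bias, background, applicant)
for name, c in zip(features, contribs):
    print(f"{name:>15}: {c:+.2f}")
# base 2.00 + contributions 4.00 = prediction 6.00
print(f"base {base:.2f} + contributions {sum(contribs):.2f} = prediction {predict(weights, bias, applicant):.2f}")
```

The additivity check in the last line is the property that makes Shapley-style explanations auditable: every unit of the prediction is attributed to some feature.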

| AI Model Type | Transparency Level | Common Applications |
|---|---|---|
| Black-box AI | Low | Fraud detection in financial sectors |
| White-box AI | High | Data-sensitive areas (e.g., healthcare) |
| Explainable AI (XAI) | High | Areas needing careful decision-making |

Transparent AI systems build trust: they let users and the public check that AI aligns with our ethics. As AI spreads, the focus on explainability will need to grow with it, leading to AI that better fits our values.

Shouldering the Weight of AI Accountability: Who is Liable for Autonomous Decisions?

AI accountability has become a central question in AI ethics. As AI evolves from a simple tool into an independent decision-maker, it creates a legal puzzle: who is responsible when AI goes wrong? Answering that question is critical to maintaining trust in technology.

Any discussion of ethics in AI model development has to examine how AI's choices affect people. Because AI systems are complex, it is hard to determine who is to blame when they act on their own.

Consider a large survey that asked people in many countries who should be blamed when autonomous surgical robots make mistakes. It shows why clear rules are needed for who is accountable when AI systems are deployed.

| Scenario | Blame Allocation | Public Opinion |
|---|---|---|
| Surgeon oversight with Robot Assistance | Surgeon | Moderate Agreement |
| Robot with Minor Autonomy | Shared between Robot and Surgeon | Mixed Opinions |
| Fully Autonomous Robot | Robot/Manufacturer | Increasing Shift Towards Manufacturer |
| No Human Involvement | Robot Manufacturer | Strong Blame on Manufacturer |

The results show that the more a robot acts on its own, the more people blame its manufacturer. This highlights the urgent need for solid AI ethics policies, which will help shape how AI technology develops.

The European Commission is working to make AI-related law clearer, including simplifying how fault is determined when AI causes harm. These efforts could help lead to worldwide standards for AI responsibility.

Handling AI accountability requires everyone to work together. Educators, lawmakers, and AI developers must join forces so that AI can keep improving while staying safe and fair for everyone.

The Intersection of Art and Algorithm: Is AI-Generated Content Ownable?

Artificial intelligence is rapidly changing the art world, and AI-generated art now competes with traditional art. This raises big questions about who owns an artwork when AI helps create it.

A New Frontier for Intellectual Property Rights

AI technologies such as generative adversarial networks (GANs) are more than tools; they act as part of the creative team. This blurs the line between human artists and machines, so our existing rules about ownership need a second look.

AI art is now appearing in galleries and becoming a bigger part of how art is made today. We need new laws to handle works created jointly by humans and AI.

Clarifying Legal Boundaries in the Age of Creative AI

Who should own AI-created art: the people who built the AI, the artists who use it, or even the AI itself? Copyright disputes are becoming more common as AI produces art at a pace no human can match.

Fair rules matter. They ensure that everyone who contributes to a work gets their share, which encourages innovation and keeps the art world fair.

Understanding how art and AI interact could change how we think about ownership in creative work. As we work through these questions, the relationship between technology and tradition continues to evolve, and with it our ideas about making and owning art.

The Double-Edged Sword: AI's Power in Shaping Public Perception

The swift rise of artificial intelligence (AI) brings both opportunities and risks, especially for public perception. As the technology evolves, so do concerns about social manipulation and AI-driven misinformation. These challenges demand ethical reflection and decisive action.

AI shapes how we receive information on social platforms, which makes it a powerful force in public discussion and opinion. That power is sometimes misused, and such misuse can undermine democracy and social cohesion. Fighting AI misinformation requires solid plans for ensuring AI is used ethically.

Deepfakes and targeted algorithms can distort reality by making fabricated stories look real. This threatens the integrity of information and calls for measures to protect our digital information ecosystem.

Meeting these challenges requires constant vigilance, new solutions, and collaboration across sectors. Here are ways to promote responsible use of AI in public communication:

- Regulatory frameworks: Create strict rules for using AI in media and public discourse, aligned with ethical guidelines.

- Transparency in AI algorithms: Require tech companies to disclose how their AI systems work, especially systems that generate or distribute content.

- AI literacy initiatives: Teach the public how AI is used in media so people are better equipped to resist false information.

- Robust fact-checking mechanisms: Use AI to strengthen fact-checking so false information can be corrected quickly.

As we deal with AI's complex role in society, ensuring ethical use is crucial. We must all commit to using AI with care and honesty, so that we can enjoy its benefits while guarding against social manipulation.

"In an era where AI shapes public perception, proactive engagement in ethical practices is not optional but a necessity."

AI's potential for positive influence is large, but because it can be used for good or ill, we must act carefully and responsibly. Our next steps should be guided by policies that promote openness, accountability, and ethical reflection, so that AI serves the public while safeguarding democratic values and social harmony.

Safeguarding the Sanctity of Privacy in the Data-Driven Realms of AI

In today's fast-changing digital world, AI privacy, AI security, and data protection are at the forefront of both technological development and regulation. Last year, Australia saw over 400 major data breaches, which makes clear how crucial stronger data protection is.

The health, finance, and insurance sectors are especially at risk because attackers target the sensitive data they hold. AI can substantially strengthen security: organizations that use it have been reported to detect and contain data breaches more than 100 days faster, a clear sign of its value against cyber threats.

Yet only 28% of companies make full use of AI to prevent data breaches, leaving a large opportunity to improve security across sectors. The General Data Protection Regulation (GDPR) is central here: it provides a strong framework for data protection that applies whenever AI systems process personal data. Complying with it helps protect privacy and build trust in AI technology.
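The GDPR explicitly names pseudonymisation as one safeguard for personal data. A common approach, sketched below under stated assumptions, replaces direct identifiers with a keyed hash (HMAC-SHA256) so records can still be linked for analysis without exposing the raw identifier. The function names, field names, and key are hypothetical, and the key must be stored separately from the data. Under the GDPR, pseudonymised data is still personal data, so this reduces risk but does not take the data out of the regulation's scope.

```python
import hashlib
import hmac
from typing import Dict, List

def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Map an identifier to a stable token with HMAC-SHA256.

    Normalizing first means 'Alice@Example.com' and 'alice@example.com '
    map to the same token. Without the key, a token cannot be recomputed
    from a guessed identifier, unlike a plain unkeyed hash.
    """
    normalized = identifier.strip().lower().encode("utf-8")
    return hmac.new(secret_key, normalized, hashlib.sha256).hexdigest()

def pseudonymize_records(records: List[Dict], id_field: str, secret_key: bytes) -> List[Dict]:
    """Return copies of `records` with `id_field` replaced by its token."""
    result = []
    for record in records:
        copy = dict(record)
        copy[id_field] = pseudonymize(copy[id_field], secret_key)
        result.append(copy)
    return result

# Hypothetical key; in practice load it from a secrets manager, never hard-code it.
KEY = b"replace-with-a-randomly-generated-secret"
claims = [
    {"email": "Alice@Example.com", "claim_amount": 1200},
    {"email": "alice@example.com ", "claim_amount": 300},
    {"email": "bob@example.org", "claim_amount": 950},
]
for row in pseudonymize_records(claims, "email", KEY):
    print(row)  # the first two rows share a token, so they can still be linked
```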

Using AI to protect data lets organizations respond quickly to potential breaches, helping to safeguard individuals' privacy and keep organizations secure. Promising directions include:

- Using AI to detect threats before they cause damage

- Greater use of AI in assessing how new projects affect privacy

- Using AI to strengthen encryption
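Threat detection in practice relies on machine-learning models trained on large volumes of security telemetry, but the underlying idea of flagging activity that deviates sharply from normal can be shown with a simple statistical baseline. The sketch below is a hypothetical illustration, not any security product's method: it applies the modified z-score (based on the median and the median absolute deviation) to hourly counts of failed logins. The 3.5 cutoff is a commonly cited rule of thumb, and the data are invented.

```python
import statistics
from typing import List, Sequence

def flag_anomalies(counts: Sequence[float], threshold: float = 3.5) -> List[int]:
    """Return indices of counts whose modified z-score exceeds `threshold`.

    The modified z-score uses the median and the median absolute deviation
    (MAD), so a single extreme burst does not inflate the baseline the way
    it would with a mean and standard deviation.
    """
    median = statistics.median(counts)
    mad = statistics.median([abs(c - median) for c in counts])
    if mad == 0:
        # At least half the values equal the median; flag anything that differs.
        return [i for i, c in enumerate(counts) if c != median]
    return [
        i for i, c in enumerate(counts)
        if abs(0.6745 * (c - median) / mad) > threshold
    ]

# Hypothetical hourly failed-login counts for one account.
failed_logins = [4, 5, 3, 6, 4, 5, 48, 4, 3, 5]
print(flag_anomalies(failed_logins))  # [6]: the hour with 48 failures
```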

| Sector | Reported Data Breaches | Cost Savings through AI ($) |
|---|---|---|
| Healthcare | 120 | 600,000 |
| Finance | 95 | 550,000 |
| Insurance | 85 | 650,000 |

To address AI privacy concerns and strengthen AI security, firms must embrace AI, but they must also advocate for strict rules and ethical AI use.

As AI transforms the field, combining technological innovation with strong security will be key. By adopting these technologies, we can build a safer online future.

AI at Work: A Vision of Automated Efficiency and the Underlying Workforce Shift

Industries are rapidly adopting AI, transforming employment and the workforce. These changes bring both challenges and opportunities to the global economy, and retraining programs are vital if workers are to thrive in this new digital era.

Retraining the Human Workforce in the AI Era

The need for retraining has never been more urgent because of AI. These programs prepare employees for an AI-enhanced workplace. They focus on skills like digital literacy and problem-solving in automated settings.

The transition is not merely about technology; it's about shaping an agile, knowledgeable, and innovative human workforce capable of working alongside advanced AI systems.

Envisioning the New Landscape of Employment

AI automates some tasks but also creates jobs that require human creativity and intelligence. Managers are now redesigning roles so that people and AI work well together, aiming for genuine collaboration between humans and machines.

Companies are shifting toward roles that use AI to support decision-making. This blend of human and AI skills is opening new opportunities and underscores the need for flexible employment policies. Growing areas of work include:

- Continuous learning and improvement of AI systems

- Development of human-AI interaction models

- Critical analysis and management of AI-driven data

Both workers and companies must see job automation as a chance for growth. Engaging in retraining lets the workforce update their abilities for the future. This strategy reduces the risks of losing jobs to AI and takes advantage of new possibilities.

Navigating the Ethical Minefield: The Emergence of Autonomous Weapons Systems

The rise of AI-enabled autonomous weapons in defense has created serious ethical and regulatory dilemmas. These challenges raise concerns about accountability and misuse and show the need for strong international rules. It is important to understand these issues and to push for careful control that meets ethical AI standards.

Autonomous weapons can select and engage targets without human input, including decisions to take human lives. This forces us to ask how much we should trust machines with critical decisions, and it makes human oversight vital in such serious scenarios. Still, we cannot ignore the possibility that ethical AI could lower the risk to human lives in conflict zones by being more precise and making fewer mistakes.

| Concern | Statistic |
|---|---|
| Humanitarian concerns with autonomous weapons | 75% of surveyed experts raise concerns |
| Ethical decision-making in combat | 65% significance rate |
| Transparency in AI weapon systems | 85% demand for accountability |

There's a big divide worldwide on how to manage autonomous weapons. This mirrors the different views on ethical AI and responsible use by countries. Some nations focus on clarity and human oversight, while others boost their military with new tech. This situation shows the need for worldwide agreement and open talks.

AI Governance: Crafting the Policy Compass for Tech Stewardship

The complexity of today's technology landscape makes AI governance more important than ever. As society relies more on algorithms for major decisions, strong AI policies are needed to ensure technology is used responsibly. These policies should promote fairness and accountability and set the rules for how AI operates, balancing innovation with safety.

Getting everyone involved in AI governance is key. We need to hear from tech experts, policy makers, ethicists, and people everywhere. Having a wide range of viewpoints helps make sure AI develops in a way that's good for everyone. It shows how important it is to include different perspectives in tech and policy making.

The main steps to take for good AI governance are:

- Getting involved early in talks about AI rules to stop problems before they start.

- Working to understand and follow GDPR and other privacy laws to protect users' data rights.

- Using regulatory sandboxes for testing AI in safe ways, making it easier to bring new ideas to life.

- Making sure a lot of different people have a say in AI policy, to balance new ideas with ethical concerns.

We need a clear plan for AI governance to navigate its ethical challenges. Recognizing AI's big influence on society is a must. It affects everything from job hiring to how much privacy people have. A flexible governance system is crucial. It helps us enjoy AI's benefits while avoiding problems like unfair data use and privacy risks.

This approach to AI governance looks not just at today's issues but also future ones. As AI gets more advanced, our policies need to keep pace. They must make sure AI helps society in ethical ways.

Summary

AI is becoming a major part of our lives, so we must use it wisely and take its ethics seriously. Making AI's decisions understandable and grasping its risks are not abstract ideas; they are real challenges we face now.

Building AI responsibly should be our first step, not an afterthought. This means thinking about ethics from the start. It's key to making technology that's safe and fair for everyone.

How we approach AI ethics will shape our future. We need to address problems such as keeping data safe, avoiding AI errors, and ensuring AI treats everyone fairly. Doing so helps build a world where technology is fair and accountable.

FAQ

What are the primary ethical considerations in AI model development?

Key ethical concerns in AI include ensuring AI actions are moral and responsible. This covers AI governance and handling issues like transparency, bias, fairness, privacy, and security. These aspects are vital when integrating AI into society.

How can we achieve ethical AI and a path towards responsible technology?

To achieve ethical AI, we focus on fairness in algorithms and making AI's workings clear. We also prioritize privacy and commit to responsible AI practices. This means considering AI's social impact at all development stages.

What risks do societal biases in data present to AI algorithms?

Societal biases in data can lead to AI making unfair decisions. This can make existing social inequalities worse through automated decisions.

How can discrimination in machine learning be mitigated?

To fight discrimination, we need diverse data sets and AI decisions that can be explained. It is also important to have clear rules for what happens when an AI system's decisions turn out to be biased.

What is the "black box" issue in AI, and why is transparency important?

The "black box" issue means it's hard to see how AI makes decisions. Transparency is key so we can trust and understand AI decisions. This is really important in critical areas like healthcare and justice.

Who holds liability for autonomous decisions made by AI systems?

Responsibility generally falls on the AI's developers or users, but the exact allocation varies from case to case and across jurisdictions. There is an ongoing debate about setting clear rules for when AI causes harm.

Can AI-generated content be legally owned, and if so, by whom?

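AI content ownership is complex. Depending on the jurisdiction, it could belong to the AI's programmer or the data provider, or be treated as a joint work, and in some jurisdictions content with no human author may not be protected at all. Laws are still catching up to these new issues.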

What are the potential consequences of AI's capability for social manipulation?

AI can manipulate society by spreading false information, affecting democracy and stability. We need to stay vigilant and fight misleading content to protect truth and trust.

Why are privacy and security vital ethical considerations in AI?

AI systems handle large amounts of personal information, so it is crucial to protect that data from breaches and misuse. Doing so safeguards both our privacy and the integrity of the data.

How will AI impact the workforce, and what strategies can be implemented to address these changes?

AI may replace some jobs but also create new ones. We can help workers by offering retraining. Policies should also help ensure a fair transition for all.

How can AI governance shape the responsible deployment of AI technologies?

AI governance sets rules for ethical AI use. It ensures AI respects moral standards. It involves many stakeholders to direct AI for the good of society.
