Ensuring label fairness and bias reduction in data labeling

Data labeling is a critical step in machine learning in which humans annotate raw data to create training sets for algorithms. The accuracy and fairness of these labels directly affect both how well machine learning models perform and how ethically they behave. In recent years, concern has grown about bias and unfairness in data labeling, which can lead to unintended consequences and harm to individuals or communities.

To address these challenges, it is essential to understand why fairness and bias reduction matter in data labeling and to identify where bias can enter the process. In this article, we explore best practices for ensuring fairness in data labeling and strategies for reducing bias. We also discuss the role of human oversight in ensuring label fairness, how to evaluate the effectiveness of fairness and bias reduction measures, and the challenges and opportunities for the future of this field.

Understanding The Importance Of Fairness And Bias Reduction In Data Labeling

Label bias and selection bias are two common sources of biased training data in machine learning. Historical data that reflects past human decisions, such as loan approvals or hiring outcomes, can also carry bias into the process, as can features that are correlated with protected attributes and act as proxies for them. This is a significant issue because biased data leads to inaccurate and unfair predictions that can harm individuals and communities.
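One way to look for proxies is to measure how strongly each candidate feature correlates with a protected attribute. The sketch below is a minimal illustration, assuming numeric features, a protected attribute encoded as 0/1, and an arbitrary threshold of 0.5; the function names and the `postcode_area` and `years_experience` features are hypothetical. A low correlation does not rule out a proxy, because combinations of features can still encode the attribute.

```python
import math


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient; returns 0.0 if either input is constant."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length sequences with at least two items")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sd_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    sd_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if sd_x == 0 or sd_y == 0:
        return 0.0
    return cov / (sd_x * sd_y)


def flag_proxies(features: dict[str, list[float]], protected: list[int],
                 threshold: float = 0.5) -> dict[str, float]:
    """Return features whose absolute correlation with the protected attribute exceeds threshold."""
    flagged = {}
    for name, values in features.items():
        r = pearson(values, protected)
        if abs(r) > threshold:
            flagged[name] = round(r, 3)
    return flagged


# Hypothetical toy data: 1 marks membership in the protected group.
protected = [1, 1, 1, 0, 0, 0]
features = {
    "postcode_area": [1, 1, 1, 0, 0, 0],     # mirrors group membership exactly
    "years_experience": [5, 3, 4, 5, 3, 4],  # same distribution in both groups
}
print(flag_proxies(features, protected))  # {'postcode_area': 1.0}
```

Simply dropping the protected attribute from the data would leave `postcode_area` in place, which is why a check like this is run on the remaining features.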

To mitigate these biases, data scientists should track fairness metrics throughout the machine learning pipeline. Bias can be addressed at three stages. Early-stage (pre-processing) mitigation focuses on building representative, well-labeled datasets. Mid-stage (in-processing) mitigation changes how the model is trained, for example by adding fairness constraints to the optimization objective. Late-stage (post-processing) mitigation intervenes after training, for example by adjusting decision thresholds or applying post-processing algorithms to the model's outputs.
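Tracking fairness metrics starts with choosing which ones to compute. The sketch below shows two common choices, assuming binary (0/1) labels and predictions and a single group attribute: demographic parity difference (the gap in positive-prediction rates between groups) and equal opportunity difference (the gap in true positive rates). The data and function names are hypothetical; libraries such as Fairlearn and AIF360 provide maintained implementations of related metrics.

```python
def _positive_rate(values: list[int]) -> float:
    """Share of 1s in a non-empty list of 0/1 values."""
    return sum(values) / len(values)


def demographic_parity_difference(y_pred: list[int], group: list[str]) -> float:
    """Largest minus smallest positive-prediction rate across groups (0.0 means parity)."""
    rates = [
        _positive_rate([p for p, g in zip(y_pred, group) if g == name])
        for name in sorted(set(group))
    ]
    return max(rates) - min(rates)


def equal_opportunity_difference(y_true: list[int], y_pred: list[int],
                                 group: list[str]) -> float:
    """Largest minus smallest true positive rate across groups.

    Groups with no positive examples are skipped, since their TPR is undefined.
    """
    rates = []
    for name in sorted(set(group)):
        preds_on_positives = [p for t, p, g in zip(y_true, y_pred, group)
                              if g == name and t == 1]
        if preds_on_positives:
            rates.append(_positive_rate(preds_on_positives))
    return max(rates) - min(rates)


# Hypothetical labels, predictions, and group memberships.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
group = ["a", "a", "a", "a", "b", "b", "b", "b"]

print(demographic_parity_difference(y_pred, group))         # 0.5
print(equal_opportunity_difference(y_true, y_pred, group))  # 0.5
```

Here group "a" receives positive predictions 75% of the time and group "b" only 25%, and qualified members of "b" are recognized half as often. Which metric matters most depends on the application, and the two can conflict.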

Incorporating diverse perspectives from people of different genders, ethnicities, races, ages, and sexual orientations is also necessary when creating a fair dataset. Beyond that, algorithmic bias checks should be run when collecting new training data and when evaluating models. The ultimate goal is to reduce the likelihood that implicit biases or false assumptions inherited from historical precedent shape the model, so that its predictions are accurate for everyone regardless of characteristics such as gender or ethnicity.

It is therefore important to build label fairness and bias reduction into the data labeling process itself, especially where automated decisions made through AI systems affect diverse groups unequally.

Identifying Potential Sources Of Bias In Data Labeling

One important concern in data labeling is ensuring that the process is fair and free from bias. Data labeling often combines human input with technology, and relying on people to make judgments can introduce bias. Labelers may mislabel items or apply their own discretion when translating, tagging, or labeling information, producing inaccurate and potentially discriminatory results.
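One way to catch inconsistent or discretionary labeling is to have two labelers annotate the same items and measure how well they agree. The sketch below computes Cohen's kappa, which corrects raw agreement for the agreement expected by chance, both overall and separately for each group of items. The labels, groups, and function names are illustrative assumptions.

```python
from collections import Counter
from collections.abc import Hashable, Sequence


def cohens_kappa(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Chance-corrected agreement between two labelers on the same items."""
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    counts_a, counts_b = Counter(a), Counter(b)
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if expected == 1.0:  # both labelers used one identical label for every item
        return 1.0
    return (observed - expected) / (1 - expected)


def kappa_by_group(a, b, group):
    """Cohen's kappa computed separately on the items belonging to each group."""
    result = {}
    for name in sorted(set(group)):
        idx = [i for i, g in enumerate(group) if g == name]
        result[name] = cohens_kappa([a[i] for i in idx], [b[i] for i in idx])
    return result


# Hypothetical annotations of six items by two labelers.
labeler_1 = ["pos", "pos", "neg", "neg", "pos", "neg"]
labeler_2 = ["pos", "neg", "neg", "neg", "pos", "neg"]
group = ["x", "x", "x", "y", "y", "y"]

print(round(cohens_kappa(labeler_1, labeler_2), 3))  # 0.667
print({g: round(k, 3) for g, k in kappa_by_group(labeler_1, labeler_2, group).items()})
# {'x': 0.4, 'y': 1.0}
```

Agreement that is noticeably lower for one group suggests its items are being labeled less consistently. With only three items per group this toy example is far too small to draw conclusions; real checks need much larger samples.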

To mitigate these sources of bias, label names and categories should be chosen thoughtfully, with respect for the humanity and individuality of the people being labeled. Avoiding labels that equate people with a condition (for example, "diabetics" rather than "people with diabetes") or that turn adjectives into nouns (for example, "the elderly") helps keep harmful stereotypes out of the resulting models. Organizations should also document how they select and clean data, so that sources of bias can be traced through root-cause analysis in future model iterations.

In addition to thoughtful labeling practices, monitoring for outliers through statistical analysis and checking samples of training data for representativeness can help reduce bias. Transparency throughout model development and selection lets people identify possible biases before they become ingrained in the system. Where blinded outcome assessment is not possible, other means such as careful sampling techniques are good options. Ultimately, identifying sources of bias through careful analysis leads to more accurate models that serve everyone without discriminatory results.
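Checking a sample of training data for representativeness can be as simple as comparing each group's share of the sample with its share of a reference population. The sketch below assumes such reference shares are available (for example from census or customer data) and flags any group whose sample share falls below 80% of its reference share; the function name, the tolerance, and the data are hypothetical choices.

```python
from collections import Counter


def representation_gaps(sample_groups: list[str],
                        reference_shares: dict[str, float],
                        tolerance: float = 0.8) -> dict[str, tuple[float, float]]:
    """Flag groups whose share of the sample is below tolerance * reference share.

    Returns {group: (sample_share, reference_share)} for each flagged group.
    Groups missing from the sample get a share of 0.0.
    """
    n = len(sample_groups)
    counts = Counter(sample_groups)
    gaps = {}
    for name, ref_share in reference_shares.items():
        share = counts.get(name, 0) / n
        if share < tolerance * ref_share:
            gaps[name] = (round(share, 3), ref_share)
    return gaps


# Hypothetical labeled sample of 100 items and reference population shares.
sample = ["a"] * 70 + ["b"] * 25 + ["c"] * 5
reference = {"a": 0.5, "b": 0.3, "c": 0.2}
print(representation_gaps(sample, reference))  # {'c': (0.05, 0.2)}
```

Group "b" is slightly under-represented but stays within the tolerance, while group "c" makes up 5% of the sample against 20% of the reference population.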

Best Practices For Ensuring Fairness In Data Labeling

When it comes to data labeling, fairness is key. However, ensuring fair labeling can be challenging, particularly as the volume of data requiring labels grows. Best practices for fair labeling aim to keep cost and time under control while still producing accurate datasets that lead to high-quality models.

Organizations need guidelines, rules, and procedures for identifying potential bias in datasets, communicating those findings effectively, and mitigating any issues that arise. This includes selecting appropriate statistical measures of "fairness" and adjusting the algorithm to produce a more equitable result.

Pre-processing techniques can reduce bias in training data by transforming the data before machine learning algorithms use it. Post-processing the output of an algorithm can also address bias in model decisions. Legal regulations play a critical role as well: nondiscrimination laws apply to digital practices, and an anti-discrimination effort similar to the one human resources applies to hiring is needed when forming the teams that will build and deploy these technologies.
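Nondiscrimination rules often reason about selection rates. In US employment, for instance, the Uniform Guidelines on Employee Selection Procedures use a "four-fifths rule" of thumb: a group's selection rate below 80% of the highest group's rate is generally regarded as evidence of adverse impact. The sketch below computes that ratio on model decisions. It is an illustrative check on hypothetical data with hypothetical function names, not a legal test, and the applicable rules vary by jurisdiction.

```python
def selection_rates(decisions: list[int], group: list[str]) -> dict[str, float]:
    """Share of positive (1) decisions within each group."""
    rates = {}
    for name in sorted(set(group)):
        members = [d for d, g in zip(decisions, group) if g == name]
        rates[name] = sum(members) / len(members)
    return rates


def disparate_impact_ratio(decisions: list[int], group: list[str]) -> float:
    """Lowest group selection rate divided by the highest one (1.0 means equal rates)."""
    rates = selection_rates(decisions, group)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group receives any positive decision
    return min(rates.values()) / highest


# Hypothetical approve (1) / reject (0) decisions for two groups.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
group = ["a", "a", "a", "a", "b", "b", "b", "b"]

ratio = disparate_impact_ratio(decisions, group)
print(round(ratio, 3), ratio < 0.8)  # 0.333 True
```

Group "b" is selected at one third of group "a"'s rate, well below the 0.8 threshold, so these decisions would warrant review.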

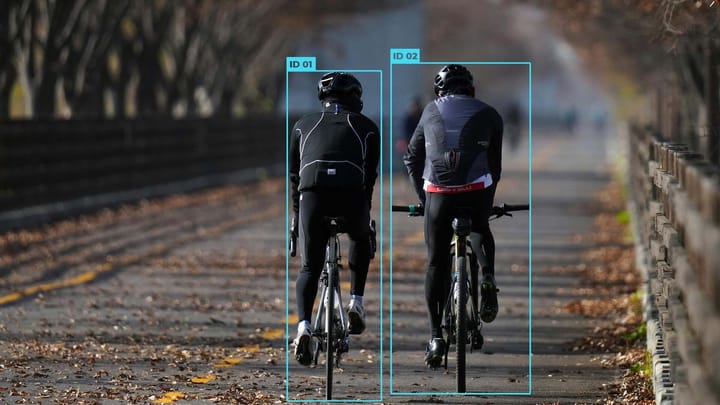

Open-source data-labeling tools, whose behavior can be inspected, can help reduce biases built into the annotation tools themselves, for example when identifying objects in raw images or video streams. Some automation is useful for speed, but relying on machines alone introduces new kinds of biased decision-making into work that needs closer consideration from humans evaluating ethical use cases. The challenge is to balance practicality with responsibility as AI methods are deployed in more industries. Regularly reviewing and educating teams about their own unconscious biases increases awareness and helps address the underlying causes of prejudice in labeling decisions.

Strategies For Reducing Bias In Data Labeling

Label bias is a significant challenge in data labeling and can result in an unfair model. Organizations can address it by adopting strategies that reduce bias and ensure label fairness. One approach to building a fair machine learning model is pre-processing, in which bias is identified and addressed during data generation, before the model is trained.

There are three main categories of bias reduction in machine learning. Data pre-processing identifies and removes biased records, or balances them with counter-examples, to reduce their impact on the final model. Data post-processing adjusts the model's output to remove any remaining bias. Algorithm augmentation modifies the learning algorithm itself so that it produces fairer output.
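As one concrete example of the pre-processing category, the reweighing technique of Kamiran and Calders keeps every record but assigns it a weight so that group membership and label become statistically independent in the weighted data: weight = P(group) * P(label) / P(group, label). The sketch below assumes binary labels, a single group attribute, and hypothetical data and function names; the resulting weights would then be passed to a learner that accepts per-sample weights.

```python
from collections import Counter


def reweighing_weights(labels: list[int], group: list[str]) -> list[float]:
    """Per-record weight P(g) * P(y) / P(g, y), estimated from counts."""
    n = len(labels)
    group_counts = Counter(group)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(group, labels))
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(group, labels)
    ]


def weighted_positive_rate(labels, group, weights, name):
    """Weighted share of positive labels within one group."""
    total = sum(w for g, w in zip(group, weights) if g == name)
    positive = sum(w for y, g, w in zip(labels, group, weights) if g == name and y == 1)
    return positive / total


# Hypothetical labels: group "a" is labeled positive far more often than group "b".
labels = [1, 1, 1, 0, 1, 0, 0, 0]
group = ["a", "a", "a", "a", "b", "b", "b", "b"]
weights = reweighing_weights(labels, group)

print([round(w, 3) for w in weights])  # [0.667, 0.667, 0.667, 2.0, 2.0, 0.667, 0.667, 0.667]
print(round(weighted_positive_rate(labels, group, weights, "a"), 3),
      round(weighted_positive_rate(labels, group, weights, "b"), 3))  # 0.5 0.5
```

Under-represented combinations (a negative label in group "a", a positive label in group "b") are up-weighted, so the weighted positive rate is the same in both groups while no record is deleted.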

Bias is an ongoing challenge for AI systems, but organizations can mitigate dataset bias by setting up guidelines, rules, and procedures for identifying it. When assessing the fairness of active data collection strategies, it is also important to account for label bias. Label bias occurs when the labeling process systematically assigns incorrect labels, for example by misclassifying members of certain groups because of stereotypes or prejudice.
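A simple screen for this kind of systematic mislabeling is to compare how often each labeler assigns the positive label to items from different groups. The sketch below assumes binary labels, records of the form (labeler, group, label), and that items are assigned to labelers at random, so a large per-labeler gap is a reason to review that labeler's work rather than proof of bias. The 0.3 threshold, function names, and labeler IDs are hypothetical.

```python
from collections import defaultdict


def labeler_group_gaps(records: list[tuple[str, str, int]]) -> dict[str, float]:
    """For each labeler: highest minus lowest positive-label rate across groups.

    A labeler who only saw one group gets a gap of 0.0.
    """
    # labeler -> group -> [positive count, total count]
    tallies = defaultdict(lambda: defaultdict(lambda: [0, 0]))
    for labeler, group, label in records:
        tally = tallies[labeler][group]
        tally[0] += label
        tally[1] += 1
    gaps = {}
    for labeler, by_group in tallies.items():
        rates = [pos / total for pos, total in by_group.values()]
        gaps[labeler] = max(rates) - min(rates)
    return gaps


def flag_labelers(records, max_gap: float = 0.3) -> list[str]:
    """Labelers whose between-group gap exceeds max_gap, sorted by ID."""
    return sorted(labeler for labeler, gap in labeler_group_gaps(records).items()
                  if gap > max_gap)


# Hypothetical records: (labeler_id, group, label)
records = [
    ("labeler_1", "a", 1), ("labeler_1", "a", 0), ("labeler_1", "b", 1), ("labeler_1", "b", 0),
    ("labeler_2", "a", 1), ("labeler_2", "a", 1), ("labeler_2", "b", 0), ("labeler_2", "b", 0),
]
print(labeler_group_gaps(records))  # {'labeler_1': 0.0, 'labeler_2': 1.0}
print(flag_labelers(records))       # ['labeler_2']
```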

Reducing label bias plays an essential role in building accurate models that do not wrongly disadvantage particular demographic groups. Organizations should adopt strategies such as proactive data collection that help remove these biases from their datasets, so that their models treat all demographics and users equitably.

The Role Of Human Oversight In Ensuring Label Fairness And Bias Reduction

Labeling data to train AI algorithms can lead to bias and fairness issues. These problems can arise from incorrect or imprecise labeling, as well as from the human biases of the people building machine learning models. Several approaches have been proposed for reducing bias in algorithms, including data pre-processing, data post-processing, and algorithm augmentation.

Another way to reduce bias is through human oversight and agency in ensuring label fairness. Human oversight helps ensure that the labeled dataset meets ethical standards and does not perpetuate social or cultural biases. Technical robustness is also crucial in a fair labeling process: the algorithms used in labeling should be tested across different settings with diverse samples of labeled instances.

Hand-labeling is not always practical, given its cost and potential risks, so ensuring label fairness in automated labeling processes depends on prioritizing safety while maintaining technical effectiveness. Alternative methods such as crowdsourcing are increasingly popular for large-scale labeling projects but come with their own challenges. A continually reviewed set of guidelines covering anonymity and transparency procedures can increase equity and foster public trust in automated decision-making systems, which benefit from low-bias datasets that accurately reflect real-world experience.
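One common way to handle crowdsourced labels is to collect several votes per item, take the majority, and send uncertain items to trained reviewers. The sketch below assumes every item has at least one vote and uses a hypothetical 70% agreement threshold; the function name and item IDs are also hypothetical. Ties and low-agreement items are routed to review instead of being resolved automatically, which keeps human oversight on the hardest cases.

```python
from collections import Counter


def aggregate_votes(votes_by_item: dict[str, list[str]],
                    min_agreement: float = 0.7) -> tuple[dict[str, str], list[str]]:
    """Majority-vote each item; return (accepted labels, items needing review)."""
    accepted, needs_review = {}, []
    for item, votes in votes_by_item.items():
        ranked = Counter(votes).most_common()
        top_label, top_count = ranked[0]
        tied = len(ranked) > 1 and ranked[1][1] == top_count
        if tied or top_count / len(votes) < min_agreement:
            needs_review.append(item)
        else:
            accepted[item] = top_label
    return accepted, needs_review


# Hypothetical crowdsourced votes per item.
votes = {
    "img1": ["cat", "cat", "cat"],
    "img2": ["cat", "dog", "cat"],
    "img3": ["cat", "dog"],
}
accepted, needs_review = aggregate_votes(votes)
print(accepted)      # {'img1': 'cat'}
print(needs_review)  # ['img2', 'img3']
```

A natural extension is to track which groups' items end up in the review queue most often, since persistent disagreement concentrated on one group can itself signal label bias.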

Evaluating The Effectiveness Of Fairness And Bias Reduction Measures In Data Labeling

Data labeling plays a crucial role in creating machine learning models, but it can be subject to bias and unfairness. Two of the main causes of bias in machine learning models are label bias and selection bias. Label bias results from incorrect or subjective labeling of data, while selection bias occurs when certain groups are overrepresented or underrepresented in the training data.

Human biases can further degrade label quality, leading to unfair outcomes for certain groups. This highlights the importance of measuring label fairness with metrics that correspond to different notions of fairness. Post-processing techniques can reduce bias in machine learning models, but detecting bias accurately in the first place remains a key challenge.

Another cause of unfairness in algorithmic decision-making is outcome proxy bias, which occurs when a proxy metric is used in place of the outcome that actually matters (for example, using past healthcare costs as a stand-in for healthcare needs). This can produce incorrect predictions that disadvantage specific groups. To mitigate this issue, newer approaches adjust the predicted scores of a trained recommender system based on learned or observed differences between privileged and unprivileged groups.
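The exact adjustment methods vary, but the basic idea can be illustrated with a deliberately simple post-processing step: shift each group's scores so that every group's mean score matches the overall mean. This is a hypothetical sketch, not the specific technique those approaches use; it assumes scores on a common scale and a known group attribute, the function name is made up for illustration, and shifted scores can fall outside the original range.

```python
from statistics import fmean


def shift_scores(scores: list[float], group: list[str]) -> list[float]:
    """Subtract each group's offset from the overall mean score."""
    overall = fmean(scores)
    group_means = {
        name: fmean([s for s, g in zip(scores, group) if g == name])
        for name in set(group)
    }
    return [s - (group_means[g] - overall) for s, g in zip(scores, group)]


# Hypothetical scores for a privileged ("p") and unprivileged ("u") group.
scores = [0.8, 0.6, 0.4, 0.2]
group = ["p", "p", "u", "u"]
adjusted = shift_scores(scores, group)

print([round(s, 2) for s in adjusted])  # [0.6, 0.4, 0.6, 0.4]
print([s > 0.5 for s in adjusted])      # [True, False, True, False]
```

With a 0.5 decision threshold, both selected items originally came from group "p"; after the shift, each group has one item above the threshold. Whether such a correction is appropriate depends on whether the score gap reflects proxy bias or a genuine difference in the outcome.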

To evaluate the effectiveness of fairness and bias reduction measures in data labeling, it is important to monitor accuracy and discrimination levels both before and after the measures are implemented. Debiased data can produce models with greater accuracy and lower discrimination; when it does, this indicates that the measures have succeeded in reducing the biases introduced during labeling. Overall, prioritizing label fairness when building machine learning models helps ensure that all users receive fair treatment regardless of their characteristics.
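A before-and-after comparison can be scripted so that the same metrics are computed on the same evaluation set for both models. The sketch below assumes binary labels and predictions, uses accuracy and the gap in positive-prediction rates between groups as the two measures, and runs on hypothetical toy predictions with hypothetical function names.

```python
def accuracy(y_true: list[int], y_pred: list[int]) -> float:
    """Share of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def parity_gap(y_pred: list[int], group: list[str]) -> float:
    """Largest minus smallest positive-prediction rate across groups."""
    rates = []
    for name in sorted(set(group)):
        preds = [p for p, g in zip(y_pred, group) if g == name]
        rates.append(sum(preds) / len(preds))
    return max(rates) - min(rates)


def compare(y_true, group, before, after) -> dict[str, tuple[float, float]]:
    """(before, after) values of each metric on the same evaluation set."""
    return {
        "accuracy": (accuracy(y_true, before), accuracy(y_true, after)),
        "parity_gap": (parity_gap(before, group), parity_gap(after, group)),
    }


# Hypothetical evaluation set and predictions from two models.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
group = ["a", "a", "a", "a", "b", "b", "b", "b"]
before = [1, 1, 1, 0, 1, 0, 0, 0]  # model trained on the original labels
after = [1, 0, 0, 0, 1, 1, 0, 0]   # model trained on the debiased labels

print(compare(y_true, group, before, after))
# {'accuracy': (0.75, 0.875), 'parity_gap': (0.5, 0.25)}
```

In practice the evaluation set should be large enough, and ideally resampled, for differences like these to be meaningful.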

The Future Of Fairness And Bias Reduction In Data Labeling: Challenges And Opportunities

Labeling bias is a critical issue that affects data accuracy and fairness, especially in machine learning models. Incorrect or imprecise labels can introduce biases that produce undesired outcomes and ultimately affect future decisions based on the model's outputs. Leadership can take action to help AI systems evolve to be free of bias. Attributes such as race, gender, and religion are often removed from training data to tackle bias, although correlated proxy features can still carry the same information. Language barriers between labelers can also make consistent dataset quality harder to achieve.

Each stage of data processing carries a risk of unknowingly introducing bias. Group indicators such as race or religion can carry different meanings across demographics, and these differences create uncertainty in fair labeling practices, so labelers need specific awareness of them. Bias in an AI system reveals information about the datasets used to train it; providing unbiased labeled data will support responsible AI growth and real-world applications.

Overcoming the challenges of biased datasets means considering context-driven factors such as language, semantic interpretation within particular cultures or minority populations, and socio-economic background; reducing these sources of error helps deliver better results. Labeling that is accurate and free from prejudice requires communication at every level of an organization. Collaboration is key: leaders should foster transparency about how labeled data is acquired, ensure that the data represents diversity accurately from different angles, and build community partnerships. All stakeholders should commit to ethical standards that go beyond regulatory compliance, with the aim of building responsible tools that serve the development of an equitable society and of moving algorithmic processes ever closer to zero bias.
