Edge AI Security and Model Protection Strategies

Unlike cloud systems, edge devices are scattered across homes, factories, and public spaces. They are much easier to access physically, and the models they run are valuable assets. For companies, these models often represent years of investment, and the devices that run them may handle highly personal or business-critical data. If attackers compromise these devices, they can steal models, tamper with predictions, or leak sensitive information. That is why model encryption, adversarial robustness, and secure inference are no longer optional: they are necessary safeguards for anyone deploying AI at the edge.

Threats to Edge AI

Physical risks. Devices deployed in public or remote areas can be stolen or tampered with. Once an attacker has physical access, they may try to extract model files or manipulate the hardware.

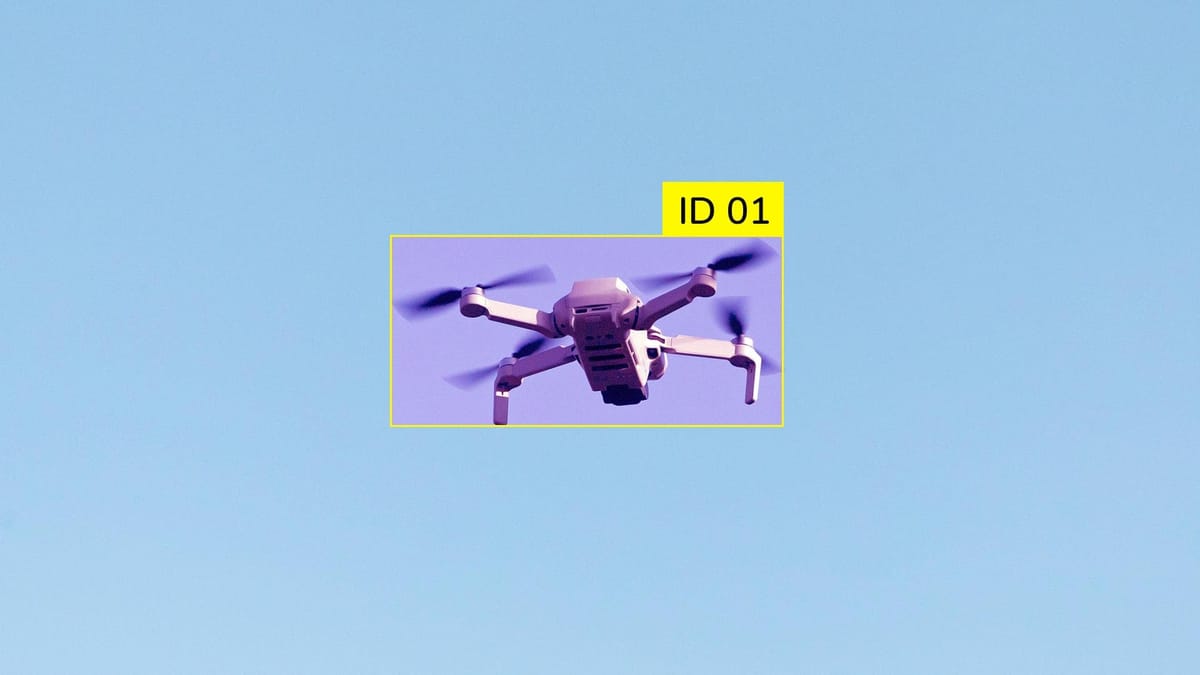

Model attacks. AI systems are attractive targets because models themselves are valuable. Attackers may attempt to copy them using model extraction, recover private training data through model inversion, or feed adversarial inputs crafted to fool the model. These attacks can cause devices to make dangerous mistakes, such as misidentifying objects or granting unauthorized access.

Weak update systems. Since many edge devices rely on firmware and model updates, insecure over-the-air (OTA) processes open them to code injection or downgrade attacks.

Data leaks. Even when raw data stays local, logs or feature outputs can reveal more than expected, creating privacy concerns.

Examples already exist in the wild. Security researchers have shown how adversarial images can mislead vision systems in self-driving cars. Botnets like Mirai have exploited unsecured IoT devices at scale.

Core Principles of Model Protection

The first principle is confidential computing, which keeps data and models protected while they are in use, not just when they are stored or transmitted.

The second is a layered defense strategy. Security cannot rely on a single tool or setting. Instead, hardware safeguards, cryptography, monitoring, and organizational policies must all work together.

The third principle is balance. Edge devices are small, power-limited, and often need fast response times. Therefore, security methods like model encryption or adversarial training must be designed to protect models without slowing them down so much that they lose their purpose.

Technical Strategies for Protecting Edge AI Models

Protection During Execution

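- Trusted Execution Environments (TEEs). Technologies like ARM TrustZone and Intel SGX create isolated spaces where secure inference can occur. Even if the operating system is compromised, the model inside is designed to stay out of its reach, although side-channel attacks against TEEs mean they should not be the only defense.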

- Secure Boot and attestation. Devices that verify their software during startup can block malicious code. With attestation, remote servers can confirm a device is genuine and uncompromised before trusting its results.

- Memory protection. Encrypting memory areas where models are loaded makes it harder for attackers to read or change parameters while a model runs.
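
The attestation idea above can be illustrated with a short challenge-response sketch. It is a simplification under stated assumptions: real attestation relies on hardware-rooted asymmetric keys and vendor-specific quote formats, whereas this example uses a shared HMAC key as a stand-in. The names `DEVICE_KEY`, `measure`, `device_quote`, and `verify_quote` are hypothetical and not part of any TEE SDK.

```python
import hashlib
import hmac
import secrets

# Hypothetical per-device secret. On real hardware this would be held in a
# TEE or secure element, never in ordinary application memory.
DEVICE_KEY = secrets.token_bytes(32)


def measure(firmware: bytes) -> bytes:
    """Return a SHA-256 measurement of the firmware and model image."""
    return hashlib.sha256(firmware).digest()


def device_quote(key: bytes, nonce: bytes, firmware: bytes) -> bytes:
    """Device side: bind the verifier's fresh nonce to the current measurement."""
    return hmac.new(key, nonce + measure(firmware), hashlib.sha256).digest()


def verify_quote(key: bytes, nonce: bytes, expected: bytes, quote: bytes) -> bool:
    """Verifier side: recompute the quote for the known-good measurement."""
    reference = hmac.new(key, nonce + expected, hashlib.sha256).digest()
    return hmac.compare_digest(reference, quote)  # constant-time comparison


good_firmware = b"firmware v1.2 + model weights"
tampered_firmware = b"firmware v1.2 + backdoored weights"
known_good = measure(good_firmware)

nonce = secrets.token_bytes(16)  # fresh per request, so old quotes cannot be replayed
genuine_ok = verify_quote(DEVICE_KEY, nonce, known_good,
                          device_quote(DEVICE_KEY, nonce, good_firmware))
tampered_ok = verify_quote(DEVICE_KEY, nonce, known_good,
                           device_quote(DEVICE_KEY, nonce, tampered_firmware))
print(genuine_ok)   # True
print(tampered_ok)  # False
```

The server would only trust inference results from a device whose quote verifies. The nonce matters as much as the measurement: without it, an attacker could record one valid quote and replay it after modifying the device.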

Protecting Models as Intellectual Property

- Model encryption and obfuscation. Encrypting models ensures stolen files are useless without the correct keys. Obfuscation adds an extra layer, making reverse engineering more complex.

- Watermarking and fingerprinting. Hidden patterns embedded in a model can later prove ownership or reveal leaks. Fingerprints also allow the detection of cloned models in unauthorized systems.

- Defense against extraction. Limiting how often an API can be queried, monitoring suspicious usage, and inserting protective noise in responses can make it far harder for attackers to copy a model.
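
As a rough illustration of the extraction defenses in the last bullet, the sketch below combines a token-bucket rate limiter with coarsened responses. It is an assumption-laden example rather than a prescribed design: it rounds the confidence and returns only the top label instead of adding random noise, and `TokenBucket`, `coarsen`, and `guarded_predict` are hypothetical names. The clock is injectable so the behavior can be shown deterministically.

```python
import time
from typing import Callable, List, Optional


class TokenBucket:
    """Allow bursts of up to `capacity` queries, refilled at `rate` per second."""

    def __init__(self, capacity: int, rate: float,
                 clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, capped at the bucket capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


def coarsen(probabilities: List[float], decimals: int = 1) -> dict:
    """Expose only the top label and a rounded confidence, not the full vector."""
    top = max(range(len(probabilities)), key=probabilities.__getitem__)
    return {"label": top, "confidence": round(probabilities[top], decimals)}


def guarded_predict(bucket: TokenBucket, model: Callable, x) -> Optional[dict]:
    """Return a coarsened prediction, or None when the caller is over quota."""
    if not bucket.allow():
        return None
    return coarsen(model(x))


# Demo with a fake clock and a stand-in model that always returns the same scores.
now = [0.0]
bucket = TokenBucket(capacity=2, rate=1.0, clock=lambda: now[0])
fake_model = lambda x: [0.07, 0.81, 0.12]

results = [guarded_predict(bucket, fake_model, None) for _ in range(3)]
print(results)  # two coarsened answers, then None once the bucket is empty

now[0] = 1.0  # one second later, one token has been refilled
after_refill = guarded_predict(bucket, fake_model, None)
print(after_refill)
```

Returning less information per query raises the number of queries an attacker needs, and the rate limit caps how quickly those queries can be made. In practice the bucket would be kept per client or per API key.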

Defending Against Adversarial Examples

- Adversarial training. Including adversarial examples in training helps models learn how to resist manipulation.

- Input monitoring. Lightweight detectors can spot suspicious inputs far outside standard data patterns, adding an early warning layer.

- Robust optimization. Training methods focused on stability can improve adversarial robustness, making models less sensitive to small, malicious changes.
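
To make adversarial training more concrete, here is a minimal sketch using the fast gradient sign method (FGSM) on a tiny logistic-regression model. FGSM is one common way to generate adversarial examples and is chosen here as an assumption; the article does not prescribe a specific method. The weights, input, and the helper names `fgsm` and `adversarial_step` are illustrative only.

```python
import math
from typing import List, Tuple


def predict(w: List[float], b: float, x: List[float]) -> float:
    """Probability of class 1 under logistic regression."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def loss(w: List[float], b: float, x: List[float], y: int) -> float:
    """Binary cross-entropy for a single example."""
    p = predict(w, b, x)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def sign(g: float) -> int:
    return (g > 0) - (g < 0)


def fgsm(w: List[float], b: float, x: List[float], y: int, eps: float) -> List[float]:
    """x_adv = x + eps * sign(dL/dx); for this model dL/dx = (p - y) * w."""
    p = predict(w, b, x)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]


def adversarial_step(w: List[float], b: float, x: List[float], y: int,
                     eps: float, lr: float) -> Tuple[List[float], float]:
    """One gradient step on the average of the clean and adversarial loss."""
    x_adv = fgsm(w, b, x, y, eps)
    grad_w = [0.0] * len(w)
    grad_b = 0.0
    for sample in (x, x_adv):
        err = predict(w, b, sample) - y
        grad_w = [g + 0.5 * err * si for g, si in zip(grad_w, sample)]
        grad_b += 0.5 * err
    return [wi - lr * g for wi, g in zip(w, grad_w)], b - lr * grad_b


w, b = [2.0, -1.0], 0.0
x, y = [0.5, 0.2], 1

x_adv = fgsm(w, b, x, y, eps=0.3)
print(predict(w, b, x), predict(w, b, x_adv))  # the small perturbation lowers the score

w2, b2 = adversarial_step(w, b, x, y, eps=0.3, lr=0.5)
print(loss(w, b, x_adv, y), loss(w2, b2, x_adv, y))  # loss on x_adv before and after
```

A real training loop would repeat this step over the whole dataset and regenerate the adversarial examples against the current weights each time. The extra forward and backward pass roughly doubles training cost, which is paid once at training time rather than on the edge device.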

Protecting Data and User Privacy

- Federated learning. With this approach, devices train locally and send only model updates to the server, which aggregates them. Raw data never leaves the device, which supports privacy-friendly training.

- Differential privacy. Adding calibrated statistical noise to data or updates limits how much any single record can influence what is shared, making it much harder to reconstruct individual records even if information is intercepted.

- Homomorphic encryption. Though still resource-heavy, this allows computations to be performed on encrypted data. It makes secure inference possible for sensitive cases without exposing the underlying data.
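
The first two techniques are often combined. The sketch below shows a server averaging client updates after clipping each one and adding Gaussian noise, in the spirit of differentially private federated averaging. It is only an illustration: the `noise_std` value needed for a formal privacy guarantee depends on the clip norm, the number of clients, and the privacy budget, none of which is derived here, and the function names are hypothetical.

```python
import math
import random
from typing import List

Vector = List[float]


def clip(update: Vector, max_norm: float) -> Vector:
    """Scale an update so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(u * u for u in update))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [u * scale for u in update]


def aggregate(updates: List[Vector], max_norm: float,
              noise_std: float, rng: random.Random) -> Vector:
    """Average the clipped updates, then add Gaussian noise per coordinate."""
    clipped = [clip(u, max_norm) for u in updates]
    n = len(clipped)
    mean = [sum(column) / n for column in zip(*clipped)]
    return [m + rng.gauss(0.0, noise_std) for m in mean]


# Each device computes its update from local data and sends only the update.
client_updates = [
    [0.2, -0.1],
    [3.0, 4.0],   # unusually large update; clipping bounds its influence
    [0.1, 0.3],
]

rng = random.Random(0)  # seeded only to make the demo repeatable
exact = aggregate(client_updates, max_norm=1.0, noise_std=0.0, rng=rng)
noisy = aggregate(client_updates, max_norm=1.0, noise_std=0.05, rng=rng)
print(exact)
print(noisy)
```

Clipping is what makes the noise meaningful: once no single device can shift the average by more than a bounded amount, a modest amount of noise is enough to mask any individual contribution.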

Lifecycle Security and Updates

Secure OTA updates. Updates must be signed and verified so only legitimate patches are installed. This keeps devices current and blocks the code injection and downgrade attacks that insecure update channels allow.

Patch management. If updates fail, companies need clear policies for version control and safe rollback.

Monitoring. Centralized oversight can detect unusual activity across thousands of devices, signaling potential breaches before they spread.

Lifecycle security ensures that protection stays strong throughout a device’s years of operation.
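
The update checks described in this section can be sketched as follows. This is a simplified example under clear assumptions: it uses an HMAC tag as a stand-in for a real asymmetric signature (such as Ed25519), because a production device should hold only a public verification key, never the signing key. `UpdatePackage`, `build_update`, `accept_update`, and the version-floor policy are hypothetical names chosen for illustration.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass
class UpdatePackage:
    version: int
    payload: bytes
    tag: bytes


def sign(key: bytes, version: int, payload: bytes) -> bytes:
    """Authenticate the version together with the payload so neither can be swapped."""
    message = version.to_bytes(4, "big") + payload
    return hmac.new(key, message, hashlib.sha256).digest()


def build_update(key: bytes, version: int, payload: bytes) -> UpdatePackage:
    """Vendor side: package and sign a release."""
    return UpdatePackage(version, payload, sign(key, version, payload))


def accept_update(key: bytes, min_version: int, pkg: UpdatePackage) -> bool:
    """Device side: verify the tag, then enforce the version floor."""
    if not hmac.compare_digest(sign(key, pkg.version, pkg.payload), pkg.tag):
        return False  # forged or corrupted package
    if pkg.version < min_version:
        return False  # below the floor: refuse known-vulnerable releases
    return True


key = b"demo-key-for-illustration-only"
floor = 4  # oldest release the device is still allowed to run

new_release = build_update(key, 5, b"model-v5")
rollback_target = build_update(key, 4, b"model-v4")
old_release = build_update(key, 2, b"model-v2")
tampered = UpdatePackage(5, b"malicious payload", new_release.tag)

print(accept_update(key, floor, new_release))      # True
print(accept_update(key, floor, rollback_target))  # True: safe rollback allowed
print(accept_update(key, floor, old_release))      # False: downgrade refused
print(accept_update(key, floor, tampered))         # False: tag does not match
```

Using a version floor instead of a strict "newer only" rule is one way to reconcile downgrade protection with safe rollback: a failed update can fall back to a recent known-good release, while releases with known vulnerabilities stay blocked.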

Standards and Organizational Measures

International frameworks such as ISO/IEC 27001, NIST standards, and ETSI IoT guidelines provide structured approaches to cybersecurity. Regulations such as GDPR and HIPAA add further obligations where devices handle personal or health data, which is common in healthcare, finance, and consumer technology.

Equally important is cooperation. Hardware vendors, model developers, and end users must align on security practices. From model encryption to secure inference, each layer only works well if the ecosystem treats protection as a shared priority.

Challenges and Trade-offs

- Balancing performance with security. Strong model encryption or anomaly detection can strain limited hardware resources. Solutions must be designed to be secure without slowing down inference.

- Cost considerations. Features like TEEs increase device cost. Organizations must decide how much to invest in protection and where the trade-offs lie.

- Scaling up. Managing updates, encryption keys, and monitoring across huge fleets of devices is complex. Automation and centralized orchestration are essential to scale secure inference safely.

The Future of Edge AI Security

New lightweight model encryption methods are emerging that better suit resource-limited devices. Research into quantum-safe cryptography aims to prepare for future risks. Meanwhile, AI-driven monitoring will increasingly detect threats in real time, helping devices respond to attacks without constant human oversight.

As 5G and 6G networks expand, devices will gain faster links to the cloud. This hybrid model will improve update delivery and monitoring, but also require careful attention to new attack surfaces. Future success will depend on combining edge protection with cloud-based oversight for secure inference at scale.

Summary

Edge AI is reshaping industries, but without strong security, it cannot deliver its full potential. Protecting models through model encryption, ensuring adversarial robustness, and maintaining secure inference are the pillars of trustworthy edge deployments. Companies that take model protection seriously will defend their intellectual property and build systems that users can depend on in the long term.

FAQ

What makes edge AI security different from cloud AI security?

Edge devices operate outside protected data centers and are often deployed in public or remote environments. This makes them more vulnerable to tampering and highlights the need for model encryption and secure inference strategies.

Why is model encryption necessary for edge AI?

Model encryption protects proprietary AI models from theft or reverse engineering. Even if an attacker extracts model files, encryption ensures they remain unusable without the correct keys.

What is adversarial robustness in edge AI?

Adversarial robustness refers to a model’s ability to withstand manipulated inputs designed to trick it. Strengthening robustness is critical in safety-critical environments like healthcare or autonomous driving.

How does secure inference protect sensitive data?

Secure inference ensures that predictions can be made on sensitive data without exposing it to attackers. Trusted execution environments isolate the computation from the rest of the device, while homomorphic encryption lets a model compute on data that stays encrypted.

What are common threats to edge AI systems?

Threats include physical tampering, model extraction, adversarial attacks, insecure OTA updates, and data leaks. Each requires targeted defenses like model encryption and monitoring systems.

How do trusted execution environments improve security?

Trusted Execution Environments (TEEs) create isolated zones for secure inference. They shield model parameters and computations from unauthorized access, even if the operating system is compromised.

What methods improve adversarial robustness in practice?

Adversarial training, anomaly detection, and robust optimization techniques strengthen adversarial robustness. These approaches make attacks harder and increase trust in model predictions.

How do lifecycle updates contribute to edge AI security?

Secure OTA updates and patch management keep devices resilient against evolving threats. Regular updates also let vendors ship retrained, more robust models and fix vulnerabilities that would otherwise undermine secure inference.

What trends define the future of edge AI security?

The future includes lightweight model encryption, quantum-safe cryptography, and AI-driven anomaly detection. These innovations will support scalable, secure inference across millions of devices.
