Distributed Annotation Across Edge Networks: Scalability & Security

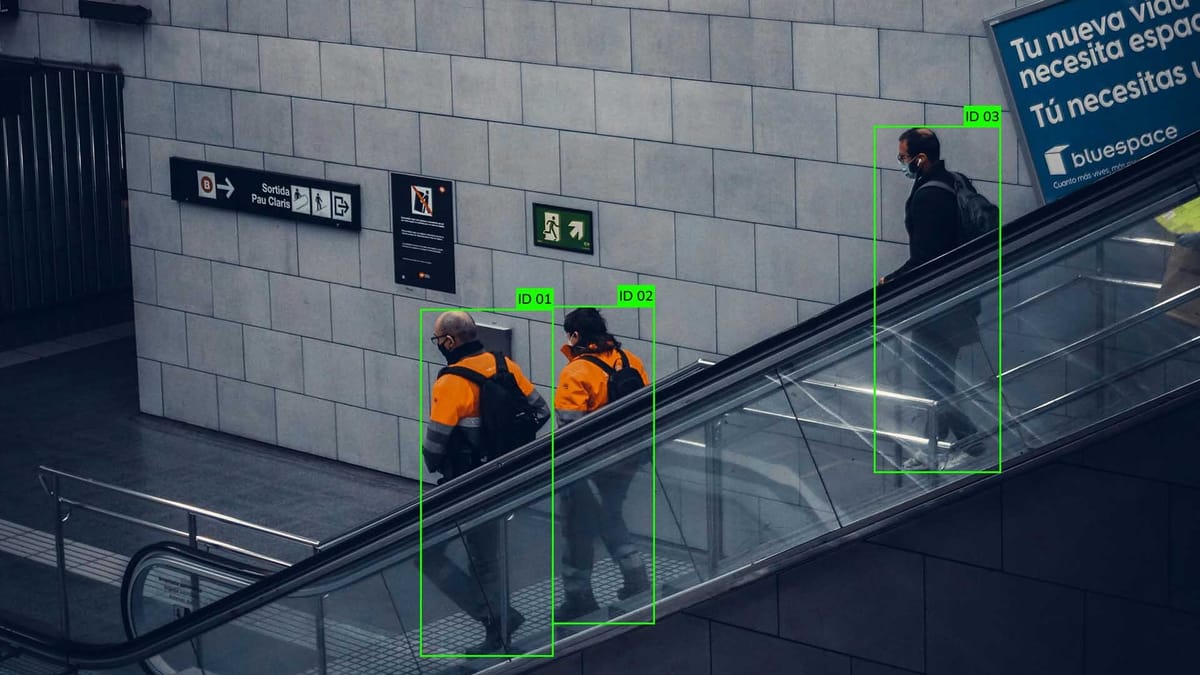

In the modern edge computing environment, distributed annotation across edge networks is becoming increasingly relevant. It aims to improve the efficiency of data processing and analytics systems, reduce latency, and minimize the amount of data transmitted to centralized cloud infrastructure.

One of the core mechanisms in this approach is collaborative labeling, in which multiple edge devices jointly annotate data, coordinate their actions, and integrate the results. To use shared computational resources efficiently and ensure quality of service, operators rely on network slicing, which divides a single physical network into isolated logical "slices" that meet different requirements for reliability, speed, and security. Data partitioning, which distributes datasets across nodes and logical network segments, enables coordinated, large-scale processing while reducing transmission costs and maintaining device autonomy.

Understanding Distributed Annotation Networks

A distributed annotation network is an architecture in which data labeling is executed across multiple decentralized nodes, often located at the network edge. Instead of transmitting raw data to a centralized data center, annotation is performed locally, reducing bandwidth consumption and latency while improving privacy. In such environments, collaborative labeling allows multiple edge nodes to share annotation tasks and collectively refine labeling accuracy through consensus mechanisms or model-assisted validation.
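As a rough illustration of the consensus idea, the sketch below merges labels proposed by several edge nodes using a simple majority vote. The function name `consensus_label`, the node names, and the 0.6 agreement threshold are assumptions made for this example, not part of any specific platform.

```python
from collections import Counter

def consensus_label(votes, min_agreement=0.6):
    """Return (label, agreement) for one item.

    `votes` maps a node id to the label that node proposed.
    The label is None when the most common label falls below
    the (assumed) agreement threshold and needs manual review.
    """
    if not votes:
        return None, 0.0
    counts = Counter(votes.values())
    label, top_count = counts.most_common(1)[0]
    agreement = top_count / len(votes)
    if agreement < min_agreement:
        return None, agreement
    return label, agreement

# Hypothetical votes from three edge nodes for a single image.
votes = {"edge-a": "car", "edge-b": "car", "edge-c": "truck"}
label, agreement = consensus_label(votes)
```

Items that return `None` can be routed to an additional annotator or to model-assisted validation instead of being accepted automatically.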

Operators often employ network slicing to manage traffic efficiently and ensure that annotation tasks do not compete with other critical network functions. This technique creates dedicated logical channels for annotation workflows, each tailored to specific performance and security requirements. Complementing this, data partitioning distributes datasets across nodes in a way that balances load, minimizes redundancy, and maintains data relevance for each processing unit.
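One simple way to partition a dataset is to hash each item identifier and map it to a node, so every item lands on exactly one node without central coordination. The sketch below assumes string item ids and a fixed node list; `assign_node` and `partition` are hypothetical helper names.

```python
import hashlib

def assign_node(item_id, nodes):
    """Map an item id to one node using a stable hash."""
    digest = hashlib.sha256(item_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(nodes)
    return nodes[index]

def partition(item_ids, nodes):
    """Group item ids into one shard per node."""
    shards = {node: [] for node in nodes}
    for item_id in item_ids:
        shards[assign_node(item_id, nodes)].append(item_id)
    return shards

# Hypothetical node names and item ids.
nodes = ["edge-1", "edge-2", "edge-3"]
shards = partition([f"frame-{i}" for i in range(100)], nodes)
```

Modulo hashing reassigns most items when the node list changes. If nodes join and leave frequently, consistent hashing is a common alternative.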

Defining Decentralized Annotation and Its Importance

Decentralized annotation is an approach in which data labeling is distributed across multiple independent nodes or devices, often located at the network edge. This model departs from the traditional centralized structure, reducing reliance on a single server and ensuring privacy, availability, and computational efficiency.

A real-world example comes from the Sahara AI pilot project, focused on decentralized data collection and labeling. It achieved 92% verification accuracy, with annotated data that passed peer review also confirmed by internal QA checks. This demonstrates that decentralized approaches can maintain high quality at scale when supported by the right incentives and validation mechanisms.

Benefits for Scalability and Security in AI

- Improved workload distribution through collaborative labeling - enables multiple nodes to share annotation tasks, reducing bottlenecks and ensuring consistent performance as systems scale.

- Efficient resource utilization via network slicing - isolates annotation processes from other network operations, guaranteeing bandwidth, latency, and security requirements even under heavy load.

- Optimized data handling through data partitioning - divides datasets across edge nodes to balance processing loads, minimize redundancy, and reduce transmission overhead.

- Faster system scaling without central dependency - eliminates single points of failure by allowing annotation and processing to occur in parallel across decentralized infrastructure.

- Enhanced data security at the edge - keeps sensitive information local, lowering the risk of large-scale breaches during transmission.

- Stronger resilience against network attacks - isolated network slices and distributed processing make it harder for malicious actors to disrupt operations globally.

- Reduced operational costs - localized annotation and data handling decrease reliance on expensive centralized cloud processing.

- Adaptive performance under varying loads - the combination of collaborative labeling and network slicing allows systems to maintain quality of service in dynamic environments.

Step-by-Step Guide to Implementing Distributed Annotation Networks

By leveraging collaborative labeling among edge devices, the system can scale gracefully and maintain high performance. Effective network slicing ensures that annotation tasks are delivered within isolated, quality-assured channels, protecting performance and security.

A notable illustration of this approach can be found in Webknossos, an open-source platform designed for annotating massive 3D microscopy datasets in neuroscience, ranging from tens of terabytes to full petabytes. By enabling numerous distributed contributors to work directly in their browsers, the system eliminates the need to transfer massive datasets, sidestepping the logistical hurdles of physical data movement. This model demonstrates how decentralized annotation can combine scalability, by managing data at very large volumes, with security, by keeping sensitive information local to the user's environment.

Planning Your Annotation Strategy

The foundation of a successful distributed annotation network lies in a well-designed strategy that defines how data will be labeled, shared, and validated across edge nodes. At this stage, the objective is to align annotation requirements with available infrastructure, ensuring that collaborative labeling efforts are efficient and consistent.

Integrating network slicing into the planning phase allows annotation tasks to operate within dedicated, isolated channels, preventing performance degradation from competing network traffic. Likewise, thoughtful data partitioning ensures that datasets are logically segmented and distributed, minimizing redundancy while maximizing processing speed at each node.
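During planning, it can help to write down what each annotation workload needs from the network and check it against the slices an operator offers. The sketch below is purely illustrative: `SliceProfile`, `TaskRequirement`, `pick_slice`, and all numbers are hypothetical and do not correspond to any real slicing API.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class SliceProfile:
    name: str
    latency_ms: float       # latency bound the slice guarantees
    bandwidth_mbps: float   # bandwidth the slice guarantees
    encrypted: bool

@dataclass(frozen=True)
class TaskRequirement:
    max_latency_ms: float
    min_bandwidth_mbps: float
    needs_encryption: bool

def pick_slice(task, slices):
    """Return the least over-provisioned slice that satisfies the task, or None."""
    candidates = [
        s for s in slices
        if s.latency_ms <= task.max_latency_ms
        and s.bandwidth_mbps >= task.min_bandwidth_mbps
        and (s.encrypted or not task.needs_encryption)
    ]
    if not candidates:
        return None
    # Prefer the qualifying slice with the smallest bandwidth guarantee.
    return min(candidates, key=lambda s: s.bandwidth_mbps)

# Hypothetical slice catalogue and one interactive labeling workload.
slices = [
    SliceProfile("bulk-upload", latency_ms=200, bandwidth_mbps=50, encrypted=False),
    SliceProfile("interactive", latency_ms=20, bandwidth_mbps=100, encrypted=True),
]
chosen = pick_slice(TaskRequirement(50, 20, True), slices)
```

A `None` result signals during planning that no existing slice fits the workload, so a new slice or relaxed requirements would be needed.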

Integrating with Existing Data Management Systems

When implementing a distributed annotation network, seamless integration with existing data management systems is essential for maintaining operational efficiency and avoiding workflow disruptions. This stage involves ensuring that annotated datasets can be stored, accessed, and versioned within the organization's current infrastructure, whether it relies on cloud platforms, on-premise servers, or hybrid solutions.

Through collaborative labeling, annotations produced by multiple edge nodes can be synchronized in near real time with central repositories, enabling cross-team visibility and streamlined quality control. Employing network slicing ensures that annotation data streams remain isolated from other high-priority operations, reducing the risk of bandwidth contention or performance drops.
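Synchronization with a central repository is easier to reason about when each annotation record carries a version number and the merge is idempotent. The following is a minimal sketch under that assumption; the record fields `item_id`, `version`, and `label` and the in-memory dictionary standing in for the repository are hypothetical.

```python
def merge_annotations(central, incoming):
    """Upsert incoming records into `central`, keeping the highest version per item.

    Returns the number of records actually applied, so re-sending the
    same batch after a network retry applies nothing.
    """
    applied = 0
    for record in incoming:
        current = central.get(record["item_id"])
        if current is None or record["version"] > current["version"]:
            central[record["item_id"]] = record
            applied += 1
    return applied

# Hypothetical batch arriving from one edge node.
central = {}
batch = [
    {"item_id": "a", "version": 1, "label": "cat"},
    {"item_id": "b", "version": 1, "label": "dog"},
]
first = merge_annotations(central, batch)
retry = merge_annotations(central, batch)
```

Here the retried batch changes nothing, which is the property that makes near-real-time synchronization safe over unreliable edge links. Conflicting edits at the same version would still need a separate resolution rule.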

Ensuring Data Governance, Security, and Quality

Governance begins with clear policies defining data ownership, usage rights, and retention periods. These policies ensure that every participant in the collaborative labeling process operates under the same compliance framework. Depending on the data domain, these policies should align with relevant industry regulations and privacy laws, such as GDPR or HIPAA.

Security is reinforced through network slicing, which isolates annotation traffic from other network operations, reducing the risk of data leakage or unauthorized access. Encryption in transit and at rest further protects sensitive information, while access controls ensure that only authorized nodes or contributors can modify specific datasets.

Quality assurance benefits from a combination of automated and manual validation workflows. By applying data partitioning, teams can break datasets into manageable segments, each of which undergoes targeted quality checks before being merged into the final repository.
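A per-partition quality gate could look like the sketch below, which compares each partition against a small set of trusted "gold" labels and merges only the partitions that pass. The 0.9 accuracy threshold, the gold-label approach, and the function names are assumptions for illustration.

```python
def partition_passes(annotations, gold, min_accuracy=0.9):
    """Check one partition against the gold labels it overlaps with."""
    checked = [item for item in gold if item in annotations]
    if not checked:
        return False  # no gold items to check against: do not auto-merge
    correct = sum(annotations[item] == gold[item] for item in checked)
    return correct / len(checked) >= min_accuracy

def merge_validated(partitions, gold, min_accuracy=0.9):
    """Merge partitions that pass the gate; return (merged, rejected names)."""
    merged, rejected = {}, []
    for name, annotations in partitions.items():
        if partition_passes(annotations, gold, min_accuracy):
            merged.update(annotations)
        else:
            rejected.append(name)
    return merged, rejected

# Hypothetical partitions and gold labels.
partitions = {
    "p1": {"a": "cat", "b": "dog"},
    "p2": {"c": "cat", "d": "dog"},
}
gold = {"a": "cat", "c": "dog"}
merged, rejected = merge_validated(partitions, gold)
```

Rejected partitions can then be sent back for manual review rather than silently dropped.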

A system that embeds governance, security, and quality from the outset strengthens trust among stakeholders, paving the way for sustainable and reliable annotation at scale.

Establishing Robust Data Governance Protocols

- Key Governance Components. A structured model, often inspired by frameworks such as DAMA-DMBOK or COBIT, provides a strategic backbone for governance. Clear roles, such as Data Stewards, Data Owners, and a Data Governance Council, ensure that responsibility is distributed effectively across the organization.

- Policies and Procedures. Formalized guidelines detailing data classification, retention, access, sharing, and compliance must be documented and maintained. These policies help maintain consistency and accountability in how annotation data is handled.

- Security and Compliance Measures. Encryption (both at rest and in transit), role-based access control (RBAC), audit logs, and secure protocols are essential to protect sensitive annotation data, especially in regulated environments.

- Data Quality and Lineage. Automated validation, profiling, and cleansing workflows help ensure the accuracy and reliability of annotations. Tracking data lineage, knowing where each data point comes from and how it was transformed, is vital for transparency and auditability.

- Metadata Management and Discoverability. A centralized metadata management system, such as a data catalog, enhances discoverability and self-service capabilities, promoting efficient access to annotation data.
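The access-control and audit-log items above can be sketched together: every authorization decision is checked against a role table and recorded, whether it was allowed or not. The role names, permission sets, and the `authorize` helper are hypothetical and would be replaced by an organization's own policy.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "annotator": {"read", "label"},
    "steward": {"read", "label", "approve"},
    "owner": {"read", "label", "approve", "delete"},
}

def authorize(role, action, dataset, user, audit_log):
    """Return True if `role` may perform `action`; always append an audit entry."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "dataset": dataset,
        "allowed": allowed,
    })
    return allowed

# Hypothetical usage: an annotator may label but not delete.
audit_log = []
can_label = authorize("annotator", "label", "street-scenes", "ana", audit_log)
can_delete = authorize("annotator", "delete", "street-scenes", "ana", audit_log)
```

Logging denied attempts as well as granted ones is what makes the log useful for later compliance review.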

A concrete example can be found at Northern Trust, which implemented a hybrid governance model combining centralized oversight with domain-level autonomy (e.g., data stewards in business units), supported by tools like data catalogs and lineage tracking. As a result, the firm achieved:

- An 80% reduction in compliance risk.

- A 90% improvement in data quality.

Enhancing Machine Learning with Optimized Data Labeling

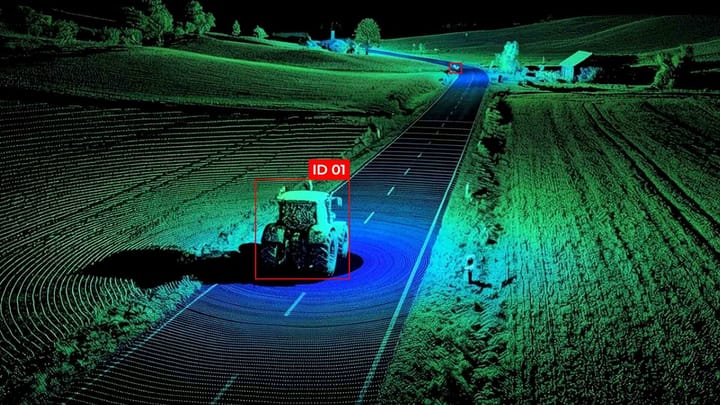

High-quality labeled data is the backbone of effective machine learning models, and distributed annotation networks provide a scalable and secure way to produce it. By leveraging collaborative labeling, multiple edge nodes can annotate datasets simultaneously, increasing throughput and ensuring the model receives diverse, well-validated inputs.

Optimizing data labeling in this way improves the accuracy and generalization of machine learning models and enhances scalability by enabling teams to handle larger datasets. It also strengthens security, because data can remain localized on edge devices, minimizing the need to transfer sensitive information across networks.

Active Learning Techniques to Improve Accuracy

- Uncertainty Sampling. The system selects data points where the model has the lowest confidence for annotation, allowing collaborative labeling efforts to focus on the most informative samples.

- Query by Committee. Multiple models each predict a label for every sample, and the samples on which the committee disagrees most are prioritized for labeling by distributed nodes, enhancing consensus-driven accuracy.

- Diversity Sampling. Actively selects a diverse subset of data to reduce redundancy, leveraging data partitioning to ensure different nodes handle complementary segments of the dataset.

- Expected Model Change. Identifies samples expected to cause the largest change in model parameters once labeled, optimizing resource use across distributed annotators.

- Error-driven Sampling. Targets data points where the current model frequently makes mistakes, helping the system iteratively correct weaknesses.

- Representative Sampling. Ensures that selected samples accurately reflect the overall data distribution, maintaining balance across partitions and reducing labeling bias.

- Incremental Labeling with Network Slicing. Allocates high-priority annotation tasks to dedicated network slices, ensuring low-latency updates for active learning iterations.

- Automated Feedback Loops. Integrates labeled data immediately into model retraining pipelines, so distributed annotation nodes can adjust future labeling priorities dynamically.
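Two of the techniques listed above, uncertainty sampling and query by committee, are compact enough to sketch directly. The example uses least-confidence scoring for uncertainty and vote entropy for committee disagreement; the function names and sample data are hypothetical, and the model predictions are assumed to be available as plain lists.

```python
import math
from collections import Counter

def least_confidence(probs):
    """Uncertainty score: 1 minus the probability of the top class."""
    return 1.0 - max(probs)

def select_uncertain(predictions, k):
    """Pick the k item ids whose predictions are least confident."""
    ranked = sorted(predictions,
                    key=lambda item: least_confidence(predictions[item]),
                    reverse=True)
    return ranked[:k]

def vote_entropy(votes):
    """Disagreement score: entropy of the labels cast by the committee."""
    total = len(votes)
    return -sum((c / total) * math.log(c / total)
                for c in Counter(votes).values())

def select_by_committee(committee_votes, k):
    """Pick the k item ids on which the committee disagrees most."""
    ranked = sorted(committee_votes,
                    key=lambda item: vote_entropy(committee_votes[item]),
                    reverse=True)
    return ranked[:k]

# Hypothetical class probabilities from one model.
predictions = {"img1": [0.9, 0.1], "img2": [0.55, 0.45], "img3": [0.7, 0.3]}
to_label = select_uncertain(predictions, k=2)

# Hypothetical labels predicted by a three-model committee.
committee_votes = {"x": ["a", "a", "a"], "y": ["a", "b", "c"], "z": ["a", "a", "b"]}
most_disputed = select_by_committee(committee_votes, k=1)
```

In a distributed setting, each node could run the selection over its own partition and send only the chosen item ids to annotators.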

Summary

Integrating decentralized annotation with active learning and robust data governance enhances scalability and security, allowing organizations to handle massive datasets without compromising quality or compliance. Real-world implementations, from neuroscience data platforms to decentralized labeling pilots, demonstrate that these principles can be applied successfully to deliver accurate, timely, and secure annotations at scale.

The combination of strategic planning, technical infrastructure, and continuous quality control makes distributed annotation networks an indispensable component of modern AI and machine learning workflows.

FAQ

What is a distributed annotation network?

A distributed annotation network is a system where data labeling occurs across multiple edge nodes rather than a centralized server. By keeping data closer to where it is collected, it improves scalability, reduces latency, and enhances data privacy.

How does collaborative labeling enhance distributed annotation?

Collaborative labeling allows multiple edge devices or nodes to annotate data together, improving accuracy and efficiency. This coordination helps identify inconsistencies early and accelerates large-scale labeling efforts.

What role does network slicing play in distributed annotation networks?

Network slicing creates isolated, dedicated channels for annotation tasks, ensuring consistent bandwidth, low latency, and secure data transmission. It protects annotation workflows from interference by other network traffic.

Why is data partitioning important in edge annotation systems?

Data partitioning distributes datasets across nodes, balancing processing loads and minimizing redundancy. This enables faster labeling, efficient resource use, and better management of large datasets.

How does decentralized annotation improve data security?

By keeping data localized at edge nodes, decentralized annotation reduces the need to transfer sensitive information to a central server. This lowers the risk of large-scale breaches and supports compliance with privacy regulations.

What are active learning techniques, and how do they enhance accuracy?

Active learning techniques, such as uncertainty and diversity sampling, select the most informative or ambiguous data points for annotation. This focuses collaborative labeling on the samples most likely to improve model performance.

How can existing data management systems integrate with distributed annotation networks?

Integration involves synchronizing annotated data with current storage, versioning, and retrieval systems. Network slicing and data partitioning ensure smooth performance and secure, incremental updates without overloading infrastructure.

What governance measures are essential for distributed annotation networks?

Robust data governance includes clear policies, defined roles (like data stewards), compliance with regulations, and audit mechanisms. These measures maintain data quality, security, and accountability throughout annotation.

How does distributed annotation contribute to machine learning scalability?

It allows multiple nodes to process and label data simultaneously, handling larger datasets without central bottlenecks. Combined with collaborative labeling and partitioned workloads, machine learning models can be trained faster and more efficiently.

What are the main benefits of combining collaborative labeling, network slicing, and data partitioning?

These techniques enhance scalability, ensure security, and maintain high-quality annotations. They optimize resource usage, protect sensitive data, and allow large-scale machine learning applications to operate effectively across distributed environments.
