Designing Microtasks: Breaking Down Annotation Jobs for Faster Completion

Breaking down complex tasks into smaller ones, known as microtasking, can improve efficiency and accuracy in creating AI training data.

We'll cover strategies for optimizing workflows, applying cognitive personalization, and implementing quality control mechanisms. We'll also discuss ways to optimize the user interface for worker productivity. This knowledge will help you design microtasks that shorten annotation time while maintaining accuracy.

Key Takeaways

- Microtask design reduces low-quality results.

- Breaking down complex tasks improves data annotation efficiency.

- Cognitive personalization improves task performance.

- Quality control mechanisms support data annotation accuracy.

- Task decomposition streamlines data annotation processes.

Understanding the Role of Data Quality in Crowdsourcing

Data quality in crowdsourcing affects the accuracy and effectiveness of results, as errors or inconsistencies in data annotation lead to incorrect conclusions. Quality control, which includes annotation validation and behavioral analysis, reduces risks and improves the overall outcome.

Worker performance metrics

Worker performance metrics help identify where the annotation workflow can be improved. These include:

- Speed of task completion.

- Accuracy of data annotations.

- Time spent on a task.

Research shows that clearly defined, structured tasks can increase task performance. This highlights the role of thoughtful task design in crowdsourcing projects.
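The metrics listed above can be computed from a simple submission log. The following is a minimal sketch, assuming a hypothetical `Submission` record with a trusted reference answer (`gold_label`) for each item; the field names are illustrative and not tied to any particular platform.

```python
from dataclasses import dataclass


@dataclass
class Submission:
    """One completed microtask (hypothetical record layout)."""
    worker_id: str
    seconds_spent: float
    label: str
    gold_label: str  # trusted reference answer for this item (assumed available)


def worker_metrics(submissions):
    """Return accuracy, mean time per task, and tasks per hour for each worker."""
    by_worker = {}
    for s in submissions:
        by_worker.setdefault(s.worker_id, []).append(s)

    report = {}
    for worker_id, subs in by_worker.items():
        total_seconds = sum(s.seconds_spent for s in subs)
        correct = sum(1 for s in subs if s.label == s.gold_label)
        report[worker_id] = {
            "accuracy": correct / len(subs),
            "mean_seconds_per_task": total_seconds / len(subs),
            # Guard against a zero total so the sketch never divides by zero.
            "tasks_per_hour": len(subs) * 3600 / total_seconds if total_seconds > 0 else 0.0,
        }
    return report
```

In practice, accuracy is often measured only on a subset of items with known answers, so the report reflects a sample rather than every submission.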

Cognitive Personalization in Task Design

Cognitive personalization in task design is an approach that takes annotators' individual characteristics into account to improve the efficiency and quality of data annotation.

Key aspects:

- Difficulty Adaptation. The system adjusts the difficulty of tasks according to the annotator's experience and productivity.

- Interface Optimization. Personalized settings for annotation tools simplify work and reduce cognitive load.

- Motivation Strategy. Gamification or adaptive prompts maintain attention and promote annotator engagement.

- Feedback-Based Learning. The system analyzes errors and recommends materials to improve the annotator's skills.
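Difficulty adaptation, the first aspect above, could look roughly like the sketch below. It assumes difficulty is expressed as an integer level and that recent results are available as a list of correct/incorrect flags; the thresholds `promote_at` and `demote_at` are illustrative values, not recommendations from the source.

```python
def next_difficulty(current_level, recent_results, min_level=1, max_level=5,
                    promote_at=0.9, demote_at=0.6):
    """Choose the next difficulty level from a window of recent results.

    recent_results is a list of booleans (True means the answer was correct).
    All thresholds and level bounds are hypothetical defaults.
    """
    if not recent_results:
        return current_level  # no evidence yet, keep the current level

    accuracy = sum(recent_results) / len(recent_results)
    if accuracy >= promote_at:
        return min(current_level + 1, max_level)
    if accuracy < demote_at:
        return max(current_level - 1, min_level)
    return current_level
```

Moving one level at a time keeps the adjustment gradual, so a single unusual batch does not swing an annotator between very easy and very hard tasks.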

Principles of Microtask Design

Task decomposition involves breaking down large tasks into smaller ones that can be completed quickly and efficiently. Research shows that programming microtasks can reduce adaptation time from 164 to 44 minutes. Decomposition can likewise significantly reduce annotation time in large and complex tasks.
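As an illustration of task decomposition, the sketch below splits one annotation job into one microtask per item and question. The job layout, the `decompose_job` name, and the task ID format are assumptions made for the example.

```python
def decompose_job(item_ids, questions):
    """Split an annotation job into one microtask per (item, question) pair.

    item_ids:  identifiers of the items to annotate (e.g. image file names)
    questions: the individual questions asked about every item
    """
    microtasks = []
    for item_id in item_ids:
        for index, question in enumerate(questions):
            microtasks.append({
                "task_id": f"{item_id}:{index}",  # hypothetical ID scheme
                "item_id": item_id,
                "question": question,
            })
    return microtasks


job = decompose_job(
    ["img_001", "img_002"],
    ["Is a vehicle visible?", "Is the image blurry?"],
)
```

Each resulting microtask asks a single question about a single item, so it can be answered in seconds and checked independently of the others.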

Interface optimization is required for microtask design. A well-thought-out interface reduces cognitive load. Key elements include:

- Clear instructions.

- A clear interface.

- Minimal distractions.

These aspects create an efficient workflow for annotators.

Input field considerations

The design and placement of input fields affect task completion time and error rates.

- Use appropriate field types.

- Use autocomplete features where possible.

- Provide robust field validation.

These measures make the form easier to use and improve data quality.
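A minimal sketch of field validation and prefix-based autocomplete is shown below. The label set, the `confidence` field, and both function names are hypothetical; a real annotation form would define its own fields and rules.

```python
ALLOWED_LABELS = {"positive", "negative", "neutral"}  # hypothetical label set


def suggest_labels(prefix):
    """Autocomplete: return the allowed labels that start with the typed prefix."""
    prefix = prefix.strip().lower()
    return sorted(label for label in ALLOWED_LABELS if label.startswith(prefix))


def validate_annotation(form):
    """Return a list of error messages; an empty list means the form is valid."""
    errors = []

    label = str(form.get("label", "")).strip().lower()
    if label not in ALLOWED_LABELS:
        errors.append(f"label must be one of {sorted(ALLOWED_LABELS)}")

    try:
        confidence = float(form.get("confidence"))
    except (TypeError, ValueError):
        errors.append("confidence must be a number")
    else:
        if not 0.0 <= confidence <= 1.0:
            errors.append("confidence must be between 0 and 1")

    return errors
```

Returning every error at once, rather than stopping at the first, lets the interface highlight all problem fields in a single pass.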

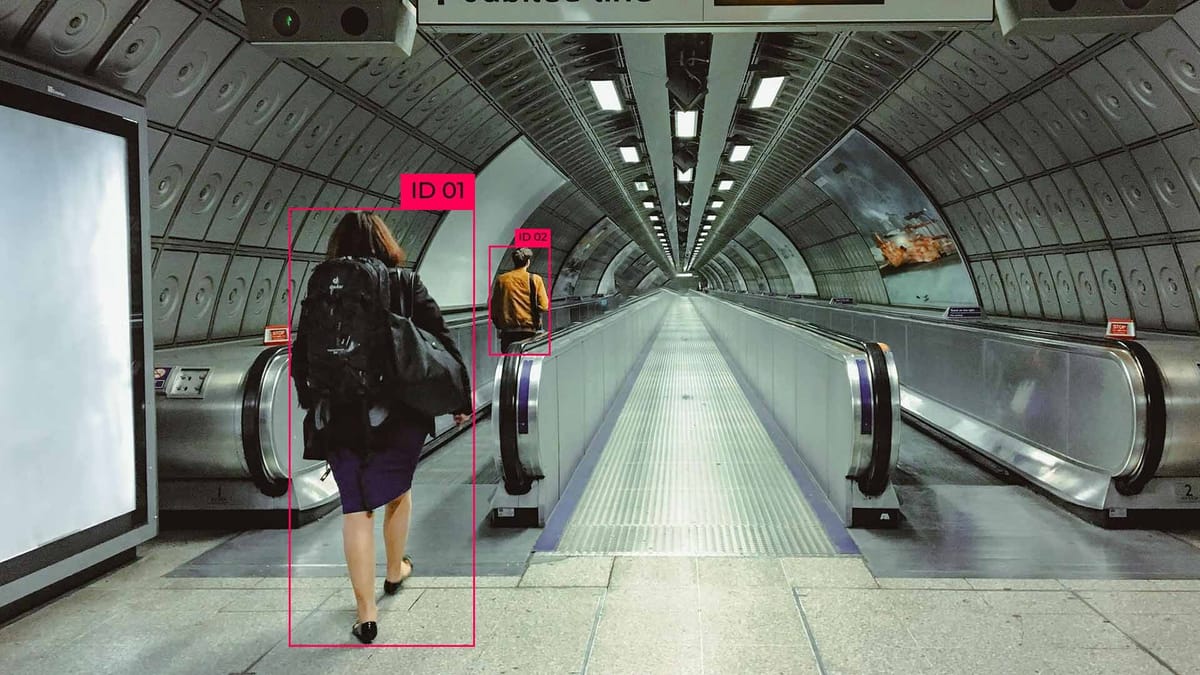

Task Fingerprinting and Behavioral Analysis

A user interaction log is the basis of a task fingerprint. It contains worker actions such as mouse movements and keystrokes and the time it takes to complete each task component.

Using a task fingerprint log, you can track:

- How annotators navigate tasks.

- Which elements of a task create delays or confusion.

- Correlation between interaction patterns and the quality of the output.

This information helps you assess worker productivity, how effectively workers complete tasks, and whether they follow the established instructions.
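A task fingerprint of this kind can be derived from a raw event log. The sketch below assumes each logged event is a dictionary with a `type`, the `component` it occurred on, and a `timestamp` in seconds; this event format is an assumption for illustration, not a standard.

```python
from collections import Counter, defaultdict


def build_fingerprint(events):
    """Summarize an interaction log into a simple task fingerprint."""
    events = sorted(events, key=lambda e: e["timestamp"])
    event_counts = Counter(e["type"] for e in events)

    # Attribute the time between two consecutive events to the component
    # the worker was on when the earlier event happened.
    seconds_per_component = defaultdict(float)
    for current, following in zip(events, events[1:]):
        elapsed = following["timestamp"] - current["timestamp"]
        seconds_per_component[current["component"]] += elapsed

    total = events[-1]["timestamp"] - events[0]["timestamp"] if events else 0.0
    return {
        "event_counts": dict(event_counts),
        "seconds_per_component": dict(seconds_per_component),
        "total_seconds": total,
    }


log = [
    {"type": "mousemove", "component": "instructions", "timestamp": 0.0},
    {"type": "click", "component": "image", "timestamp": 4.0},
    {"type": "keystroke", "component": "label_field", "timestamp": 6.0},
    {"type": "click", "component": "submit", "timestamp": 9.0},
]
fingerprint = build_fingerprint(log)
```

Components that accumulate unusually long times across many workers are candidates for clearer instructions or a simpler layout.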

Behavioral Trace Analysis

Behavioral trace analysis allows you to:

- Identify fraud or low-effort submissions.

- Understand the cognitive load of different tasks.

- Adapt tasks to each worker's preferences.
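One simple way to surface possible low-effort submissions is to compare each submission with the typical completion time and interaction count. The sketch below is a heuristic under stated assumptions: the input layout, `min_time_ratio`, and `min_events` are hypothetical, and flagged items are candidates for manual review rather than proof of fraud.

```python
from statistics import median


def flag_low_effort(fingerprints, min_time_ratio=0.3, min_events=3):
    """Return IDs of submissions that look unusually fast or nearly interaction-free.

    fingerprints maps a submission ID to a dict with "total_seconds" and
    "event_count". Both thresholds are illustrative defaults.
    """
    if not fingerprints:
        return []

    typical_seconds = median(fp["total_seconds"] for fp in fingerprints.values())
    flagged = []
    for submission_id, fp in fingerprints.items():
        too_fast = fp["total_seconds"] < min_time_ratio * typical_seconds
        too_few_events = fp["event_count"] < min_events
        if too_fast or too_few_events:
            flagged.append(submission_id)
    return flagged
```

Using the median as the baseline keeps a handful of extreme submissions from distorting what counts as a typical completion time.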

Optimizing Worker Efficiency Through Task Structure

Task structuring improves worker performance on crowdsourcing platforms. It lets workers focus on smaller tasks, which reduces cognitive load. Microtask structuring includes the following elements:

- Grouping similar tasks to reduce context switching.

- Gradually increasing task complexity to maintain worker engagement.

- Combining different microtasks to avoid repetitiveness that can lead to decreased productivity.

With these strategies, it is possible to improve the task structure and experience of the crowdworker. This results in higher retention and engagement rates.
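The three structuring elements above can be combined in a single queue-building step. In the sketch below, tasks are served in short same-type batches, each type is ordered from easy to hard, and types alternate between batches; the task fields and the `batch_size` default are assumptions made for the example.

```python
from collections import defaultdict


def build_queue(tasks, batch_size=3):
    """Order tasks into short same-type batches of increasing difficulty.

    Each task is a dict with "id", "type", and "difficulty" (hypothetical fields).
    """
    buckets = defaultdict(list)
    for task in tasks:
        buckets[task["type"]].append(task)
    for bucket in buckets.values():
        bucket.sort(key=lambda t: t["difficulty"])  # easy to hard within a type

    queue = []
    while any(buckets.values()):
        # Rotate through the task types, taking one batch from each in turn.
        for task_type in sorted(buckets):
            batch = buckets[task_type][:batch_size]
            buckets[task_type] = buckets[task_type][batch_size:]
            queue.extend(batch)
    return queue
```

A small `batch_size` favors variety, while a larger one favors fewer context switches, so the value is worth tuning per project.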

Cognitive Assessment in Microtask Assignment

Evaluating a worker's abilities involves analyzing past performance and personal work habits. This assessment identifies the worker's strengths and weaknesses, which helps allocate tasks appropriately.

Skill-based task matching aligns workers' abilities with appropriate microtasks. This approach improves the quality of annotation projects.

Performance prediction models use machine learning to predict how well a worker will perform different types of tasks. These models analyze performance data, cognitive abilities, and task difficulty levels. The results of mental ability tests are used to assign workers to tasks of varying difficulty.

Using cognitive assessment strategies when assigning microtasks helps optimize workflows and supports worker satisfaction.
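Skill-based task matching can be sketched as a simple rule: among the workers whose assessed level covers the task's difficulty, pick the one with the best past accuracy on that task type. The worker profile fields below (`max_difficulty`, `accuracy_by_type`) are hypothetical, and a production system would likely also consider availability and workload.

```python
def match_task(task, workers):
    """Return the ID of the best-suited worker for a task, or None if nobody qualifies.

    task:    dict with "type" and "difficulty"
    workers: list of dicts with "id", "max_difficulty", and "accuracy_by_type"
    """
    eligible = [w for w in workers if w["max_difficulty"] >= task["difficulty"]]
    if not eligible:
        return None

    # Workers with no history on this task type are treated as accuracy 0.0.
    best = max(eligible, key=lambda w: w["accuracy_by_type"].get(task["type"], 0.0))
    return best["id"]


workers = [
    {"id": "w1", "max_difficulty": 2, "accuracy_by_type": {"bbox": 0.95}},
    {"id": "w2", "max_difficulty": 4, "accuracy_by_type": {"bbox": 0.85, "text": 0.9}},
    {"id": "w3", "max_difficulty": 5, "accuracy_by_type": {"bbox": 0.7}},
]
assigned = match_task({"type": "bbox", "difficulty": 3}, workers)
```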

Personalization vs Customization in Task Design

Personalization adapts the system automatically based on worker profiles. Customization allows workers to shape their own work environment. Both methods increase efficiency and satisfaction with microtasks.

Machine Learning Applications in Task Assignment

Machine learning is transforming task assignments on crowdsourcing platforms. A study of 134 crowd workers showed the impact of AI on the development and assignment of microtasks and the potential of predictive analytics in this area.

Predictive analytics considers workers' interests, fatigue levels, and learning progress. This method has improved results in text transcription tasks.

Improved algorithms now match workers to tasks based on cognitive abilities and experience. These algorithms adapt the design of microtasks and increase overall productivity and data quality.

Quality prediction models identify potential errors before they occur. Fingerprinting methods analyze the behavior of crowdworkers. The development of machine learning will give rise to more sophisticated programs for designing and assigning microtasks.

FAQ

What are microtasks in data annotation?

Microtasks in data annotation are small, self-contained units of work created by breaking a larger labeling job into parts that can be completed quickly.

How does data quality affect AI training?

Data quality affects the accuracy and generalizability of an AI model. Incorrect data leads to biases and false predictions.

What is cognitive personalization in task design?

Cognitive personalization in task design involves adapting tasks to the individual characteristics of the worker, such as level of experience and speed of completion.

What are the principles of effective microtask design?

Effective microtask design relies on clear, detailed instructions, a clear interface with minimal distractions, and tasks that are easy for workers to complete.

What is a task fingerprint in microtask design?

In microtask design, a task fingerprint is a set of data about the annotator's interaction with the task, including mouse movements, keystrokes, and the time spent on each task element.

What role does cognitive assessment play in microtask assignment?

Cognitive assessment evaluates an annotator's abilities and past performance so that microtasks of an appropriate complexity level can be assigned.

How is machine learning used in microtask assignments?

Machine learning automates microtask assignment by analyzing workers' skills and performance and matching them to suitable tasks. Algorithms also predict task complexity and adapt tasks to the annotator's level.
