Creating Heuristic Labeling Functions to Automate Annotation at Scale

Did you know that programmatic labeling can speed up annotation by up to 100 times? Labeling a large dataset by hand can take years, but heuristic labeling changes that: it lets us process large amounts of data quickly and with reasonable accuracy. Using Snorkel-like labeling functions, companies are transforming their data annotation workflows and making machine learning initiatives more scalable and efficient.

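Because labels are produced by code rather than by individual annotators, relabeling after requirements change means editing a function and rerunning it. This makes automation more scalable and can cut AI development cycles significantly.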

Exploring heuristic labeling functions and their role in machine learning data labeling reveals a shift away from slow, manual methods. Programmatic labeling can save companies a great deal of time and money, and it captures organizational knowledge while making efficient use of existing resources.

Key Takeaways

- Programmatic labeling can make annotation 10 to 100 times faster than traditional manual methods.

- Snorkel-like functions enable scalable automation, saving substantial time and resources.

- Models trained on Snorkel-labeled data can achieve high accuracy with significantly less manual effort.

- Heuristic labeling functions capture domain expertise, improving label quality.

- Integrating these functions into machine learning pipelines supports efficient, scalable data processing.

Understanding Snorkel-Like Labeling Functions

Snorkel-style labeling functions have become important tools for automating data annotation in the fast-moving field of machine learning. By encoding labeling rules and heuristics as code, they reduce the need for manual labeling and therefore speed up the training of machine learning systems.

Definition and Purpose

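Snorkel-like labeling functions automate the labeling of large datasets with predefined rules and heuristics. Each labeling function examines a single data point and either assigns a label or abstains when its rule does not apply. Because every function is a noisy, partial source of labels, this approach is called weak supervision. Applying many such functions produces a large training set without the high cost of manual labeling.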
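To make the definition concrete, here is a minimal Python sketch of two labeling functions and the label matrix they produce. The function names, the spam/ham label values, and the use of -1 for "abstain" (which mirrors the convention in Snorkel's tutorials) are assumptions for illustration; this is plain Python, not Snorkel's API.

```python
from typing import Callable, List

# Label values (assumed for this sketch). -1 for "abstain" mirrors Snorkel's tutorials.
ABSTAIN = -1
HAM = 0
SPAM = 1

LabelingFunction = Callable[[str], int]


def lf_contains_link(text: str) -> int:
    """Vote SPAM when the message contains a URL; otherwise abstain."""
    return SPAM if "http://" in text or "https://" in text else ABSTAIN


def lf_short_reply(text: str) -> int:
    """Vote HAM for very short messages, which are usually ordinary replies."""
    return HAM if len(text.split()) <= 3 else ABSTAIN


def apply_lfs(texts: List[str], lfs: List[LabelingFunction]) -> List[List[int]]:
    """Build a label matrix: one row per data point, one column per function."""
    return [[lf(text) for lf in lfs] for text in texts]


texts = [
    "Win a prize at https://example.com",
    "thanks, see you",
    "Meeting moved to Thursday afternoon",
]
L = apply_lfs(texts, [lf_contains_link, lf_short_reply])
print(L)  # [[1, -1], [-1, 0], [-1, -1]]
```

The third message receives no label at all. Weak supervision accepts such gaps and relies on many functions together to cover the dataset.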

Importance in Machine Learning

Snorkel-like labeling functions matter in machine learning because of their efficiency and scalability. Traditional labeling methods are time-consuming and costly. These functions use weak supervision to generate labels from patterns, heuristics, and crowdsourced inputs. This is cheaper and speeds up training dataset creation, allowing for faster model development.

Comparison with Traditional Methods

Traditional data annotation requires a lot of manual work, but modern techniques make the process much faster and more efficient. With advances such as automated feature extraction, annotations can be created with less effort, which can improve model accuracy and the overall training process without constant human input.

Probabilistic annotation is especially valuable for large datasets. Instead of a single hard label, each example receives a probability for each class that reflects the combined evidence from the labeling sources. This cuts the time spent labeling data, helps models train more effectively, lets us manage resources better, and helps systems adapt quickly to new situations while keeping prediction accuracy high.

The Role of Heuristic Functions in Annotation

Heuristic functions are essential in automated data annotation. These practical rules efficiently convert raw data into labeled datasets, significantly reducing time and costs. Below we look at how heuristic functions fulfill this role and at their main benefits.

What Are Heuristic Functions?

Heuristic functions are algorithms that rely on practical rules of thumb rather than on theory alone. They encode domain-specific guidelines so that data can be labeled automatically. For example, a heuristic function might classify an email as "spam" if it contains particular words. This makes annotation efficient, flexible, and powerful.
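The spam example can be written as a keyword heuristic. This is a minimal sketch; the keyword list is hypothetical, and a real list would come from domain experts and error analysis.

```python
import re

ABSTAIN, NOT_SPAM, SPAM = -1, 0, 1

# Hypothetical keyword list; a real one would come from domain experts.
SPAM_KEYWORDS = {"winner", "free", "lottery", "unsubscribe"}


def lf_spam_keywords(email: str) -> int:
    """Vote SPAM if any keyword appears as a whole word, otherwise abstain.

    The function abstains rather than voting NOT_SPAM: the absence of a
    keyword is not evidence that an email is legitimate.
    """
    words = set(re.findall(r"[a-z']+", email.lower()))
    return SPAM if words & SPAM_KEYWORDS else ABSTAIN


print(lf_spam_keywords("You are our lucky WINNER!"))     # 1
print(lf_spam_keywords("Agenda for tomorrow's review"))  # -1
```

Matching whole words rather than substrings keeps a keyword like "free" from firing on "freedom".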

Advantages of Using Heuristic Functions

- Reduced Time and Cost: Heuristic functions significantly reduce the time and expense of manual labeling, allowing for quick handling of complex and large data sets.

- Scalability: These functions enable scalable annotation processes. They help manage continuously changing data inputs and new business needs.

- Efficiency: Heuristic functions improve the efficiency of AI applications by automating labeling. They also incorporate subject matter expertise more effectively into training data.

Examples of Common Heuristic Approaches

Several heuristic approaches are widely used in the industry for effective programmatic labeling. Here are some notable examples:

- Keyword-Based Labeling: Specific keywords or phrases can trigger labels in text classification. For instance, keyword patterns can be used to detect spam comments on platforms like YouTube.

- Rule-Based Labeling: Predefined rules can be used for multi-class and multi-label text classification. These rules can come from external sources or be created within tools like Argilla.

- Active Learning Models: Rather than assigning labels themselves, these models select the most valuable and informative data points for annotation, which complements rule-based labeling.
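Rule-based labeling can be sketched by generating one labeling function per rule from a rule table, so each rule can be monitored and disabled independently. The two topic classes and the patterns below are hypothetical; in practice the table could be loaded from an external source.

```python
import re
from typing import Callable, List, Tuple

ABSTAIN = -1
SPORTS, TECH = 0, 1  # hypothetical class ids for a multi-class topic task

LabelingFunction = Callable[[str], int]


def make_regex_lf(name: str, pattern: str, label: int) -> LabelingFunction:
    """Build one labeling function per rule so each rule can be evaluated on its own."""
    compiled = re.compile(pattern, flags=re.IGNORECASE)

    def lf(text: str) -> int:
        return label if compiled.search(text) else ABSTAIN

    lf.__name__ = name  # readable name for reports and debugging
    return lf


# Hypothetical rule table: (name, regex, label). It could also be loaded from a file.
RULES: List[Tuple[str, str, int]] = [
    ("sports_terms", r"\b(goal|match|tournament)\b", SPORTS),
    ("tech_terms", r"\b(software|gpu|compiler)\b", TECH),
]
lfs = [make_regex_lf(name, pattern, label) for name, pattern, label in RULES]

text = "The new GPU compiler ships next week"
print([lf(text) for lf in lfs])  # [-1, 1]
```

Keeping each rule as its own function, instead of one function with many branches, means per-rule accuracy and coverage can be tracked separately.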

Technology companies such as Google and Intel have used heuristic functions for programmatic labeling in their machine learning work, and studies by the Snorkel AI team point to a growing role for automated data annotation built on these techniques.

Implementing Snorkel-Like Labeling Functions

Implementing labeling systems like those in Snorkel requires a deep understanding of the data. By identifying key heuristics and turning them into labeling functions, we can significantly improve a model's performance.

Best Practices for Implementation

- Incremental Refinement: Regularly update labeling functions with new data to keep efficiency and accuracy high.

- Modular Design: Make labeling functions modular and easy to update. This is essential as the dataset grows.

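- Performance Monitoring: Monitor labeling function performance with metrics like the F1 score. In published evaluations, models trained on Snorkel-generated labels came within an average of 3.60% of the predictive performance of models trained on large hand-curated training sets.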

- Documentation: Document each heuristic thoroughly. This helps track the reasoning behind each function and makes updates easier.
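Monitoring can start with per-function metrics on a small hand-labeled development set. The sketch below, with a hypothetical dev set, reports coverage alongside precision, recall, and F1 for one class, treating abstentions as misses for recall. This is one reasonable convention, not a standard definition from any particular library.

```python
from typing import List, Tuple

ABSTAIN = -1


def lf_metrics(votes: List[int], gold: List[int], positive: int = 1) -> Tuple[float, float, float, float]:
    """Return (coverage, precision, recall, f1) of one labeling function for one class.

    Abstentions do not count against precision but do count against recall:
    a function that abstains on a true positive has still missed it.
    """
    if not votes or len(votes) != len(gold):
        raise ValueError("votes and gold must be non-empty and the same length")
    coverage = sum(v != ABSTAIN for v in votes) / len(votes)
    tp = sum(v == positive and g == positive for v, g in zip(votes, gold))
    fp = sum(v == positive and g != positive for v, g in zip(votes, gold))
    fn = sum(v != positive and g == positive for v, g in zip(votes, gold))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return coverage, precision, recall, f1


votes = [1, 1, -1, 0, 1, -1]  # one function's outputs on a small hand-labeled dev set
gold = [1, 0, 1, 1, 1, 0]
print([round(x, 3) for x in lf_metrics(votes, gold)])  # [0.667, 0.667, 0.5, 0.571]
```

Tracking coverage next to F1 matters because a function can look precise simply by abstaining on almost everything.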

Following these best practices can turn large unlabeled datasets into valuable training data. Snorkel DryBell-type systems have demonstrated an 11.5% average increase in F1 score over classifiers trained on smaller training sets.

Evaluating the Quality of Labeling Functions

Evaluating labeling functions involves several key criteria and processes. They are needed to ensure the integrity and reliability of the resulting machine learning models. Careful evaluation shows us where to improve the functions, which raises labeling precision and data accuracy.

Importance of Data Quality

Labeling functions lose their value if we don't have quality data to work with. The accuracy of machine learning models hinges on the cleanliness and reliability of the data we source. That's why effective data cleaning and preprocessing techniques are crucial.

One approach that can boost data quality is Snorkel, which uses tools such as MajorityLabelVoter and label models to combine labeling function outputs into training labels, including probabilistic ones. For example, applying thorough preprocessing to the FakeNewsNet dataset resulted in high labeling accuracy, paving the way for more reliable model training.
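The idea behind a majority-vote aggregator can be shown in a few lines. This is a plain-Python sketch of the concept, not Snorkel's MajorityLabelVoter implementation; it assumes -1 means "abstain" and chooses to abstain on ties.

```python
from collections import Counter
from typing import List, Sequence

ABSTAIN = -1


def majority_vote(row: Sequence[int]) -> int:
    """Return the most common non-abstain label; abstain on empty rows and ties."""
    votes = Counter(v for v in row if v != ABSTAIN)
    if not votes:
        return ABSTAIN
    ranked = votes.most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return ABSTAIN  # tie: no label is better supported than another
    return ranked[0][0]


label_matrix: List[List[int]] = [
    [1, 1, -1],    # two functions agree on 1
    [0, 1, -1],    # tie between 0 and 1
    [-1, -1, -1],  # every function abstained
    [0, 0, 1],     # majority says 0
]
print([majority_vote(row) for row in label_matrix])  # [1, -1, -1, 0]
```

Majority vote treats every function as equally reliable; a label model goes further by weighting functions according to their estimated accuracy.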

Common Pitfalls

One of the main problems is significant class imbalance, which can reduce model accuracy and distort predictions. If a few categories or classes dominate the dataset, the model learns to recognize them reliably but performs poorly on rare or underrepresented classes. This reduces the overall effectiveness and applicability of the model, especially in critical areas such as medicine or finance.
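A quick check on the labels produced by the labeling functions can flag imbalance before training. This sketch assumes -1 means "abstain" and uses a hypothetical 10% threshold for calling a class rare; passing the expected class list means classes the functions never produce are reported too.

```python
from collections import Counter
from typing import Dict, List

ABSTAIN = -1


def class_balance(labels: List[int], classes: List[int]) -> Dict[int, float]:
    """Share of each expected class among the labels that are not abstentions."""
    counts = Counter(lab for lab in labels if lab != ABSTAIN)
    total = sum(counts.values())
    return {c: (counts[c] / total if total else 0.0) for c in classes}


def rare_classes(labels: List[int], classes: List[int], min_share: float = 0.1) -> List[int]:
    """Classes whose share falls below min_share (a hypothetical threshold)."""
    return [c for c, share in class_balance(labels, classes).items() if share < min_share]


CLASSES = [0, 1, 2]
labels = [0] * 18 + [1] + [ABSTAIN] * 5  # class 2 never receives a label
print(rare_classes(labels, CLASSES))  # [1, 2]
```

A class that no labeling function ever produces is a signal to write new functions for it, not just to rebalance the data.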

Another common problem is managing conflicting or overlapping labeling functions. When the same data item receives multiple conflicting labels, the algorithm faces uncertainty, which can lead to poorer learning. Inconsistencies in labeling make it difficult to train models and reduce their ability to interpret new data correctly.
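Conflicts and overlaps can be measured directly from the label matrix. The sketch below computes per-function coverage, overlap, and conflict rates, similar in spirit to the summary statistics that Snorkel's LFAnalysis reports; the implementation and the toy matrix are illustrative assumptions, with -1 meaning "abstain".

```python
from typing import Dict, List

ABSTAIN = -1


def lf_summary(L: List[List[int]]) -> List[Dict[str, float]]:
    """Per-function rates over a label matrix (rows = data points, columns = functions).

    coverage: share of rows where the function voted
    overlap:  share of rows where it voted and at least one other function voted
    conflict: share of rows where it voted and another function voted differently
    """
    if not L:
        return []
    n_rows, n_lfs = len(L), len(L[0])
    summary = []
    for j in range(n_lfs):
        covered = overlapped = conflicted = 0
        for row in L:
            if row[j] == ABSTAIN:
                continue
            others = [v for k, v in enumerate(row) if k != j and v != ABSTAIN]
            covered += 1
            overlapped += bool(others)
            conflicted += any(v != row[j] for v in others)
        summary.append({
            "coverage": covered / n_rows,
            "overlap": overlapped / n_rows,
            "conflict": conflicted / n_rows,
        })
    return summary


L = [
    [1, 1, -1],
    [1, 0, -1],
    [-1, 0, 0],
    [1, -1, -1],
]
for j, stats in enumerate(lf_summary(L)):
    print(j, stats)
# 0 {'coverage': 0.75, 'overlap': 0.5, 'conflict': 0.25}
# 1 {'coverage': 0.75, 'overlap': 0.75, 'conflict': 0.25}
# 2 {'coverage': 0.25, 'overlap': 0.25, 'conflict': 0.0}
```

A function with a high conflict rate is a candidate for review; in Snorkel-style pipelines, these disagreements are also what a label model uses to estimate which functions are more reliable.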

Another challenge is helping models adapt to new, previously unseen data. Standard machine learning methods rely on repeated parameter adjustments to improve performance, but this may not be enough for complex tasks. Traditional approaches such as hyperparameter tuning usually yield only marginal improvements and do not solve fundamental generalization problems. Complex and dynamic applications need deeper adaptation methods, including active learning and automated data processing strategies.

How to Overcome These Challenges

A multifaceted approach is needed to overcome data annotation issues. Utilizing a robust mix of labeling functions can mitigate imbalanced data risks. Employing advanced machine learning techniques to identify and manage discrepancies is essential for maintaining data quality.

Maintaining flexibility in updating and refining labeling functions as datasets evolve is vital. Enterprises can benefit from programmatic labeling and guided error analysis, techniques used by platforms like Snorkel and Datasaur. For instance, Snorkel has helped companies improve accuracy by employing weak supervision techniques tailored to specific use cases.

- Use a robust mix of labeling functions

- Employ advanced machine learning techniques to manage discrepancies

- Maintain flexible and iterative schema definitions

- Adopt programmatic labeling and guided error analysis

Popular Companies in the Market

- Keymakr provides advanced video and image annotation services, data collection, and classification for training convolutional neural networks and deep learning AI.

- CloudFactory specializes in data preparation and provides tailored services for AI and machine learning projects.

- Labelbox simplifies the management of training data labeling through integrated workspaces.

Comparison of Framework Features

Frameworks differ in how much of the labeling process they automate. Snorkel, for example, significantly speeds up labeling by combining labels from multiple noisy sources and improving their quality. This approach is beneficial in large projects that require scalability and accuracy. With tools like these, companies can optimize their labeling processes and keep up with the growing demand for machine learning and artificial intelligence.

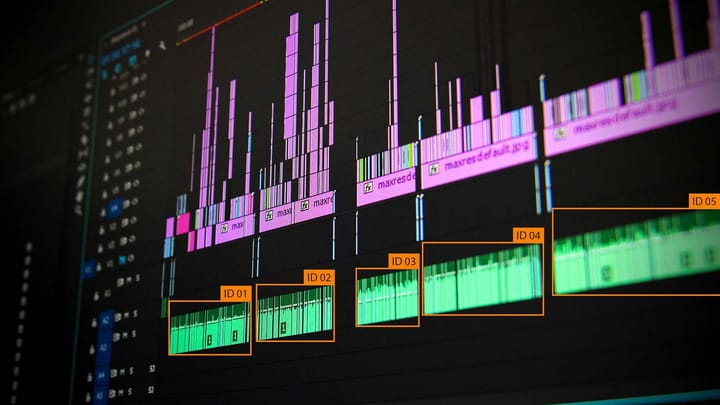

Integrating Labeling Functions into ML Pipelines

Adding labeling functions to a machine learning pipeline requires careful planning so that workflows are automated end to end and models can scale. Integrating these functions properly into an existing ML process simplifies data annotation and improves algorithm stability and accuracy. As a result, models can adapt to new data more quickly, which is especially important for dynamic AI applications.

Workflow Integration Techniques

Effective workflow integration techniques are vital for successful ML pipeline integration. Key strategies include:

- Automating Data Input: Directly funnel bulk labeled data into machine learning models. This reduces manual intervention and speeds up the process.

- Continuous Evaluation: Regularly assess the performance of labeling functions to ensure they contribute positively to model accuracy.

- Dynamic Adjustment: Tune labeling functions based on feedback loops and performance metrics to adapt to evolving data sets.
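Continuous evaluation and dynamic adjustment can be combined into a simple feedback step: score each labeling function on a labeled development set and keep only those that meet an accuracy bar before the next labeling run. The threshold, the functions, and the dev set below are hypothetical, and accuracy on non-abstained rows is only a proxy for a function's contribution to final model accuracy.

```python
from typing import Callable, Dict, List

ABSTAIN = -1
LabelingFunction = Callable[[str], int]


def lf_accuracy(lf: LabelingFunction, dev_texts: List[str], dev_labels: List[int]) -> float:
    """Accuracy on the dev rows where the function did not abstain (0.0 if it never votes)."""
    pairs = [(lf(t), y) for t, y in zip(dev_texts, dev_labels)]
    voted = [(v, y) for v, y in pairs if v != ABSTAIN]
    return sum(v == y for v, y in voted) / len(voted) if voted else 0.0


def select_lfs(lfs: Dict[str, LabelingFunction], dev_texts: List[str], dev_labels: List[int],
               min_accuracy: float = 0.7) -> Dict[str, LabelingFunction]:
    """One feedback-loop step: keep only functions that meet the accuracy bar on the dev set."""
    return {name: lf for name, lf in lfs.items()
            if lf_accuracy(lf, dev_texts, dev_labels) >= min_accuracy}


# Hypothetical functions and dev set for illustration.
lfs: Dict[str, LabelingFunction] = {
    "has_link": lambda t: 1 if "http" in t else ABSTAIN,
    "has_exclamation": lambda t: 1 if "!" in t else ABSTAIN,
}
dev_texts = ["see http://x.io", "great talk!", "buy now http://y.io!", "ok!"]
dev_labels = [1, 0, 1, 0]
print(sorted(select_lfs(lfs, dev_texts, dev_labels)))  # ['has_link']
```

Because accuracy is measured only where a function votes, a function that rarely fires can look perfect; pairing this check with coverage avoids that trap.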

Ensuring Scalability

- Efficient Resource Management: Allocate computational resources dynamically to meet the demands of growing data sets.

- Parallel Processing: Utilize parallel processing techniques to handle large-scale data labeling tasks more effectively.

- Incremental Learning: Integrate systems that can learn incrementally from new data without necessitating a complete retrain.
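Parallel processing can be as simple as splitting the data into chunks and labeling them concurrently. This sketch uses Python's concurrent.futures; the chunk size and worker count are arbitrary assumptions. Threads help mainly when labeling functions wait on I/O (for example, lookups against an external service). For CPU-bound pure-Python functions, ProcessPoolExecutor can be passed instead, provided the functions are defined at module level so they can be pickled and the call runs under an if __name__ == "__main__": guard.

```python
from concurrent.futures import Executor, ThreadPoolExecutor
from typing import Callable, List, Type

ABSTAIN = -1
LabelingFunction = Callable[[str], int]


def label_chunk(chunk: List[str], lfs: List[LabelingFunction]) -> List[List[int]]:
    """Label one shard of the data: one row of votes per text."""
    return [[lf(text) for lf in lfs] for text in chunk]


def apply_lfs_parallel(
    texts: List[str],
    lfs: List[LabelingFunction],
    chunk_size: int = 1000,
    max_workers: int = 4,
    executor_cls: Type[Executor] = ThreadPoolExecutor,
) -> List[List[int]]:
    """Split the data into chunks, label them concurrently, and keep the original row order."""
    chunks = [texts[i:i + chunk_size] for i in range(0, len(texts), chunk_size)]
    with executor_cls(max_workers=max_workers) as pool:
        # map() returns results in input order, so rows stay aligned with texts.
        results = list(pool.map(label_chunk, chunks, [lfs] * len(chunks)))
    return [row for chunk_rows in results for row in chunk_rows]


def lf_has_link(text: str) -> int:
    return 1 if "http" in text else ABSTAIN


texts = [f"message {i}" + (" http://x.io" if i % 3 == 0 else "") for i in range(10)]
L = apply_lfs_parallel(texts, [lf_has_link], chunk_size=4)
print(L[:4])  # [[1], [-1], [-1], [1]]
```

Chunking keeps per-task overhead low: each worker handles a batch of rows instead of one row at a time.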

Snorkel shows this approach working in practice. It enables the rapid creation of large training datasets and exposes key programmatic operations for labeling, transforming, and slicing data. This supports scalable machine learning by reducing labeling time from weeks to hours or days.

The Role of AI and Machine Learning

The field of data annotation is changing rapidly with advances in artificial intelligence and machine learning. Algorithms are increasingly capable of interpreting data and its context, which reduces the need for manual work.

As machine learning technologies improve, data annotation will become faster and more accurate. New algorithms will not only improve the quality of annotations but also significantly reduce processing time. In domains where information changes rapidly, dynamic annotation methods are important for keeping labels current and accurate.

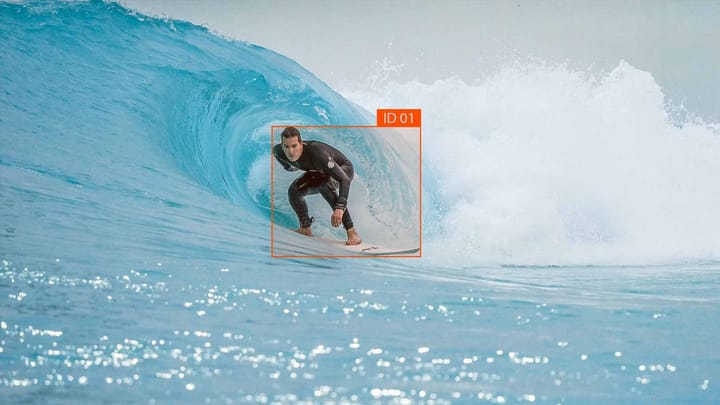

With the growing demand for high-quality data processing, labeling techniques have become a specialized niche. Collaborative techniques, such as human review and multi-criteria data validation, have proven very effective in complex tasks such as object identification in specialized systems.

Further automation of the annotation process fundamentally changes the way data is processed. Tools such as Snorkel's labeling capabilities streamline workflows, save time and resources, and increase scalability. This is especially important for industries such as finance and insurance, where accuracy and compliance are paramount.

FAQ

What are heuristic labeling functions?

Heuristic labeling functions are algorithms designed to annotate data automatically. They use practical discovery or problem-solving techniques, including domain-specific rules and patterns, not just theoretical reasoning.

What is the purpose of Snorkel-like labeling functions in machine learning?

Snorkel-like labeling functions aim to automate data annotation. They use programmable rules and heuristics. This reduces the need for hand-labeled data, saving time and resources in model training.

How do heuristic labeling functions compare to traditional methods?

Heuristic labeling methods are more efficient and scale better than traditional manual methods. They combine multiple noisy signals into a probabilistic model that estimates the true labels, which reduces annotation time and makes large datasets easier to handle.
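The step from noisy votes to a probability can be illustrated for a binary task. This sketch assumes each function's accuracy is already known (for example, estimated on a dev set), that functions are conditionally independent given the true label, and that both classes are equally likely. Snorkel's label model instead learns these accuracies from agreements and disagreements without ground truth, so this is a simplified stand-in rather than its algorithm.

```python
import math
from typing import Sequence

ABSTAIN = -1


def prob_positive(votes: Sequence[int], accuracies: Sequence[float]) -> float:
    """Estimate P(y = 1) for a binary task (labels 0 and 1) from labeling-function votes.

    Assumes conditionally independent functions with known accuracies and a
    uniform class prior. Each non-abstaining vote then shifts the log-odds by
    +/- log(a / (1 - a)), where a is that function's accuracy.
    """
    log_odds = 0.0
    for vote, acc in zip(votes, accuracies):
        if not 0.0 < acc < 1.0:
            raise ValueError("accuracies must be strictly between 0 and 1")
        if vote == ABSTAIN:
            continue
        weight = math.log(acc / (1.0 - acc))
        log_odds += weight if vote == 1 else -weight
    # Numerically stable logistic function.
    if log_odds >= 0:
        return 1.0 / (1.0 + math.exp(-log_odds))
    z = math.exp(log_odds)
    return z / (1.0 + z)


accuracies = [0.9, 0.6, 0.6]  # hypothetical per-function accuracy estimates
print(round(prob_positive([1, 0, 0], accuracies), 3))  # 0.8
print(prob_positive([-1, -1, -1], accuracies))         # 0.5
```

Here one accurate function outweighs two weaker ones that disagree with it: the log-odds are log 9 - 2 log 1.5 = log 4, giving a probability of 0.8. When every function abstains, the estimate falls back to the 0.5 prior.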

What are some common advantages of using heuristic functions for data annotation?

Advantages include reduced annotation time and lower costs. They efficiently handle large datasets, improving the scalability and maintainability of data labeling processes.

What are the steps to create practical Snorkel-like labeling functions?

Steps include understanding the data and identifying key heuristics. Program these into labeling functions and iteratively test them for accuracy. Refine these functions based on performance against a validation set.

What metrics are used to evaluate the performance of labeling functions?

Standard metrics include precision, recall, and F1 score. These help assess the accuracy and effectiveness of labeling functions in annotating data.

What industries have successfully implemented Snorkel-like labeling functions?

Industries like healthcare, finance, and social media have successfully implemented these functions. For example, they've been used to annotate medical transcripts in healthcare efficiently.

What are some challenges in automated annotation, and how can they be overcome?

Challenges include dealing with highly imbalanced data and managing overlapping or contradictory labeling functions. Ensuring model adaptability is also a challenge. Strategies to overcome these involve using a robust mix of labeling functions and leveraging advanced machine-learning techniques. Maintaining a flexible updating approach is also key.

What tools and frameworks are available for building labeling functions?

Popular options include Snorkel AI, which provides tools for programmatically building and managing large sets of labeling functions. Other tools may offer different features focusing on ease of implementation, scalability, and integration capabilities.

How can labeling functions be integrated into machine learning pipelines effectively?

Effective integration involves automating the input of labeled data into training models. Continuously evaluate the impact of labeling functions on model performance. Implement strategies to handle increasing data volumes without degrading system performance.

What are the future trends in automated annotation with Snorkel-like labeling functions?

Future trends include significant advancements in AI and machine learning technologies. These advancements will lead to more adaptive, intelligent systems. They will be capable of learning from the data they process, reducing the need for human intervention. This will enable more accurate and context-aware annotations.
