Collaboration and Competition: Running Labeling Contests for Quality Boosts

Combining collaboration with competition has become an effective strategy for improving data labeling quality. Labeling competitions, run by research groups and companies, can engage a wide range of participants while setting clear performance benchmarks. They turn routine annotation into a purposeful challenge that rewards both efficiency and accuracy. When well designed, they can improve both the speed and the quality of labeling compared with traditional workflows.

Key Takeaways

- Strategic contests merge individual motivation with collective intelligence.

- Effective designs require clear metrics and shared accountability.

- Hybrid models tend to outperform purely competitive or purely collaborative approaches.

Understanding Collaborative Labeling Competitions

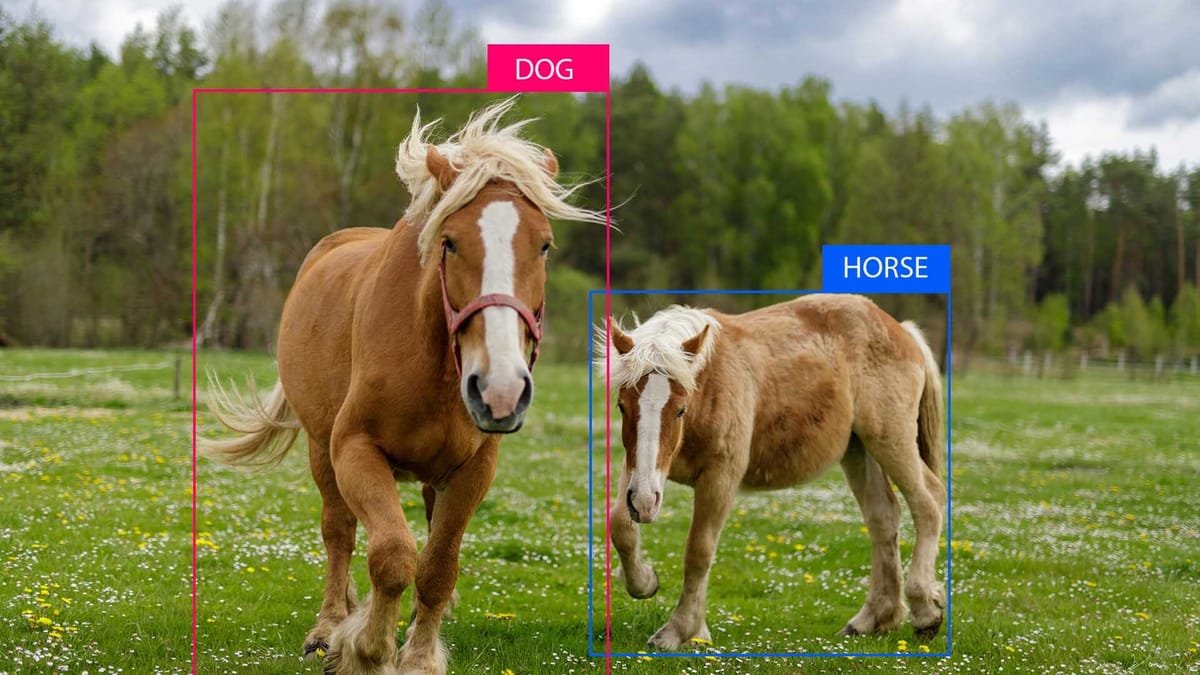

Collaborative labeling competitions are a hybrid approach to data annotation that combines collective effort with individual initiative. Unlike traditional annotation pipelines, they are structured so that participants produce high-quality annotations while their performance is measured against their peers. The competitive element adds motivation and accountability, while the common framework gives everyone access to shared goals, discussion, and resources. This mix helps balance efficiency with accuracy, which is why these competitions have become a compelling way to build large-scale, high-quality datasets across many domains.

What Are Collaborative Labeling Competitions

Collaborative labeling competitions are structured events in which multiple participants annotate data and compete on accuracy, speed, or consistency. They are designed to draw on the strengths of both teamwork and individual performance, making annotation a collaborative yet competitive task. Participants typically receive the same dataset and evaluation criteria, which keeps comparisons fair and makes results easy to track. While competition pushes each individual to do their best, the collaborative side relies on shared tools, discussions, and guidelines to coordinate everyone's effort.

Key Benefits for AI Training and Data Quality

- Improved labeling accuracy. Competitive pressure drives annotators to produce more accurate and consistent labels, reducing noise in the training data.

- Faster annotation performance. The competition format motivates participants to work quickly without sacrificing quality, accelerating dataset generation.

- Diverse perspectives on ambiguous cases. Multiple annotators offer different interpretations, which helps surface edge cases and refine the labeling guidelines.

- Built-in quality control. Continuous performance tracking and consensus scoring detect inconsistencies and flag low-quality contributions in real time.

- Talent discovery and engagement. The best annotators can be identified for further collaboration, helping to build long-term, high-quality labeling teams.
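
The consensus-scoring idea from the list above can be sketched in a few lines of Python. This is a minimal illustration, assuming every item was labeled by several annotators and that "consensus" means a simple majority vote; the function names, the data layout, and the 0.7 agreement threshold are hypothetical choices, not a standard.

```python
from collections import Counter, defaultdict

def majority_consensus(labels_by_item):
    """Map each item to its most common label.

    labels_by_item: {item_id: {annotator: label}}
    Ties go to the label encountered first (Counter.most_common behavior).
    """
    return {
        item_id: Counter(votes.values()).most_common(1)[0][0]
        for item_id, votes in labels_by_item.items()
    }

def agreement_with_consensus(labels_by_item, consensus):
    """Fraction of each annotator's labels that match the consensus label."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for item_id, votes in labels_by_item.items():
        for annotator, label in votes.items():
            totals[annotator] += 1
            if label == consensus[item_id]:
                hits[annotator] += 1
    return {annotator: hits[annotator] / totals[annotator] for annotator in totals}

def flag_low_agreement(rates, threshold=0.7):
    """Annotators whose agreement rate falls below a (hypothetical) threshold."""
    return sorted(a for a, rate in rates.items() if rate < threshold)

# Illustrative data: three annotators, three images.
labels = {
    "img1": {"ann_a": "cat", "ann_b": "cat", "ann_c": "dog"},
    "img2": {"ann_a": "dog", "ann_b": "dog", "ann_c": "dog"},
    "img3": {"ann_a": "cat", "ann_b": "cat", "ann_c": "bird"},
}
consensus = majority_consensus(labels)
rates = agreement_with_consensus(labels, consensus)
print(consensus)
print(flag_low_agreement(rates))  # ['ann_c']
```

Because each annotator's own vote counts toward the majority, this measure is slightly generous; a leave-one-out consensus is a stricter alternative.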

Balancing Competition and Collaboration for Innovation

Participants are motivated by clear goals and visible rankings, encouraging them to optimize their labeling strategies and improve accuracy. However, unlike isolated competitive environments, they benefit from open discussions, brainstorming, and collaborative problem-solving. This interaction creates a dynamic where high performers raise the bar, and newcomers learn and make meaningful contributions.

In this context, competition is a catalyst, but collaboration provides the foundation for sustained progress. Without a shared understanding and coordinated practices, even the most motivated annotators can stray from the task definitions or drift apart in quality. Conversely, purely collaborative labeling can become slow or complacent without the energy of competition. A well-designed contest balances the two by aligning personal incentives with collective standards.

Insights from Competitive Sales and Team Dynamics

Competitive sales and team dynamics offer useful parallels for understanding collaborative labeling. In high-performance sales environments, people compete for the best results while working within shared systems and team goals. This structure encourages each member to exceed personal benchmarks without undermining the group's overall effectiveness. Similarly, participants in labeling contests strive to outperform their peers but rely on shared tools, feedback loops, and collective learning.

This dynamic also highlights the importance of recognition, transparency, and role clarity. Just as sales teams benefit from clear metrics and public leaderboards, labeling contests thrive on visible progress and shared standards. Competition need not erode trust within a team; trust grows from fair rules and open communication. Participants tend to respect the process more when they understand how results are measured and how their work contributes to the larger goals.

Fostering a Culture of Collective Growth

Fostering a culture of collective growth in labeling competitions means looking beyond short-term rewards to support long-term development. Competition can spark immediate engagement, but sustainable progress depends on how participants learn from each other and from the process. Open forums, feedback tools, and regular moments for reflection help people understand what needs to improve and how to improve it together.

At the heart of this culture is a shift in mindset from outperforming others to growing with them. Competitions that reward collaboration through team assessments, mentoring, or peer recognition build deeper connections and a more resilient workforce. Annotators who feel supported and respected are more likely to contribute thoughtfully and consistently. Over time, such an environment can reduce burnout, improve annotator retention, and raise the baseline quality of all contributions.

Strategies for Running Effective Labeling Contests

The first step is to define clear annotation rules and scoring metrics so that all participants work toward the same standard. Without that clarity, even experienced annotators can drift apart in how they apply the labels, degrading overall quality. Next, design a scoring system that balances speed and accuracy, rewarding not just the volume of output but also careful, consistent annotation. This way, competition drives quality rather than corner-cutting.
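
One way to encode "accuracy first, speed second" is a quality gate plus a capped speed bonus. The sketch below assumes the contest seeds hidden gold-standard items with known answers; the function name, the weights, the 100-items-per-hour target, and the 0.9 accuracy floor are illustrative assumptions to tune per project.

```python
def contest_score(correct_gold, total_gold, items_labeled, hours,
                  target_rate=100.0, speed_weight=0.2, accuracy_floor=0.9):
    """Hypothetical contest score: accuracy dominates, speed adds a capped bonus.

    correct_gold / total_gold: answers on hidden gold-standard items.
    items_labeled / hours: throughput over the contest period.
    """
    if total_gold <= 0 or hours <= 0:
        return 0.0
    accuracy = correct_gold / total_gold
    if accuracy < accuracy_floor:
        return 0.0  # quality gate: speed earns nothing below the floor
    rate = items_labeled / hours
    speed_bonus = min(rate / target_rate, 1.0)  # cap so raw volume cannot dominate
    return (1 - speed_weight) * accuracy + speed_weight * speed_bonus

# Careful annotator vs. fast-but-sloppy annotator (illustrative numbers).
print(round(contest_score(48, 50, 300, 4), 3))   # 96% accurate, 75 items/hour
print(round(contest_score(40, 50, 1000, 4), 3))  # 80% accurate, 250 items/hour
```

The cap means that labeling faster than the target earns nothing extra, and the floor means speed cannot make up for low accuracy.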

Equally important is creating a space for collaboration and ongoing feedback. Tools like forums, leaderboards, and real-time performance dashboards help participants learn and adjust as the competition progresses. Organizers should also regularly review results and highlight examples of high-quality work to guide improvement.
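
A leaderboard that treats tied participants identically supports the fairness a contest depends on. Here is a minimal sketch, assuming a score has already been computed for each participant; it uses standard competition ranking ("1, 2, 2, 4"), which is one reasonable convention among several, and the `leaderboard` name is hypothetical.

```python
def leaderboard(scores):
    """Rank participants by score, highest first.

    Uses standard competition ranking: tied scores share a rank and the
    following rank is skipped. Names only decide display order within a tie.
    """
    ordered = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    table = []
    rank = 0
    prev_score = None
    for position, (name, score) in enumerate(ordered, start=1):
        if score != prev_score:
            rank = position  # a new score starts a new rank at this position
            prev_score = score
        table.append((rank, name, score))
    return table

for rank, name, score in leaderboard({"ana": 0.92, "ben": 0.95, "cho": 0.92, "dev": 0.88}):
    print(rank, name, score)
```

Ties are detected by exact equality, so in practice scores may need rounding to the displayed precision before ranking.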

Designing Engaging Contest Frameworks

Clear goals, intuitive platforms, and visible progress markers create a sense of purpose and momentum. Rather than simply assigning tasks, a good framework turns labeling into a challenge with meaning, structure, and achievable milestones. Gamification elements such as badges, levels, or tiered rewards can increase engagement without undermining the seriousness of the task. When participants feel that their contributions are valued and measured, they are more likely to stay focused and consistent.

At the same time, the framework should support learning, fairness, and adaptability. Rules should be transparent, feedback should be actionable, and updates should respond to participant input. Collaboration tools such as shared FAQs, annotation forums, and review examples let people learn from each other while striving for individual improvement. Varying the task types or adding bonus challenges can keep the experience fresh and inclusive.

Measuring Success and Compliance

Measuring success and compliance in labeling competitions involves tracking annotation quality and adherence to established rules. Success is not just about producing a large number of labels; it requires accurate, consistent labels that serve the task's objectives. Organizers typically assess performance with metrics such as inter-annotator agreement, error rate, and comparison against benchmark (gold-standard) labels.
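
Inter-annotator agreement is often reported as Cohen's kappa, which corrects the raw agreement between two annotators for the agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e). The following is a plain-Python sketch for two annotators labeling the same items, together with a simple error rate against gold-standard labels; for more than two annotators, measures such as Fleiss' kappa or Krippendorff's alpha are the usual choices.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: share of items where both annotators chose the same label.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of each annotator's label frequencies, summed over labels.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1.0:
        # Both annotators used one identical label throughout, so kappa is undefined.
        # Returning 1.0 here is a convention chosen for this sketch.
        return 1.0
    return (p_o - p_e) / (1 - p_e)

def error_rate(labels, gold):
    """Share of labels that disagree with the gold-standard answers."""
    if len(labels) != len(gold) or not gold:
        raise ValueError("need non-empty lists of equal length")
    return sum(label != answer for label, answer in zip(labels, gold)) / len(gold)

a = ["cat", "cat", "dog", "dog", "cat", "dog"]
b = ["cat", "dog", "dog", "dog", "cat", "cat"]
print(cohens_kappa(a, b))  # observed agreement 4/6, chance agreement 0.5
```

Here the annotators agree on four of six items, but because half of that agreement would be expected by chance, kappa is much lower than the raw 67% agreement suggests.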

Compliance is intrinsic to success because it ensures that annotators follow ethical principles, confidentiality rules, and data-handling protocols. Compliance monitoring can include audits, spot checks, or automated filters that identify deviations or suspicious patterns. Clear documentation and regular reminders help participants understand expectations and consequences. Consistent enforcement builds trust in the dataset's integrity and supports the long-term reliability of the labeling process.
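
Automated filters for suspicious patterns can start very simply. This sketch assumes each submission records the annotator, the label, and the seconds spent on the item; it flags annotators whose median time per item is implausibly short or who assign nearly the same label to everything. The function name and all thresholds are hypothetical and should be calibrated on real task data, and a flag is best treated as a trigger for a human spot check rather than an automatic penalty.

```python
from collections import Counter, defaultdict
from statistics import median

def suspicious_annotators(submissions, min_median_seconds=2.0,
                          max_single_label_share=0.95, min_items=20):
    """Flag annotators whose work shows simple red-flag patterns.

    submissions: iterable of (annotator, label, seconds_spent) tuples.
    Returns {annotator: [reasons]} for annotators with at least min_items items.
    """
    by_annotator = defaultdict(list)
    for annotator, label, seconds in submissions:
        by_annotator[annotator].append((label, seconds))

    flags = {}
    for annotator, rows in by_annotator.items():
        if len(rows) < min_items:
            continue  # too little data to judge fairly
        reasons = []
        if median([seconds for _, seconds in rows]) < min_median_seconds:
            reasons.append("implausibly fast")
        top_count = Counter(label for label, _ in rows).most_common(1)[0][1]
        if top_count / len(rows) > max_single_label_share:
            reasons.append("near-constant labels")
        if reasons:
            flags[annotator] = reasons
    return flags

demo = [("ann_fast", "cat" if i % 2 else "dog", 0.5) for i in range(25)]
demo += [("ann_steady", "cat" if i % 2 else "dog", 6.0) for i in range(25)]
print(suspicious_annotators(demo))  # {'ann_fast': ['implausibly fast']}
```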

Summary

Labeling contests balance collaboration and competition to improve the quality and efficiency of data annotation. They create motivation and accountability by having multiple annotators compete while sharing common goals and resources. The result is better datasets and, in turn, more effective AI training.

Running these contests successfully requires clear guidelines, transparent scoring, and tools that encourage both competition and collaboration. An engaging environment with feedback, gamification, and open communication fosters sustained participation and collective growth. Lessons from team dynamics and competitive sales show that fairness, recognition, and trust are essential to maintaining motivation and quality.

FAQ

What is the main idea behind labeling contests?

Labeling contests combine collaboration and competition to improve data annotation quality and speed. Participants compete on shared tasks while working toward common goals.

How do collaborative labeling competitions work?

Multiple annotators label the same dataset using shared guidelines and metrics. They compete for accuracy and speed while benefiting from common resources and feedback.

Why is balancing competition and collaboration important?

Competition motivates participants to perform better, while collaboration fosters learning and consistency. Together, they drive innovation and maintain quality.

What are the key benefits of running labeling contests?

Contests improve label accuracy, speed up annotation, bring diverse perspectives, provide quality checks, and help identify skilled annotators.

How can insights from sales teams help in labeling contests?

Sales teams show the value of clear goals, transparency, and fairness. Applied to labeling contests, these principles build trust and keep participants motivated.

What strategies ensure effective labeling contests?

Clear guidelines, balanced scoring for speed and accuracy, continuous feedback, and transparency help maintain quality and engagement.

How does gamification enhance contest frameworks?

Gamification adds motivation through badges, levels, or rewards, making tasks more engaging without distracting from quality.

Why is fostering a culture of collective growth valuable?

It encourages participants to learn from each other and focus on shared progress, improving retention and overall annotation quality.

What metrics are used to measure success in labeling contests?

Accuracy, consistency, inter-annotator agreement, and compliance with guidelines and ethical standards are commonly tracked.

How is compliance maintained during labeling contests?

Organizers enforce rules through audits, spot checks, clear documentation, and regular communication to ensure data integrity and ethical practices.
