Camera-LiDAR Calibration: Multi-Sensor Alignment for Autonomous Vehicles

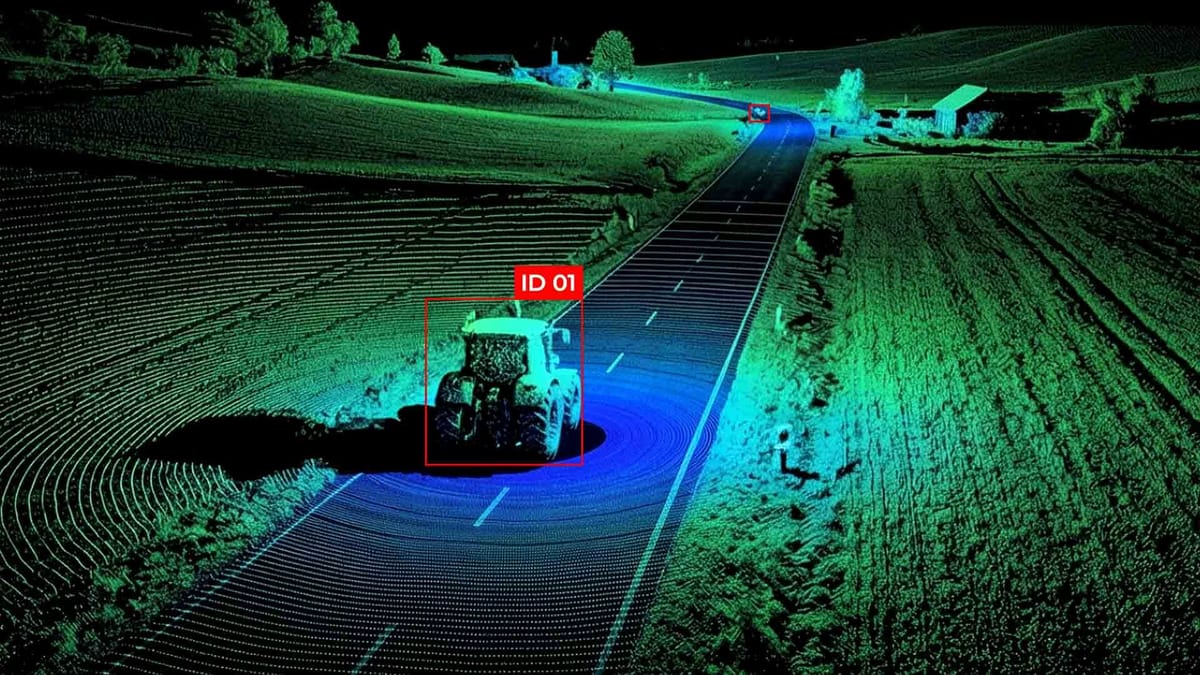

Modern cars are often equipped with a variety of sensors, such as cameras, LiDAR, and radar, each providing unique information about the environment. The real challenge is integrating data from these disparate sensors into a single, coherent representation of the environment. Camera-LiDAR calibration establishes accurate spatial correspondence between visual and 3D data, which enables autonomous driving systems to estimate distances precisely, recognize objects, and make informed decisions in real time.

Key Takeaways

- Small misalignments have a disproportionately large effect on perception and safety, because angular errors grow with distance.

- Reproducible calibration stacks reduce integration risk and speed up deployment.

- Practical metrics help teams set clear acceptance thresholds.

Understanding extrinsic parameters and multi-sensor alignment

In multi-sensor autonomous vehicle systems, the extrinsic parameters of the cameras and LiDAR are the key elements. Intrinsic parameters describe the internal properties of an individual sensor, such as a camera's focal length, principal point, and lens distortion. Extrinsic parameters, by contrast, describe the sensor's position and orientation relative to other sensors or to the vehicle's coordinate frame. They consist of two components:

- Rotation - the sensor's orientation in 3D space relative to the reference frame, usually expressed as a 3×3 rotation matrix, a quaternion, or roll/pitch/yaw angles.

- Translation - the sensor's position offset (x, y, z) relative to a chosen reference sensor or the vehicle origin.
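In code, the two components are commonly packed into a single 4×4 homogeneous transform. The minimal sketch below assumes NumPy and uses a hypothetical naming convention: `T_cam_lidar` maps points from the LiDAR frame into the camera frame, p_cam = R p_lidar + t. The inverse transform follows directly from the orthogonality of R.

```python
import numpy as np

def make_extrinsic(R: np.ndarray, t: np.ndarray) -> np.ndarray:
    """Pack a 3x3 rotation R and a translation 3-vector t into a 4x4 transform."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T

def invert_extrinsic(T: np.ndarray) -> np.ndarray:
    """Invert a rigid transform using R^T instead of a general matrix inverse."""
    R, t = T[:3, :3], T[:3, 3]
    T_inv = np.eye(4)
    T_inv[:3, :3] = R.T
    T_inv[:3, 3] = -R.T @ t
    return T_inv

def transform_points(T: np.ndarray, points: np.ndarray) -> np.ndarray:
    """Apply p' = R p + t to every row of an (N, 3) point array."""
    return points @ T[:3, :3].T + T[:3, 3]

# Hypothetical example: 90-degree rotation about z plus a 0.5 m offset along x.
theta = np.pi / 2
R = np.array([[np.cos(theta), -np.sin(theta), 0.0],
              [np.sin(theta), np.cos(theta), 0.0],
              [0.0, 0.0, 1.0]])
T_cam_lidar = make_extrinsic(R, np.array([0.5, 0.0, 0.0]))
p_cam = transform_points(T_cam_lidar, np.array([[1.0, 0.0, 0.0]]))  # ~[[0.5, 1.0, 0.0]]
p_back = transform_points(invert_extrinsic(T_cam_lidar), p_cam)     # ~[[1.0, 0.0, 0.0]]
```

Storing the transform as a 4×4 matrix makes chaining frames (LiDAR to vehicle to camera) a plain matrix product.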

Accurate determination of these parameters is critical for multi-sensor alignment, the process of combining data from different sensors in a single coordinate system. For example, LiDAR provides accurate 3D distances to objects, while the camera provides rich color and texture. Without proper calibration, superimposing 3D points on the camera image places depth values on the wrong pixels. This leads to inaccurate object recognition and potential navigation errors.
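Superimposing LiDAR points on the image combines the extrinsic parameters with the camera intrinsics. The sketch below makes several assumptions:

- an undistorted pinhole camera with a known 3×3 intrinsic matrix `K` (lens distortion is ignored),
- the hypothetical convention p_cam = R p_lidar + t stored in a 4×4 `T_cam_lidar`,
- illustrative axis conventions and camera parameters in the usage example.

Points behind the camera or outside the image are dropped.

```python
import numpy as np

def project_lidar_to_image(points_lidar, T_cam_lidar, K, width, height):
    """Project (N, 3) LiDAR points to pixels.

    Returns (uv, depth, mask): pixel coordinates and depths of the points that
    lie in front of the camera and inside the image, plus the boolean mask.
    """
    # 1. Extrinsics: move points from the LiDAR frame into the camera frame.
    pts_cam = points_lidar @ T_cam_lidar[:3, :3].T + T_cam_lidar[:3, 3]
    depth = pts_cam[:, 2]
    in_front = depth > 1e-6  # points behind the camera cannot be projected
    # 2. Intrinsics: pinhole model, u = fx * X / Z + cx, v = fy * Y / Z + cy.
    uvw = pts_cam[in_front] @ K.T
    uv = uvw[:, :2] / uvw[:, 2:3]
    # 3. Keep only points that land inside the image bounds.
    inside = (uv[:, 0] >= 0) & (uv[:, 0] < width) & (uv[:, 1] >= 0) & (uv[:, 1] < height)
    mask = np.zeros(len(points_lidar), dtype=bool)
    mask[np.flatnonzero(in_front)[inside]] = True
    return uv[inside], depth[mask], mask

# Example with assumed values: 640x480 image, fx = fy = 500 px, LiDAR axes
# (x forward, y left, z up) mapped to camera axes (x right, y down, z forward).
K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
T = np.eye(4)
T[:3, :3] = [[0, -1, 0], [0, 0, -1], [1, 0, 0]]
pts = np.array([[10.0, 1.0, 0.0],    # 10 m ahead, 1 m to the left
                [-5.0, 0.0, 0.0]])   # 5 m behind the sensor
uv, depth, mask = project_lidar_to_image(pts, T, K, 640, 480)
# uv -> [[270., 240.]], depth -> [10.], mask -> [True, False]
```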

The multi-sensor alignment process typically involves several key steps:

- Preliminary determination of extrinsic parameters using physical measurements or CAD models of the vehicle.

- Calibration optimization through computational algorithms that minimize the deviation between LiDAR points projected onto the camera image and the corresponding image features.

- Accuracy validation through testing on real or synthetic data, ensuring consistency between sensor streams under different operating conditions.
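The first step, an initial estimate from physical measurements or CAD data, often amounts to converting mounting positions and angles into a transform. The sketch below rests on several assumptions that differ between platforms:

- a vehicle frame with x forward, y left, z up,
- a LiDAR mounted with its axes aligned to that frame,
- a camera optical frame with x right, y down, z forward,
- roll/pitch/yaw composed as Rz·Ry·Rx.

All names and numbers are hypothetical.

```python
import numpy as np

def rpy_to_matrix(roll, pitch, yaw):
    """Rotation from roll/pitch/yaw in radians, composed as Rz(yaw) @ Ry(pitch) @ Rx(roll)."""
    cr, sr = np.cos(roll), np.sin(roll)
    cp, sp = np.cos(pitch), np.sin(pitch)
    cy, sy = np.cos(yaw), np.sin(yaw)
    Rx = np.array([[1.0, 0.0, 0.0], [0.0, cr, -sr], [0.0, sr, cr]])
    Ry = np.array([[cp, 0.0, sp], [0.0, 1.0, 0.0], [-sp, 0.0, cp]])
    Rz = np.array([[cy, -sy, 0.0], [sy, cy, 0.0], [0.0, 0.0, 1.0]])
    return Rz @ Ry @ Rx

# Assumed fixed axis swap from camera body axes (x fwd, y left, z up)
# to camera optical axes (x right, y down, z forward).
R_OPTICAL_FROM_BODY = np.array([[0.0, -1.0, 0.0],
                                [0.0, 0.0, -1.0],
                                [1.0, 0.0, 0.0]])

def initial_extrinsic(cam_rpy_deg, cam_xyz, lidar_xyz):
    """Initial LiDAR-to-camera transform from (hypothetical) CAD mounting data.

    cam_rpy_deg: camera mounting angles in the vehicle frame, degrees.
    cam_xyz, lidar_xyz: mounting positions in the vehicle frame, meters.
    """
    R_vehicle_from_cam = rpy_to_matrix(*np.radians(cam_rpy_deg))
    # p_cam = R_opt @ R_vehicle_from_cam^T @ (p_lidar + lidar_xyz - cam_xyz)
    R = R_OPTICAL_FROM_BODY @ R_vehicle_from_cam.T
    t = R @ (np.asarray(lidar_xyz, dtype=float) - np.asarray(cam_xyz, dtype=float))
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T

T0 = initial_extrinsic((0.0, 0.0, 0.0), cam_xyz=(1.5, 0.0, 1.2), lidar_xyz=(1.0, 0.0, 1.8))
p = T0[:3, :3] @ np.array([10.0, 0.0, 0.0]) + T0[:3, 3]  # ~[0.0, -0.6, 9.5]
```

A point 10 m ahead of the LiDAR appears 9.5 m in front of the camera and 0.6 m above it (negative y in the optical frame), which matches the assumed mounting offsets.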

Methods at a glance: targetless vs. target-based calibration

Main workflow: estimating camera-LiDAR extrinsic parameters

Calibration of camera-LiDAR systems aims to determine the extrinsic calibration, meaning the rotation and translation parameters that describe the relative position and orientation of the sensors. A typical workflow includes several sequential steps:

- Sensor preparation and initial assessment. The camera and LiDAR are installed on a vehicle or test platform. Initial estimates of the extrinsic parameters can be obtained from CAD models, physical measurements, or approximate mounting positions. This step provides a starting point for the subsequent alignment.

- Data acquisition. Both sensors record time-synchronized data. For target-based methods, this typically means images and point clouds of a calibration target captured from different viewing angles. Targetless methods use natural scenes instead. The recordings must cover a sufficient range of angles and distances so that the 2D-3D correspondences, and the optimization that follows, are well constrained.

- Feature extraction. Relevant features are extracted from the sensor data. In target-based methods, these can be corners, marker centers, or edges. In targetless methods, they include planar surfaces, edges, or keypoints in 2D and 3D.

- Establishing correspondences. Features from the camera and LiDAR are matched to each other. The quality of these correspondences directly limits the achievable calibration accuracy, because every incorrect match biases the estimated extrinsic parameters.

- Optimization of extrinsic parameters. An optimization algorithm minimizes the reprojection error, i.e., the distance between the projected LiDAR points and their corresponding camera features. The result is a rotation matrix and a translation vector that align the two sensors.

- Validation and refinement. The calibration is verified by overlaying the projected point cloud on the camera image, or by rendering the fused 3D scene, and checking how well the data line up. If necessary, the calibration is refined iteratively until the desired accuracy is reached. Verification should also be repeated under various environmental conditions.

- Integration into the system. After validation, the resulting extrinsic calibration parameters are integrated into the autonomous control system to accurately combine LiDAR and camera data, ensuring reliable scene perception and supporting autonomous navigation.
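For target-based setups, the correspondence and optimization steps can be illustrated with a closed-form solver. The sketch assumes a hypothetical pipeline in which the same target corners have been located both in the LiDAR point cloud and in the camera frame. The camera-frame corners could come, for example, from estimating the board pose with a PnP solver such as OpenCV's `solvePnP`.

Given matched 3D-3D pairs, the Kabsch (orthogonal Procrustes) algorithm returns the rotation and translation that minimize the sum of squared point distances. This is a swapped-in technique, not the reprojection-error optimization itself. In practice, its output often serves as the starting point for that refinement.

```python
import numpy as np

def kabsch(P_lidar: np.ndarray, P_cam: np.ndarray):
    """Least-squares R, t with P_cam ~= P_lidar @ R.T + t.

    P_lidar, P_cam: matched (N, 3) points, N >= 3 and not collinear.
    """
    mu_l = P_lidar.mean(axis=0)
    mu_c = P_cam.mean(axis=0)
    H = (P_lidar - mu_l).T @ (P_cam - mu_c)  # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a rotation (det = +1), not a reflection.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = mu_c - R @ mu_l
    return R, t

# Synthetic check with hypothetical data: rotate and shift random "corners".
rng = np.random.default_rng(0)
corners_lidar = rng.uniform(-2.0, 2.0, size=(8, 3))
angle = 0.3
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle), np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([0.1, -0.2, 0.5])
corners_cam = corners_lidar @ R_true.T + t_true
R_est, t_est = kabsch(corners_lidar, corners_cam)
```

With noise-free correspondences the true transform is recovered exactly. With real detections, a single wrong corner match shifts the result, which is why correspondence quality matters so much.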

Targetless pipeline using adaptive voxelization (IEEE TIM)

In modern autonomous driving systems, targetless calibration methods are emerging as an effective alternative to traditional target-based methods, because they allow extrinsic calibration under real-world field conditions without special targets. One such approach is a targetless pipeline based on adaptive voxelization, proposed for calibrating cameras and LiDARs with a small field of view (FoV). It combines segmenting the point cloud into adaptive voxels with parameter optimization to achieve accurate sensor alignment. The stages of this pipeline are:

- Adaptive voxelization of the LiDAR cloud - instead of using fixed-size cubic voxels, the point cloud is segmented dynamically according to the local shape and density of the data. This reduces the number of redundant elements that must be searched and matched during 2D-3D projection.

- Feature extraction and matching - key geometric features are extracted from each voxel and matched with 2D features from the camera images. This establishes correspondences between the two sensors without physical targets.

- Optimization via bundle adjustment - the extrinsic parameters are computed by minimizing a cost function that expresses the 2D-3D projection error on the image plane. To speed up the computation, the authors derived the second derivatives of this cost with respect to the extrinsic parameters.

- Validation and accuracy assessment - after optimization, the results are evaluated on different datasets covering various scenes, measuring the calibration accuracy and the stability of the sensor alignment.
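The core idea of adaptive voxelization can be illustrated with a recursive octree split. The following is a simplified sketch, not the authors' implementation. It assumes that a voxel is accepted once its points are approximately planar, judged by a hypothetical eigenvalue-ratio threshold. Otherwise the voxel is split into eight children, up to a maximum depth. Function names, thresholds, and the planarity test are illustrative.

```python
import numpy as np

def is_planar(points: np.ndarray, ratio: float = 0.01) -> bool:
    """Planar if the smallest covariance eigenvalue is tiny relative to the largest
    (hypothetical threshold)."""
    eigvals = np.linalg.eigvalsh(np.cov(points.T))  # ascending order
    return bool(eigvals[0] < ratio * eigvals[-1])

def adaptive_voxelize(points, center, half_size, depth=0,
                      max_depth=4, min_points=10, planes=None):
    """Recursively split a cubic voxel into 8 children until its points form a plane.

    Returns a list of (center, half_size, points) tuples for the accepted voxels.
    """
    if planes is None:
        planes = []
    if len(points) < min_points:
        return planes  # too sparse to estimate a plane reliably
    if is_planar(points):
        planes.append((center, half_size, points))
        return planes
    if depth == max_depth:
        return planes  # still not planar at the finest level: discard
    # Octant index: bit 0 = x above center, bit 1 = y above, bit 2 = z above.
    octant = ((points > center) * np.array([1, 2, 4])).sum(axis=1)
    for k in range(8):
        sign = np.array([1.0 if k & b else -1.0 for b in (1, 2, 4)])
        adaptive_voxelize(points[octant == k], center + sign * half_size / 2,
                          half_size / 2, depth + 1, max_depth, min_points, planes)
    return planes

# Hypothetical scene: a floor (z = 0.3) and a wall (x = 3.7) inside a 4 m cube.
rng = np.random.default_rng(1)
floor = np.column_stack([rng.uniform(0, 4, 500), rng.uniform(0, 4, 500), np.full(500, 0.3)])
wall = np.column_stack([np.full(500, 3.7), rng.uniform(0, 4, 500), rng.uniform(0, 4, 500)])
voxels = adaptive_voxelize(np.vstack([floor, wall]), np.array([2.0, 2.0, 2.0]), 2.0)
```

Regions containing only one surface stop splitting early, and only the regions where surfaces meet are subdivided further. This is where the saving over a uniformly fine grid comes from in this sketch.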

Compared to other targetless approaches that often rely on classical data structures or fixed segmentation steps, adaptive voxelization reduces computation time and increases overall calibration efficiency without compromising accuracy.

Tooling, stack, and reproducible setups

Optimization, errors, and accuracy metrics

During extrinsic calibration of camera-LiDAR systems, the goal is precise sensor alignment. LiDAR data and camera images must overlap exactly, so that 3D LiDAR points project onto the correct pixels of the 2D camera image (2D-3D projection). To achieve this, optimization algorithms search for the rotation and translation that minimize the error between these projections and the corresponding scene features in the image.
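This optimization can be sketched as a small Gauss-Newton solver over six parameters: a rotation vector and a translation. This is a minimal illustration under stated assumptions:

- correspondences (LiDAR point, image pixel) are already matched,
- `K` is known and lens distortion has been removed,
- the Jacobian is approximated by central finite differences.

The helper names are hypothetical. Production pipelines more commonly use analytic Jacobians, robust losses, and a Levenberg-Marquardt solver, for example from Ceres Solver or SciPy's `least_squares`.

```python
import numpy as np

def rodrigues(rvec: np.ndarray) -> np.ndarray:
    """Rotation matrix from an axis-angle vector (Rodrigues' formula)."""
    theta = np.linalg.norm(rvec)
    if theta < 1e-12:
        return np.eye(3)
    k = rvec / theta
    Kx = np.array([[0.0, -k[2], k[1]],
                   [k[2], 0.0, -k[0]],
                   [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * Kx + (1.0 - np.cos(theta)) * (Kx @ Kx)

def residuals(params, pts_lidar, uv_obs, K):
    """Pixel residuals: projected LiDAR points minus their matched image features."""
    R, t = rodrigues(params[:3]), params[3:]
    pts_cam = pts_lidar @ R.T + t
    uvw = pts_cam @ K.T
    return (uvw[:, :2] / uvw[:, 2:3] - uv_obs).ravel()

def refine_extrinsic(params0, pts_lidar, uv_obs, K, iters=20, eps=1e-6):
    """Gauss-Newton over [rotation vector (3), translation (3)] with a numeric Jacobian."""
    params = np.asarray(params0, dtype=float).copy()
    for _ in range(iters):
        r = residuals(params, pts_lidar, uv_obs, K)
        J = np.empty((r.size, 6))
        for j in range(6):  # central finite differences, one parameter at a time
            step = np.zeros(6)
            step[j] = eps
            J[:, j] = (residuals(params + step, pts_lidar, uv_obs, K)
                       - residuals(params - step, pts_lidar, uv_obs, K)) / (2.0 * eps)
        # Solve the linearized least-squares problem J @ delta = -r.
        delta = np.linalg.lstsq(J, -r, rcond=None)[0]
        params += delta
        if np.linalg.norm(delta) < 1e-12:
            break
    return params
```

Because the extrinsic transform is the same for every frame, correspondences from several frames can be concatenated into `pts_lidar` and `uv_obs` before calling `refine_extrinsic`. This is one simple way to use multiple frames at once.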

One of the primary errors evaluated during calibration is the reprojection error. This is the distance, in pixels, between LiDAR points projected onto the image and the corresponding features detected in the camera image. A large reprojection error indicates that the sensors are not correctly aligned. Another important metric is the alignment error, which measures how accurately the sensors are aligned with each other in space. It is typically reported as a rotation error (in degrees) and a translation error (in meters or centimeters) relative to a reference calibration. Together, these indicators assess the overall calibration accuracy, i.e., how closely the estimated extrinsic parameters match the actual sensor poses.
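These metrics are straightforward to compute. The helpers below are a hedged sketch with hypothetical function names. Reprojection error is summarized as an RMSE in pixels. Alignment error is split into a rotation angle and a translation distance, which assumes that a reference calibration is available for comparison, for example from simulation or a trusted target-based run.

```python
import numpy as np

def reprojection_rmse(uv_proj: np.ndarray, uv_obs: np.ndarray) -> float:
    """Root-mean-square pixel distance between projected points and matched features."""
    err = np.linalg.norm(uv_proj - uv_obs, axis=1)
    return float(np.sqrt(np.mean(err ** 2)))

def rotation_error_deg(R_est: np.ndarray, R_ref: np.ndarray) -> float:
    """Angle of the relative rotation R_est^T @ R_ref, in degrees."""
    cos_angle = (np.trace(R_est.T @ R_ref) - 1.0) / 2.0
    return float(np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0))))

def translation_error(t_est, t_ref) -> float:
    """Euclidean distance between translation vectors (same units as t)."""
    return float(np.linalg.norm(np.asarray(t_est, dtype=float) - np.asarray(t_ref, dtype=float)))

rmse = reprojection_rmse(np.array([[103.0, 204.0]]), np.array([[100.0, 200.0]]))  # 5.0 px
```

The `np.clip` guards against round-off pushing the cosine slightly outside [-1, 1] when the two rotations are nearly identical.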

To improve accuracy, optimization algorithms can use correspondences from multiple frames or point clouds simultaneously and refine the extrinsic parameters jointly against all of them. This compensates for sensor noise, incomplete data in individual frames, and small errors in the initial setup. It is also crucial to account for systematic errors, such as those caused by inaccurate sensor mounting or incorrect time synchronization between the sensors.
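A back-of-the-envelope calculation shows why small mounting or synchronization errors matter. The sketch assumes a pinhole camera and small angles. The example numbers (range, speed, time offset, focal length) are illustrative only, and the function names are hypothetical.

```python
import math

def lateral_offset_from_rotation(range_m: float, angle_error_deg: float) -> float:
    """Metric displacement of a point at range_m caused by an angular error."""
    return range_m * math.tan(math.radians(angle_error_deg))

def offset_from_time_skew(speed_mps: float, time_offset_s: float) -> float:
    """Distance the vehicle travels during an unmodeled camera-LiDAR time offset."""
    return speed_mps * time_offset_s

def pixel_shift(offset_m: float, range_m: float, focal_px: float) -> float:
    """Approximate image-plane shift for a lateral offset seen at range_m (pinhole model)."""
    return focal_px * offset_m / range_m

print(round(lateral_offset_from_rotation(50.0, 0.5), 3))  # 0.436 m at 50 m for a 0.5 deg error
print(round(offset_from_time_skew(20.0, 0.010), 3))       # 0.2 m at 72 km/h with a 10 ms offset
print(round(pixel_shift(lateral_offset_from_rotation(50.0, 0.5), 50.0, 1000.0), 1))  # 8.7 px
```

For a pure rotation error, the pixel shift is about f·tan(angle) at every range, while the corresponding metric offset grows linearly with distance.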

Summary

Calibration of camera-LiDAR systems is a crucial step in ensuring reliable autonomous navigation, because extrinsic calibration determines the exact geometric relationship between the sensors. Proper sensor alignment allows 3D LiDAR point clouds to be combined accurately with camera images through precise 2D-3D projection.

For effective calibration, it is important to ensure high data quality, controlled conditions, and adequate sensor preparation. Methods are either target-based, using calibration targets to increase accuracy, or targetless, such as the adaptive voxelization pipeline, which allows calibration to be performed in the field without additional targets.

FAQ

What is extrinsic calibration in camera-LiDAR systems?

Extrinsic calibration determines the rotation and translation between the camera and LiDAR. It ensures accurate sensor alignment for combining 3D point clouds with camera images.

Why is sensor alignment important?

Proper sensor alignment enables LiDAR points to overlay correctly on camera images (2D-3D projection). This is essential for accurate perception and autonomous navigation.

What is a 2D‑3D projection?

2D-3D projection maps 3D LiDAR points onto the 2D camera image using the extrinsic and intrinsic parameters. It is used both to verify a calibration visually and as the basis of the reprojection error that optimization minimizes.

What are target-based calibration methods?

Target-based methods utilize known patterns, such as chessboards or markers, to compute extrinsic parameters. They provide high calibration accuracy but require controlled environments.

What are targetless calibration methods?

Targetless methods rely on natural features or sensor motion to perform calibration without physical targets. Adaptive voxelization is an example that improves sensor alignment in the field.

How is calibration accuracy evaluated?

Calibration accuracy is assessed using metrics like reprojection error and alignment error. Low errors indicate precise extrinsic calibration.

What role does optimization play in calibration?

Optimization algorithms adjust rotation and translation to minimize projection errors. This step directly improves sensor alignment and 2D‑3D projection fidelity.

Why is reproducibility important in calibration setups?

Reproducible setups using tools like Docker or ROS ensure consistent extrinsic calibration results across different systems. This maintains reliable calibration accuracy.

What data are necessary for effective calibration?

High-quality, synchronized LiDAR and camera data covering various angles and distances are needed. This supports accurate sensor alignment and robust 2D‑3D projection.

How do errors affect autonomous vehicle perception?

Calibration errors misalign 2D-3D projections, so LiDAR depth is assigned to the wrong image regions. This can lead to incorrect object detection and unsafe navigation.
