Calibration Test Sets: Annotating Data to Assess Model Reliability

Bad cables or misconfigured instruments can derail entire projects, wasting time and resources. Ensuring reliability in data-driven systems requires thorough validation at every stage.

One practical approach is to pair detailed data annotation with performance metrics and confidence evaluation, so that each component can be checked against strict, documented standards.

Regular validation confirms that equipment remains stable, much as semiconductor manufacturers rely on contamination-control metrics for quality control. This practice helps keep devices performing as specified and builds confidence in the results.

Key Points

- Structured test suites uncover hidden flaws in measurement systems.

- Automated verification tools reduce human error and delays.

- Regular verification aligns instruments with industry standards.

- Proactive verification prevents costly mistakes in critical workflows.

Calibration Test Sets Overview

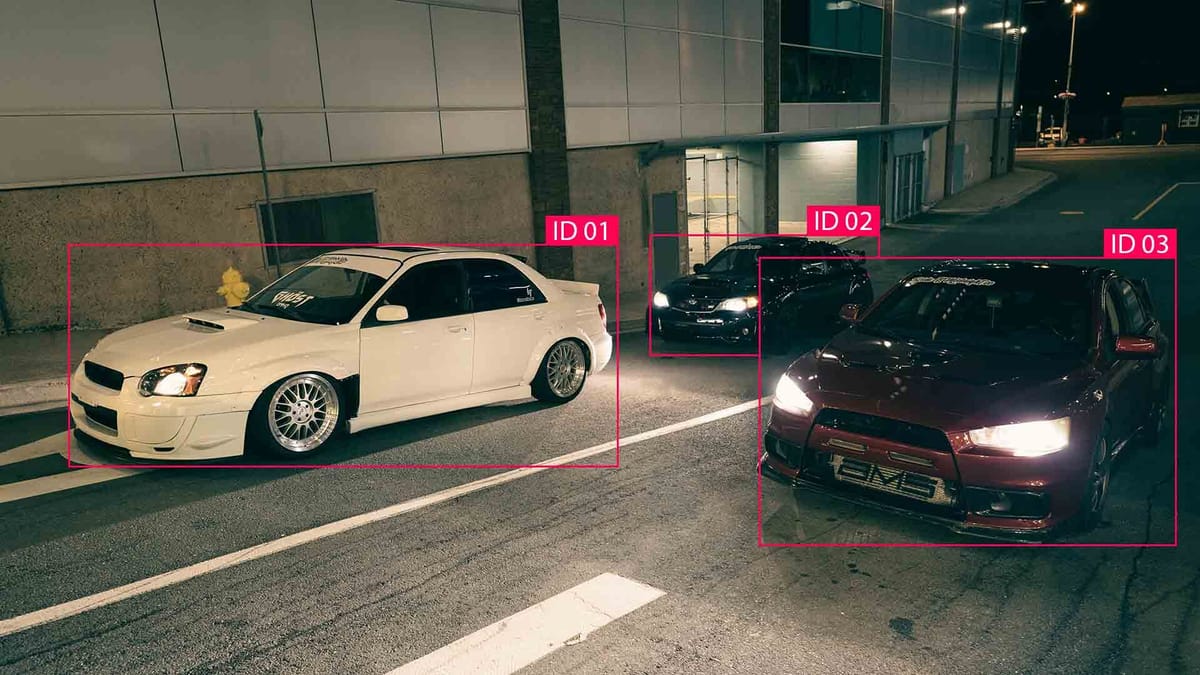

Calibration test sets consist of input data paired with verified reference results. They provide a controlled environment for checking whether devices or algorithms produce values that meet predetermined criteria.

Three principles define a good calibration test set:

- Standardization. Compliance with factory protocols ensures consistency between measurements.

- Traceability. Each data point is linked to a reference standard so that it can be verified.

- Detail. Capturing small differences in the output data prevents errors from accumulating unnoticed.

Understanding Calibration and Validation Checks

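Calibration is the process of aligning a model’s predicted probabilities with observed outcome frequencies, so that an AI model is neither overconfident nor underconfident in its predictions. For example, among all cases a well-calibrated model scores at 0.8, about 80% should turn out to be positive.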
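To make the definition concrete, here is a minimal sketch of a binned calibration error for a binary classifier: predictions are grouped into equal-width probability bins, and the gap between the mean predicted probability and the observed positive rate in each bin is averaged, weighted by bin size. This is one common variant of expected calibration error (ECE); the function name and the default of 10 bins are assumptions for illustration.

```python
import numpy as np

def expected_calibration_error(probs, labels, n_bins=10):
    """Binned calibration error for a binary classifier.

    probs  -- predicted probability of the positive class, shape (n,)
    labels -- true labels in {0, 1}, shape (n,)
    """
    probs = np.asarray(probs, dtype=float)
    labels = np.asarray(labels, dtype=float)
    # Equal-width bins over [0, 1]; a probability of exactly 1.0 goes into the last bin.
    bin_ids = np.minimum((probs * n_bins).astype(int), n_bins - 1)
    ece = 0.0
    for b in range(n_bins):
        in_bin = bin_ids == b
        if not in_bin.any():
            continue
        # Gap between average predicted probability and observed positive rate.
        gap = abs(probs[in_bin].mean() - labels[in_bin].mean())
        # Weight the gap by the fraction of samples that fall in this bin.
        ece += in_bin.mean() * gap
    return ece

# A model that says 0.9 but is right only half the time is badly overconfident.
print(expected_calibration_error([0.9, 0.9, 0.9, 0.9], [1, 0, 1, 0]))
```

A value near zero means predicted probabilities match observed frequencies; larger values indicate over- or underconfidence.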

Validation checks assess the quality and generalizability of an AI model on new, unseen data. They help confirm that the model is not overfitted to its training data and that it performs well on real-world problems.
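A basic validation check compares performance on the training data with performance on a held-out split; a large gap suggests overfitting. The sketch below assumes scikit-learn is installed; the synthetic dataset and the `MAX_GAP` threshold are hypothetical choices, not recommended values.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

MAX_GAP = 0.05  # hypothetical tolerated drop from training to validation accuracy

def generalization_gap(model, X, y, test_size=0.3, seed=0):
    """Fit on a training split and return (train_acc, val_acc, gap)."""
    X_tr, X_val, y_tr, y_val = train_test_split(
        X, y, test_size=test_size, random_state=seed, stratify=y
    )
    model.fit(X_tr, y_tr)
    train_acc = model.score(X_tr, y_tr)  # accuracy on data the model has seen
    val_acc = model.score(X_val, y_val)  # accuracy on data it has not seen
    return train_acc, val_acc, train_acc - val_acc

# Synthetic data with 20% label noise; an unpruned tree memorizes the noise.
X, y = make_classification(n_samples=1000, flip_y=0.2, random_state=0)
train_acc, val_acc, gap = generalization_gap(DecisionTreeClassifier(random_state=0), X, y)
overfitted = gap > MAX_GAP
print(f"train={train_acc:.3f} val={val_acc:.3f} overfitted={overfitted}")
```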

Key Concepts and Terminology

- Traceability. Linking measurements to recognized reference standards makes results auditable across laboratories.

- Uncertainty Budgets. An uncertainty budget lists each source of uncertainty, such as environmental factors and instrument limitations, and combines them into a single stated range.

- Tolerance Thresholds. Specified limits of error separate acceptable drift from critical failures.
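The last two terms can be combined in a short sketch. It assumes the uncertainty components are independent and already expressed as standard uncertainties in the same unit, combines them by root-sum-of-squares, and applies a simple guard-band decision rule with coverage factor k = 2. The component names, the rule itself, and the three-way outcome are illustrative assumptions; real decision rules depend on the applicable standard.

```python
import math

def combined_standard_uncertainty(components):
    """Root-sum-of-squares of independent standard uncertainties (same units)."""
    return math.sqrt(sum(u ** 2 for u in components.values()))

def check_against_tolerance(deviation, tolerance, u_c, k=2.0):
    """Classify a deviation from the reference value against a tolerance limit.

    The expanded uncertainty U = k * u_c widens the measured deviation into a band.
    """
    U = k * u_c
    if abs(deviation) + U <= tolerance:
        return "pass"           # the whole uncertainty band lies inside the tolerance
    if abs(deviation) - U > tolerance:
        return "fail"           # the whole band lies outside the tolerance
    return "indeterminate"      # the band straddles the limit; re-measure or investigate

# Hypothetical budget for a temperature sensor, in degrees Celsius.
budget = {"reference_thermometer": 0.3, "ambient_variation": 0.4}
u_c = combined_standard_uncertainty(budget)  # sqrt(0.3**2 + 0.4**2), about 0.5
print(check_against_tolerance(deviation=0.2, tolerance=1.5, u_c=u_c))
```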

Step-by-step process for annotating test data

Start by cleaning the raw data to remove corrupted or incomplete records. For sensor validation projects, this includes:

- Filtering readings outside of operating temperature ranges.

- Aligning timestamps across multiple devices.

- Annotating the raw data according to factory protocols.
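The first two cleaning steps might look like this in pandas. This is a sketch under several assumptions: readings arrive as DataFrames with hypothetical columns `timestamp`, `temperature_c`, and `value` (sensor) and `timestamp` and `ref_value` (reference device), the operating range is a placeholder, and aligning timestamps is taken to mean pairing each sensor reading with the nearest reference reading within 500 ms using `pd.merge_asof`.

```python
import pandas as pd

# Hypothetical operating range for the sensor under test, in degrees Celsius.
OPERATING_RANGE_C = (-10.0, 60.0)

def clean_and_align(sensor, reference, tolerance="500ms"):
    """Drop incomplete or out-of-range rows, then pair each sensor reading
    with the nearest reference reading in time."""
    sensor = sensor.dropna(subset=["timestamp", "temperature_c", "value"])
    lo, hi = OPERATING_RANGE_C
    sensor = sensor[sensor["temperature_c"].between(lo, hi)]
    # merge_asof requires both frames to be sorted on the join key.
    sensor = sensor.sort_values("timestamp")
    reference = reference.dropna(subset=["timestamp", "ref_value"]).sort_values("timestamp")
    aligned = pd.merge_asof(
        sensor, reference, on="timestamp",
        direction="nearest", tolerance=pd.Timedelta(tolerance),
    )
    # Readings with no reference value inside the tolerance window get NaN; drop them.
    return aligned.dropna(subset=["ref_value"]).reset_index(drop=True)

base = pd.Timestamp("2024-01-01")
sensor = pd.DataFrame({
    "timestamp": base + pd.to_timedelta([0, 1000, 2000, 3000], unit="ms"),
    "temperature_c": [25.0, 80.0, 22.0, None],  # 80.0 is out of range; None is incomplete
    "value": [1.0, 2.0, 3.0, 4.0],
})
reference = pd.DataFrame({
    "timestamp": base + pd.to_timedelta([200, 2100], unit="ms"),
    "ref_value": [1.05, 2.95],
})
aligned = clean_and_align(sensor, reference)
print(aligned)
```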

A typical calibration sequence in the instrument software:

- Press the Calibration softkey on the main menu.

- Select Auto Calibration mode.

- Run a “Plausibility Check” to compare real-time measurements to stored reference data.
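The plausibility check in the last step runs on the instrument itself. As an offline analogue, a minimal sketch compares each live reading with the stored reference value at the same position and reports the positions whose deviation exceeds a fixed limit. The function name and the absolute-deviation limit are assumptions, not the instrument’s actual algorithm.

```python
def plausibility_check(live, reference, max_abs_dev):
    """Compare live readings with stored reference readings point by point.

    Returns the indices whose absolute deviation exceeds max_abs_dev.
    """
    if len(live) != len(reference):
        raise ValueError("live and reference sequences must have the same length")
    return [i for i, (x, r) in enumerate(zip(live, reference)) if abs(x - r) > max_abs_dev]

# The third reading drifts well past the hypothetical 0.05 limit.
print(plausibility_check([10.02, 9.97, 10.4], [10.0, 10.0, 10.0], max_abs_dev=0.05))
```

An empty list means every reading is consistent with the stored reference data.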

Methods for assessing AI model validity

- Running parallel tests with known control samples.

- Setting accuracy thresholds based on equipment tolerances.

- Documenting deviation patterns for future protocol updates.

These methods help reduce false positives, and when discrepancies trigger automatic notifications, teams can complete system audits faster.
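A minimal sketch of the first and third methods: run a model on control samples with known labels, compare its accuracy with a threshold, and count each kind of mismatch so recurring deviation patterns can be documented. The `predict` callable, the sample format, and the threshold are hypothetical.

```python
from collections import Counter

def run_control_test(predict, control_samples, min_accuracy):
    """Evaluate a model on control samples with known labels.

    predict          -- callable mapping one input to a predicted label
    control_samples  -- list of (input, expected_label) pairs
    min_accuracy     -- threshold derived from equipment or model tolerances
    Returns (passed, accuracy, deviation_counts).
    """
    deviations = Counter()
    correct = 0
    for x, expected in control_samples:
        got = predict(x)
        if got == expected:
            correct += 1
        else:
            # Count (expected, predicted) pairs so recurring patterns stand out.
            deviations[(expected, got)] += 1
    accuracy = correct / len(control_samples)
    return accuracy >= min_accuracy, accuracy, deviations

# Toy threshold model and four control samples with known labels.
predict = lambda x: "high" if x > 5 else "low"
samples = [(1, "low"), (7, "high"), (6, "low"), (9, "high")]
passed, accuracy, deviations = run_control_test(predict, samples, min_accuracy=0.9)
print(passed, accuracy, dict(deviations))
```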

Implementing calibration practices

In machine learning, automatic calibration modules tune a model’s outputs so that its predicted probabilities agree more closely with actual outcomes. This is especially important in medicine, finance, and autonomous driving, where decisions depend on how far a prediction can be trusted.

Automatic calibration is usually applied as a post-processing step after an AI model has been trained. Calibration tools analyze held-out validation data, compare the model’s predicted probabilities with the true labels, and fit a correction function that maps the raw outputs to realistic probability estimates. This makes predictions easier to interpret and allows models to be used in environments with strict reliability requirements.
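As a sketch of such a correction function, the example below fits scikit-learn’s `IsotonicRegression` to validation scores and labels. The synthetic data are an assumption for illustration: the model reports a score, but the true positive rate is the square of that score, so raw scores overstate confidence. After fitting, the calibrated values should land near the true rates (about 0.04, 0.25, and 0.81 for scores 0.2, 0.5, and 0.9).

```python
import numpy as np
from sklearn.isotonic import IsotonicRegression

def fit_isotonic_calibrator(val_scores, val_labels):
    """Fit a monotone map from raw model scores to calibrated probabilities."""
    # y_min/y_max keep outputs in [0, 1]; "clip" handles scores outside the fitted range.
    calibrator = IsotonicRegression(y_min=0.0, y_max=1.0, out_of_bounds="clip")
    calibrator.fit(np.asarray(val_scores, dtype=float), np.asarray(val_labels, dtype=float))
    return calibrator

# Synthetic validation set: the true positive rate is score**2, so raw scores are overconfident.
rng = np.random.default_rng(0)
val_scores = rng.uniform(0.0, 1.0, size=20_000)
val_labels = (rng.uniform(0.0, 1.0, size=val_scores.size) < val_scores ** 2).astype(int)

calibrator = fit_isotonic_calibrator(val_scores, val_labels)
calibrated = calibrator.predict(np.array([0.2, 0.5, 0.9]))
print(calibrated)
```

Isotonic regression only assumes the correction is monotone, which makes it flexible but data-hungry; a parametric map such as Platt scaling is a common alternative when validation data are scarce.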

Tools and frameworks for automatic calibration

- Scikit-learn is a popular choice. Its `CalibratedClassifierCV` class applies Platt scaling (`method="sigmoid"`) or isotonic regression (`method="isotonic"`) combined with cross-validation, and it works with scikit-learn-compatible classifiers.

- TensorFlow Calibration is a component of the TensorFlow ecosystem that supports calibrating large neural networks, for example in medical or autonomous systems. It lets you monitor output distributions and apply corrections without changing the model’s underlying architecture.

- For PyTorch users, the `netcal` library evaluates and improves the calibration of deep learning models. It supports a range of calibration metrics and calibration methods.

These tools make calibration a routine part of model development and increase the reliability of models deployed in real systems.
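Here is a sketch of the scikit-learn route, comparing an uncalibrated Gaussian naive Bayes classifier with Platt-scaled and isotonic versions by Brier score (lower is better). The dataset is synthetic and the printed numbers will vary with data and library version; naive Bayes is used only because its probabilities are often poorly calibrated when features are redundant.

```python
from sklearn.calibration import CalibratedClassifierCV
from sklearn.datasets import make_classification
from sklearn.metrics import brier_score_loss
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB

# Synthetic data with many redundant features, which tends to make naive Bayes overconfident.
X, y = make_classification(
    n_samples=10_000, n_features=20, n_informative=2, n_redundant=10, random_state=42
)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

models = {
    "uncalibrated": GaussianNB(),
    # Platt scaling: a logistic (sigmoid) map fitted with 5-fold cross-validation.
    "sigmoid": CalibratedClassifierCV(GaussianNB(), method="sigmoid", cv=5),
    # Isotonic regression: a non-parametric monotone map; usually needs more data.
    "isotonic": CalibratedClassifierCV(GaussianNB(), method="isotonic", cv=5),
}

brier = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    prob_pos = model.predict_proba(X_test)[:, 1]  # probability of the positive class
    brier[name] = brier_score_loss(y_test, prob_pos)
    print(f"{name:>12}: Brier score = {brier[name]:.4f}")
```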

Industry applications and intermediate validations

Intermediate validations are important because they allow you to detect problems with an AI model before it is deployed.

In medicine, AI models that analyze tomography images undergo intermediate validations on held-out subsets to check that the algorithm is not biased toward particular disease subtypes or toward data from specific devices.
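A simple form of this subgroup check computes accuracy per group (for example, per disease subtype or per scanner model) and flags groups that fall well below the overall accuracy. The group labels and the `max_gap` value are hypothetical.

```python
from collections import defaultdict

def per_group_accuracy(groups, y_true, y_pred):
    """Accuracy for each group label."""
    stats = defaultdict(lambda: [0, 0])  # group -> [correct, total]
    for g, t, p in zip(groups, y_true, y_pred):
        stats[g][0] += int(t == p)
        stats[g][1] += 1
    return {g: correct / total for g, (correct, total) in stats.items()}

def flag_underperforming_groups(groups, y_true, y_pred, max_gap=0.05):
    """Return groups whose accuracy trails the overall accuracy by more than max_gap."""
    per_group = per_group_accuracy(groups, y_true, y_pred)
    overall = sum(int(t == p) for t, p in zip(y_true, y_pred)) / len(y_true)
    return {g: acc for g, acc in per_group.items() if overall - acc > max_gap}

# Hypothetical scanner labels: the model does well on scanner_A and fails on scanner_B.
groups = ["scanner_A", "scanner_A", "scanner_B", "scanner_B"]
flagged = flag_underperforming_groups(groups, [1, 0, 1, 0], [1, 0, 0, 1])
print(flagged)
```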

In the financial sector, intermediate validations are used in risk modeling. Predictions are recalibrated and verified regularly so that models do not stay tuned to outdated market conditions.

In industrial settings, intermediate validations monitor the accuracy of AI models that predict equipment failures and help adjust them for changing sensor data or external factors such as temperature and load.

Intermediate validations help identify concept drift and declining model quality early and allow teams to respond quickly, which increases confidence in AI decisions in critical areas.
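One lightweight way to spot drift is to track a calibration-sensitive score, such as the Brier score, over consecutive windows of recent predictions and flag windows that exceed a baseline by some margin. This is a sketch; the window size, baseline, and margin are assumptions that a real system would derive from its own validation history.

```python
import numpy as np

def windowed_brier(probs, labels, window):
    """Brier score (mean squared error of probabilities) per consecutive window.

    Trailing samples that do not fill a complete window are ignored.
    """
    probs = np.asarray(probs, dtype=float)
    labels = np.asarray(labels, dtype=float)
    n = (len(probs) // window) * window
    squared_errors = (probs[:n] - labels[:n]) ** 2
    return squared_errors.reshape(-1, window).mean(axis=1)

def detect_drift(probs, labels, window, baseline, margin):
    """Indices of windows whose Brier score exceeds baseline + margin."""
    scores = windowed_brier(probs, labels, window)
    return [i for i, s in enumerate(scores) if s > baseline + margin]

# The model keeps predicting 0.9, but outcomes flip in the second window.
probs = [0.9] * 8
labels = [1, 1, 1, 1, 0, 0, 0, 0]
print(detect_drift(probs, labels, window=4, baseline=0.02, margin=0.05))
```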

Summary

Accurate measurements are the foundation of reliable systems across industries. We’ve examined how structured test suites and accurate data annotation create accountability in measurement workflows. Automated validation tools reduce error rates, and scheduled equipment comparisons extend the life of instruments.

We’ve outlined three key principles: standardized protocols, traceable documentation, and attention to small deviations. Combined with proactive verification, these steps help ensure that devices meet acceptance thresholds, even under volatile conditions.

Implementing these methods minimizes risk in critical processes. To keep them effective, review your calibration procedures regularly as industry guidelines evolve.

FAQ

How do calibration test suites improve AI model accuracy?

They measure how well an AI model’s confidence scores match real-world outcomes, which shows where recalibration is needed.

What role does human validation play in data annotation?

Annotators verify annotations and resolve edge cases, ensuring datasets reflect real-world complexity.

Why are interim equipment validations critical in manufacturing?

Regularly verifying devices during production detects measurement drift early.

What is the difference between calibration and validation?

Calibration adjusts devices to established standards, while validation confirms that systems meet predefined specifications.

What tools are used for automatic calibration?

Tools used include Scikit-learn, TensorFlow Calibration, and the netcal library for PyTorch models.
