Best Data Annotation Tools 2025: Complete Platform Guide

In 2025, annotation software is an inseparable part of any machine learning project. Raw materials, such as photographs, video streams, and spatial datasets, require detailed labels that train algorithms to recognize patterns.

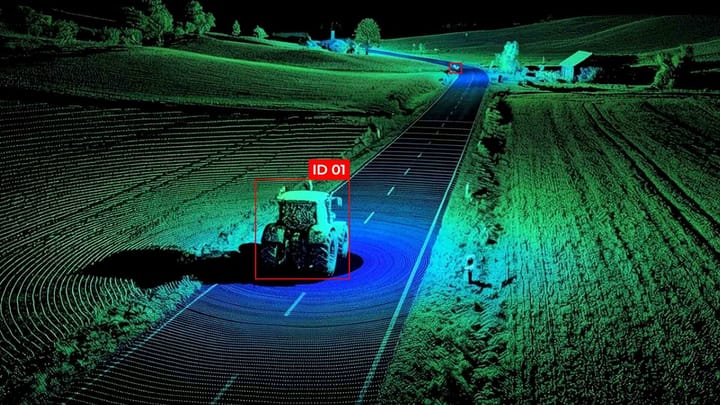

Effective labeling directly determines how well artificial intelligence performs tasks in the real world. The higher the quality of labeling, the greater the performance of models in various fields, from autonomous vehicles to medical diagnostics.

Over the past few years, annotation tools have evolved from simple labeling interfaces into full-scale solutions that support complex, multimodal datasets, including images, video, text, audio, LiDAR, and even medical scans.

The Role of Data Annotation in AI Projects

AI systems learn from meticulously structured examples, not from raw information. This transformation process converts unstructured input data into learning moments for algorithms. Data annotation creates the contextual structure necessary for machines to interpret real-world patterns.

Labeling raw materials forms the foundation of machine learning pipelines. Teams assign meaning to data using tags, boundaries, and classifications, converting pixels into actionable analytical units.

Annotation serves three key functions:

- Establishes the ground truth for algorithmic decision-making

- Enables pattern recognition across diverse datasets

- Provides measurable benchmarks for model validation

Correctly labeled elements create clear connections between pixel patterns and their meanings. Although some perceive this stage as repetitive preparatory work, this is where a project's success is decided. Thus, modern enterprises face a key challenge: choosing solutions combining accuracy and operational efficiency.

Annotation Methodologies & Tools

Annotation methods determine not only the speed of the process but also the final quality of the training dataset. In 2025, these tools are divided into clear categories depending on the level of automation.

Manual Annotation Tools

Manual annotation is the foundation for any data that requires high precision and human judgment. Even as AI advances, the final verification and labeling of the most complex cases remain with humans.

Traditional manual labeling interfaces provide operators with a set of graphical tools to create and edit annotations. Key features and tools of most platforms include:

- Polygon Segmentation. For precise outlining of complex-shaped objects.

- Bounding Boxes. Fast rectangular labeling.

- Keypoints. For determining poses or identifying anatomical landmarks.

- Quality Control. Interfaces have built-in review workflow processes that allow QA specialists to check the work of annotators, return it for refinement, and measure inter-annotator agreement.
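
Inter-annotator agreement is often reported as Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The sketch below is a minimal illustration for two annotators; the function name and the sample labels are assumptions made for this example, not part of any particular platform.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: share of items where both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement, estimated from each annotator's own label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1.0 - expected)

# Hypothetical labels from two annotators on the same six images.
annotator_1 = ["car", "car", "person", "car", "person", "bike"]
annotator_2 = ["car", "person", "person", "car", "person", "bike"]
kappa = cohens_kappa(annotator_1, annotator_2)
print(f"kappa = {kappa:.3f}")  # kappa = 0.739
```

A value near 1 indicates strong agreement, while a value near 0 means the annotators agree no more often than chance would predict, which usually points to unclear instructions.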

Semi-Automatic and AI-Assisted Annotation

This method increases efficiency by using AI models for data preprocessing, leaving the function of quick editing and verification to humans.

In this approach, the platform uses machine learning models to pre-label data before it reaches a human annotator. The operator only adjusts boundaries or classes, which can be 5-10 times faster than fully manual labeling. Common techniques include:

- Model-in-the-Loop. This is a continuous feedback loop: the auxiliary model labels the data → the human corrects the labels → the corrections are used to retrain or fine-tune the auxiliary model.

- Active Learning. The most important mechanism for efficiency. The system evaluates unlabeled samples, identifies those in which the model is least confident or which contain the highest informational load, and prioritizes sending them to the human for labeling. This ensures that every hour of the annotator's work maximizes the accuracy of the final model.
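
One common way to find the samples where the model is least confident is entropy-based uncertainty sampling. The following is a minimal sketch under that assumption; the sample ids, the probabilities, and the `select_for_labeling` helper are hypothetical, and real platforms may use other selection criteria.

```python
import math

def entropy(probs):
    """Shannon entropy of a predicted class distribution (higher = less confident)."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def select_for_labeling(predictions, budget):
    """Return the ids of the `budget` samples the model is least sure about."""
    ranked = sorted(predictions, key=lambda sid: entropy(predictions[sid]), reverse=True)
    return ranked[:budget]

# Hypothetical model outputs: sample id -> class probabilities.
predictions = {
    "img_001": [0.98, 0.01, 0.01],  # confident prediction, low priority
    "img_002": [0.40, 0.35, 0.25],
    "img_003": [0.70, 0.20, 0.10],
    "img_004": [0.34, 0.33, 0.33],  # almost uniform, highest priority
}
queue = select_for_labeling(predictions, budget=2)
print(queue)  # ['img_004', 'img_002']
```

In a model-in-the-loop setup, the corrected labels for the selected samples would then be fed back into retraining before the next selection round.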

Fully Automated Annotation Pipelines

This methodology aims for minimal or zero human intervention, using advanced generation and self-training methods.

Automated pipelines are fully integrated into MLOps. They are mostly used to create initial, large datasets or to generate data in closed, controlled environments. Key methods include:

- Use of Vision-Language Models. Advanced models can generate descriptions, tags, and even segmentation for images and videos based on text prompts. For example, a model can generate a detailed caption for a medical image, which is later used as an annotation.

- Synthetic Data. Instead of labeling real images, data is generated in a virtual environment. Annotations are provided automatically by the simulator, without human involvement.

- Self-Labeling / Pseudo-Labeling. A model trained on a small amount of labeled data is used to predict labels on a large array of unlabeled data. High-confidence predictions are added to the set for further model training, accelerating its development.
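
The pseudo-labeling step can be sketched as a simple confidence filter. This is an illustrative example only: the `pseudo_label` function, the 0.95 threshold, and the sample data are assumptions, and a suitable threshold depends on the model and the task.

```python
def pseudo_label(predictions, threshold=0.95):
    """Split predictions into accepted pseudo-labels and samples left unlabeled.

    `predictions` maps a sample id to a dict of {class_name: probability}.
    """
    accepted, rejected = {}, []
    for sample_id, class_probs in predictions.items():
        best_class = max(class_probs, key=class_probs.get)
        if class_probs[best_class] >= threshold:
            accepted[sample_id] = best_class  # confident enough to reuse for training
        else:
            rejected.append(sample_id)        # stays unlabeled or goes to a human
    return accepted, rejected

# Hypothetical predictions from a model trained on a small labeled set.
predictions = {
    "scan_01": {"tumor": 0.97, "healthy": 0.03},
    "scan_02": {"tumor": 0.55, "healthy": 0.45},
    "scan_03": {"tumor": 0.02, "healthy": 0.98},
}
accepted, rejected = pseudo_label(predictions)
print(accepted)  # {'scan_01': 'tumor', 'scan_03': 'healthy'}
print(rejected)  # ['scan_02']
```

Lowering the threshold grows the training set faster but also lets more wrong labels through, so the value is usually tuned against a held-out, human-labeled sample.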

These methodologies demonstrate that choosing a tool is primarily a choice of balance between human precision and automated speed.

Data Annotation Platform Evaluation Criteria

In 2025, a data annotation platform is no longer just a "tool for drawing boxes." It has become an integrated part of the MLOps pipeline, requiring deep automation, multimodal support, and flawless quality management.

The annotation landscape is currently becoming more diverse, intelligent, and integrated than ever before. Understanding how these platforms differ and how to choose the right one has become essential for data scientists, AI teams, and enterprises looking to stay competitive in the era of multimodal intelligence.

Tool Functionality and Data Types

A modern platform must be universal and support specialized formats used in cutting-edge research.

- Multimodality. Support for annotating different data types (image, video, text, audio) within a single project or a unified interface. This is crucial for training complex systems that perceive the world holistically.

- 3D Specialization. The presence of specialized annotation tools for working with point clouds, necessary for autonomous driving and robotics.

- Advanced NLP. Support for complex tasks such as Relation Extraction, Coreference Resolution, and Hierarchical Tagging.

- RLHF Support. Functionality for collecting the human feedback used to align LLMs. This includes comparing and ranking model responses.
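
To make the comparison-and-ranking idea concrete, the sketch below turns pairwise human preferences into a ranking by counting wins. It is a deliberately simple illustration; the record layout and the `rank_responses` helper are assumptions, and production RLHF pipelines typically fit a reward model rather than counting wins.

```python
from collections import defaultdict

def rank_responses(comparisons):
    """Rank responses by how often annotators preferred them.

    `comparisons` is a list of (preferred, rejected) response ids.
    """
    wins = defaultdict(int)
    for preferred, rejected in comparisons:
        wins[preferred] += 1
        wins[rejected] += 0  # make sure responses with no wins still appear
    # Most wins first; ties are broken alphabetically for a stable order.
    return sorted(wins, key=lambda r: (-wins[r], r))

# Hypothetical judgments for three candidate answers to one prompt.
comparisons = [("B", "A"), ("B", "C"), ("A", "C")]
ranking = rank_responses(comparisons)
print(ranking)  # ['B', 'A', 'C']
```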

Infrastructure and Integration

The platform must "embed" itself into the company's existing engineering and MLOps stack.

- API/SDK and MLOps Integration. The availability of a powerful API/SDK for automating data upload/download. Integration with Git, DVC, and MLflow for data versioning is important.

- Cloud Compatibility. Seamless connection to popular cloud storage solutions (AWS S3, Google Cloud Storage, Azure Blob Storage) without the need for data duplication.

- Deployment Options. Support for deployment in a private cloud or on owned servers. This is mandatory for companies with strict security requirements.

- Export Formats. Support for all major formats and flexibility in creating custom formats.
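
As an example of what an export step involves, the sketch below converts corner-style bounding boxes into the COCO detection layout, where `bbox` is stored as `[x, y, width, height]`. The input record layout (`x_min`, `y_min`, `x_max`, `y_max`, `label`) and the `to_coco` helper are assumptions for illustration, not the schema of any specific platform.

```python
import json

def to_coco(images, boxes, class_names):
    """Build a COCO-style detection dict from corner-format boxes."""
    categories = [{"id": i + 1, "name": name} for i, name in enumerate(class_names)]
    name_to_id = {c["name"]: c["id"] for c in categories}
    annotations = []
    for ann_id, box in enumerate(boxes, start=1):
        width = box["x_max"] - box["x_min"]
        height = box["y_max"] - box["y_min"]
        annotations.append({
            "id": ann_id,
            "image_id": box["image_id"],
            "category_id": name_to_id[box["label"]],
            "bbox": [box["x_min"], box["y_min"], width, height],  # COCO uses x, y, w, h
            "area": width * height,
            "iscrowd": 0,
        })
    return {"images": images, "annotations": annotations, "categories": categories}

# Hypothetical internal records exported from a labeling project.
images = [{"id": 1, "file_name": "street.jpg", "width": 640, "height": 480}]
boxes = [{"image_id": 1, "label": "car", "x_min": 10, "y_min": 20, "x_max": 50, "y_max": 80}]
coco = to_coco(images, boxes, class_names=["car", "person"])
print(json.dumps(coco["annotations"][0]))
```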

Team and Quality Management

The quality of the annotated data directly affects the model's accuracy.

- Review Pipelines. Multi-level workflows: annotator → QA specialist → ML engineer. The ability to reject and return tasks for refinement.

- Consensus Annotation. Assigning the same task to multiple annotators to measure their agreement. Low agreement is one of the most reliable signals that the instructions are ambiguous.

- Performance Metrics. Detailed reporting on speed, accuracy, and agreement for each team member.

- Instruction Tools. The ability to create detailed, versioned instructions and glossaries accessible directly in the labeling interface.
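
Consensus annotation can be sketched as a majority vote with an agreement threshold: tasks where annotators disagree too much are routed to review instead of being accepted. The `consensus` helper, the 0.6 threshold, and the task data below are assumptions chosen for illustration.

```python
from collections import Counter

def consensus(votes, min_agreement=0.6):
    """Majority-vote a task and flag it for review when agreement is too low."""
    counts = Counter(votes)
    label, top_count = counts.most_common(1)[0]
    agreement = top_count / len(votes)
    accepted = agreement >= min_agreement
    return {
        "label": label if accepted else None,  # no label until a reviewer decides
        "agreement": agreement,
        "needs_review": not accepted,
    }

# Hypothetical tasks, each labeled by three annotators.
tasks = {
    "task_1": ["cat", "cat", "cat"],
    "task_2": ["cat", "dog", "cat"],
    "task_3": ["cat", "dog", "bird"],
}
results = {task_id: consensus(votes) for task_id, votes in tasks.items()}
for task_id, result in results.items():
    print(task_id, result["label"], result["needs_review"])
```

Here `task_3` is flagged for review because no label reaches the threshold, which is the kind of case that often reveals a gap in the instructions.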

Security and Compliance

Given the increase in sensitive data, security requirements are mandatory.

- Certification and Compliance. The presence of security standards and compliance with industry regulations.

- Role-Based Access Control. Clear segregation of access rights for different users.

- De-identification and Anonymization. Built-in functions for automatically or semi-automatically removing Personally Identifiable Information (PII) from data before annotation begins.
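
For text data, a first pass at de-identification can be approximated with pattern matching. The sketch below masks email addresses and phone-like numbers using regular expressions; both patterns are simplified assumptions that will miss some formats and may match unrelated digit sequences, and names or addresses generally need a dedicated entity recognition step.

```python
import re

# Simplified patterns for illustration; real PII detection needs broader coverage.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def redact(text):
    """Replace email addresses and phone-like numbers with placeholder tokens."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text

sample = "Contact Jane at jane.doe@example.com or +1 (555) 010-2345."
redacted = redact(sample)
print(redacted)  # Contact Jane at [EMAIL] or [PHONE].
```

Note that the name "Jane" is left untouched, which is why pattern-based redaction is usually combined with human review before data is released to annotators.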

Key Players in the Annotation Market

The data annotation platform market is divided between universal giants and specialized tools that offer unique technologies. The choice of platform depends on the scale, budget, and, most importantly, the requirements for security and automation.

Enterprise-level

Enterprise platforms, such as Labelbox, Scale AI, and Keymakr, focus on scalability, enterprise-level security, and deep integration into all parts of the AI production cycle. They are ideal for companies with strict compliance requirements (HIPAA, GDPR) and large teams.

For Startups / Researchers

Open-source tools, such as CVAT and Label Studio, are ideal for rapid prototyping, academic projects, and startups that require high flexibility and low initial investment.

Specialized / Niche

Niche platforms, such as Snorkel Flow and Kili Technology, solve specific, often the most complex, problems related to data management and automatic label creation.

When choosing a platform, it is important to understand which one meets the project's business needs, whether that is maximum automation or guaranteed quality and security.

The Future of Annotation Platforms

In 2025, data annotation has definitively ceased to be merely a "preparatory stage" and has transformed into a strategic component of the entire Artificial Intelligence ecosystem. The success of any model, from autonomous driving to medical diagnostics, directly depends on the quality, accuracy, and lack of bias in the labeled data it is trained on.

The Era of Hybrid Solutions and Automation

The key takeaway from this comprehensive guide is that the best annotation solutions are not just the fastest or cheapest tools. They are complex platforms that expertly combine:

- Technology. The application of Active Learning and Model-in-the-Loop to minimize manual labor and maximize efficiency.

- Interface. Convenient, ergonomic UIs that ensure human control and high speed in correcting AI suggestions.

- Human Factor. Reliable quality control workflows, consensus annotation, and multi-layer verification to ensure the highest accuracy in critical sectors.

Advice for Businesses

In the context of increasing data complexity (LiDAR, RLHF, VLM tags) and tightening regulatory requirements, the choice of platform must be carefully considered.

Businesses should choose a platform, guided not only by price but also by these general questions:

- Flexibility and Integration: Can the platform integrate with your MLOps stack? Does it support deployment on-premises or in your private cloud?

- Specialization and Quality: Does the platform have specialized tools for your data type, and does it offer quality guarantees?

- Long-Term Perspective: Does the platform invest in future technologies, such as automatic data generation and tools for LLM alignment?

Ultimately, investment in the right annotation solution is a direct investment in the competitiveness and reliability of the final AI system.

FAQ

What role do annotation tools play in modern AI development?

Annotation tools are the backbone of AI development, transforming raw data into structured information that models can learn from and utilize. The performance of machine learning systems depends directly on the quality of labeling provided by these platforms.

What are the main categories of annotation software?

Annotation software is generally divided into manual, semi-automatic, and fully automated categories. Each level represents a different balance between human precision and machine efficiency.

Why is tool evaluation critical for enterprises?

Tool evaluation ensures that a chosen platform meets requirements for accuracy, scalability, and compliance. Selecting the wrong system can reduce labeling quality and compromise model reliability.

How does semi-automatic annotation improve efficiency?

Semi-automatic annotation utilizes AI-assisted pre-labeling, enabling human annotators to verify and refine results rather than labeling from scratch. This approach accelerates data preparation by up to ten times.

What are the main criteria in platform comparison?

Key factors in platform comparison include multimodal support, integration with MLOps tools, security compliance, and quality management features. A well-balanced platform must combine technical flexibility with robust performance monitoring.

Which annotation tools are best suited for enterprises?

Enterprise-grade platforms, such as Labelbox, Scale AI, and Keymakr, offer robust integration, enterprise-grade security, and managed annotation services. They are ideal for projects requiring large-scale QA and strict regulatory compliance.

What makes open-source labeling platforms appealing to startups?

Open-source tools, such as CVAT and Label Studio, are popular due to their flexibility, free access, and ease of integration into custom pipelines. They allow startups to experiment with tool selection and adapt workflows without high costs.

How do specialized annotation solutions differ from general-purpose tools?

Specialized solutions, such as Snorkel Flow or Kili Technology, target specific use cases, including programmatic labeling and data governance. They emphasize automation, quality control, and scalability over general labeling tasks.

What trends define the future of annotation tools?

The future of annotation tools lies in hybrid automation, human-in-the-loop verification, and advanced data governance. Platforms that merge automation with precise human oversight will dominate the next generation of annotation solutions.
