Abstract of Multimodal Sensor Data Fusion

Fusing multimodal data from sensors such as cameras, LiDAR, and radar is a core component of modern autonomous perception systems. Each sensor has its own strengths: cameras provide detailed visual and semantic information, LiDAR captures accurate 3D scene geometry, and radar detects objects in difficult weather conditions and at long distances. However, differences in data formats, timing, and spatial reference frames make integration difficult.

We consider methods for aligning and integrating heterogeneous sensor data, focusing on calibration, data synchronization, and spatial fusion, and outline key challenges and open issues in the joint use of camera, LiDAR, and radar data.

Key Takeaways

- Aligning camera, LiDAR, and radar data improves object detection and system safety.

- Data-level, feature-level, and decision-level fusion offer different tradeoffs between accuracy and cost.

- Emphasis on calibration, data synchronization, and projection ensures consistent sensor data.

- Representative prior methods guide design choices and serve as benchmarks for comparison.

- Aligned data supports higher-quality annotations, cloud-based workflows, and business-relevant applications.

Camera, LiDAR, and Radar Capabilities and Gaps

A multimodal sensor fusion annotation system combines data from camera, LiDAR, and radar to provide a complete perception of the environment. Each sensor has unique advantages and limitations.

Formalization of Fusion Levels for Heterogeneous Sensors

The fusion of heterogeneous sensor data is formalized through a hierarchy of levels that differ in the degree of abstraction and the moment of information integration. This enables us to systematically describe how data from the camera, LiDAR, and radar are combined, as well as to assess the tradeoffs between accuracy, complexity, and computational cost.

- The early fusion level combines measurements at the sensor signal or point level. This provides maximum information completeness, but it requires precise calibration and synchronization and is computationally expensive.

- The feature fusion level integrates features already extracted from each sensor into a common representation space. This approach is robust to noise and sensor gaps, and preserves a significant portion of the valuable information.

- The late fusion level combines the independent results of models or detectors built for each sensor. It is easy to implement and scale, but it is limited by information lost in previous processing stages.

The formalization of these levels provides a basis for designing multimodal perception systems and selecting the optimal fusion strategy, depending on the task, environmental conditions, and system constraints.
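As an illustration of the calibration that point-level (early) fusion depends on, the following is a minimal sketch of projecting LiDAR points into a camera image with a pinhole model. The function name `project_to_image`, the extrinsic matrix `T_cam_from_lidar`, and the intrinsic matrix `K` are assumptions made for this example, and the numeric values are placeholders rather than a real calibration.

```python
import numpy as np

def project_to_image(points_lidar, T_cam_from_lidar, K):
    """Project Nx3 LiDAR points to pixel coordinates (pinhole model, no distortion).

    Returns the pixel coordinates of the points in front of the camera and a
    boolean mask of length N marking which input points those are.
    """
    n = points_lidar.shape[0]
    homo = np.hstack([points_lidar, np.ones((n, 1))])   # Nx4 homogeneous points
    cam = (T_cam_from_lidar @ homo.T).T[:, :3]          # points in the camera frame
    in_front = cam[:, 2] > 0                            # drop points behind the camera
    uvw = (K @ cam[in_front].T).T                       # apply camera intrinsics
    return uvw[:, :2] / uvw[:, 2:3], in_front           # perspective divide

# Placeholder calibration (assumed values, for illustration only).
K = np.array([[1000.0, 0.0, 640.0],
              [0.0, 1000.0, 360.0],
              [0.0, 0.0, 1.0]])
T = np.eye(4)  # assumes the LiDAR and camera frames coincide

pts = np.array([[0.0, 0.0, 10.0],
                [1.0, -0.5, 20.0],
                [0.0, 0.0, -5.0]])   # the last point lies behind the camera
pixels, mask = project_to_image(pts, T, K)
```

Once each point has a pixel location, image values such as color or semantic class can be attached to it. A real pipeline would also need lens distortion handling and image-bounds checks, which this sketch leaves out.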

Annotation and Alignment Pipeline for Multimodal Sensor Data

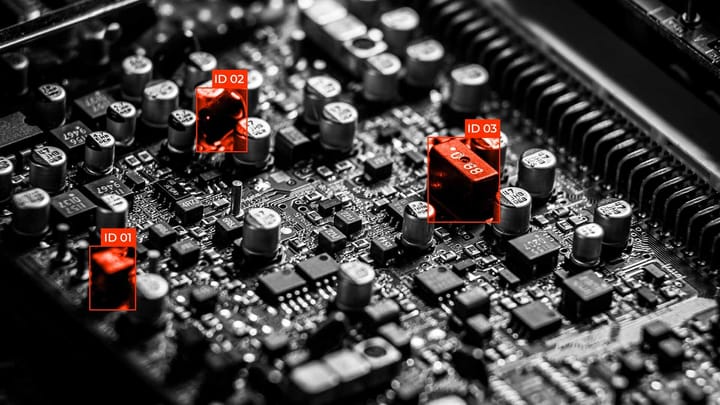

The annotation pipeline for multimodal sensor data is a multi-step process designed to align, synchronize, and semantically label information. Its goal is to create a coherent, consistent dataset suitable for training and evaluating sensor fusion models.
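The synchronization step can be sketched as nearest-timestamp matching between two sensor streams. This is a simplified illustration, not a description of any specific tool: the function `match_nearest`, the tolerance value, and the sample timestamps are all assumptions, and the second stream is assumed to be sorted and non-empty.

```python
import numpy as np

def match_nearest(ref_ts, other_ts, tol):
    """For each reference timestamp, return the index of the nearest timestamp
    in other_ts, or -1 if none lies within tol seconds.

    other_ts must be sorted in ascending order and non-empty.
    """
    ref_ts = np.asarray(ref_ts, dtype=float)
    other_ts = np.asarray(other_ts, dtype=float)
    right = np.searchsorted(other_ts, ref_ts)            # first index with value >= ref
    left = np.clip(right - 1, 0, len(other_ts) - 1)      # neighbor on the left
    right = np.clip(right, 0, len(other_ts) - 1)         # neighbor on the right
    pick_right = np.abs(other_ts[right] - ref_ts) < np.abs(other_ts[left] - ref_ts)
    idx = np.where(pick_right, right, left)
    idx[np.abs(other_ts[idx] - ref_ts) > tol] = -1        # reject matches outside tolerance
    return idx

# Hypothetical timestamps in seconds: LiDAR sweeps and camera frames.
lidar_ts = [0.00, 0.10, 0.20, 0.30]
camera_ts = [0.01, 0.04, 0.08, 0.11, 0.14, 0.29]
pairs = match_nearest(lidar_ts, camera_ts, tol=0.03)
# The third LiDAR sweep (0.20 s) has no camera frame within 30 ms and gets -1.
```

Unmatched sweeps can be dropped or flagged for review before annotation. Where frame rates differ strongly or motion is fast, interpolation or motion compensation may be a better choice than nearest-neighbor matching.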

Multimodal Coding and Compression for Edge and Cloud Workloads

Multimodal coding and compression are designed to transmit and process data from various sensors in distributed systems. This approach enables you to reduce the amount of data transmitted, minimize latency, and decrease the network load.

Multimodal coding is the process of transforming heterogeneous data into a common compact representation that includes features, binarized maps, or latent spaces. This allows you to combine information from multiple sensors and provides compatibility with machine learning algorithms.

Data compression is applied at two levels:

- At the edge, for fast local processing and to minimize the transfer of "heavy" raw data to the cloud;

- In the cloud, for centralized storage and large-scale analysis.

Methods include both lossy and lossless compression, as well as adaptive approaches that preserve information for scene modeling.
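To make the lossy and lossless distinction concrete, here is a minimal sketch that quantizes point cloud coordinates to a fixed grid (lossy) and then compresses the result with `zlib` (lossless). The function names, the 1 cm resolution, and the random test cloud are assumptions chosen for illustration; production codecs for point clouds are considerably more sophisticated.

```python
import zlib
import numpy as np

def compress_points(points, resolution=0.01):
    """Quantize xyz coordinates (metres) to int16 grid steps, then deflate."""
    q = np.round(points / resolution)                     # lossy step: snap to grid
    if np.abs(q).max() > np.iinfo(np.int16).max:
        raise ValueError("coordinates exceed the int16 range at this resolution")
    return zlib.compress(q.astype("<i2").tobytes())       # lossless step

def decompress_points(blob, resolution=0.01):
    """Invert compress_points up to the quantization error."""
    q = np.frombuffer(zlib.decompress(blob), dtype="<i2").reshape(-1, 3)
    return q.astype(np.float32) * resolution

# Synthetic cloud within +/- 50 m (assumed range, not real sensor data).
rng = np.random.default_rng(0)
points = rng.uniform(-50.0, 50.0, size=(10_000, 3)).astype(np.float32)

blob = compress_points(points)
restored = decompress_points(blob)
max_err = float(np.abs(restored - points).max())          # bounded by about resolution / 2
```

The resolution parameter sets the tradeoff: a coarser grid shrinks the payload sent from the edge but increases the reconstruction error available to downstream scene modeling.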

The integration of multimodal coding and compression is essential for modern autonomous driving, robotics, and IoT systems.

Evaluation Protocols: Metrics, Benchmarks, and Ablation

Evaluation protocols for multimodal sensor systems determine how effectively models perceive and integrate data from different sources. They include performance metrics, benchmarks, and ablation studies. Formalized protocols make the assessment of perception systems reliable and reproducible.
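As one example of a detection metric, the sketch below computes intersection over union (IoU) for axis-aligned 2D boxes and derives precision and recall from greedy matching at an IoU threshold. The function names, the 0.5 threshold, and the sample boxes are assumptions for illustration; established benchmarks define their own matching rules and typically use 3D or bird's-eye-view boxes.

```python
def iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))      # overlap width
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))      # overlap height
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def precision_recall(preds, truths, thr=0.5):
    """Greedily match predictions (highest score first) one-to-one to ground truth."""
    matched, tp = set(), 0
    for box, _score in sorted(preds, key=lambda p: -p[1]):
        best_j, best_iou = None, thr
        for j, gt in enumerate(truths):
            v = iou(box, gt)
            if j not in matched and v >= best_iou:
                best_j, best_iou = j, v
        if best_j is not None:                            # true positive
            matched.add(best_j)
            tp += 1
    precision = tp / len(preds) if preds else 0.0
    recall = tp / len(truths) if truths else 0.0
    return precision, recall

# Toy data: two ground-truth boxes, three scored predictions (one false positive).
truths = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [((1, 1, 10, 10), 0.9), ((21, 19, 31, 29), 0.8), ((50, 50, 60, 60), 0.7)]
p, r = precision_recall(preds, truths)
```

An ablation study can reuse the same function: run the model with one sensor or component disabled, recompute the metrics, and compare against the full configuration.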

Advanced Modeling: Transformers, VLM/LLM, and Cross-Modal Attention

Advanced modeling of multimodal data relies on modern AI architectures: transformers, VLMs (Vision-Language Models), and LLMs (Large Language Models). Cross-modal attention mechanisms, which combine and weight the contributions of each modality, play a crucial role in this process.

Transformers offer a self-attention mechanism that enables the model to capture dependencies between data elements, regardless of their spatial or temporal positions. This is useful for processing image sequences, point clouds, and radar signals.

VLMs and LLMs integrate semantic information and context from natural language or other high-level features into the analysis of sensor data. A VLM combines visual and textual representations, while an LLM provides understanding and generation of multi-channel information to interpret the scene or explain the model's decisions.

Cross-modal attention provides selective integration of information from different sensors, allowing the model to focus on relevant features for a specific task. This improves prediction accuracy, compensates for gaps in individual sensors, and provides a more integrated understanding of the scene.
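The core computation can be sketched as scaled dot-product attention in which tokens from one modality act as queries over tokens from another. This is a bare-bones illustration under stated assumptions: the function `cross_attention`, the token counts, and the feature size are hypothetical, the features are random, and the learned query, key, and value projections of a real transformer layer are omitted.

```python
import numpy as np

def cross_attention(queries, keys, values):
    """Scaled dot-product attention: each query row attends over all key/value rows."""
    d = queries.shape[-1]
    logits = queries @ keys.T / np.sqrt(d)                # similarity scores
    logits -= logits.max(axis=-1, keepdims=True)          # numerical stability
    weights = np.exp(logits)
    weights /= weights.sum(axis=-1, keepdims=True)        # softmax over keys
    return weights @ values, weights

# Hypothetical feature tokens: 4 camera tokens query 6 LiDAR tokens (dimension 16).
rng = np.random.default_rng(0)
cam_tokens = rng.normal(size=(4, 16))
lidar_tokens = rng.normal(size=(6, 16))

fused, weights = cross_attention(cam_tokens, lidar_tokens, lidar_tokens)
# Each row of weights sums to 1 and shows how much a camera token draws on each LiDAR token.
```

Because the weights are computed per query, a camera token can lean on LiDAR features where they are informative and rely less on them where they are not, which is the selective integration described above.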

Annotation Quality: Processes, Tools, and Error Reduction

Annotation quality is essential for the efficiency of multimodal systems, as the accuracy and consistency of annotations determine the training and performance of models. Quality maintenance involves a combination of formalized processes, modern tools, and error control methods.

Quality control processes include standardized annotation protocols, multi-level data verification and validation, and feedback loops. This enables the maintenance of consistency and repeatability of annotations across large datasets.

Annotation tools offer interactive and automated mechanisms for rapidly and accurately labeling objects, regions of interest, and events. Modern platforms support both manual annotation and semi-automatic or automatic methods, including interpolation and pre-prediction algorithms.

Error reduction can be achieved through several strategies, including double-checking of annotations by different reviewers, the use of control sets, statistical consistency analysis, and automatic anomaly detection. The combination of these approaches increases the reliability and accuracy of annotations, reduces noise in the data, and provides a foundation for training multimodal models.
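One common form of statistical consistency analysis is an inter-annotator agreement score. The sketch below computes Cohen's kappa for two annotators who labeled the same objects; the function name and the sample labels are made up for illustration, and other agreement measures may suit a given project better.

```python
from collections import Counter

def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa: agreement between two annotators, corrected for chance."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: probability both annotators pick the same class independently.
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1.0:               # both annotators used a single identical class
        return 1.0
    return (observed - expected) / (1.0 - expected)

# Hypothetical class labels assigned to the same six objects by two annotators.
annotator_1 = ["car", "car", "pedestrian", "car", "cyclist", "pedestrian"]
annotator_2 = ["car", "car", "pedestrian", "cyclist", "cyclist", "car"]
kappa = cohen_kappa(annotator_1, annotator_2)
```

A value near 1 indicates strong agreement, while a value near 0 means the annotators agree no more often than chance would predict. Low scores on a control set can point to ambiguous guidelines or classes that need clearer definitions.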

Problems, Limitations, and Open Issues

The efficiency and accuracy of multimodal models depend on the quality of annotations. Therefore, it is essential to identify key problems and apply effective methods to mitigate them.

FAQ

What is the purpose of aligning camera, LiDAR, and radar data in sensor fusion projects?

The purpose is to bring camera, LiDAR, and radar measurements into a single spatiotemporal reference frame for accurate scene analysis.

What are the primary fusion levels, and how do they differ?

The main fusion levels are early, feature-level, and late fusion. They differ in the moment of integration and the degree of information abstraction, from raw to processed.
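To illustrate the most abstract of these levels, here is a minimal sketch of late fusion: detections produced independently by two sensors are matched by distance and their confidence scores are combined with a noisy-OR rule. The function `late_fuse`, the 1 m matching radius, the noisy-OR rule, and the sample detections are assumptions for this example, not a prescribed method.

```python
import numpy as np

def late_fuse(cam_dets, lidar_dets, max_dist=1.0):
    """Fuse two per-sensor detection lists at the decision level.

    Each detection is (x, y, score) in a shared ground-plane frame (metres).
    Detections closer than max_dist are treated as the same object.
    """
    fused, used = [], set()
    for cx, cy, cs in cam_dets:
        best_j, best_d = None, max_dist
        for j, (lx, ly, _) in enumerate(lidar_dets):
            d = float(np.hypot(cx - lx, cy - ly))
            if j not in used and d <= best_d:
                best_j, best_d = j, d
        if best_j is None:
            fused.append((cx, cy, cs))                    # camera-only detection
        else:
            lx, ly, ls = lidar_dets[best_j]
            used.add(best_j)
            score = 1.0 - (1.0 - cs) * (1.0 - ls)         # noisy-OR of the two scores
            fused.append(((cx + lx) / 2, (cy + ly) / 2, score))
    # Keep LiDAR detections that no camera detection matched.
    fused += [d for j, d in enumerate(lidar_dets) if j not in used]
    return fused

# Toy detections: one object seen by both sensors, one by each sensor alone.
cam = [(10.0, 2.0, 0.6), (30.0, -1.0, 0.4)]
lidar = [(10.2, 2.1, 0.8), (50.0, 0.0, 0.7)]
fused = late_fuse(cam, lidar)
```

The fusion step only sees positions and scores, which is why late fusion is simple to implement but cannot recover information discarded by the per-sensor detectors.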

What preprocessing steps improve the robustness of fusion in adverse conditions?

Preprocessing steps, such as sensor calibration, noise filtering, data normalization, and signal synchronization, enhance the robustness of multimodal data fusion in adverse conditions.

What are the common fusion architectures for 3D detection and perception?

Common fusion architectures for 3D detection include early, feature-level, and late fusion, as well as transformer-based and cross-modal attention networks that integrate data from cameras, LiDAR, and radar.

How is performance measured and ablated for fusion models?

The performance of fusion models is evaluated using accuracy and efficiency metrics, and ablation is performed by sequentially disabling sensors or model components.

What quality assurance practices reduce annotation errors in multi-sensor datasets?

Using standardized annotation protocols, double-checking, control sets, and automatic anomaly detection reduces errors in multimodal datasets.

What are the challenges and open issues in sensor data fusion?

The main challenges are synchronization, calibration, noise handling, and data heterogeneity.
