Panoptic Scene Segmentation

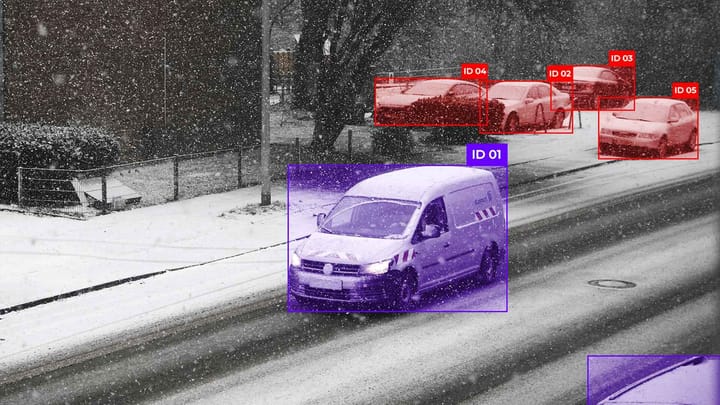

Panoptic scene segmentation combines semantic and instance segmentation, so large continuous areas and individual objects are segmented at the same time. In urban and road environments, this means precisely labeling roadways and sidewalks as well as street objects such as signs, street lights, and benches.

This approach provides the comprehensive scene understanding needed for autonomous driving, accurate mapping, and urban infrastructure management systems.
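Panoptic output gives every point a class and, for countable objects, an instance. The following is a minimal sketch of one way to store that per point. It assumes a hypothetical class table and a packing convention of `class_id * 1000 + instance_id`. Under that convention, "stuff" classes such as road always carry instance 0, and "thing" classes such as signs get one positive ID per object.

```python
# Hypothetical class table: "stuff" classes have no instances, "things" do.
STUFF = {0: "road", 1: "sidewalk"}
THINGS = {10: "sign", 11: "street_light", 12: "bench"}
LABEL_DIVISOR = 1000  # assumed packing: panoptic_id = class_id * 1000 + instance_id


def encode(class_id: int, instance_id: int = 0) -> int:
    """Pack a (class, instance) pair into a single integer label for one point."""
    if class_id in STUFF and instance_id != 0:
        raise ValueError("stuff classes carry no instance id")
    if class_id in THINGS and instance_id <= 0:
        raise ValueError("thing classes need a positive instance id")
    return class_id * LABEL_DIVISOR + instance_id


def decode(panoptic_id: int) -> tuple[int, int]:
    """Recover (class_id, instance_id) from a packed label."""
    return divmod(panoptic_id, LABEL_DIVISOR)


def count_segments(panoptic_ids: list[int]) -> dict[str, int]:
    """Count segments per class: at most one per stuff class, one per thing instance."""
    counts: dict[str, int] = {}
    for pid in set(panoptic_ids):
        class_id, _ = decode(pid)
        name = STUFF.get(class_id) or THINGS[class_id]
        counts[name] = counts.get(name, 0) + 1
    return counts


# Usage: three road points, two signs (2 + 1 points), and one bench point.
labels = [encode(0)] * 3 + [encode(10, 1)] * 2 + [encode(10, 2)] + [encode(12, 1)]
print(count_segments(labels))
```

Packing both IDs into one integer keeps the per-point label array compact. The divisor only has to exceed the largest instance count per class.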

Key Takeaways

- Combining rules with deep learning speeds up object detection and labeling.

- Preprocessing and trajectory estimation are critical for final accuracy.

- The pipeline must scale from road surfaces down to small roadside objects with repeatable results.

- Prioritizing modularity makes it possible to tailor each stage to the requirements of US agencies.

Understanding the data fundamentals

In road environments, laser-scanning data helps create accurate maps, analyze infrastructure, and support the development of autonomous transportation. Understanding the differences between MLS, ALS, and TLS lets you choose the right technology for a specific task.

- MLS (Mobile Laser Scanning) is performed from vehicles equipped with LiDAR sensors, cameras, and GNSS/IMU navigation systems. MLS lets you quickly and thoroughly collect data about the roadway, road markings, signs, barriers, curbs, and adjacent infrastructure under real-world traffic conditions. Its advantages are a dense point cloud and good geometric accuracy at street level. However, data quality depends heavily on the stability of the navigation solution and on GNSS conditions.

- ALS (Airborne Laser Scanning) is LiDAR surveying from aircraft or drones. ALS is used to map regional or national road networks, analyze terrain, slopes, and drainage, and plan infrastructure projects. It provides extensive coverage and is well-suited to macro-level analysis. However, its spatial resolution is insufficient for detailed analysis of road elements, and the overhead viewing angle can limit visibility of vertical structures.

- TLS (Terrestrial Laser Scanning) is stationary, ground-based scanning performed from fixed positions. TLS is used to measure individual sites accurately, including complex intersections, bridges, tunnels, landslide zones, and accident sites. It provides very high detail but is labor-intensive, which makes it less suitable for large-scale data collection along long roads.

Thus, in real road scenarios, these approaches do not compete directly, but rather complement each other. Together, they form a multi-level data foundation necessary for modern transportation systems, HD mapping, and autonomous driving.

Mapping workflow

From acquisition to export, this workflow transforms raw point clouds into functional map layers.

- Scan-to-Twin focuses on semantic models of only the relevant objects. It uses geospatial priors to pre-slice the point cloud and reduce training costs.

- Scan-to-BIM focuses on detailed asset representation with richer attributes.

Use Scan-to-Twin when keeping data volume to a minimum matters; use Scan-to-BIM for comprehensive records and synchronization.
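Pre-slicing with a geospatial prior can be as simple as clipping the cloud to a corridor around a known road centerline. The sketch below makes three assumptions:

- The prior is a 2D centerline polyline taken from an existing map.
- Points and centerline share one projected coordinate system in metres.
- `slice_corridor` and its parameters are hypothetical names, not a library API.

```python
import math


def dist_to_segment(px, py, ax, ay, bx, by):
    """2D distance from point P to segment AB."""
    dx, dy = bx - ax, by - ay
    seg_len2 = dx * dx + dy * dy
    if seg_len2 == 0.0:
        return math.hypot(px - ax, py - ay)
    # Project P onto AB and clamp the parameter to the segment's endpoints.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len2))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def slice_corridor(points, centerline, half_width):
    """Keep (x, y, z) points whose XY position lies within half_width of the centerline polyline."""
    segments = list(zip(centerline, centerline[1:]))
    return [
        (x, y, z)
        for x, y, z in points
        if min(dist_to_segment(x, y, *a, *b) for a, b in segments) <= half_width
    ]


# Usage: an L-shaped centerline and a 2 m half-width corridor.
centerline = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0)]
cloud = [(5.0, 1.0, 0.0), (5.0, 5.0, 0.0), (12.0, 5.0, 0.3), (-3.0, 0.0, 0.0)]
print(slice_corridor(cloud, centerline, half_width=2.0))  # → [(5.0, 1.0, 0.0), (12.0, 5.0, 0.3)]
```

Points outside the corridor never reach training or inference, which is where the cost saving comes from.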

Sequential stages

Divide the pipeline into clear blocks: data acquisition, trajectory refinement, registration, preprocessing, segmentation, feature recognition, geometry extraction, and model generation. Each block should have defined input data and produce audit artifacts.
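The following is a minimal sketch of that modular structure. It assumes each block is a plain function over a list of points. It also assumes the audit artifact is only a record of point counts in and out; real artifacts might be reports, plots, or intermediate files. `Stage`, `Pipeline`, and the two example stages are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Stage:
    """One pipeline block: a name and a function from input data to output data."""
    name: str
    run: Callable[[list], list]


@dataclass
class AuditRecord:
    """A minimal audit artifact: how many items entered and left a stage."""
    stage: str
    n_in: int
    n_out: int


@dataclass
class Pipeline:
    stages: list[Stage]
    audit: list[AuditRecord] = field(default_factory=list)

    def execute(self, data: list) -> list:
        for stage in self.stages:
            out = stage.run(data)
            self.audit.append(AuditRecord(stage.name, len(data), len(out)))
            data = out
        return data


# Usage with two hypothetical stages on (x, y, z) tuples.
pipeline = Pipeline([
    # Preprocessing: drop gross elevation outliers (assumed valid range in metres).
    Stage("preprocessing", lambda pts: [p for p in pts if -5.0 < p[2] < 50.0]),
    # Segmentation: a placeholder height rule standing in for a real segmenter.
    Stage("segmentation", lambda pts: [(*p, "ground" if p[2] < 0.2 else "other") for p in pts]),
])
result = pipeline.execute([(0, 0, 0.0), (1, 0, 0.1), (2, 0, 3.0), (3, 0, -10.0)])
print(result, pipeline.audit)
```

Because each stage only sees a list in and returns a list out, any block can be swapped for another implementation without touching the rest.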

Basics of feature extraction for point clouds

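Reliable features let you separate ground, poles, and small objects in large point clouds.

Classic fits and descriptors:

- RANSAC fits planes and lines in noisy data.

- The Hough transform detects parametric shapes such as curbs or painted stripes.

- PCA of a local neighborhood yields shape descriptors that distinguish linear poles or columns from planar facades.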
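The following is a minimal plain-Python sketch of two of these tools: a RANSAC plane fit, for example for the road surface, and PCA shape descriptors. It assumes small neighborhoods given as lists of (x, y, z) tuples. The linearity, planarity, and scattering formulas follow one common definition based on raw covariance eigenvalues; some pipelines use square-rooted eigenvalues instead. Function names are illustrative.

```python
import math
import random


def covariance(pts):
    """3x3 covariance matrix of a list of (x, y, z) points."""
    n = len(pts)
    mean = [sum(p[i] for p in pts) / n for i in range(3)]
    cov = [[0.0] * 3 for _ in range(3)]
    for p in pts:
        d = [p[i] - mean[i] for i in range(3)]
        for i in range(3):
            for j in range(3):
                cov[i][j] += d[i] * d[j] / n
    return cov


def sym3_eigenvalues(a):
    """Eigenvalues of a symmetric 3x3 matrix, largest first (closed-form trigonometric method)."""
    p1 = a[0][1] ** 2 + a[0][2] ** 2 + a[1][2] ** 2
    if p1 == 0.0:  # already diagonal
        return sorted((a[0][0], a[1][1], a[2][2]), reverse=True)
    q = (a[0][0] + a[1][1] + a[2][2]) / 3
    p = math.sqrt((sum((a[i][i] - q) ** 2 for i in range(3)) + 2 * p1) / 6)
    b = [[(a[i][j] - (q if i == j else 0.0)) / p for j in range(3)] for i in range(3)]
    det_b = (b[0][0] * (b[1][1] * b[2][2] - b[1][2] * b[2][1])
             - b[0][1] * (b[1][0] * b[2][2] - b[1][2] * b[2][0])
             + b[0][2] * (b[1][0] * b[2][1] - b[1][1] * b[2][0]))
    phi = math.acos(max(-1.0, min(1.0, det_b / 2))) / 3
    e1 = q + 2 * p * math.cos(phi)
    e3 = q + 2 * p * math.cos(phi + 2 * math.pi / 3)
    return [e1, 3 * q - e1 - e3, e3]


def shape_descriptors(pts):
    """PCA descriptors from eigenvalues l1 >= l2 >= l3 of the neighborhood covariance."""
    l1, l2, l3 = sym3_eigenvalues(covariance(pts))
    return {"linearity": (l1 - l2) / l1, "planarity": (l2 - l3) / l1, "scattering": l3 / l1}


def ransac_plane(pts, threshold, iterations=200, seed=0):
    """Fit a plane n . x + d = 0 by RANSAC; return (unit normal, d, inlier indices)."""
    rng = random.Random(seed)
    best = (None, None, [])
    for _ in range(iterations):
        p, q, r = rng.sample(pts, 3)
        u = [q[i] - p[i] for i in range(3)]
        v = [r[i] - p[i] for i in range(3)]
        n = [u[1] * v[2] - u[2] * v[1], u[2] * v[0] - u[0] * v[2], u[0] * v[1] - u[1] * v[0]]
        norm = math.sqrt(sum(c * c for c in n))
        if norm < 1e-12:
            continue  # collinear sample: no unique plane
        n = [c / norm for c in n]
        d = -sum(n[i] * p[i] for i in range(3))
        inliers = [k for k, x in enumerate(pts)
                   if abs(n[0] * x[0] + n[1] * x[1] + n[2] * x[2] + d) <= threshold]
        if len(inliers) > len(best[2]):
            best = (n, d, inliers)
    return best


# Usage: a 5 x 5 ground grid at z = 0 plus five points 2 m above it.
ground = [(float(x), float(y), 0.0) for x in range(5) for y in range(5)]
above = [(0.5, 0.5, 2.0), (1.5, 2.5, 2.0), (3.5, 1.5, 2.0), (2.5, 3.5, 2.0), (0.5, 3.5, 2.0)]
normal, d, inliers = ransac_plane(ground + above, threshold=0.05)
print(len(inliers), shape_descriptors(ground))
```

In practice, descriptors are computed for each point over its nearest neighbors and then passed to rules or a classifier.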

Segmentation strategies

Prioritize approaches that process millions of points and preserve geometrically meaningful segments.
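One simple way to pre-aggregate millions of points is to group them into voxels and treat each occupied voxel as a superpoint. Later stages, such as graph construction, then work on far fewer nodes. The sketch assumes a fixed voxel size. Learned or geometry-aware superpoint methods produce better-shaped segments, and the names here are illustrative.

```python
import math
from collections import defaultdict


def voxel_superpoints(points, voxel_size):
    """Group (x, y, z) points by occupied voxel: {voxel_key: [point indices]}."""
    groups = defaultdict(list)
    for idx, p in enumerate(points):
        key = tuple(math.floor(c / voxel_size) for c in p)
        groups[key].append(idx)
    return dict(groups)


def superpoint_centroids(points, groups):
    """One representative per superpoint: the mean of its member points."""
    return {
        key: tuple(sum(points[i][k] for i in members) / len(members) for k in range(3))
        for key, members in groups.items()
    }


# Usage: four points collapse into three superpoints with 0.5 m voxels.
cloud = [(0.1, 0.1, 0.1), (0.4, 0.2, 0.3), (1.2, 0.1, 0.0), (-0.1, 0.0, 0.0)]
groups = voxel_superpoints(cloud, voxel_size=0.5)
print(groups)  # → {(0, 0, 0): [0, 1], (2, 0, 0): [2], (-1, 0, 0): [3]}
```

A single pass with a hash map is linear in the number of points, so this step stays cheap even on very large clouds.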

Region growing and connected components

Region growing and connected components are classical methods in scene parsing and form the foundation for understanding complex road environments. Region growing examines neighboring elements and adds them to a region if they satisfy similarity criteria. The result is a segmentation that follows the natural boundaries of objects, because regions "grow" according to the local structure of the data. In road and 3D scenarios, this approach extracts road surfaces, building facades, and other large planes. However, it is sensitive to noise and depends strongly on the choice of seed points and similarity thresholds.
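The following is a minimal region-growing sketch that assumes a simple height-difference criterion: a neighbor within `radius` joins the current region if its height differs by at most `max_dz`. Seeds are taken from the lowest unlabeled point, which is one seed strategy among many. The brute-force neighbor search is quadratic and is only meant for illustration.

```python
import math
from collections import deque


def region_growing(points, radius, max_dz):
    """Assign a region label to every (x, y, z) point.

    Assumed similarity criterion: a neighbor within `radius` joins the region
    if its height differs from the current point's by at most `max_dz`.
    """
    n = len(points)
    labels = [-1] * n
    # Seed strategy (one of many): start from the lowest unlabeled point.
    order = sorted(range(n), key=lambda i: points[i][2])
    region = 0
    for seed in order:
        if labels[seed] != -1:
            continue
        labels[seed] = region
        queue = deque([seed])
        while queue:
            i = queue.popleft()
            # Brute-force neighbor search (quadratic); use a k-d tree or voxel grid at scale.
            for j in range(n):
                if (labels[j] == -1
                        and math.dist(points[i], points[j]) <= radius
                        and abs(points[i][2] - points[j][2]) <= max_dz):
                    labels[j] = region
                    queue.append(j)
        region += 1
    return labels


# Usage: a flat road strip at z = 0 next to a curb top 15 cm higher.
road = [(x * 0.5, 0.0, 0.0) for x in range(5)]
curb = [(2.5 + x * 0.5, 0.0, 0.15) for x in range(3)]
print(region_growing(road + curb, radius=0.6, max_dz=0.1))  # → [0, 0, 0, 0, 0, 1, 1, 1]
```

The example also shows the threshold sensitivity described above. If `max_dz` were raised to 0.2, the curb would merge into the road region.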

The connected components method finds groups of elements linked by adjacency. In 2D images this is usually 4- or 8-connectivity between pixels; in 3D point clouds it is a spatial neighborhood within a given radius. The algorithm traverses the data and gives all pixels or points in the same connected region a shared label, marking them as a separate object. This approach is well-suited to separating road signs, vehicles, or pedestrians once the ground has been removed, since otherwise the ground connects everything. However, it does not work well when objects touch each other or have weak boundaries.
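The following is a minimal sketch of radius-based connected components (Euclidean clustering) in 3D. It assumes ground points have already been removed. A hash grid with cells of edge `radius` limits the neighbor search to the 27 surrounding cells. Names are illustrative.

```python
import math
from collections import deque
from itertools import product


def euclidean_components(points, radius):
    """Label (x, y, z) points so that chains of neighbors within `radius` share a label."""

    def cell(p):
        # Cubic cells of edge `radius`: any neighbor lies in one of the 27 surrounding cells.
        return tuple(math.floor(c / radius) for c in p)

    grid = {}
    for idx, p in enumerate(points):
        grid.setdefault(cell(p), []).append(idx)

    labels = [-1] * len(points)
    current = 0
    for start in range(len(points)):
        if labels[start] != -1:
            continue
        labels[start] = current
        queue = deque([start])
        while queue:
            i = queue.popleft()
            cx, cy, cz = cell(points[i])
            for dx, dy, dz in product((-1, 0, 1), repeat=3):
                for j in grid.get((cx + dx, cy + dy, cz + dz), []):
                    if labels[j] == -1 and math.dist(points[i], points[j]) <= radius:
                        labels[j] = current
                        queue.append(j)
        current += 1
    return labels


# Usage: a sign (three points) and a bench (two points), ground already removed.
sign = [(0.0, 0.0, 1.0), (0.0, 0.0, 1.3), (0.0, 0.2, 1.5)]
bench = [(5.0, 5.0, 0.5), (5.3, 5.0, 0.5)]
print(euclidean_components(sign + bench, radius=0.5))  # → [0, 0, 0, 1, 1]
```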

Graph cuts and quality check

Graph-cut segmentation represents the data as a graph in which nodes correspond to pixels, superpixels, or points, and edges describe the degree of similarity or connection between them.

The goal is to divide the graph into subgraphs so that connections within segments are strong and connections between segments are weak. Classical approaches such as minimum cut, normalized cut, or multiway cut seek an optimal balance between segment compactness and boundary clarity. In visual and 3D data, these methods capture the global structure of the scene well. They account not only for local connections but also for long-range ones, which helps in complex environments with noise or heterogeneous textures. The value of graph cuts lies in their flexibility: edge weights can encode features such as color, depth, geometry, semantic cues, or even neural network probabilities.

This makes the segmentation more robust to local errors and more consistent with the global regularities of the scene. These methods are computationally expensive, especially for large graphs. In practice, approximations, hierarchical cuts, or pre-aggregation of the data into superpixels or clusters are used.
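The following is a minimal sketch of a binary minimum cut, computed as a maximum flow with the Edmonds-Karp algorithm; by the max-flow/min-cut theorem, the two values are equal. The example assumes a tiny graph of pre-aggregated superpoints plus two terminal nodes, "object" (source) and "background" (sink). Terminal edges carry hypothetical unary scores, and neighbor edges carry hypothetical similarity weights. The dense adjacency matrix keeps the code short but does not scale; large graphs need specialized solvers.

```python
from collections import deque


def min_cut(n_nodes, edges, source, sink):
    """Minimum s-t cut of an undirected weighted graph via Edmonds-Karp max-flow.

    `edges` is a list of (u, v, capacity). Returns (cut value, source-side node set).
    """
    cap = [[0.0] * n_nodes for _ in range(n_nodes)]
    for u, v, w in edges:
        cap[u][v] += w
        cap[v][u] += w  # undirected: capacity in both directions

    def bfs_parents():
        parent = [-1] * n_nodes
        parent[source] = source
        queue = deque([source])
        while queue:
            u = queue.popleft()
            for v in range(n_nodes):
                if parent[v] == -1 and cap[u][v] > 1e-12:
                    parent[v] = u
                    queue.append(v)
        return parent

    flow = 0.0
    while True:
        parent = bfs_parents()
        if parent[sink] == -1:
            break  # no augmenting path left: flow is maximal
        # Bottleneck along the shortest augmenting path, then update residual capacities.
        bottleneck, v = float("inf"), sink
        while v != source:
            bottleneck = min(bottleneck, cap[parent[v]][v])
            v = parent[v]
        v = sink
        while v != source:
            cap[parent[v]][v] -= bottleneck
            cap[v][parent[v]] += bottleneck
            v = parent[v]
        flow += bottleneck
    # Nodes still reachable from the source in the residual graph form the source side.
    side = {v for v, p in enumerate(bfs_parents()) if p != -1}
    return flow, side


# Usage: superpoints 0-3 in a chain; node 4 = "object" terminal, node 5 = "background".
SRC, SNK = 4, 5
edges = [(SRC, 0, 5.0), (SRC, 1, 2.0), (SRC, 2, 1.0),   # unary: object evidence
         (1, SNK, 1.0), (2, SNK, 2.0), (3, SNK, 5.0),   # unary: background evidence
         (0, 1, 3.0), (1, 2, 0.5), (2, 3, 3.0)]         # pairwise: similarity
value, side = min_cut(6, edges, SRC, SNK)
print(value, sorted(side - {SRC}))
```

The cut falls on the weak similarity edge between superpoints 1 and 2. Superpoints 0 and 1 are labeled as the object, and 2 and 3 as background.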

Hybrid PCS + PCSS

The hybrid approach addresses the limitations of rule-based methods and neural models in the segmentation and interpretation of spatial data.

- PCS (Point Cloud Segmentation) relies on geometric rules, heuristics, and a priori expectations of the scene's structure. Such rules provide high interpretability and stable results under well-described conditions, but quickly lose effectiveness in complex or atypical scenes.

- PCSS (Point Cloud Semantic Segmentation) uses neural networks to assign semantic labels to individual points or regions, allowing the model to learn complex patterns without explicit programming, but making the system sensitive to data quality and the distribution of training examples.

Combining these two approaches into a hybrid scheme allows you to leverage the strengths of each. Rules and a priori expectations provide a framework that limits the solution space and reduces false positives. At the same time, neural networks add flexibility and the ability to adapt to the diversity of real scenes.

The advantage of hybrid PCS + PCSS is its robustness in critical scenarios. A priori knowledge filters out physically impossible or unlikely configurations, and neural networks compensate where hand-crafted rules fall short in complex, noisy environments.
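The following is a minimal sketch of one way to fuse the two. Per-point class probabilities, assumed to come from a PCSS network, are masked by rule-based plausibility checks and renormalized before the final label is chosen. The class list, the height thresholds, and the `fuse` function are hypothetical placeholders, not values from a real system.

```python
# Hypothetical class list; in practice the probabilities come from a PCSS network.
CLASSES = ["road", "sidewalk", "pole", "vegetation"]

# Hypothetical PCS rules: is class c plausible at height h (metres above ground)?
RULES = {
    "road": lambda h: h < 0.3,
    "sidewalk": lambda h: h < 0.5,
    "pole": lambda h: True,
    "vegetation": lambda h: True,
}


def fuse(probs, heights, rules=RULES):
    """Zero out rule-violating classes per point, renormalize, and return the argmax label."""
    labels = []
    for p, h in zip(probs, heights):
        masked = [pc if rules[c](h) else 0.0 for c, pc in zip(CLASSES, p)]
        total = sum(masked)
        # If the rules reject every class, fall back to the raw network scores.
        scores = [m / total for m in masked] if total > 0 else list(p)
        labels.append(CLASSES[max(range(len(CLASSES)), key=scores.__getitem__)])
    return labels


# Usage: the same network output at two heights; the prior overrules "road" at 4 m.
net = [[0.6, 0.1, 0.2, 0.1], [0.6, 0.1, 0.2, 0.1]]
print(fuse(net, heights=[4.0, 0.05]))  # → ['pole', 'road']
```

The rules only narrow the label space. Within that space, the network still makes the decision.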

Using geospatial priors for better segmentation

Geospatial priors are a tool for improving segmentation quality. They include information about terrain shape and topology, object orientation, relative height, and spatial relationships between objects.

Geospatial priors are integrated at different levels of data processing. Initially, they are used for normalization and filtering. During segmentation, they define the rules for merging or separating regions, so decisions are based on local features while accounting for the global structure of the space.

The value of this approach lies in increasing segmentation robustness to noise, missing data, and outliers. When sensor data is incomplete or distorted, geospatial priors maintain a realistic interpretation of the scene.

As a result, segments become more stable in time and space, more consistent across different sensors, and easier for humans to interpret.
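A typical normalization step replaces absolute elevation with height above ground, so the same rules work on flat and sloping terrain. The sketch below approximates the ground with the lowest point in each XY grid cell. That is an assumption: it fails under bridges, dense canopy, or in cells without ground returns, where a terrain model prior would be used instead. Names are illustrative.

```python
import math


def height_above_ground(points, cell_size):
    """Approximate each point's height above ground as z minus the lowest z in its XY cell.

    Assumes the lowest return in every cell is ground; this breaks under bridges,
    dense canopy, or cells without ground hits.
    """

    def cell(p):
        return (math.floor(p[0] / cell_size), math.floor(p[1] / cell_size))

    ground = {}
    for p in points:
        key = cell(p)
        ground[key] = min(ground.get(key, math.inf), p[2])
    return [p[2] - ground[cell(p)] for p in points]


# Usage: two 2 m cells on sloping terrain; absolute z differs, normalized height does not.
pts = [(0.5, 0.5, 10.0), (0.6, 0.4, 12.5), (3.0, 0.5, 11.0), (3.2, 0.7, 13.5)]
print(height_above_ground(pts, cell_size=2.0))  # → [0.0, 2.5, 0.0, 2.5]
```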

Solving Common Problems

Understanding the roadscape is the foundation for autonomous driving, mapping, and driver assistance systems. However, processing sensor data in real-world environments poses several common challenges.

FAQ

What is Panoptic Scene Segmentation for road environments?

Panoptic segmentation assigns every pixel or point a semantic class and, for countable objects, an instance ID, giving a complete understanding of the scene. It combines semantic and instance segmentation to distinguish "stuff" from "things." Continuous areas such as road surfaces are labeled by class, while discrete street objects such as signs or benches are also separated into individual instances.

What is the difference between MLS, ALS, and TLS?

MLS collects data from mobile platforms for detailed street mapping, ALS covers large areas from the air for macro analysis, and TLS provides ultra-precise local measurements of critical areas.

Which should you choose, Scan-to-Twin or Scan-to-BIM?

Choose Scan-to-Twin when the goal is accurate, object-oriented digital replicas for operations and monitoring. Choose Scan-to-BIM when you need construction-level detail, as-built verification, and architectural semantics.

What are the main steps in a practical workflow from data acquisition to labeled output?

Typical steps include data acquisition, registration and georeferencing, preprocessing, ground filtering, primitive fitting and feature extraction, pre-segmentation, supervised learning or rule-based classification, and final geometry extraction and quality assurance.

When to use RANSAC, Hough transform, or PCA for feature extraction?

Use RANSAC to fit planes and lines in noisy data. Use the Hough transform to detect parametric shapes such as curbs or painted stripes. Use PCA for local shape descriptors, for example to distinguish linear columns from planar facades.
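The following is a minimal sketch of the Hough transform for straight lines in the XY plane, for example along a painted stripe. It assumes the stripe points have already been isolated, such as by an intensity threshold. The angular and distance resolutions are illustrative defaults, and the function name is hypothetical.

```python
import math
from collections import Counter


def hough_lines(points_xy, theta_steps=180, rho_res=0.1, min_votes=10):
    """Vote in (rho, theta) space for lines x*cos(theta) + y*sin(theta) = rho.

    Returns (rho, theta, votes) for every accumulator bin with at least
    `min_votes`, strongest first (no non-maximum suppression).
    """
    acc = Counter()
    for x, y in points_xy:
        for t in range(theta_steps):
            theta = math.pi * t / theta_steps
            rho = x * math.cos(theta) + y * math.sin(theta)
            acc[(round(rho / rho_res), t)] += 1
    return [(r * rho_res, math.pi * t / theta_steps, votes)
            for (r, t), votes in acc.most_common() if votes >= min_votes]


# Usage: XY points of a painted stripe along y = 2 m.
stripe = [(i * 0.1, 2.0) for i in range(50)]
rho, theta, votes = hough_lines(stripe)[0]
print(round(rho, 2), round(math.degrees(theta), 1), votes)
```

The strongest bin should be at rho = 2 m and theta = 90 degrees with all 50 votes, which is the stripe's line.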

What are the scalable segmentation strategies that work on large point clouds?

Scalable segmentation strategies for large point clouds include hierarchical clustering, superpixel/superpoint approaches, and graph methods with local and global connections.

What is the advantage of hybrid approaches that combine rules and neural networks?

Hybrid approaches combine the stability and interpretability of rules with the flexibility of neural networks and their ability to learn complex patterns.
