Labeling Occluded Objects for Autonomous Systems

For an autonomous car, the ability to recognize hidden objects is one of the most difficult skills that distinguishes a simple program from true intelligence. In the real world, we almost never see objects perfectly whole: a car in front obscures part of a pedestrian, a road sign hides behind tree branches, and a truck in the adjacent lane completely blocks the view of an entire intersection. Such situations are called occlusions, and they are a daily norm of road traffic.

For artificial intelligence, occlusion creates a serious problem because sensors see only fragments of data. If a camera captures only part of a head and a shoulder, the neural network might simply fail to recognize the person, perceiving them as random visual noise. Full blocking is even more insidious: the object physically exists and could step onto the road at any moment, but for the perception system, it becomes "invisible".

This affects safety because, without correct labeling of such instances, the model will not be able to learn to predict danger. If the AI does not understand that a hidden moving object might be behind a stopped bus, it will not be able to reduce speed in time. Therefore, the task of occlusion annotation is to teach the algorithm to "reason out" the hidden parts of objects and be aware of their presence even when they have temporarily disappeared from view.

Quick Take

- Most objects in the city are always partially hidden, and without labeling them, the autopilot will not be safe.

- AI must be taught to see a holistic object, even if only its mirror or wheel is visible.

- Radar sees through obstacles, LiDAR refines the distance, and the camera determines the object type.

- Training is shifting from object recognition to understanding the logic of the entire road situation.

Working with Obstacles and Methods to Overcome Them

For safe movement, a self-driving vehicle must understand the context of the scene when part of the information is hidden from its "eyes". The ability to work with such situations allows the system to avoid accidents even in complex urban conditions.

Types of Obstacles in Real-World Conditions

On the road, occlusion occurs every time one object obscures another. Depending on the situation, the complexity of recognition changes, so several main scenarios are identified:

- Partial Visibility. When we see only part of an object, for example, only the rear lights of a car or the legs of a pedestrian stepping out from behind a truck.

- Full Occlusion. The object completely disappears from the camera's field of view, as happens with "hidden pedestrians" standing directly behind a tall bus.

- Static Obstacles. Elements of urban infrastructure, such as trees, billboards, or cars parked along the curb, create permanent blind spots.

- Dynamic Obstacles. Moving objects, for example, a semi-truck overtaking the self-driving vehicle and completely blocking the view of the adjacent lane for several seconds.

Such situations require special attention during data labeling, as the algorithm must learn to predict the appearance of an object even before it fully emerges.

Tools for "Seeing" Through Obstacles

For effective behind-obstacle detection, autonomous systems use a combination of different sensors. Each has its own advantages that help compensate for the limitations of others in complex scenarios.

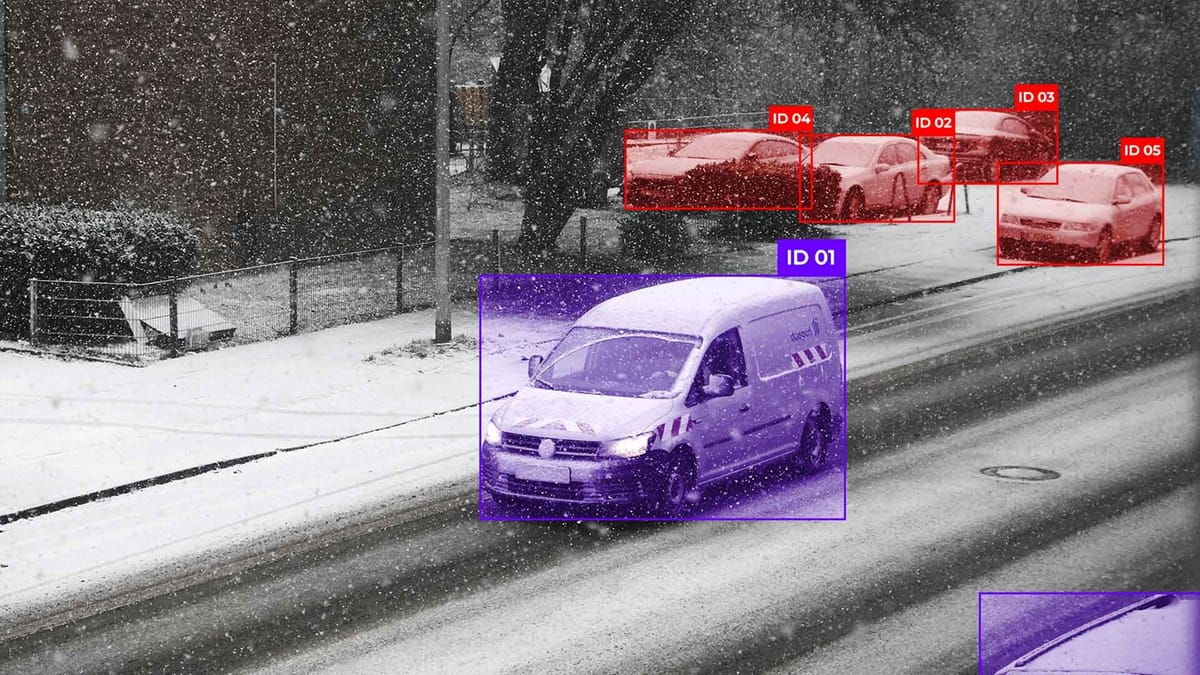

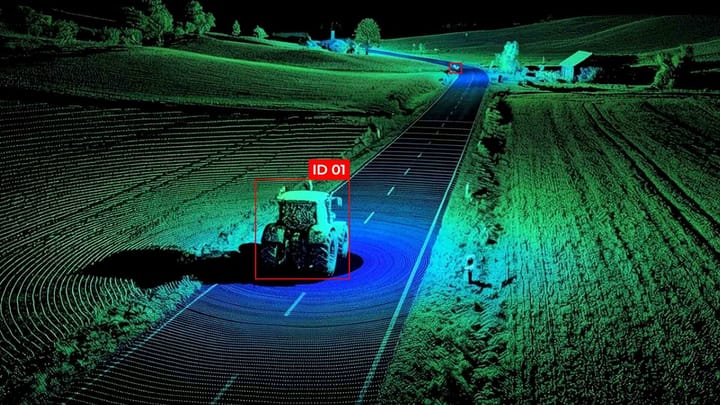

Cameras provide great detail and allow for the identification of an object type by its visible fragment. However, they are the most vulnerable to overlaps. Here, LiDAR comes to the rescue, building an accurate three-dimensional map using laser beams. Since LiDAR emits thousands of pulses, some can pass through small gaps, providing clues about hidden targets. For example, between tree leaves or under the chassis of a large truck

The most stable tool in occlusion handling tasks is radar. Radio waves can bounce off metallic surfaces or pass under cars, detecting movement where a camera sees only the wall of another vehicle. Combining data from these sensors allows the system to create a full picture of the world: the camera recognizes the object, LiDAR refines its exact position, and radar confirms movement even in a blind spot.

Labeling Techniques According to Quality Standards

Correct annotation of occluded objects requires specialists to have not only attentiveness but also a deep understanding of spatial geometry. It is important not just to outline what is visible, but to convey information about the object's real state to the model.

Approaches to Annotating Occluded Objects

The labeling process in situations with obstacles is usually divided into several levels to teach the AI to distinguish between the visible and the hidden.

- Modal and Amodal Annotation. Specialists draw two contours. The first covers only the visible part, while the second reproduces the full shape of the object, which we assume based on logic and dimensions.

- Tracking Over Time. If a car completely disappears behind a van for a few seconds, the annotator continues to track its path in the system. This helps the model not to "forget" about the existence of the threat.

- Special Occlusion Tags. Each object is assigned an occlusion level, such as low, medium, or high. This allows developers to filter data for training different algorithms.

For accurate reproduction of the hidden shape, annotators often use an interpolation function. If we see the beginning and end of an object's movement, the system can automatically calculate its position during the moments it was completely hidden behind an obstacle.

Quality Control and Labeling Consistency

Working with hidden objects always carries a risk of subjectivity, as different people may "reason out" hidden contours differently. To minimize errors, companies implement strict rules and automatic checks:

Impact of Correct Annotation on System Behavior

High-quality occlusion labeling directly affects how smoothly and safely an autonomous car moves. If the model is trained on correct examples, it begins to demonstrate "proactive" behavior.

For example, seeing a bus at a stop, the system accounts for the probability of a pedestrian appearing from behind it in advance. Correct annotation reduces the number of false decisions, such as when a car brakes sharply due to incorrectly estimated object boundaries. Ultimately, this increases passenger trust, as the self-driving vehicle behaves like an experienced driver who knows how to predict the development of a situation even when they cannot see the full picture.

The Future of Hidden Object Annotation

Labeling technologies are evolving from simple outlining of visible pixels to creating deep digital models where the computer understands not only what it sees but also what should be there according to the logic of things.

Latest Labeling Methods and Technologies

The main trend is multi-sensor labeling, where annotators work not with a single video stream, but with a fused data flow. This allows for leveraging the advantages of each sensor: if the camera is blinded or the object is hidden, data from radar or LiDAR helps to accurately establish its position.

Annotation development is moving in the following directions:

- 3D and Temporal Annotation. Instead of drawing boxes on individual frames, specialists create volumetric models that exist in time. This allows the system to see an object as a continuous "track", even if it disappears from view for a long period.

- Synthetic Data. Using perfectly labeled virtual worlds allows training models on thousands of complex occlusions that are difficult to find or safely capture in reality.

- Scene-Oriented Approach. Shifting from labeling individual cars to analyzing the entire scene. The model learns to understand that if a large vehicle has stopped before a crossing, there is a high probability of a pedestrian behind it.

Gradual Automation

The labeling process is becoming increasingly intelligent thanks to the help of AI itself. Modern annotation tools can already independently "complete" the hidden parts of objects, offering the annotator a ready-made version for verification. This significantly speeds up the work and makes results more predictable.

In the future, we will see a transition to systems that can independently assess the degree of danger of a hidden object based on its previous speed and the surrounding context. This will make autonomous vehicles not just attentive, but capable of true analytical thinking on the road.

FAQ

Does synthetic data help in understanding occlusions better?

Yes, in a simulator, we know the exact coordinates of every pixel, even hidden ones. Training a model on such data helps it better guess the hidden elements in the real world.

What is the main difficulty in tracking occlusions in video?

The hardest part is not losing the object ID. When a car goes behind an obstacle, and a similarly colored one emerges from the other side, the system must be sure it is the same car, not a different one.

What is "self-occlusion" and why does it hinder algorithms?

This is a situation where an object obscures itself due to a certain angle. For example, when a long truck turns, its cab may completely block the semi-trailer from the autonomous vehicle's cameras. If the annotator does not label the entire object as a single whole, the car might think there is only a small van in front of it and attempt a maneuver that results in a collision with the trailer.

How do annotators understand that there is one object behind an obstacle, not two?

Movement analysis helps here. If the front part of a blue car peeks out from one side of a truck and the rear part of a similar color peeks out from the other, and they move at the same speed, the annotator merges them into one object. This teaches the AI the logic of the world's integrity.

Are there limitations for radar when "seeing" through objects?

Yes, radar sees well through light materials, such as plastic bumpers or bushes. However, it cannot pass through massive metal objects or reinforced concrete walls. In such cases, the system relies on "memory": it remembers that an object went behind the wall and expects its appearance from the other side.

Comments ()