Labeling Autonomous Vehicle Data in Low Light and Infrared

Labeling data for autonomous vehicles in low-light conditions and with infrared sensing is a crucial step in developing safe autonomous driving systems. Night scenes are hard for sensors: traditional cameras lose contrast and detail, and objects can be poorly visible or distorted by glare and shadows. Infrared sensors partially compensate for these limitations by adding information about the thermal contours of objects and people. However, training autonomous driving models requires not only collecting such data but also annotating it correctly, using infrared annotation techniques that account for the peculiarities of the nighttime environment, incomplete visibility, and the edge cases that affect the system's reliability in real-world conditions.

Key Takeaways

- LED projection reduces depth RMS error across architectures.

- The annotation schema should include depth, segmentation, and edge-case taxonomies adapted for limited visibility.

- Sensor integration and camera improvements are changing the cost and reliability of these systems in real-world use.

- The ethics and design of urban lighting impact safety and environmental outcomes.

Low light, glare, and sensor performance

Night changes how the environment is perceived, by humans and technical systems alike. Low light reduces the available visual information, glare from artificial light sources distorts object contours, and shadows hide details that are obvious during the day. For sensors and computer vision systems, this means lower accuracy, more noise, and the need for different data-processing approaches. Night conditions expose the limits of each sensor most clearly and, at the same time, show how much depends on combining and adapting sensors correctly.

Low-light annotation

Annotating low-light data is one of the hardest steps in preparing datasets for computer vision and sensor systems, especially for low-light detection tasks where objects are barely visible or obscured by shadows and glare. In low light, humans find it harder to interpret a scene unambiguously, so the annotation process needs clear rules, additional context, and thorough quality checks. The goal of annotation is not only to label objects but also to record how uncertain their perception in the dark is.

Label types in low-light scenarios usually go beyond standard bounding boxes or segmentation masks. In addition to object localization, it is essential to indicate their visibility, partial occlusion, level of blur or illumination, and light sources affecting the scene.

Attribute labels are often used to describe the shooting conditions, such as night, dusk, rain, fog, artificial lighting, headlights, or street lamps. They let models distinguish real object properties from artifacts caused by lighting conditions.
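One way to make these label types concrete is a per-frame record that keeps object-level attributes next to scene-level conditions. The sketch below is a minimal illustration, not a standard format: the field names, the `VISIBILITY_LEVELS` and `SCENE_CONDITIONS` vocabularies, and the JSON layout are all hypothetical choices.

```python
import json
from dataclasses import dataclass, field, asdict

# Hypothetical controlled vocabularies; a real project would define its own.
VISIBILITY_LEVELS = {"full", "partial", "barely_visible"}
SCENE_CONDITIONS = {"night", "dusk", "rain", "fog",
                    "artificial_lighting", "headlights", "street_lamps"}


@dataclass
class ObjectLabel:
    category: str
    bbox: tuple                 # (x_min, y_min, x_max, y_max) in pixels
    visibility: str             # one of VISIBILITY_LEVELS
    occlusion: float            # fraction of the object that is hidden, 0.0 to 1.0
    blur: str = "none"          # e.g. "none", "motion", "defocus"
    glare: bool = False         # True if a light source washes out part of the object
    light_sources: list = field(default_factory=list)  # sources affecting this object

    def __post_init__(self):
        # Reject values outside the agreed vocabulary so labels stay consistent.
        if self.visibility not in VISIBILITY_LEVELS:
            raise ValueError(f"unknown visibility level: {self.visibility}")
        if not 0.0 <= self.occlusion <= 1.0:
            raise ValueError("occlusion must be between 0 and 1")


@dataclass
class FrameLabel:
    frame_id: str
    conditions: list                          # scene-level attribute labels
    objects: list = field(default_factory=list)

    def __post_init__(self):
        unknown = set(self.conditions) - SCENE_CONDITIONS
        if unknown:
            raise ValueError(f"unknown scene conditions: {sorted(unknown)}")

    def to_json(self) -> str:
        # asdict() recurses into the nested ObjectLabel instances.
        return json.dumps(asdict(self))


frame = FrameLabel(
    frame_id="seq01_000123",
    conditions=["night", "headlights"],
    objects=[ObjectLabel("pedestrian", (410, 220, 455, 330), "partial", 0.3,
                         glare=True, light_sources=["headlights"])],
)
print(frame.to_json())
```

Keeping visibility and scene conditions as controlled vocabularies rather than free text makes it possible to filter or stratify the dataset later, for example to evaluate a model only on `barely_visible` pedestrians at night.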

Edge cases are significant because they determine the model's reliability in real-world scenarios. These include objects that are only partially visible, pedestrians in dark clothing, objects on the edge of the lighting zone, and scenes with sharp contrast between bright and dark areas.

In such situations, annotation often requires a compromise between accuracy and consistency. Sometimes an object must be labeled even when there are only minimal signs of its presence; at other times it is better to record the uncertainty explicitly or to avoid forcing a rigid class assignment.
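One common way to handle this trade-off is to collect several independent annotations for ambiguous objects and resolve them with explicit rules. The sketch below assumes three or more annotators per object; the function name `resolve_label` and the two-thirds and one-third thresholds are hypothetical and would need tuning on real data.

```python
from collections import Counter


def resolve_label(votes, label_threshold=2 / 3, ignore_threshold=1 / 3):
    """Combine independent annotator votes for one candidate object.

    votes: one entry per annotator, either a category string or None
    when that annotator saw no object. Returns (status, category), where
    status is "labeled", "uncertain", or "no_object".
    """
    if not votes:
        raise ValueError("at least one vote is required")
    seen = [v for v in votes if v is not None]
    presence = len(seen) / len(votes)   # share of annotators who saw something
    if presence < ignore_threshold:
        return "no_object", None
    category, count = Counter(seen).most_common(1)[0]
    agreement = count / len(seen)       # share agreeing on the top category
    if presence >= label_threshold and agreement >= label_threshold:
        return "labeled", category
    # Keep the object but flag it, so training and evaluation can treat it
    # differently, e.g. as an "ignore" region instead of a hard positive.
    return "uncertain", category


print(resolve_label(["pedestrian", "pedestrian", "pedestrian", "cyclist"]))
print(resolve_label(["pedestrian", "cyclist", None]))
print(resolve_label([None, None, None, "pedestrian"]))
```

The "uncertain" status is the explicit record of uncertainty: instead of forcing a class, the object is kept with a flag that downstream code can respect.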

It is precisely the correct handling of these edge cases that makes a dataset suitable for training systems that operate reliably at night and in other difficult conditions.
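Edge cases are easier to balance and audit when they are tagged explicitly. The sketch below derives tags for the edge cases listed earlier from an object's attributes. The rules, the `dark_clothing` attribute, the rectangular `lit_zone` approximation of the lit area, and the 0.8 contrast threshold are all hypothetical; scene contrast is computed here as Michelson contrast of luminance values.

```python
def michelson_contrast(luminances):
    """(L_max - L_min) / (L_max + L_min) over a list of luminance values."""
    lo, hi = min(luminances), max(luminances)
    return (hi - lo) / (hi + lo) if hi + lo > 0 else 0.0


def edge_case_tags(obj, lit_zone, scene_contrast, contrast_threshold=0.8):
    """Return edge-case tags for one annotated object.

    obj: dict with "category", "visibility", "bbox" (x0, y0, x1, y1) and an
    optional "dark_clothing" flag. lit_zone: (x0, y0, x1, y1) rectangle that
    approximates the area lit by headlights or street lamps.
    """
    tags = []
    if obj["visibility"] != "full":
        tags.append("partially_visible")
    if obj["category"] == "pedestrian" and obj.get("dark_clothing", False):
        tags.append("dark_clothing")
    x0, y0, x1, y1 = obj["bbox"]
    lx0, ly0, lx1, ly1 = lit_zone
    overlaps = x0 < lx1 and x1 > lx0 and y0 < ly1 and y1 > ly0
    inside = x0 >= lx0 and y0 >= ly0 and x1 <= lx1 and y1 <= ly1
    if overlaps and not inside:
        # The object straddles the boundary between lit and unlit areas.
        tags.append("lighting_zone_edge")
    if scene_contrast >= contrast_threshold:
        tags.append("high_contrast_scene")
    return tags


pedestrian = {"category": "pedestrian", "visibility": "partial",
              "dark_clothing": True, "bbox": (90, 50, 130, 150)}
contrast = michelson_contrast([2.0, 5.0, 180.0])
print(edge_case_tags(pedestrian, lit_zone=(0, 0, 100, 200), scene_contrast=contrast))
```

Counting these tags per split is a quick way to check that the training and test sets both contain enough hard night cases.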

Nighttime benchmarking

Benchmarking progress at night requires robust datasets, repeatable metrics, and evaluation protocols that reflect real-world road performance. Let's review them:

Metrics and cross-domain protocols

Standard computer vision metrics, such as precision, recall, mAP, or IoU, do not fully reflect real-world performance in low-light conditions. Low-light evaluations therefore use extended or conditional metrics that report results separately for well-lit and poorly lit areas, for partially visible objects, or for different levels of noise and contrast.
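As an illustration of a conditional metric, the sketch below computes recall separately for each lighting stratum. It assumes each ground-truth box already carries a stratum label such as `well_lit` or `poorly_lit` (for example, derived from attribute labels), uses a 0.5 IoU threshold, and matches greedily in ground-truth order; a full mAP implementation would also rank predictions by confidence.

```python
from collections import defaultdict


def iou(a, b):
    """Intersection over union of two (x0, y0, x1, y1) boxes."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def recall_by_stratum(ground_truth, predictions, iou_threshold=0.5):
    """Recall computed separately for each lighting stratum.

    ground_truth: list of (frame_id, box, stratum) tuples.
    predictions: dict mapping frame_id to a list of predicted boxes.
    Each prediction can match at most one ground-truth box.
    """
    hits, totals = defaultdict(int), defaultdict(int)
    used = defaultdict(set)
    for frame_id, box, stratum in ground_truth:
        totals[stratum] += 1
        best_j, best_iou = None, iou_threshold
        for j, pred in enumerate(predictions.get(frame_id, [])):
            if j in used[frame_id]:
                continue
            overlap = iou(box, pred)
            if overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j is not None:
            used[frame_id].add(best_j)
            hits[stratum] += 1
    return {s: hits[s] / totals[s] for s in totals}


ground_truth = [("f1", (0, 0, 10, 10), "well_lit"),
                ("f1", (20, 20, 30, 30), "poorly_lit"),
                ("f2", (0, 0, 10, 10), "poorly_lit")]
predictions = {"f1": [(0, 0, 10, 10)], "f2": [(50, 50, 60, 60)]}
print(recall_by_stratum(ground_truth, predictions))
```

A single pooled recall over these three boxes would be 0.33, which hides the fact that every miss happens in the poorly lit stratum.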

It is important to assess the stability of the model, not just its average accuracy. Robustness metrics show how recognition quality changes as lighting gradually deteriorates, glare appears, or only part of the scene is illuminated. To measure this, performance is plotted as a function of scene brightness, errors are analyzed on edge cases, and false positives and false negatives in night scenes are counted separately. This approach gives a practical picture of how the system behaves in a real environment.
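A brightness-dependent performance curve can be sketched by binning frames on mean luminance and aggregating matched detection counts per bin. The sketch assumes per-frame true-positive, false-positive, and false-negative counts already exist from a matching step; the bin edges are arbitrary, and brightness is approximated with the Rec. 601 luma coefficients (0.299, 0.587, 0.114).

```python
import math


def mean_luma(pixels):
    """Mean Rec. 601 luma of (R, G, B) pixels with channels in 0-255."""
    values = [0.299 * r + 0.587 * g + 0.114 * b for r, g, b in pixels]
    return sum(values) / len(values)


def robustness_curve(frames, bin_edges):
    """Aggregate matched detection counts into scene-brightness bins.

    frames: dicts with "brightness" (mean luma) and per-frame "tp", "fp", "fn"
    counts from an earlier matching step. bin_edges: ascending bin boundaries.
    """
    curve = []
    for lo, hi in zip(bin_edges[:-1], bin_edges[1:]):
        in_bin = [f for f in frames if lo <= f["brightness"] < hi]
        tp = sum(f["tp"] for f in in_bin)
        fp = sum(f["fp"] for f in in_bin)
        fn = sum(f["fn"] for f in in_bin)
        curve.append({
            "bin": (lo, hi),
            "frames": len(in_bin),
            "false_positives": fp,
            "false_negatives": fn,
            # NaN marks bins with nothing to measure rather than a fake 0 or 1.
            "precision": tp / (tp + fp) if tp + fp else math.nan,
            "recall": tp / (tp + fn) if tp + fn else math.nan,
        })
    return curve


frames = [
    {"brightness": mean_luma([(10, 12, 8), (30, 25, 20)]), "tp": 2, "fp": 2, "fn": 2},
    {"brightness": 150.0, "tp": 9, "fp": 1, "fn": 0},
]
for row in robustness_curve(frames, bin_edges=[0, 50, 256]):
    print(row)
```

Reporting false positives and false negatives per bin, rather than only precision and recall, keeps the raw error counts visible for night-specific failure analysis.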

Cross-domain evaluation protocols are designed to assess the model's ability to generalize knowledge across various conditions and data sources. This means training on daytime or well-lit scenes and testing on night scenes, or vice versa.

These protocols enable you to uncover the hidden dependencies of the model on specific shooting conditions and assess its robustness to domain shifts. If performance drops sharply when switching between domains, this signals the need for domain adaptation or dataset expansion.
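A simple way to report this is a train/test domain matrix in which each cross-domain score is compared with the in-domain score of the same training domain. In the sketch below, the scores are made-up placeholders rather than real results, and the 20% relative-drop threshold for flagging a domain gap is an arbitrary assumption.

```python
def cross_domain_report(results, max_relative_drop=0.2):
    """results maps (train_domain, test_domain) to a score such as mAP.

    Each cross-domain score is compared with the in-domain score of the same
    training domain; a large relative drop suggests the model depends on
    domain-specific shooting conditions.
    """
    report = {}
    for (train, test), score in results.items():
        if train == test:
            continue
        reference = results[(train, train)]
        drop = (reference - score) / reference
        report[(train, test)] = {"relative_drop": round(drop, 3),
                                 "needs_adaptation": drop > max_relative_drop}
    return report


# Placeholder scores for illustration only.
scores = {("day", "day"): 0.60, ("day", "night"): 0.36,
          ("night", "night"): 0.50, ("night", "day"): 0.45}
for pair, row in cross_domain_report(scores).items():
    print(pair, row)
```

With these placeholder numbers, the day-trained model loses 40% of its score on night data and is flagged, while the night-trained model loses 10% on day data and is not.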

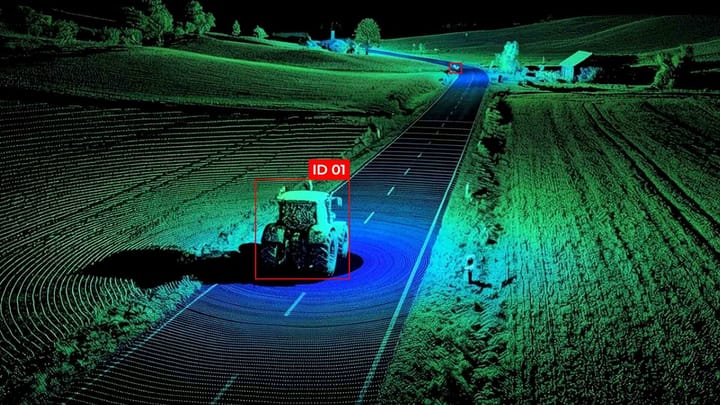

Infrared, thermal, and RGB fusion

Thermal infrared imaging detects heat signatures independently of visible detail. It reliably detects pedestrians and animals in complete darkness and avoids the errors that headlight glare causes in visible-light cameras.

We recommend combining RGB, thermal, and LiDAR to balance range, resolution, and reliability.
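The recommendation above can be illustrated with a late-fusion rule that shifts trust between the RGB and thermal detectors according to scene brightness and uses LiDAR returns as a geometric check. This is a hypothetical scheme for illustration only: the weight range, the `min_points` gate, and the 0.5 penalty are assumptions, and the function assumes detections from the three sensors have already been associated with the same object.

```python
def fuse_confidence(rgb_score, thermal_score, lidar_points, brightness,
                    min_points=5, lidar_penalty=0.5):
    """Hypothetical illumination-aware late fusion for one associated object.

    rgb_score, thermal_score: detector confidences in [0, 1].
    lidar_points: number of LiDAR returns inside the object's 3D box.
    brightness: mean scene luminance normalised to [0, 1].
    """
    b = min(max(brightness, 0.0), 1.0)
    # Never rely on one camera completely: the RGB weight stays in [0.2, 0.8].
    w_rgb = 0.2 + 0.6 * b
    w_thermal = 1.0 - w_rgb
    fused = w_rgb * rgb_score + w_thermal * thermal_score
    # Very few LiDAR returns may indicate a spurious detection (e.g. glare),
    # so confidence is reduced rather than discarded.
    if lidar_points < min_points:
        fused *= lidar_penalty
    return fused


# Dark scene: the RGB detector barely fires, the thermal detector is confident.
print(fuse_confidence(rgb_score=0.1, thermal_score=0.9, lidar_points=12, brightness=0.05))
```

Late fusion is only one option; fusing features earlier in the network is also common, but a score-level rule like this is easy to inspect and tune.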

Depth estimation at night

Traditional supervised learning methods that rely on precisely labeled LiDAR or stereo data remain an important benchmark for quality. Still, their application at night is limited by the high cost of data acquisition and the difficulty of obtaining reliable annotations.
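When LiDAR ground truth is available, depth predictions are usually scored only at pixels with a valid LiDAR return, because projected LiDAR is sparse. The sketch below computes the root-mean-square error (RMSE) and the absolute relative error on flat lists of depths in metres. Treating 0 as "no return" and capping depth at 80 m are assumptions here; the 80 m cap is a convention often used in KITTI-style evaluations.

```python
import math


def depth_errors(pred, gt, max_depth=80.0):
    """RMSE and absolute relative error over pixels with valid ground truth.

    pred, gt: equal-length flat lists of depths in metres; gt == 0 marks
    pixels without a LiDAR return, and gt > max_depth is excluded.
    """
    pairs = [(p, g) for p, g in zip(pred, gt) if 0 < g <= max_depth]
    if not pairs:
        raise ValueError("no valid ground-truth pixels")
    n = len(pairs)
    rmse = math.sqrt(sum((p - g) ** 2 for p, g in pairs) / n)
    abs_rel = sum(abs(p - g) / g for p, g in pairs) / n
    return {"rmse": rmse, "abs_rel": abs_rel, "valid_pixels": n}


print(depth_errors(pred=[10, 20, 5, 100], gt=[12, 20, 0, 90]))
```

Only the first two pixels count here: the third has no LiDAR return and the fourth lies beyond the depth cap.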

Self-supervised approaches that utilize the internal structure of the data, rather than manual labeling, are actively being developed. Such methods learn from the photometric consistency between consecutive frames or between stereo pairs, enabling unsupervised depth estimation.
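The core training signal of these methods is a photometric error between the target frame and neighbouring frames warped into its viewpoint using the predicted depth and camera motion. The sketch below assumes the warping has already been done and shows only the loss. It uses a plain L1 error with a per-pixel minimum over source frames (the minimum-reprojection idea popularised by Monodepth2) and omits the SSIM term and masking that practical implementations usually add.

```python
def min_reprojection_loss(target, warped_sources):
    """Mean per-pixel minimum L1 photometric error.

    target: flat list of pixel intensities in [0, 1] for the target frame.
    warped_sources: list of flat lists, each a neighbouring frame (previous,
    next, or the other stereo view) already warped into the target view.
    Taking the minimum over sources lets a pixel that is occluded in one
    source frame be explained by another.
    """
    if not warped_sources:
        raise ValueError("need at least one warped source frame")
    per_pixel = [min(abs(t - src[i]) for src in warped_sources)
                 for i, t in enumerate(target)]
    return sum(per_pixel) / len(per_pixel)


target = [0.5, 0.5, 0.2]
previous = [0.5, 0.9, 0.2]   # pixel 1 poorly explained by the previous frame
following = [0.1, 0.6, 0.2]
print(min_reprojection_loss(target, [previous, following]))
```

At night, low signal-to-noise ratio and moving light sources weaken this photometric signal, which is one reason the domain adaptation techniques discussed next are often combined with it.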

Domain adaptation is a key trend in depth estimation at night. Instead of training models from scratch on limited nighttime data, daytime datasets are used as a starting point, and models are adapted to the nighttime domain using stylistic transformations, feature alignment, or joint training across multiple domains. This approach preserves the geometric knowledge acquired under favorable conditions and improves robustness to changes in illumination. Combined with self-supervised signals, domain adaptation yields hybrid solutions that are better suited to stable depth estimation in real-world nighttime scenarios.
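Feature alignment can take many forms. One well-known, simple example is the CORAL (correlation alignment) loss, which penalises the difference between the covariance of features extracted from day (source) and night (target) images: the squared Frobenius distance between the two covariance matrices divided by 4d², where d is the feature dimension. Choosing CORAL here is our illustration, not a method prescribed by the text. The plain-Python version below works on small feature batches; in practice it would be computed on network features inside a training framework and added to the depth loss with a weighting factor.

```python
def covariance(features):
    """Sample covariance matrix of a batch given as a list of feature rows."""
    n, d = len(features), len(features[0])
    if n < 2:
        raise ValueError("need at least two samples")
    means = [sum(row[j] for row in features) / n for j in range(d)]
    return [[sum((row[a] - means[a]) * (row[b] - means[b]) for row in features) / (n - 1)
             for b in range(d)]
            for a in range(d)]


def coral_loss(source, target):
    """CORAL loss: squared Frobenius distance between covariances / (4 d^2)."""
    d = len(source[0])
    cs, ct = covariance(source), covariance(target)
    diff = sum((cs[a][b] - ct[a][b]) ** 2 for a in range(d) for b in range(d))
    return diff / (4 * d * d)


day = [[0.0, 0.0], [2.0, 2.0]]
night = [[0.0, 0.0], [1.0, 1.0]]
print(coral_loss(day, night))
print(coral_loss(day, [[10.0, 10.0], [12.0, 12.0]]))
```

The second call returns zero because the shifted batch has the same covariance: CORAL aligns second-order statistics only, so on its own it does not correct a pure offset in feature means.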

Application and impact

The development of methods for perceiving and analyzing scenes in complex lighting conditions has a significant impact on practical applications where errors can have severe consequences. In advanced driver assistance systems (ADAS), object recognition and depth estimation at night underpin timely collision warnings, pedestrian detection, and the correct operation of adaptive headlights. Because the risk of accidents rises after dark, even a modest improvement in nighttime perception accuracy can noticeably improve traffic safety and reduce the number of accidents.

Intelligent traffic signals and traffic management systems benefit from improved night perception models. Cameras and sensors that can operate stably in low light enable a more accurate assessment of traffic intensity, allowing for the detection of congestion or hazardous situations. At night, such systems help compensate for reduced driver attention and improve the overall predictability of the road infrastructure, making it more "sensitive" to the real situation on the road.

For law enforcement agencies, night vision and data analytics open up new opportunities. Automatic detection of suspicious behavior, traffic violations, or dangerous crowds at night reduces the workload on operators and speeds up decisions. At the same time, better algorithms help reduce false positives, which is particularly important under limited visibility and in complex scenes.

FAQ

What physical factors complicate perception after dark?

After dark, perception is complicated by low light, high contrast between light and dark areas, glare, shadows, and increased visual noise.

What annotation tasks are important for low-visibility scenarios?

Important annotation tasks include accurately labeling objects while taking into account partial visibility, noise, glare, and the degree of uncertainty of their contours.

How do depth estimation approaches handle the distribution shift from day to night?

Depth estimation approaches compensate for the day-to-night distribution shift through domain adaptation, stylistic transformations, and self-supervised learning, which help the model generalize geometric information regardless of illumination.

What are the practices for collecting and labeling images and videos after dark?

Nighttime image and video capture and labeling practices include the use of infrared and low-light cameras, multi-sensor setups for precise scene capture, and custom annotation protocols that capture object visibility, glare, and light levels.

What applications benefit most from improved perception after dark?

Autonomous driving systems, smart traffic signals, and security and law enforcement platforms benefit the most from these advancements.
