Annotating Autonomous Vehicles in Adverse Weather: Rain, Fog & Snow Scenarios

The development of autonomous vehicles is a key area of modern research in AI and computer vision. A primary condition for the safe operation of such systems is the ability to perceive the environment accurately under varied operating conditions. Adverse weather, particularly rain, fog, and snow, remains especially challenging for autonomous vehicles because it degrades sensor data and reduces the accuracy of object recognition.

Standard annotation approaches often overlook the specific challenges of degraded weather, which can compromise the reliability of systems in real-world scenarios. There is therefore a growing need for adapted annotation methods that reflect the complexity of the visual environment under atmospheric interference.

Key Takeaways

- Consistent labels directly improve perception and detection in tough conditions.

- Large datasets exist but vary in density, modality, and label quality.

- Reduced visibility, rain and snow streaks, and texture loss make object labeling challenging.

- Domain shift from clear to harsh scenes reduces model generalization.

- Clear class definitions and occlusion rules are essential for fair evaluation.

Why annotation under rain, fog, and snow remains a bottleneck for autonomous driving perception

Adverse weather conditions, such as rain, fog, and snow, pose significant challenges for autonomous vehicle perception systems. A main reason is low visibility, which makes it difficult to distinguish road infrastructure, pedestrians, and other road users. Under such conditions, even high-quality sensor data can lose much of its information content, which directly affects the annotation process.

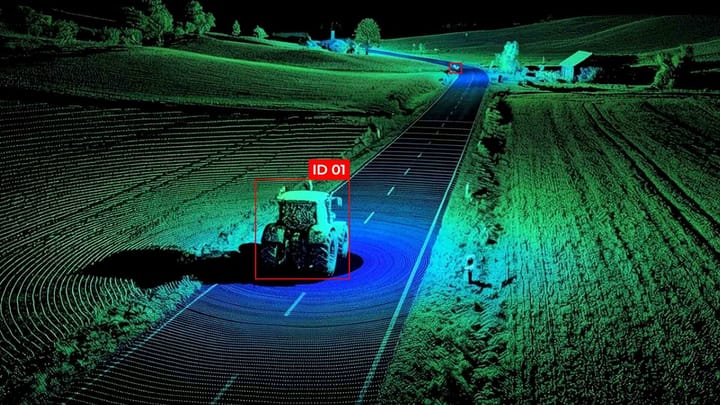

An additional factor is sensor degradation caused by atmospheric phenomena, which distorts the output of cameras, LiDAR, and radar. This makes accurate and consistent annotation difficult: object boundaries become blurred, and parts of a scene are ambiguous even for expert annotators. As a result, the risk of errors in training datasets increases.
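One way to make annotation consistency measurable is to have two annotators label the same objects and compare their bounding boxes with intersection-over-union (IoU). The sketch below assumes boxes in `(x_min, y_min, x_max, y_max)` pixel format and already-matched pairs (one box per annotator per object); the 0.7 threshold and the helper names are hypothetical choices for illustration, not an established standard.

```python
from typing import List, Sequence, Tuple

# Assumed box format: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def box_iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix_min, iy_min = max(a[0], b[0]), max(a[1], b[1])
    ix_max, iy_max = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def flag_disagreements(pairs: Sequence[Tuple[Box, Box]], threshold: float = 0.7) -> List[int]:
    """Return indices of matched pairs (same object, two annotators) whose IoU is below
    the (hypothetical) agreement threshold, so they can be sent back for review."""
    return [i for i, (a, b) in enumerate(pairs) if box_iou(a, b) < threshold]


pairs = [
    ((10, 10, 50, 50), (12, 11, 49, 52)),        # close agreement
    ((100, 100, 140, 160), (100, 120, 150, 190)),  # blurred object, boxes diverge
]
print(flag_disagreements(pairs))
```

In foggy or rainy frames, the share of flagged pairs per weather condition gives a rough signal of where labeling guidelines need tightening.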

Since annotation quality directly determines the effectiveness of perception models, these limitations become a serious bottleneck in the development of autonomous systems. Overcoming them is a prerequisite for weather-robust models that function reliably in real-world road scenarios.

State of the art in adverse weather perception: from domain adaptation to reference-free frameworks

One of the most common research directions is domain adaptation, which aims to reduce the gap between the source and target data domains. Such approaches can partially compensate for sensor degradation by using synthetic data and transferring knowledge across weather scenarios. Their effectiveness, however, largely depends on the availability of reference data or paired samples.
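As a concrete illustration of how simple a domain adaptation step can be, the sketch below shows the idea behind adaptive batch normalization (AdaBN): features are normalized with statistics computed on unlabeled target-domain data (for example, foggy frames) instead of the source (clear-weather) statistics stored during training. This is one well-known unsupervised variant chosen for illustration, not the method of any particular framework; a real network would re-estimate these statistics for every normalization layer, and the function names here are hypothetical.

```python
from statistics import fmean, pstdev
from typing import List, Sequence, Tuple


def channel_stats(features: Sequence[Sequence[float]]) -> Tuple[List[float], List[float]]:
    """Per-channel mean and population standard deviation of equal-length feature vectors."""
    channels = list(zip(*features))
    return [fmean(c) for c in channels], [pstdev(c) for c in channels]


def adabn_normalize(target_features: Sequence[Sequence[float]], eps: float = 1e-5) -> List[List[float]]:
    """Normalize target-domain features with target-domain statistics (AdaBN idea).
    The source-domain statistics are simply discarded for this layer."""
    means, stds = channel_stats(target_features)
    return [
        [(x - m) / (s + eps) for x, m, s in zip(vec, means, stds)]
        for vec in target_features
    ]


# Hypothetical 2-channel activations collected from foggy frames.
foggy = [[1.0, 10.0], [3.0, 30.0]]
print(adabn_normalize(foggy))  # each channel is re-centred and re-scaled
```

The appeal of this variant is that it needs only unlabeled target images, which are far cheaper to collect in bad weather than annotated ones.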

Reference-free frameworks offer an alternative: they do not require exact matches between scenes captured under different weather conditions. Because they train models without depending on ideal references, they are better suited to real-world operating conditions, and they are increasingly seen as a promising route to weather-robust models that adapt to a wide range of atmospheric influences.

Core datasets used in adverse weather research and annotation practice.

Object detection under harsh weather via transfer learning and dataset merging

Object detection for autonomous vehicles in adverse weather remains a significant challenge in modern machine vision. Low visibility during rain, fog, or snow, together with sensor degradation caused by atmospheric phenomena, significantly complicates the accurate detection of pedestrians, vehicles, and road signs.

One effective approach to overcoming these limitations is transfer learning: models trained on large datasets collected under standard conditions are adapted to adverse weather scenarios. This reduces the amount of annotated rainy, foggy, or snowy data required, and it can also improve the models' robustness to sensor degradation.
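The simplest form of transfer learning keeps a pretrained feature extractor frozen and trains only a small new head on the scarce adverse-weather labels. The dependency-free sketch below illustrates that pattern: `frozen_backbone` is a hypothetical stand-in for a detector backbone pretrained on clear-weather data (in practice a CNN whose weights are not updated), and only a logistic-regression head is fitted with plain SGD. The toy samples and hyperparameters are invented for illustration.

```python
import math
from typing import List, Sequence, Tuple


def frozen_backbone(x: Sequence[float]) -> List[float]:
    """Hypothetical stand-in for a pretrained feature extractor; its 'weights' never change."""
    return [x[0], x[1], x[0] * x[1]]


def train_head(samples: Sequence[Tuple[Sequence[float], int]],
               epochs: int = 200, lr: float = 0.1) -> Tuple[List[float], float]:
    """Fit only a logistic-regression head on frozen features (feature-extraction transfer)."""
    dim = len(frozen_backbone(samples[0][0]))
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        for x, y in samples:
            f = frozen_backbone(x)  # backbone output is used as-is, never updated
            z = sum(wi * fi for wi, fi in zip(w, f)) + b
            p = 1.0 / (1.0 + math.exp(-z))
            g = p - y  # gradient of the log-loss with respect to z
            w = [wi - lr * g * fi for wi, fi in zip(w, f)]
            b -= lr * g
    return w, b


def predict(w: List[float], b: float, x: Sequence[float]) -> int:
    z = sum(wi * fi for wi, fi in zip(w, frozen_backbone(x))) + b
    return 1 if z > 0 else 0


# Tiny, invented "adverse weather" set: label 1 = object present.
samples = [((0.9, 0.8), 1), ((0.1, 0.2), 0), ((0.8, 0.9), 1), ((0.2, 0.1), 0)]
w, b = train_head(samples)
print([predict(w, b, x) for x, _ in samples])
```

A common middle ground in practice is to start with a frozen backbone and later unfreeze its last stages at a lower learning rate once more adverse-weather labels are available.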

Another approach is dataset merging: combining multiple datasets recorded under different weather conditions to build a more diverse and representative training set. This supports weather-robust models that operate effectively across a wide range of weather scenarios and maintain high accuracy even in low visibility.
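In practice, the hardest part of dataset merging is usually reconciling label taxonomies, since each dataset names and splits classes differently. The sketch below maps every source's labels onto one shared taxonomy, keeps a weather tag and the source name on each record, and counts classes that have no mapping. The dataset names, class names, and record fields are hypothetical; real mappings must be built from each dataset's own documentation.

```python
from typing import Dict, List, Tuple

# Hypothetical per-source label maps onto a shared taxonomy.
LABEL_MAPS: Dict[str, Dict[str, str]] = {
    "dataset_a": {"car": "vehicle", "truck": "vehicle", "person": "pedestrian"},
    "dataset_b": {"Car": "vehicle", "Pedestrian": "pedestrian", "Cyclist": "cyclist"},
}


def merge_datasets(sources: Dict[str, List[dict]]) -> Tuple[List[dict], int]:
    """Merge records from several sources into one list with unified labels.

    Each record is assumed to be a dict with 'image', 'label' and optional 'weather'.
    Classes missing from the source's label map are dropped and counted, so the
    merged set never silently mixes incompatible taxonomies."""
    merged, dropped = [], 0
    for name, records in sources.items():
        mapping = LABEL_MAPS[name]
        for rec in records:
            unified = mapping.get(rec["label"])
            if unified is None:
                dropped += 1
                continue
            merged.append({
                "image": rec["image"],
                "label": unified,
                "weather": rec.get("weather", "unknown"),
                "source": name,  # kept so results can later be broken down per source
            })
    return merged, dropped


sources = {
    "dataset_a": [
        {"image": "a1.png", "label": "car", "weather": "rain"},
        {"image": "a2.png", "label": "traffic light", "weather": "fog"},
    ],
    "dataset_b": [{"image": "b1.png", "label": "Pedestrian", "weather": "snow"}],
}
merged, dropped = merge_datasets(sources)
print(len(merged), dropped)
```

Keeping the `source` field makes it possible to check later whether a model's gains in bad weather come from one dataset in particular.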

Designing training curricula for mixed-weather conditions

Conventional datasets collected in favorable weather often fail to reflect real-world complexity such as rain, fog, snow, or their combinations, which reduce visibility and degrade sensor output. This degradation makes it difficult to accurately detect, segment, and assess objects in the environment.

To cover a variety of weather scenarios, datasets should include scenes with different intensities of rain, fog, and snow, as well as combinations of these phenomena, since each scenario affects sensor data differently. Balancing clear and adverse weather helps maintain model performance in both and yields a more representative training set. Synthetic weather effects and data augmentations that simulate low visibility and sensor degradation are widely used for this purpose.
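One way to enforce the balance between clear and adverse weather during training is to sample scenes with per-sample weights instead of uniformly. The sketch below, which assumes each scene carries a single weather tag, assigns weights so that in expectation clear scenes make up a chosen share of each batch and the remaining share is split evenly across the adverse conditions present. The 50/50 default is an illustrative assumption, not a recommended value.

```python
from collections import Counter
from typing import Dict, List, Sequence


def balanced_weights(conditions: Sequence[str], clear_share: float = 0.5) -> List[float]:
    """Per-sample sampling weights, assuming one weather tag per scene.

    Clear scenes jointly receive `clear_share` of the sampling mass; the rest is split
    evenly across the adverse conditions that occur (e.g. rain, fog, snow), so a rare
    condition is not drowned out by a frequent one."""
    counts = Counter(conditions)
    adverse = [c for c in counts if c != "clear"]
    target: Dict[str, float] = {"clear": clear_share} if "clear" in counts else {}
    for c in adverse:
        target[c] = (1.0 - clear_share) / len(adverse)
    # Divide each condition's target mass evenly among its samples.
    return [target[c] / counts[c] for c in conditions]


conditions = ["clear"] * 6 + ["rain"] * 2 + ["fog", "snow"]
weights = balanced_weights(conditions)
print([round(w, 3) for w in weights])
```

The resulting list can be passed to any weighted sampler, for example `random.choices(range(len(conditions)), weights=weights, k=batch_size)` from the standard library, or PyTorch's `WeightedRandomSampler`. Raising the adverse share gradually over epochs is one simple way to turn this into a training curriculum.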

Combining multiple datasets with different weather conditions (dataset merging) expands scenario coverage and increases overall model robustness. Transfer learning lets pre-trained models adapt to rare or extreme weather without a large amount of new annotated data. Correct annotation matters as well: object boundaries that are fuzzy, partially hidden, or noisy because of weather require standardized rules that keep the dataset consistent.
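Standardized rules are easiest to keep when they are written as checks that run on every exported label. The sketch below encodes a hypothetical rule set: each box carries an occlusion level and an `ignore` flag (meaning "exclude from loss and evaluation" rather than "background"), and boxes that are too small or heavily occluded must be marked ignore. The field names, occlusion levels, and 10-pixel minimum height are illustrative assumptions, not an established annotation standard.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical rule set; thresholds are illustrative only.
OCCLUSION_LEVELS = ("none", "partial", "heavy")
MIN_BOX_HEIGHT_PX = 10


@dataclass
class BoxLabel:
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    occlusion: str = "none"  # one of OCCLUSION_LEVELS
    ignore: bool = False     # excluded from loss/evaluation, not treated as background


def validate(box: BoxLabel) -> List[str]:
    """Return the rule violations for one box; an empty list means the box passes."""
    errors = []
    if box.x_max <= box.x_min or box.y_max <= box.y_min:
        errors.append("degenerate box")
    if box.occlusion not in OCCLUSION_LEVELS:
        errors.append(f"unknown occlusion level: {box.occlusion}")
    if box.y_max - box.y_min < MIN_BOX_HEIGHT_PX and not box.ignore:
        errors.append("box below minimum height must be marked ignore")
    if box.occlusion == "heavy" and not box.ignore:
        errors.append("heavily occluded box must be marked ignore")
    return errors


print(validate(BoxLabel("pedestrian", 0, 0, 20, 60, occlusion="heavy")))
```

Marking hard boxes as ignore instead of deleting them keeps the model from being penalized for detecting a fog-obscured pedestrian while still not forcing it to learn from an unreliable label.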

Domain adaptation, domain generalization, and domain transfer in adverse weather

Annotation strategies that improve cross-weather generalization

- Multi-weather annotation: including scenes with different weather conditions in training datasets exposes models to a wide range of conditions, which supports cross-weather generalization.

- Hard-case labeling: paying special attention to objects that are barely visible or partially lost to sensor degradation helps produce more accurate weather-robust models.

- Annotation consistency: standard rules for labeling objects that are partially hidden or noisy due to weather effects reduce errors and keep training stable across adverse weather scenarios.

- Synthetic weather augmentation: annotated scenes with synthetic rain, fog, or snow supplement real datasets and improve model robustness to sensor degradation and low visibility.

- Multi-sensor annotation: combining camera, LiDAR, and radar data during annotation provides more reliable supervision for models that must cope with different types of sensor degradation.
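Synthetic fog is a good example of augmentation that reuses existing labels. A widely used approach applies the atmospheric scattering model I = J·t + A·(1 − t) with transmission t = exp(−β·d), where J is the clear image, d the per-pixel distance, β the attenuation coefficient, and A the airlight (fog brightness). The sketch below applies it to a grayscale image stored as nested lists; it assumes a depth value is available for every pixel (for example from stereo or projected LiDAR), and the default β and A are illustrative, not calibrated values.

```python
import math
from typing import List, Sequence


def add_fog(image: Sequence[Sequence[float]], depth: Sequence[Sequence[float]],
            beta: float = 0.02, airlight: float = 0.8) -> List[List[float]]:
    """Homogeneous synthetic fog via the atmospheric scattering model.

    image: 2-D intensities J in [0, 1]; depth: same-shape 2-D distances d in metres.
    beta (1/m) and airlight are illustrative defaults, not calibrated values."""
    fogged = []
    for img_row, depth_row in zip(image, depth):
        row = []
        for j, d in zip(img_row, depth_row):
            t = math.exp(-beta * d)  # transmission: fraction of scene light that survives
            row.append(j * t + airlight * (1.0 - t))  # attenuated scene + scattered airlight
        fogged.append(row)
    return fogged


clear = [[0.2, 0.2, 0.2]]
depth = [[0.0, 50.0, 1e4]]  # near, mid-range, and very distant pixels
print(add_fog(clear, depth))  # values drift from 0.2 toward the airlight with distance
```

Because the geometry of the scene is unchanged, existing boxes and masks remain valid for the fogged image; only visibility labels may need updating. By Koschmieder's law, the meteorological visibility is roughly 3/β, so β = 0.02 m⁻¹ corresponds to about 150 m.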

Summary

Developing autonomous vehicles that operate reliably in adverse weather requires a comprehensive approach to data preparation and model training. Low visibility and sensor degradation in rain, fog, or snow significantly complicate perception and the processing of sensor data. Effective solutions include building balanced and diverse datasets, merging existing datasets, and using knowledge transfer methods that let models adapt to new weather scenarios without large amounts of additional annotated data. Proper annotation strategies, particularly multi-weather and multi-sensor annotation combined with an emphasis on hard scenes, support weather-robust models that work across a wide range of conditions. Taken together, these approaches make systems more resistant to adverse environmental factors, improve object perception, and can significantly improve the safety of autonomous driving in real-world road conditions.

FAQ

Why is object detection challenging under adverse weather?

Adverse weather conditions, such as rain, fog, and snow, create low visibility and cause sensor degradation, making it difficult for autonomous vehicles to accurately detect pedestrians, vehicles, and road signs.

What role do training datasets play in handling adverse weather?

Well-designed datasets expose models to a variety of weather conditions, allowing them to learn robust features that improve performance under low visibility and sensor noise.

How does domain adaptation help in adverse weather perception?

Domain adaptation transfers knowledge from a source domain with favorable conditions to a target domain with challenging weather conditions, mitigating the effects of sensor degradation.

What is the purpose of domain generalization?

Domain generalization trains models to perform reliably in unseen weather scenarios without requiring further adaptation, thereby contributing to weather-robust models that can handle low visibility.

How does dataset merging improve model robustness?

Merging multiple datasets from different weather conditions increases diversity and coverage, helping models generalize and resist sensor degradation across varied adverse weather conditions.

What are key annotation strategies for cross-weather generalization?

Multi-weather and multi-sensor annotation, consistent labeling of occluded objects, and synthetic augmentation help models handle low visibility and form weather-robust models.

Why is transfer learning effective for adverse weather scenarios?

Transfer learning enables models trained on clear weather data to adapt efficiently to rare or extreme conditions, thereby reducing the need for large annotated datasets for adverse weather conditions.

How does sensor degradation affect perception models?

Rain, fog, and snow can distort camera images, reduce LiDAR returns, and introduce radar noise, which lowers the accuracy of models that are not trained to be weather-robust.

What advantages do synthetic weather datasets provide?

Synthetic augmentation simulates challenging weather conditions, increasing dataset diversity and enhancing model resilience to low visibility and sensor degradation, without the need for costly real-world collection.

How do weather-robust models improve autonomous driving safety?

By integrating diverse datasets, robust annotation, and adaptation techniques, weather-robust models maintain accurate perception in adverse conditions, thereby reducing risk in low-visibility scenarios.
