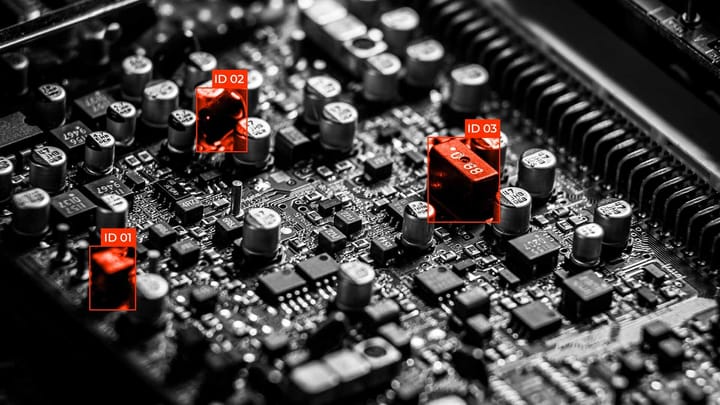

AI for Real-Time Defect Detection on Edge Devices

Artificial intelligence is already widely used in quality control to find even the smallest defects. However, if this traditional AI runs in the cloud, each photo must first be sent over the internet to a remote server and processed there, and the decision must then be sent back to the factory.

This round trip creates a delay. In the world of high-speed manufacturing, where decisions must be made in milliseconds, such a delay can be critical. If the AI is even a moment late, faulty parts may slip further down the line, resulting in significant losses.

The primary goal of edge AI is to take a powerful, complex AI model trained on vast amounts of data and run it on a small, resource-constrained device right next to the production line, so that each decision is made locally within milliseconds. This enables reliable, fast, and autonomous quality control that does not depend on an internet connection.

Quick Take

- AI models trained in the cloud must be compressed and optimized to fit in the device's limited memory and run fast on its hardware.

- The full edge AI cycle includes three stages: frame capture, instant AI inference, and physical action by the actuator.

- Model accuracy declines over time due to changes in lighting or materials, necessitating active monitoring for timely retraining.

- To save bandwidth, the edge device sends only two kinds of frames to the cloud: frames with confirmed defects and frames where the AI has low confidence.

- For reliable quality control, different sensors are combined: RGB for visible defects, IR for heat leaks, and depth for checking geometry.

AI Model Requirements on Edge Devices

When we move AI from a powerful data center to a small device next to the conveyor belt, the model must meet very strict demands. It is like transplanting the brain of a large computer into something the size of a smartphone. This move is what makes the device part of a smart factory.

Speed and Lightness

The primary requirement is that the model must be lightweight and fast. For defect detection on the production line, edge AI must process each frame in milliseconds. Therefore, you cannot use large, "heavy" models that take a long time to compute.
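To make "milliseconds" concrete, the per-frame time budget follows directly from the line rate. This minimal sketch assumes one frame per part. It also assumes a hypothetical fixed overhead for capture and actuation. The numbers in the example are illustrative, not measurements.

```python
def inference_budget_ms(parts_per_minute: float, overhead_ms: float) -> float:
    """Time left for model inference per part, in milliseconds.

    Assumptions (hypothetical): one frame per part, and a fixed overhead_ms
    reserved for image capture, transfer, and actuation.
    """
    if parts_per_minute <= 0:
        raise ValueError("parts_per_minute must be positive")
    time_per_part_ms = 60_000.0 / parts_per_minute  # 60,000 ms in one minute
    budget = time_per_part_ms - overhead_ms
    if budget <= 0:
        raise ValueError("overhead alone exceeds the time available per part")
    return budget


if __name__ == "__main__":
    # 600 parts/min leaves 100 ms per part; reserving 40 ms for capture
    # and actuation leaves 60 ms for inference.
    print(inference_budget_ms(600, 40))
```

Doubling the line rate halves the time per part, which is why faster lines push teams toward smaller models.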

Optimized architectures are chosen to accomplish the task with minimal resources. Examples include networks designed for mobile and embedded use, such as MobileNet or compact YOLO variants. The model must be compact enough to fit in the device's limited memory while maintaining high accuracy in defect recognition.
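One common compression step is quantization: weights are stored as 8-bit integers instead of 32-bit floats, which cuts weight storage roughly fourfold. Below is a minimal sketch of symmetric per-tensor int8 quantization on a plain list of weights, using hypothetical helper names. Real toolchains such as TensorFlow Lite or OpenVINO quantize layer by layer and use calibration data; neither is shown here.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: w ~= scale * q, with q in [-127, 127].

    Hypothetical helper for illustration; real toolchains work per layer.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clip to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 values back to approximate float weights."""
    return [v * scale for v in q]


if __name__ == "__main__":
    w = [0.5, -1.27, 0.0, 0.9]
    q, scale = quantize_int8(w)
    restored = dequantize(q, scale)
    # Rounding error per weight is at most half a quantization step.
    print(q, max(abs(a - b) for a, b in zip(w, restored)) <= scale / 2 + 1e-12)
```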

Hardware Optimization

Edge devices do not have powerful general-purpose processors. Instead, they rely on specialized chips such as GPUs, TPUs, or NPUs. The AI model must be optimized explicitly for the chip it will run on.

This makes the most of the limited computing power for fast AI inference. Special tools "translate" the model into a form the specific chip can execute efficiently, delivering the highest performance with minimal energy consumption. Examples include NVIDIA TensorRT, the Edge TPU Compiler for Google Coral, and Intel OpenVINO.

Stability and Resources

Edge devices have limited memory and a limited power budget. The model must therefore run stably even when resources are scarce. It must be reliable and must not crash from overload.

These devices are often battery-powered or have limited cooling, so minimal power consumption is essential for continuous operation in the factory. The application must also manage memory carefully, avoiding leaks and unbounded growth, to support 24/7 autonomous monitoring.
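One common way to keep memory use flat during 24/7 operation is to allocate frame buffers once at startup and reuse them. This avoids allocating a new buffer for every frame. Below is a minimal sketch of a fixed-size ring of buffers. The class name and sizes are hypothetical. In practice, a camera SDK usually provides its own buffer pool.

```python
class FrameRing:
    """Hypothetical fixed pool of preallocated frame buffers, reused round-robin.

    All memory is allocated once in __init__, so steady-state operation makes
    no per-frame allocations and memory use cannot grow over time.
    """

    def __init__(self, slots: int, frame_bytes: int):
        if slots < 1 or frame_bytes < 1:
            raise ValueError("slots and frame_bytes must be positive")
        self._buffers = [bytearray(frame_bytes) for _ in range(slots)]
        self._next = 0

    def next_buffer(self) -> bytearray:
        """Return the next buffer; the oldest frame is overwritten when the ring wraps."""
        buf = self._buffers[self._next]
        self._next = (self._next + 1) % len(self._buffers)
        return buf


if __name__ == "__main__":
    ring = FrameRing(slots=4, frame_bytes=640 * 480)  # e.g. 8-bit grayscale VGA frames
    for i in range(10):
        buf = ring.next_buffer()
        buf[0] = i  # stand-in for "the camera writes a frame into buf"
```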

Resilience to Harsh Conditions

AI in manufacturing does not work in ideal studio conditions. The model must be resilient to difficult imaging conditions. It must reliably recognize defects even if dust gets on the camera lens, the lighting changes suddenly, or the conveyor background shifts.

This ensures that edge AI remains accurate, regardless of external factors within the factory. The model must be trained on very diverse data that mimics real production "noise" to ensure high reliability in challenging conditions.
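When diverse real data is scarce, training sets are often expanded with augmentation that imitates shop-floor noise. The sketch below applies three perturbations to a grayscale image stored as a list of rows of 0-255 values:

- a random brightness shift, standing in for a lighting change
- Gaussian sensor noise
- a darkened square patch, standing in for dust on the lens

The function name and parameter ranges are assumptions chosen for illustration.

```python
import random


def augment(image, rng, max_shift=40, noise_sigma=8.0, dust_size=3):
    """Return a perturbed copy of a grayscale image (list of rows, values 0-255).

    Hypothetical helper; the default ranges are illustrative assumptions.
    """
    h, w = len(image), len(image[0])
    shift = rng.randint(-max_shift, max_shift)  # global lighting change
    out = [
        [min(255, max(0, round(px + shift + rng.gauss(0.0, noise_sigma)))) for px in row]
        for row in image
    ]
    # Simulated dust: darken a small square at a random position.
    top = rng.randrange(0, max(1, h - dust_size + 1))
    left = rng.randrange(0, max(1, w - dust_size + 1))
    for y in range(top, min(h, top + dust_size)):
        for x in range(left, min(w, left + dust_size)):
            out[y][x] = out[y][x] // 4
    return out


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed for reproducible experiments
    clean = [[128] * 8 for _ in range(8)]
    noisy = augment(clean, rng)
```

Each call produces a different variant, so one clean sample can yield many training examples.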

Choosing the Right Hardware Platform

The success of edge AI depends on choosing the proper hardware that can execute the model's computations quickly and efficiently. We need more than just a camera; we need a "brain" optimized for AI.

Popular Edge Computing Platforms

Several specialized platforms designed explicitly for AI at the edge are widely used:

- NVIDIA Jetson. These are powerful microcomputers that use a GPU. They are ideal when high speed and the ability to run more complex AI models are required. Suitable for large production facilities or multi-sensor systems.

- Google Coral and Intel Movidius. These platforms use specialized accelerators: the Edge TPU in Google Coral and a VPU (vision processing unit) in Intel Movidius. They are excellent for mass deployment because they are energy efficient, compact, and optimized for fast execution of lighter AI models.

- Qualcomm. Its processors include dedicated AI acceleration and are often found in embedded systems, mobile devices, and industrial cameras, where tight integration and low power consumption are important.

Cameras with Built-in Processing

Sometimes, it is better to use cameras with built-in processing instead of a separate microcomputer. They are ideal for simple, well-defined tasks, such as checking a label for accuracy or verifying the presence of a part.

All data processing occurs directly inside the camera. This greatly simplifies the architecture and reduces latency to a minimum, because images do not need to be sent over a cable to a separate computer for analysis.

Sensors for Accurate Defect Detection

The accuracy of AI depends on the quality and type of data it receives. Different sensors are used for defect detection:

- RGB Cameras. These are standard cameras that capture colors, just like the human eye. They are used to detect visible defects, such as cracks, scratches, incorrect color, or surface damage.

- IR Cameras. These cameras detect infrared (thermal) radiation, so they effectively see heat. They are necessary for detecting problems invisible to the eye, such as component overheating on a circuit board or heat leaks in insulation.

- Depth Sensors. These sensors measure the distance to an object using technologies such as time-of-flight, structured light, stereo vision, or LiDAR. They are needed when precise geometry is important, for example, for measuring volume, checking surface flatness, or detecting deformations.

Using a combination of these sensors ensures reliable defect detection.
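Combining sensors can be sketched as a simple rule: a part is flagged if any sensor-specific check fails. In the sketch below, each sensor contributes one check:

- RGB: a visual classifier's defect score
- IR: a maximum-temperature limit
- Depth: peak-to-valley flatness, meaning the highest minus the lowest sampled surface height

All field names and thresholds are hypothetical. Real limits would come from the product specification.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Reading:
    rgb_defect_score: float   # 0..1 from a visual model (hypothetical field)
    ir_max_temp_c: float      # hottest point in the thermal image, in Celsius
    depth_mm: List[float]     # surface heights sampled by the depth sensor


def inspect(r: Reading, score_limit=0.5, temp_limit_c=85.0, flatness_limit_mm=0.2):
    """Return the list of failed checks; an empty list means the part passes.

    All limits are illustrative assumptions, not recommended values.
    """
    failures = []
    if r.rgb_defect_score >= score_limit:
        failures.append("rgb: visible defect")
    if r.ir_max_temp_c > temp_limit_c:
        failures.append("ir: overheating")
    flatness = max(r.depth_mm) - min(r.depth_mm)  # peak-to-valley
    if flatness > flatness_limit_mm:
        failures.append("depth: out of flatness tolerance")
    return failures


if __name__ == "__main__":
    part = Reading(rgb_defect_score=0.1, ir_max_temp_c=92.0, depth_mm=[10.00, 10.05, 10.02])
    # The thermal check fails even though the part looks fine to the RGB camera.
    print(inspect(part))
```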

Edge AI Architecture and Deployment

The physical placement and management of an edge AI solution requires a clear, integrated architecture. The edge device is a fully autonomous mini system capable of analysis and action.

Edge Device Components

Every device that executes AI at the edge consists of three main integrated components:

- Sensor. This is the system's "eyes." Industrial cameras with very short exposure times are typically used, running at a frame rate high enough to capture every object that passes. The short exposure "freezes" the movement of an object on the conveyor and yields a sharp frame for analysis.

- Computing Device. This is the system's "brain," where the AI model is executed. It can be a powerful NVIDIA Jetson platform for complex tasks, a lower-cost, less powerful Raspberry Pi for simpler ones, or an industrial PC built for reliability on the shop floor.

- Actuator. This is the system's "hands," a device that performs a physical action based on the AI inference. Examples include a pneumatic pusher that automatically rejects a defective product from the conveyor or a light indicator that signals a problem to the operator.
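How short the camera exposure must be can be estimated with simple arithmetic. During the exposure, the part moves by belt speed × exposure time. Dividing that distance by the size of one pixel on the object gives the blur in pixels. This sketch assumes a common rule of thumb: keep blur at or below about one pixel. The belt speed and field of view in the example are illustrative.

```python
def motion_blur_px(belt_speed_mm_s, exposure_s, fov_width_mm, sensor_width_px):
    """Distance the part travels during one exposure, expressed in pixels."""
    mm_per_px = fov_width_mm / sensor_width_px
    return belt_speed_mm_s * exposure_s / mm_per_px


def max_exposure_s(belt_speed_mm_s, fov_width_mm, sensor_width_px, max_blur_px=1.0):
    """Longest exposure that keeps motion blur at or below max_blur_px (assumed 1 px)."""
    mm_per_px = fov_width_mm / sensor_width_px
    return max_blur_px * mm_per_px / belt_speed_mm_s


if __name__ == "__main__":
    # Belt at 1 m/s (1000 mm/s), 200 mm field of view on a 2000 px wide sensor:
    # one pixel covers 0.1 mm on the part.
    print(motion_blur_px(1000, 0.001, 200, 2000))  # blur with a 1 ms exposure
    print(max_exposure_s(1000, 200, 2000))         # exposure for at most 1 px of blur
```

With these numbers, a 1 ms exposure smears the part across about 10 pixels, so the exposure would need to be about 0.1 ms.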

Continuous Data Flow

The entire value of edge AI lies in the speed and continuity of the process. Instant decision-making occurs in three key stages:

- Capture. The camera captures the image of the object.

- Inference. The AI model on the computing device instantly analyzes the image.

- Action. The actuator performs the physical command.

All these stages must occur within milliseconds to guarantee that a defect is detected and reacted to before the object leaves the control zone.
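The three stages map naturally onto a loop with a per-frame deadline check. In this sketch, `capture`, `infer`, and `reject` are hypothetical stand-ins for the camera SDK, the deployed model, and the actuator driver. The 50 ms deadline is an assumed value. A frame that misses its deadline is reported rather than acted on late, because by then the part may already have left the control zone.

```python
import time
from typing import Callable, List


def run_once(capture: Callable[[], object],
             infer: Callable[[object], bool],
             reject: Callable[[], None],
             deadline_ms: float = 50.0) -> str:
    """One capture -> inference -> action cycle with a latency check.

    capture, infer, and reject are hypothetical callables; deadline_ms is assumed.
    """
    start = time.perf_counter()
    frame = capture()                      # 1. Capture
    is_defect = infer(frame)               # 2. Inference
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    if elapsed_ms > deadline_ms:
        return "deadline_missed"           # escalate instead of acting too late
    if is_defect:
        reject()                           # 3. Action
        return "rejected"
    return "passed"


if __name__ == "__main__":
    actions: List[str] = []
    result = run_once(
        capture=lambda: "frame",
        infer=lambda frame: True,          # pretend the model found a defect
        reject=lambda: actions.append("push"),
    )
    print(result, actions)
```

In production this function would run in a loop, once per frame.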

Over-the-Air (OTA) Updates

Even a perfectly trained AI model needs updates when materials or lighting change or new types of defects appear. A large factory may have hundreds of edge devices in operation, and physically connecting to each one to update the model is inefficient and expensive.

With OTA updates, new versions of the AI model, bug fixes, and security patches are managed centrally and deployed remotely to all edge devices simultaneously. This keeps the system accurate and up to date without stopping production.
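A basic safeguard for OTA model updates has two steps. First, verify the downloaded file against a checksum published with the release. Second, swap it in atomically, so a device never loads a half-written model.

This sketch makes several assumptions:

- The update arrives as bytes together with an expected SHA-256 hex digest.
- The function name and file layout are hypothetical.

Real deployments would also verify a cryptographic signature, which a hash alone does not provide.

```python
import hashlib
import os
import tempfile


def apply_model_update(model_path: str, new_model: bytes, expected_sha256: str) -> bool:
    """Hypothetical helper: install new_model at model_path only if its SHA-256 matches.

    Returns False, leaving the current model untouched, on a mismatch.
    """
    if hashlib.sha256(new_model).hexdigest() != expected_sha256.lower():
        return False
    # Write to a temporary file in the same directory so the final rename
    # stays on one filesystem.
    directory = os.path.dirname(os.path.abspath(model_path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(new_model)
            f.flush()
            os.fsync(f.fileno())  # make sure the bytes reach the disk
        # os.replace is atomic on POSIX: readers see either the old or the new file.
        os.replace(tmp_path, model_path)
    except Exception:
        if os.path.exists(tmp_path):
            os.unlink(tmp_path)
        raise
    return True


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        path = os.path.join(d, "model.bin")
        update = b"new model weights"
        digest = hashlib.sha256(update).hexdigest()  # published with the release (assumed)
        print(apply_model_update(path, update, digest))        # accepted
        print(apply_model_update(path, b"corrupted", digest))  # rejected
```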

Edge AI Lifecycle

For the system to keep working effectively for years, its lifecycle must be continuously monitored and managed. AI models in production are prone to degradation, so ongoing maintenance is crucial.

Model Drift

AI reliability decreases over time, a phenomenon known as model drift: the model's accuracy drops because the real world keeps changing. Causes include a change of material supplier, the appearance of new defect types, gradual equipment wear, or a change in the camera angle.

A model that was perfect a month ago can start making mistakes, so the accuracy of AI at the edge must be actively monitored. The system should continuously track how confidently the model makes its decisions and compare this with a baseline recorded when the model was deployed. If these metrics fall below a set threshold, it should alert the operator. This allows the model to be retrained in time.
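True accuracy is known only after parts are checked by hand, so a practical proxy is the model's own confidence. This sketch keeps a rolling window of recent top-class confidences. It raises an alert when the window's mean falls a set margin below a baseline recorded at deployment. The class name, window size, and margin are assumptions. Falling confidence is a useful warning sign, but it does not replace periodically labelled samples.

```python
from collections import deque


class ConfidenceDriftMonitor:
    """Hypothetical monitor: alert when mean confidence over a rolling window
    drops below (baseline - margin). Window and margin defaults are assumptions."""

    def __init__(self, baseline: float, margin: float = 0.10, window: int = 500):
        self.threshold = baseline - margin
        self.scores = deque(maxlen=window)  # oldest scores drop out automatically

    def update(self, confidence: float) -> bool:
        """Record one prediction's confidence; return True if an alert should fire."""
        self.scores.append(confidence)
        if len(self.scores) < self.scores.maxlen:
            return False  # not enough data yet for a stable estimate
        return sum(self.scores) / len(self.scores) < self.threshold


if __name__ == "__main__":
    monitor = ConfidenceDriftMonitor(baseline=0.95, margin=0.10, window=4)
    for c in [0.95, 0.96, 0.94, 0.95, 0.70, 0.65]:
        if monitor.update(c):
            print("confidence drift detected; consider retraining")
```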

Gathering "Interesting" Data

New data is needed for retraining, but continuously transferring the entire data stream is not feasible. Sending all the video, or the thousands of photos an edge device takes each day, to the cloud for storage or analysis is impractical because of bandwidth limitations and high cost.

The edge device must be a "smart filter." It should only send "interesting" frames to the cloud:

- Frames with Defects. Images where the model confidently found a problem.

- Doubtful Frames. Images where the model has low confidence. This data is the most valuable for future retraining and model improvement.
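This filtering rule can be sketched as a single function. The sketch assumes the model outputs a defect probability between 0 and 1. The function name, the confident-defect threshold, and the "doubtful" band are hypothetical values that would be tuned for each line.

```python
def should_upload(defect_prob: float,
                  defect_threshold: float = 0.9,
                  doubt_low: float = 0.4,
                  doubt_high: float = 0.6) -> str:
    """Hypothetical filter deciding whether a frame is worth sending to the cloud.

    Returns "defect" for confident detections, "doubtful" for frames whose
    probability falls in the uncertain band, and "skip" otherwise.
    All thresholds are illustrative assumptions.
    """
    if defect_prob >= defect_threshold:
        return "defect"
    if doubt_low <= defect_prob <= doubt_high:
        return "doubtful"
    return "skip"


if __name__ == "__main__":
    for p in (0.97, 0.52, 0.03):
        print(p, should_upload(p))
```

Only frames labelled "defect" or "doubtful" leave the device. Everything else is discarded or kept locally.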

Ethics and Security

The reliability of AI decisions and data security are the foundation of trust in the system. Because processing occurs locally, it is essential to ensure that data stored on the device remains secure and is not compromised. Reliable encryption and access control mechanisms should be implemented directly on the edge device.
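Encryption of data in transit is usually handled by TLS. It is also useful for the cloud to verify that an uploaded frame really came from an authorized device and was not altered. The sketch below shows only that second part: message authentication with HMAC-SHA256 from Python's standard library. It does not encrypt anything. The per-device key and payload are assumptions. In practice, the key would be kept in secure storage on the device rather than in code.

```python
import hashlib
import hmac


def sign(device_key: bytes, payload: bytes) -> str:
    """Return a hex HMAC-SHA256 tag for payload, computed with the device's secret key."""
    return hmac.new(device_key, payload, hashlib.sha256).hexdigest()


def verify(device_key: bytes, payload: bytes, tag: str) -> bool:
    """Check a tag; compare_digest runs in constant time to avoid timing leaks."""
    return hmac.compare_digest(sign(device_key, payload), tag)


if __name__ == "__main__":
    key = b"per-device-secret"  # hypothetical; never hard-code real keys
    frame = b"...jpeg bytes..."
    tag = sign(key, frame)
    print(verify(key, frame, tag), verify(key, frame + b"x", tag))
```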

A false positive by the model can stop the conveyor and cause significant financial losses. The system should therefore be set up so that, before performing a critical action, it either requests human confirmation or requires a double check by another sensor or model. This way, a single false positive does not halt production.
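The double-check rule can be expressed as a small decision gate. The critical action (stopping the line) runs only when two independent sources agree, and any disagreement is routed to a person. The function name and threshold are hypothetical. The second score could come from another model or another sensor.

```python
def decide(primary_score: float, secondary_score: float, threshold: float = 0.8) -> str:
    """Hypothetical gate returning 'stop_line', 'ask_operator', or 'continue'.

    threshold is an illustrative assumption applied to both defect scores.
    """
    primary = primary_score >= threshold
    secondary = secondary_score >= threshold
    if primary and secondary:
        return "stop_line"      # both sources agree: act automatically
    if primary or secondary:
        return "ask_operator"   # disagreement: a human confirms before acting
    return "continue"


if __name__ == "__main__":
    print(decide(0.95, 0.91), decide(0.95, 0.20), decide(0.10, 0.05))
```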

FAQ

How is the physical durability of edge devices ensured on the shop floor?

Industrial edge devices are installed in ruggedized protective enclosures, typically with an IP (Ingress Protection) rating. These protect the equipment from dust, moisture, extreme temperatures, and vibration, which is essential for continuous operation.

What programming languages are used for developing edge applications?

The most popular languages are Python for prototyping and C++ for high-performance real-time deployment. Frameworks like TensorFlow Lite and OpenVINO are often used to optimize models.

What is the difference between "latency" and "throughput"?

Latency measures how quickly the AI makes a single decision. Throughput measures how many objects the system can process in a unit of time. Edge AI is optimized for minimal latency and high throughput.
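The difference is clearest in a pipelined system, where capture, inference, and action for different parts overlap in time. The sketch assumes each stage handles one part at a time. Under that assumption, latency is the sum of the stage times, while throughput is limited by the slowest stage. The function name and stage timings are illustrative.

```python
def pipeline_metrics(stage_ms):
    """Return (latency_ms, throughput_per_s) for a pipeline of sequential stages.

    Assumes each stage processes one item at a time and different stages work
    on different items in parallel, so a new item can enter every
    max(stage_ms) milliseconds.
    """
    latency_ms = sum(stage_ms)
    throughput_per_s = 1000.0 / max(stage_ms)
    return latency_ms, throughput_per_s


if __name__ == "__main__":
    # capture 5 ms, inference 20 ms, action 10 ms
    latency, throughput = pipeline_metrics([5, 20, 10])
    print(latency, throughput)  # 35 ms per part end to end, up to 50 parts per second
```

Speeding up the slowest stage raises throughput. Shortening any stage lowers latency.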

What happens if the edge device loses power or connection?

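The system must have built-in fault tolerance. If the edge device loses power or connectivity, the line should automatically switch to a safe mode, such as stopping the conveyor or switching to manual quality control, to prevent the release of unverified products. A device without power cannot act on its own, so this safe state is usually enforced by the line controller rather than by the edge device itself.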
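One common way to guarantee this is a heartbeat. The edge device signals the line controller at a regular interval. If the signals stop arriving within a timeout, the controller puts the line into its safe state. The sketch below models the controller side. The class name and the 200 ms timeout are assumptions. A real implementation would typically run on a PLC or use a hardware watchdog timer.

```python
import time


class HeartbeatWatchdog:
    """Hypothetical controller-side watchdog: fail safe if the edge device goes silent."""

    def __init__(self, timeout_s: float = 0.2, clock=time.monotonic):
        self.timeout_s = timeout_s  # assumed timeout
        self.clock = clock          # injectable for testing
        self.last_beat = clock()

    def beat(self) -> None:
        """Called whenever a heartbeat message arrives from the edge device."""
        self.last_beat = self.clock()

    def state(self) -> str:
        """'running' while heartbeats are fresh, 'safe_stop' once they lapse."""
        if self.clock() - self.last_beat > self.timeout_s:
            return "safe_stop"
        return "running"
```

A real controller would usually latch the safe state until an operator resets it. That latching is omitted here for brevity.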
