3D Occupancy Prediction for Autonomous Vehicles: BEV Representation & Voxel Grids

The development of autonomous vehicles is driving intensive research in robotics and computer vision, fields in which accurate and reliable scene understanding is especially important. To navigate safely, autonomous control systems need a detailed model of spatial occupancy: which regions of the environment are occupied by objects and which are free for movement. In this context, 3D occupancy prediction is a crucial element in building a high-quality 3D scene representation.

One of the most important decisions in 3D modeling is the choice of spatial representation. The bird's-eye view (BEV) provides a compact, informative top-down projection of the scene, which greatly simplifies analysis of the road situation and trajectory planning. Voxel grids, in turn, provide a dense three-dimensional representation of the environment, allowing detailed modeling of object structure and free space. Combining these approaches yields models that can achieve high accuracy while also meeting real-time requirements.

Key Takeaways

- Occupancy-focused grids give richer scene understanding than detection alone.

- BEV methods and octree/voxel encodings trade fidelity for runtime and memory.

- Sparse and Gaussian-based pipelines make large-scale training tractable.

Research context: who needs 3D occupancy prediction and why now

The need for highly accurate spatial occupancy prediction is rapidly increasing due to the active development of autonomous transportation technologies, intelligent robotic systems, and modern solutions for urban mobility. 3D occupancy prediction is a critical component for any system that must reliably interpret the environment and make decisions in real time based on deep scene understanding.

First, these technologies are essential for autonomous vehicles, which need an accurate, dynamic 3D scene representation that captures both static and moving objects. Such methods allow control systems to plan maneuvers safely, anticipate potential risks, and handle complex traffic situations effectively.

Second, accurate spatial occupancy models are important for ground-based mobile robots, drones, and autonomous logistics platforms. Their ability to navigate depends largely on full 3D modeling of the environment, which is often implemented with voxel grids or with hybrid approaches that pair dense voxel structures with simplified bird's-eye-view (BEV) projections.

A third important application area is smart cities, where 3D occupancy prediction can support monitoring systems, traffic coordination, and traffic flow analysis. Here, an accurate 3D scene representation helps improve the safety and responsiveness of infrastructure systems. Several factors make these solutions especially relevant now:

- Sharp growth in computing capability makes it possible to process large volumes of 3D data in real time.

- The emergence of massive multimodal datasets for training models.

- Rising safety requirements for autonomous systems.


Foundations: occupancy, BEV, and voxelized 3D scene representations

Spatial occupancy provides a binary or probabilistic description of whether a given region of space is occupied by an object or is free. This concept is central to tasks such as collision avoidance, high-level reasoning, and safe motion planning. By assigning occupancy values to discrete volumetric or planar elements, autonomous systems can interpret both static structures and dynamic obstacles in real time.
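The binary and probabilistic views of occupancy can be connected with the classic log-odds occupancy-grid update, shown below as a minimal sketch for a single cell. This is a standard Bayesian mapping technique, not a method specific to any pipeline discussed here; the inverse sensor model probabilities `P_HIT` and `P_MISS` and all function names are hypothetical.

```python
import math

# Illustrative sketch; the sensor-model values and names are hypothetical.
# They describe how strongly one "hit" or "miss" pushes a cell toward
# occupied or free.
P_HIT = 0.7
P_MISS = 0.4


def logit(p: float) -> float:
    """Convert a probability to log-odds."""
    return math.log(p / (1.0 - p))


def update(log_odds: float, hit: bool) -> float:
    """Fuse one observation into a cell's log-odds (a 0.5 prior is log-odds 0)."""
    return log_odds + (logit(P_HIT) if hit else logit(P_MISS))


def probability(log_odds: float) -> float:
    """Convert log-odds back to an occupancy probability."""
    return 1.0 / (1.0 + math.exp(-log_odds))


def is_occupied(log_odds: float, threshold: float = 0.5) -> bool:
    """Binary view of the same cell: occupied if p exceeds the threshold."""
    return probability(log_odds) > threshold


cell = 0.0  # unknown cell, p = 0.5
for hit in [True, True, False, True]:
    cell = update(cell, hit)
print(f"p(occupied) = {probability(cell):.3f}, occupied = {is_occupied(cell)}")
```

Working in log-odds turns repeated Bayesian updates into additions. Here, three hits and one miss leave the cell at roughly p ≈ 0.89, which the binary view reports as occupied.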

The bird's-eye view (BEV) representation provides a top-down projection of the environment, transforming a complex 3D scene into a structured 2D layout that preserves the spatial relationships essential for navigation. BEV is widely used because it is computationally efficient and compatible with 2D convolutional architectures, which makes it a practical basis for large-scale perception pipelines.
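To make the top-down projection concrete, here is a minimal sketch that rasterizes a LiDAR-style point cloud into a hand-crafted BEV encoding: a max-height map. Learned BEV pipelines store feature vectors per cell rather than a single height. The grid extent, the 0.5 m cell size, the axis convention, and the function name are all assumptions chosen for illustration.

```python
import math
from typing import List, Tuple

# Illustrative sketch; grid extent, cell size and names are hypothetical.
Point = Tuple[float, float, float]  # (x forward, y left, z up), meters


def points_to_bev(points: List[Point],
                  x_range: Tuple[float, float] = (0.0, 40.0),
                  y_range: Tuple[float, float] = (-20.0, 20.0),
                  cell: float = 0.5) -> List[List[float]]:
    """Rasterize a point cloud into a BEV max-height map.

    Each cell keeps the highest z among the points that fall into it;
    empty cells stay at -inf. Height is collapsed into one number per cell.
    """
    nx = int((x_range[1] - x_range[0]) / cell)
    ny = int((y_range[1] - y_range[0]) / cell)
    bev = [[-math.inf] * ny for _ in range(nx)]
    for x, y, z in points:
        i = int((x - x_range[0]) // cell)
        j = int((y - y_range[0]) // cell)
        if 0 <= i < nx and 0 <= j < ny:  # points outside the grid are dropped
            bev[i][j] = max(bev[i][j], z)
    return bev


bev = points_to_bev([(10.2, 0.1, 1.5), (10.3, 0.2, 0.3), (50.0, 0.0, 1.0)])
print(len(bev), len(bev[0]), bev[20][40])
```

The first two points share a cell, so only the larger height survives, and the point at x = 50 m lies outside the grid. This loss of vertical detail is the price of BEV's compactness.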

Voxel grids, meanwhile, provide a dense, fully three-dimensional structure for representing space. By dividing the environment into uniform volumetric cells, voxelized representations allow accurate modeling of object geometry, elevation changes, and free space. Although voxel grids require more computation and memory than BEV, they remain indispensable for detailed 3D reconstruction and fine-grained spatial reasoning.
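The sketch below marks voxels that contain at least one point in a dense grid and estimates the cost difference between the two representations. The grid origin, dimensions, and 0.5 m voxel size are hypothetical. The 200 × 200 × 16 grid with 64 float32 channels per cell is only an illustrative size, not a figure taken from any specific system.

```python
from typing import List, Sequence, Tuple

# Illustrative sketch; origin, dims, voxel size and names are hypothetical.


def voxelize_dense(points: Sequence[Tuple[float, float, float]],
                   origin: Tuple[float, float, float] = (0.0, -20.0, -2.0),
                   dims: Tuple[int, int, int] = (80, 80, 8),
                   voxel: float = 0.5) -> List[List[List[bool]]]:
    """Mark every voxel that contains at least one point in a dense X*Y*Z grid."""
    nx, ny, nz = dims
    grid = [[[False] * nz for _ in range(ny)] for _ in range(nx)]
    for p in points:
        idx = [int((p[a] - origin[a]) // voxel) for a in range(3)]
        if all(0 <= idx[a] < dims[a] for a in range(3)):
            grid[idx[0]][idx[1]][idx[2]] = True
    return grid


def dense_bytes(dims: Tuple[int, int, int], bytes_per_voxel: int) -> int:
    """Dense memory grows with the product of all three grid dimensions."""
    x, y, z = dims
    return x * y * z * bytes_per_voxel


grid = voxelize_dense([(10.2, 0.1, -1.0)])
print(grid[20][40][2])  # True
# 200 x 200 x 16 voxels with 64 float32 channels each, versus one BEV plane
print(dense_bytes((200, 200, 16), 64 * 4))  # 163840000 bytes, about 164 MB
print(dense_bytes((200, 200, 1), 64 * 4))   # 10240000 bytes, about 10 MB
```

Adding a height axis with 16 bins multiplies storage by 16 relative to a single BEV plane of the same footprint. This is why dense voxel grids are costly and why sparse and hierarchical variants exist.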

3D occupancy prediction for autonomous vehicles

3D occupancy prediction enables enhanced scene understanding and reliable decision-making in dynamic driving environments. Unlike traditional object detection, which focuses solely on identifying individual objects, occupancy prediction provides a dense 3D representation of the scene that captures the spatial distribution of both static and dynamic entities.

Current approaches to 3D occupancy prediction often combine BEV representations with voxel grids to balance efficiency and accuracy. BEV provides a top-down view of the environment, which simplifies trajectory planning and facilitates the fusion of multiple sensor modalities such as LiDAR, radar, and cameras. In parallel, voxel grids enable detailed volumetric modeling, representing object shapes and heights accurately, which is essential for precise navigation and collision avoidance. Key benefits of 3D occupancy prediction for autonomous vehicles include:

- Increased navigation safety – Dense occupancy maps enable vehicles to detect and avoid obstacles, even in cluttered or confined areas.

- Improved path planning – Integration of BEV representations simplifies trajectory calculations while maintaining spatial awareness.

- Robust scene understanding – Voxel-based 3D scene representations support accurate modeling of complex urban environments.

- Sensor fusion – BEV and voxel grids provide a common framework for combining data from multiple sensors into a coherent spatial map.
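One common pattern for sensor fusion in BEV is to bring each sensor's features onto the same BEV grid, concatenate them per cell, and mix the channels with a learned per-cell linear map (equivalent to a 1×1 convolution). The minimal sketch below shows only that pattern. It uses plain nested lists, hypothetical names, and fixed weights standing in for learned ones.

```python
from typing import List

# Illustrative sketch; names and weights are hypothetical.
Grid = List[List[List[float]]]  # BEV feature map indexed [x][y][channel]


def fuse_bev(camera: Grid, lidar: Grid, weights: List[List[float]]) -> Grid:
    """Concatenate per-cell features from two sensors on a shared BEV grid,
    then mix them with a per-cell linear map (a 1x1 convolution).
    weights has shape [C_out][C_camera + C_lidar]."""
    if len(camera) != len(lidar) or len(camera[0]) != len(lidar[0]):
        raise ValueError("sensor BEV grids must share the same extent and resolution")
    fused: Grid = []
    for row_c, row_l in zip(camera, lidar):
        fused_row = []
        for f_c, f_l in zip(row_c, row_l):
            f = f_c + f_l  # channel concatenation for this cell
            fused_row.append([sum(w * v for w, v in zip(w_row, f)) for w_row in weights])
        fused.append(fused_row)
    return fused


out = fuse_bev([[[1.0, 2.0]]], [[[3.0]]], weights=[[1.0, 1.0, 1.0], [0.0, 0.0, 2.0]])
print(out)
```

This kind of fusion only works after every sensor has been transformed into the same BEV frame and resolution. That alignment is what makes BEV a convenient common space.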

The growing demand for high-resolution 3D occupancy prediction is driven by the need for autonomous vehicles to operate reliably in complex real-world scenarios. Advances in computing hardware, machine learning architectures, and large-scale datasets have made dense spatial reasoning feasible in real time, narrowing the gap between perception and practical planning.

BEV-centric pipelines: view transformation and feature lifting

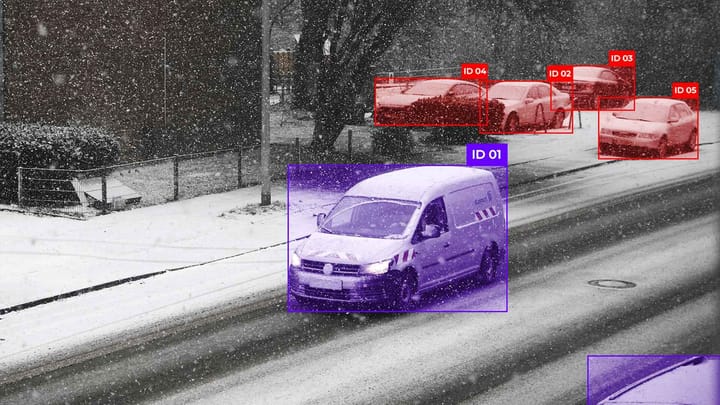

Modern autonomous perception systems often rely on BEV-centric pipelines for efficient and accurate scene understanding. These pipelines transform sensor data from its native view, such as perspective camera images, into a bird's-eye-view (BEV) representation, which supports tasks such as 3D occupancy prediction, path planning, and object tracking. The main stages of a BEV-centric pipeline are as follows:

Sensor data acquisition.

- Raw data from LiDAR, camera, or radar sensors is collected to capture the environment.

- The data encodes the spatial information needed to build a detailed 3D representation of the scene.

Feature extraction.

- Features are extracted from the raw sensor input using convolutional neural networks (CNNs) or other deep learning architectures.

- This stage generates high-dimensional embeddings that encode both semantic and geometric information, which are critical for spatial reasoning about occupancy.

View transformation.

- Perspective features are transformed into a bird's-eye-view (BEV) representation.

- This involves projecting 2D image features or 3D point cloud features onto a top-down grid while preserving spatial relationships.

- View transformation places data from multiple sensors in a common frame, which aligns the sensors and simplifies interpreting the environment.
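View transformation can be illustrated geometrically. For each BEV cell, compute where its center lands in a camera image, then sample image features at that pixel. The sketch assumes a single hypothetical pinhole camera at the ego origin, 1.5 m above the ground, looking straight along the ego x-axis with no rotation. The intrinsics and the function name are assumptions. Real systems use calibrated intrinsics and extrinsics, and many learn the transformation, for example with predicted depth or attention, instead of relying on fixed geometry.

```python
from typing import Optional, Tuple

# Illustrative sketch; camera parameters and names are hypothetical.


def project_bev_cell(x: float, y: float, z: float = 0.0,
                     fx: float = 1000.0, fy: float = 1000.0,
                     cx: float = 640.0, cy: float = 360.0,
                     cam_height: float = 1.5,
                     width: int = 1280, height: int = 720) -> Optional[Tuple[float, float]]:
    """Project a 3D point at a BEV cell (ego frame) to pixel coordinates (u, v)."""
    # Ego frame: x forward, y left, z up. Camera frame: x right, y down, z forward.
    xc, yc, zc = -y, cam_height - z, x
    if zc <= 0.1:  # behind, or too close to, the image plane
        return None
    u = fx * xc / zc + cx
    v = fy * yc / zc + cy
    if not (0 <= u < width and 0 <= v < height):
        return None  # the cell is outside this camera's field of view
    return (u, v)


print(project_bev_cell(10.0, 0.0))  # a ground cell 10 m ahead
print(project_bev_cell(10.0, 2.0))  # 2 m to the left: smaller u
print(project_bev_cell(-5.0, 0.0))  # behind the camera: None
```

Cells that return `None` receive no features from this camera. In a multi-camera rig, they would be filled by whichever camera does see them.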

Feature lifting.

- BEV features are "lifted" into a pseudo-3D or fully voxelized space, providing a richer 3D representation of the scene.

- Feature lifting bridges the gap between 2D BEV projections and volumetric representations, allowing the network to reason about height and depth.

- This step is essential for accurate 3D occupancy prediction in complex scenes.
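One simple way to lift BEV features is a channel-to-height reshape. The network is trained so that a BEV feature vector of C·Z channels can be read as Z height bins of C channels each. The sketch below performs only that reinterpretation. The channel layout, in which height bin k occupies channels k·C through (k+1)·C - 1, is an assumption, and the function name is hypothetical.

```python
from typing import List

# Illustrative sketch; the channel layout and names are hypothetical.
BEV = List[List[List[float]]]           # [x][y][C*Z]
Volume = List[List[List[List[float]]]]  # [x][y][Z][C]


def channel_to_height(bev: BEV, z_bins: int) -> Volume:
    """Reinterpret each BEV feature vector of C*Z channels as Z height bins of C channels."""
    volume: Volume = []
    for row in bev:
        out_row = []
        for feat in row:
            if len(feat) % z_bins:
                raise ValueError("channel count must be divisible by z_bins")
            c = len(feat) // z_bins
            out_row.append([feat[k * c:(k + 1) * c] for k in range(z_bins)])
        volume.append(out_row)
    return volume


vol = channel_to_height([[[0.0, 1.0, 2.0, 3.0, 4.0, 5.0]]], z_bins=3)
print(vol[0][0])  # three height bins with two channels each
```

The reshape itself costs almost nothing. Any actual height information has to be encoded into the channels by the preceding layers during training.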

Occupancy and scene reasoning.

- The transformed and lifted features are processed to predict spatial occupancy, detect obstacles, and determine free space.

- The output can be represented as BEV maps, voxel grids, or hybrid representations to support planning and navigation.
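A common output format is a semantic label for each voxel. The network produces one logit per class, with a dedicated class for empty space, and free space is read off from voxels assigned to that class. The minimal decoding sketch below uses a hypothetical four-class label set and hypothetical function names.

```python
import math
from typing import List, Tuple

# Illustrative sketch; the label set and names are hypothetical.
# Index 0 means "empty space".
CLASSES = ["free", "road", "vehicle", "pedestrian"]


def decode_voxel(logits: List[float]) -> Tuple[str, float]:
    """Return (label, softmax confidence) for one voxel's class logits."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    best = max(range(len(logits)), key=lambda k: exps[k])
    return CLASSES[best], exps[best] / sum(exps)


def free_space_mask(voxel_logits: List[List[float]]) -> List[bool]:
    """True for voxels predicted to be empty, i.e. not occupied by anything."""
    return [decode_voxel(v)[0] == "free" for v in voxel_logits]


print(decode_voxel([0.1, 0.2, 3.0, 0.0]))
print(free_space_mask([[3.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 4.0]]))
```

Keeping the softmax confidence alongside the label lets downstream planners treat low-confidence voxels more cautiously than a hard argmax alone would allow.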

Integration with downstream tasks.

- The final BEV-centric outputs are used for path planning, collision avoidance, and autonomous decision-making.

- Combining BEV and voxel-based reasoning lets autonomous systems achieve both computational efficiency and detailed scene understanding.
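As a simple example of downstream use, a planner can check candidate waypoints against a BEV occupancy grid. The sketch inflates each waypoint by a margin of cells to approximate the vehicle footprint. The grid origin, the 0.5 m cell size, the margin, and the function name are hypothetical. Cells outside the grid are simply ignored here, whereas a real planner would more likely treat them as unknown.

```python
from typing import List, Tuple

# Illustrative sketch; grid parameters and names are hypothetical.


def trajectory_is_clear(occ: List[List[bool]], path: List[Tuple[float, float]],
                        origin: Tuple[float, float] = (0.0, -20.0),
                        cell: float = 0.5, margin_cells: int = 1) -> bool:
    """Return False if any waypoint (x, y) in meters, inflated by margin_cells,
    touches an occupied BEV cell occ[i][j]."""
    nx, ny = len(occ), len(occ[0])
    for x, y in path:
        i = int((x - origin[0]) // cell)
        j = int((y - origin[1]) // cell)
        for di in range(-margin_cells, margin_cells + 1):
            for dj in range(-margin_cells, margin_cells + 1):
                a, b = i + di, j + dj
                # Simplification: cells outside the grid are not checked.
                if 0 <= a < nx and 0 <= b < ny and occ[a][b]:
                    return False
    return True


occ = [[False] * 80 for _ in range(80)]
occ[20][40] = True  # an obstacle about 10 m ahead, on the centerline
print(trajectory_is_clear(occ, [(5.0, 0.2), (10.2, 0.2)]))  # False: hits the obstacle
print(trajectory_is_clear(occ, [(5.0, 0.2), (10.2, 1.2)]))  # True: passes beside it
```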

Voxel and octree structures: dense, sparse, and multi-granularity designs

Sparse voxel structures were introduced to address the inefficiency of dense representations. A sparse voxel grid stores only occupied or otherwise relevant regions, which greatly reduces memory usage while preserving critical geometric information. This approach is particularly effective in outdoor and urban environments, where most of the 3D space is empty. Sparse structures make scene understanding tasks such as obstacle detection and free space estimation efficient to process, with little or no loss of accuracy.
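A minimal way to store only occupied voxels is a hash map keyed by integer voxel coordinates. The sketch uses a Python dict and also keeps a per-voxel point count. The 0.5 m voxel size and the function name are hypothetical. Production systems typically rely on GPU hash tables or coordinate lists, which this sketch does not attempt to reproduce.

```python
from typing import Dict, Iterable, Tuple

# Illustrative sketch; voxel size and names are hypothetical.
Key = Tuple[int, int, int]


def voxelize_sparse(points: Iterable[Tuple[float, float, float]],
                    voxel: float = 0.5) -> Dict[Key, int]:
    """Store only occupied voxels: integer voxel coordinates -> point count."""
    grid: Dict[Key, int] = {}
    for x, y, z in points:
        # Floor division keeps negative coordinates in the correct voxel.
        key = (int(x // voxel), int(y // voxel), int(z // voxel))
        grid[key] = grid.get(key, 0) + 1
    return grid


pts = [(0.1, 0.1, 0.1), (0.2, 0.3, 0.4), (-0.1, 0.0, 0.0), (10.0, 10.0, 1.0)]
print(voxelize_sparse(pts))
```

Memory now scales with the number of occupied voxels rather than with the full X × Y × Z volume. Unobserved and free space, however, both become "absent keys" unless free space is stored explicitly.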

Octree structures extend sparse voxels with a hierarchical, multi-resolution representation. In an octree, space is recursively divided into eight child cells, allowing fine-grained representation in regions of interest and coarse-grained representation in empty or less important regions. This multi-granularity design balances accuracy and efficiency, making it suitable for real-time 3D occupancy prediction in autonomous vehicles. Octrees provide adaptive resolution, so autonomous systems can allocate computational resources to the areas most critical for navigation, planning, and collision avoidance.
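The recursive subdivision can be sketched in a few lines. Only cubes that contain points are split into eight octants, down to a maximum depth, while empty cubes stay as single coarse leaves. The class and function names, the half-open split at each cube's center, and the example sizes are hypothetical choices made for illustration.

```python
from typing import Dict, List, Optional, Tuple

# Illustrative sketch; class, function names and sizes are hypothetical.
Point = Tuple[float, float, float]


class OctreeNode:
    """A cube of space; leaves have no children."""

    def __init__(self, center: Point, half: float) -> None:
        self.center = center
        self.half = half  # half of the cube's edge length
        self.children: Optional[List["OctreeNode"]] = None
        self.occupied = False


def build(node: OctreeNode, points: List[Point], max_depth: int) -> OctreeNode:
    """Recursively split only cubes that contain points, down to max_depth."""
    node.occupied = bool(points)
    if not points or max_depth == 0:
        return node  # empty space stays one coarse leaf
    # Route each point to one of eight octants (half-open split at the center).
    octants: Dict[Tuple[int, ...], List[Point]] = {}
    for p in points:
        key = tuple(1 if p[a] >= node.center[a] else -1 for a in range(3))
        octants.setdefault(key, []).append(p)
    h = node.half / 2
    cx, cy, cz = node.center
    node.children = []
    for dx in (-1, 1):
        for dy in (-1, 1):
            for dz in (-1, 1):
                child = OctreeNode((cx + dx * h, cy + dy * h, cz + dz * h), h)
                node.children.append(build(child, octants.get((dx, dy, dz), []), max_depth - 1))
    return node


def leaves(node: OctreeNode) -> List[OctreeNode]:
    """Collect all leaf cubes of the tree."""
    if node.children is None:
        return [node]
    return [leaf for child in node.children for leaf in leaves(child)]


root = build(OctreeNode((0.0, 0.0, 0.0), 8.0), [(1.0, 1.0, 1.0)], max_depth=3)
print(len(leaves(root)))  # 22 leaves instead of 8**3 = 512 uniform cells
```

With a single occupied point, each level adds seven coarse empty leaves plus one refined branch. Resolution is therefore spent only where something exists.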

In practice, voxel and octree structures are often combined with a bird's-eye view (BEV). BEV provides an efficient 2D projection for global reasoning, while voxel and octree structures retain detailed 3D information for accurate spatial occupancy modeling. Together, these representations support robust scene understanding, bridging computational efficiency and the volumetric detail required for autonomous navigation.
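Keeping the 3D voxels behind a BEV layer matters for overhanging structures. A naive collapse marks every column that contains any occupied voxel as blocked, whereas a height-aware projection can ignore structures above the vehicle. The sketch below assumes integer voxel indices with k = 0 at ground level, a 0.5 m voxel size, and a 2.5 m vehicle clearance. All of these values, and the function name, are hypothetical.

```python
from typing import Set, Tuple

# Illustrative sketch; voxel size, clearance and names are hypothetical.
Voxel = Tuple[int, int, int]  # (i, j, k) integer voxel indices


def blocking_bev(voxels: Set[Voxel], voxel_size: float = 0.5,
                 z_origin: float = 0.0, clearance: float = 2.5) -> Set[Tuple[int, int]]:
    """Project sparse occupied voxels onto the BEV cells that block the vehicle.

    A voxel whose bottom face is at or above the vehicle's clearance height
    (for example an overpass or tree canopy) does not block the cell below it.
    """
    blocked: Set[Tuple[int, int]] = set()
    for i, j, k in voxels:
        bottom = z_origin + k * voxel_size
        if bottom < clearance:
            blocked.add((i, j))
    return blocked


voxels = {(0, 0, 0), (0, 0, 1), (1, 1, 6)}  # a low obstacle and an overhang at 3 m
print(blocking_bev(voxels))  # {(0, 0)}
```

The planner still works with a cheap 2D set of blocked cells, but that set was derived from 3D geometry it could not have recovered from a plain BEV map.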

Conclusion

Two basic representations, the bird's-eye view (BEV) and voxel grids, play complementary roles in 3D occupancy prediction. BEV offers a computationally efficient top-down projection that simplifies trajectory planning, sensor fusion, and large-scale reasoning about the environment. Voxel grids, including dense, sparse, and hierarchical octree-based structures, provide detailed volumetric modeling, capturing the object geometry and elevation changes required for accurate collision avoidance and obstacle detection.

Integrating BEV and voxel representations gives autonomous vehicles a unified framework that balances computational efficiency with detailed environmental modeling. Dense voxel grids offer the most complete volumetric detail, sparse grids reduce computational overhead, and multi-granularity octree structures allocate resolution adaptively to regions of interest. Together, these strategies form the basis for robust scene understanding, enabling reliable autonomous operation in diverse and dynamic environments.

FAQ

What is 3D occupancy prediction in autonomous vehicles?

3D occupancy prediction estimates which regions of space are occupied or free, enabling robust scene understanding. It provides a dense 3D scene representation for navigation and collision avoidance in dynamic environments.

Why is spatial occupancy important for autonomous systems?

Spatial occupancy allows vehicles to reason about free space and obstacles, supporting safe navigation and path planning. Accurate occupancy maps improve decision-making in complex urban scenarios.

What is the role of bird’s eye view (BEV) in perception pipelines?

A bird's-eye view (BEV) provides a top-down projection of the environment, simplifying spatial reasoning and trajectory planning. It efficiently integrates multi-sensor data while maintaining a global view of the scene.

How do voxel grids support 3D scene representation?

Voxel grids divide space into volumetric cells, allowing precise modeling of object geometry and height variations. They enable detailed scene understanding and accurate 3D occupancy prediction.

What are the advantages of sparse voxel structures?

Sparse voxels store only occupied or relevant regions, reducing memory and computation costs. They maintain accurate spatial occupancy modeling in large-scale or outdoor environments.

How do octree structures enhance voxel-based representations?

Octrees provide a hierarchical, multi-granularity design, allocating high resolution to important areas and coarser resolution to less important areas. This improves efficiency while retaining detailed 3D scene representation where needed.

What is feature lifting in BEV-centric pipelines?

Feature lifting converts 2D BEV features into pseudo-3D or voxelized representations, enabling reasoning about height and depth. It bridges the gap between efficient BEV projections and volumetric scene understanding.

Why combine BEV and voxel grids in autonomous systems?

Combining BEV and voxel grids pairs the computational efficiency of top-down projections with the geometric detail of volumetric models. This hybrid approach improves 3D occupancy prediction and supports safe navigation.

What challenges exist in 3D occupancy prediction?

Challenges include high computational cost, occlusions, sensor noise, and representing dynamic objects. Efficient scene understanding requires balancing precision, speed, and the integration of multiple sensors.

How does 3D occupancy prediction improve autonomous vehicle safety?

By predicting spatial occupancy, vehicles can detect obstacles and navigate around hazards in real time. Dense 3D scene representations enable robust planning and collision avoidance in complex environments.
